Overview

Apache Kafka has become a cornerstone for real-time data streaming, offering high throughput and fault tolerance. Ceph, a distributed storage system, provides robust object, block, and file storage. Combining these two powerful open-source technologies seems like a logical step for building scalable and resilient data pipelines. This post explores how Kafka can work on Ceph, examining different approaches, their implications, and best practices.

Understanding the Core Components

Before diving into integration, let's briefly revisit the fundamentals of Kafka and Ceph.

Apache Kafka

Kafka is a distributed streaming platform that allows you to publish and subscribe to streams of records, similar to a message queue or enterprise messaging system. Key concepts include:

- Brokers: Servers that form a Kafka cluster.
- Topics: Categories or feeds to which records are published.
- Partitions: Topics are split into partitions for parallelism, scalability, and fault tolerance. Each partition is an ordered, immutable sequence of records.
- Log Segments: Partitions are stored on disk as log files (segments). Kafka appends records to these logs sequentially.
- Replication: Partitions are replicated across multiple brokers to ensure data durability.
- Producers & Consumers: Applications that write data to and read data from Kafka topics, respectively.
- ZooKeeper/KRaft: Used for cluster coordination, metadata management, and leader election. KRaft has replaced ZooKeeper, and Kafka 4.0 removed ZooKeeper support entirely.

Kafka's performance relies heavily on sequential disk I/O and the operating system's page cache. It generally expects a fast, local filesystem for its log.dirs.

![Apache Kafka Architecture [10]](/blog/apache-kafka-ceph-deployment-approaches-explained-comparison/1.png)
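The on-disk layout can be made concrete with a small Python sketch of how a broker organizes files under one of its log.dirs. Each partition gets a directory named `<topic>-<partition>`. Each segment is a set of files named after the segment's base offset, zero-padded to 20 digits. Only that naming convention reflects Kafka. The helper functions and the `/data/kafka` path are hypothetical, and real segments can carry extra files such as `.txnindex` or `.snapshot`.

```python
import bisect
from pathlib import Path


def partition_dir(log_dir: str, topic: str, partition: int) -> Path:
    """Directory that holds one partition's segments, e.g. /data/kafka/orders-0."""
    return Path(log_dir) / f"{topic}-{partition}"


def segment_files(base_offset: int) -> list[str]:
    """Core files of one segment, named by its base offset padded to 20 digits."""
    stem = f"{base_offset:020d}"
    return [f"{stem}.log", f"{stem}.index", f"{stem}.timeindex"]


def find_segment(base_offsets: list[int], offset: int) -> int:
    """Return the base offset of the segment that contains `offset`.

    `base_offsets` must be sorted ascending. The owning segment is the one with
    the greatest base offset that is <= `offset`.
    """
    i = bisect.bisect_right(base_offsets, offset) - 1
    if i < 0:
        raise ValueError(f"offset {offset} precedes the first segment")
    return base_offsets[i]


if __name__ == "__main__":
    print(partition_dir("/data/kafka", "orders", 0))
    print(segment_files(368769))
    print(find_segment([0, 368769, 737337], 500000))  # 368769
```

Every segment roll creates a new set of these files, and retention later deletes old ones. That file churn is the metadata workload that matters when the log directory sits on a shared filesystem.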

Ceph Storage

Ceph is a software-defined storage system that provides a unified platform for various storage needs. Its core components are:

- RADOS (Reliable Autonomic Distributed Object Store): The foundation of Ceph, managing data as objects distributed across the cluster.
- OSDs (Object Storage Daemons): Store data on physical disks and handle replication, erasure coding, and recovery.
- Monitors (MONs): Maintain the cluster map and ensure consensus.
- Managers (MGRs): Provide additional monitoring and interfaces to external management systems.
- CRUSH Algorithm: Determines data placement and retrieval without a central lookup table, enabling scalability and dynamic rebalancing.
- Storage Interfaces:
  - Ceph Block Device (RBD): Provides network block devices, suitable for VMs or mounting filesystems.
  - CephFS: A POSIX-compliant distributed file system that uses Metadata Servers (MDS) to manage file metadata.
  - Ceph Object Gateway (RGW): Offers an S3/Swift-compatible object storage interface.

Ceph is designed for durability through replication or erasure coding and scales by adding more OSDs.

![Ceph Architecture [11]](/blog/apache-kafka-ceph-deployment-approaches-explained-comparison/2.png)

How Kafka Can Leverage Ceph Storage

There are primarily three ways Kafka can interact with Ceph for its storage needs:

- Kafka logs on CephFS: Storing Kafka's log.dirs directly on a CephFS mount.
- Kafka logs on Ceph RBD: Each Kafka broker uses a dedicated Ceph RBD image, on which a traditional filesystem (like XFS or EXT4) is layered for log.dirs.
- Kafka with Tiered Storage using Ceph RGW: Kafka's native tiered storage feature (KIP-405) offloads older log segments to remote storage through a pluggable storage plugin. With an S3-compatible plugin, Ceph RGW can serve as that store.

Let's explore each approach.

Kafka log.dirs on CephFS

Concept

In this setup, Kafka brokers mount a CephFS filesystem and configure their log.dirs path to point to a directory within CephFS. Since CephFS is POSIX-compliant, Kafka can, in theory, operate as it would on a local filesystem.

Potential Advantages

- Shared Storage: CephFS provides a shared namespace, which might seem appealing for certain operational aspects, though Kafka itself doesn't require shared write access to log segments between brokers.
- Centralized Storage Management: Storage is managed by the Ceph cluster.

Challenges and Considerations

- MDS Performance & Scalability: CephFS relies on Metadata Servers (MDS) for all metadata operations (file creation, deletion, lookups, attribute changes). Kafka's log management involves frequent segment creation, deletion, and index file operations, which can put significant load on the MDS. An overloaded MDS can become a bottleneck for the entire Kafka cluster.
- Latency: Network latency is inherent in any distributed filesystem. Kafka is sensitive to disk I/O latency for both writes (acknowledgments) and reads (consumers). CephFS operations involve network round-trips to OSDs and potentially the MDS, which can increase latency compared to local SSDs/NVMe. Slow fsync() operations on CephFS have also been reported, which could impact Kafka if explicit flushes are configured or required by its internal mechanisms.
- Stability Concerns: Community discussions and bug reports have historically indicated stability issues with the CephFS client or MDS under certain workloads or conditions, potentially leading to client hangs or loss of access. Such issues would be catastrophic for Kafka brokers.
- mmap Issues: Kafka uses memory-mapped files (mmap) for its offset index and time index files. There are known issues with mmap behavior on network filesystems, potentially leading to index corruption or severe performance degradation. This is a significant risk factor for running Kafka on CephFS.
- Complexity: CephFS itself is a complex distributed system. Troubleshooting performance or stability issues requires understanding both Kafka and the intricacies of CephFS and its MDS.
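To see why mmap is the sensitive part, consider the shape of Kafka's offset index. It is a preallocated file of fixed 8-byte entries: a 4-byte offset relative to the segment's base offset, followed by a 4-byte position in the `.log` file. The broker accesses the index through a memory mapping and binary-searches it to translate a fetch offset into a file position. The Python sketch below mimics that access pattern with the standard `mmap` module. The entry layout follows Kafka's format, but the function names are hypothetical and this is not Kafka's implementation. On CephFS, every page these functions touch depends on the client's mmap coherency and writeback behavior.

```python
import mmap
import os
import struct
import tempfile

# One offset-index entry: offset relative to the segment's base offset, then the
# byte position of that record in the .log file, as big-endian 32-bit integers.
ENTRY = struct.Struct(">ii")


def write_index(path: str, entries: list[tuple[int, int]], max_entries: int) -> None:
    """Preallocate an index file and fill it through a shared memory mapping."""
    if max_entries < 1 or len(entries) > max_entries:
        raise ValueError("max_entries must be >= 1 and >= len(entries)")
    with open(path, "wb") as f:
        f.truncate(max_entries * ENTRY.size)  # zero-filled, fixed-size file
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
        for slot, (relative_offset, position) in enumerate(entries):
            ENTRY.pack_into(mm, slot * ENTRY.size, relative_offset, position)
        mm.flush()  # write dirty pages back to the file (msync on POSIX)


def lookup(path: str, entries: int, target: int):
    """Return the last (relative_offset, position) entry with relative_offset <= target."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        lo, hi, best = 0, entries - 1, None
        while lo <= hi:
            mid = (lo + hi) // 2
            rel, pos = ENTRY.unpack_from(mm, mid * ENTRY.size)
            if rel <= target:
                best, lo = (rel, pos), mid + 1
            else:
                hi = mid - 1
        return best


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), f"{0:020d}.index")
    write_index(path, [(0, 0), (10, 4096), (20, 8192)], max_entries=8)
    print(lookup(path, entries=3, target=15))  # (10, 4096): scan the .log from byte 4096
```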

Summary

Given the potential for MDS bottlenecks, network latency impact, historical stability concerns, and critical issues with mmap over network filesystems, running Kafka's primary log.dirs directly on CephFS is generally not recommended for production environments requiring high performance and stability.

Kafka log.dirs on Ceph RBD

Concept

Each Kafka broker is provisioned with one or more Ceph RBD images. A standard filesystem (commonly XFS or EXT4) is created on each RBD image, and the broker mounts this filesystem to store its log.dirs. This approach is common for running VMs on Ceph, and in Kubernetes environments CSI (Container Storage Interface) drivers for Ceph RBD make it straightforward.
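The Python sketch below illustrates that provisioning flow outside Kubernetes. It builds, but does not run, the commands for one broker: create an RBD image, map it through the kernel RBD client, format it with XFS, and mount it where log.dirs will point. Several details are hypothetical or assumed:

- The pool name, image naming scheme, size, and mount point are hypothetical.
- The `/dev/rbd/<pool>/<image>` path assumes the udev rules shipped with ceph-common.
- `noatime` is a common Kafka mount option, not a requirement.

In Kubernetes, a Ceph CSI driver performs the equivalent steps when it provisions a PersistentVolume.

```python
import shlex


def broker_volume_commands(pool: str, broker_id: int, size_gib: int, mountpoint: str) -> list[list[str]]:
    """Commands that would provision one broker's RBD-backed log directory (dry run)."""
    image = f"{pool}/kafka-broker-{broker_id}"  # hypothetical naming scheme
    device = f"/dev/rbd/{image}"  # udev symlink, assuming ceph-common's rules are installed
    return [
        ["rbd", "create", image, "--size", f"{size_gib}G"],
        ["rbd", "map", image],
        ["mkfs.xfs", device],
        ["mkdir", "-p", mountpoint],
        ["mount", "-o", "noatime", device, mountpoint],
    ]


if __name__ == "__main__":
    for cmd in broker_volume_commands("kafka", 0, 500, "/var/lib/kafka/data"):
        print(shlex.join(cmd))
    # The broker's server.properties would then set: log.dirs=/var/lib/kafka/data
```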

Potential Advantages

- Direct Block Access: RBD provides block-level access, which is generally more performant for I/O-intensive applications than a distributed filesystem layer, as it bypasses the MDS.
- Dedicated Storage per Broker: Each broker has its own RBD image(s), isolating I/O to some extent (though all I/O still goes to the shared Ceph cluster).
- Mature Technology: RBD is a well-established and widely used component of Ceph.
- Ceph Durability: Data stored on RBD benefits from Ceph's replication or erasure coding.

Challenges and Considerations

- Ceph Cluster Performance: The performance of Kafka on RBD is directly tied to the underlying Ceph cluster's capabilities: OSD media (SSD/NVMe is highly recommended), network bandwidth, and CPU resources for the OSDs. An under-provisioned or poorly tuned Ceph cluster will lead to poor Kafka performance.
- Network Latency: Like CephFS, RBD is network-attached storage, and every I/O that reaches the RBD device incurs network latency. This latency is generally lower than CephFS for raw data I/O, but it is still higher than local NVMe drives.
- Filesystem Choice on RBD: The choice of filesystem (e.g., XFS, EXT4) on the RBD image and its mount options can impact performance. XFS is often favored for its scalability and performance with large files and parallel I/O.
- librbd Caching: Ceph RBD has a client-side caching layer, the librbd cache. It applies to librbd-based clients such as QEMU or rbd-nbd; the kernel RBD driver (krbd) relies on the OS page cache instead. Proper configuration of this cache (e.g., write-back or write-through) is crucial. Write-back caching can improve performance but carries a risk of data loss if the client (the Kafka broker host) crashes before data is flushed to the Ceph cluster. Write-through is safer but slower. The interaction between the librbd cache and Kafka's reliance on the OS page cache needs careful consideration.
- mmap Concern (Potentially Mitigated): Network filesystems like NFS rely on complex client-server cache coherency protocols for mmap, which is the source of their typical mmap issues. Although RBD is a network block device, those issues might be less severe here if the filesystem on RBD behaves more like a local disk with respect to mmap semantics. However, thorough testing is essential, as Kafka's index files are critical.
- Replication Layers: Kafka replicates data between brokers (e.g., a replication factor of 3). Ceph also replicates data across OSDs (e.g., a pool size of 3). Running Kafka with RF=3 on a Ceph pool of size 3 means 9 copies of the data, which is excessive and hurts both write performance and capacity.
  - A common question is whether to reduce Kafka's RF (e.g., to 1) and rely solely on Ceph for data durability. This can reduce write amplification, but it has significant implications for Kafka's own availability mechanisms, leader election, and ISR (In-Sync Replica) management. If a broker fails, Kafka needs its own replicas to fail over quickly. Relying only on Ceph means the broker's storage (the RBD image) is durable, but Kafka itself might not be able to recover partitions as seamlessly without its native replication. This approach is generally not recommended without deep expertise and a clear understanding of the failure modes.
- Complexity and Tuning: Requires careful tuning of the Ceph cluster (pools, CRUSH rules, OSDs, network) and librbd settings.
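The replication trade-off above is simple arithmetic, sketched here in Python. Raw capacity is Kafka's replication factor multiplied by Ceph's per-byte overhead. For a replicated pool that overhead is the pool size; if the RBD data pool is erasure-coded, it is (k + m) / k. Kafka-level write availability with acks=all depends only on how many replicas can fail before the in-sync set drops below min.insync.replicas. The function names are illustrative. The model also ignores Ceph's own min_size behavior and recovery traffic.

```python
def raw_capacity(logical: float, kafka_rf: int, ceph_overhead: float) -> float:
    """Raw Ceph capacity consumed by `logical` units of Kafka data."""
    return logical * kafka_rf * ceph_overhead


def ec_overhead(k: int, m: int) -> float:
    """Storage overhead of an erasure-coded pool with k data and m coding chunks."""
    return (k + m) / k


def broker_failures_tolerated(kafka_rf: int, min_insync_replicas: int) -> int:
    """Broker failures a partition survives while still accepting acks=all writes."""
    return max(kafka_rf - min_insync_replicas, 0)


if __name__ == "__main__":
    tib = 1.0
    print(raw_capacity(tib, 3, 3))                  # RF=3 on a size-3 pool: 9.0
    print(raw_capacity(tib, 3, ec_overhead(4, 2)))  # RF=3 on an EC 4+2 pool: 4.5
    print(raw_capacity(tib, 1, 3))                  # RF=1 on a size-3 pool: 3.0
    print(broker_failures_tolerated(3, 2))          # 1
    print(broker_failures_tolerated(1, 1))          # 0: partition offline until its broker returns
```

The RF=1 case shows why relying on Ceph alone is risky. The bytes survive on RBD, but the partition stays offline until the broker, or a replacement host that maps the same image, is back.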

Summary

Running Kafka logs on Ceph RBD is more viable than CephFS but demands a robust, low-latency, and well-tuned Ceph cluster, preferably using SSDs or NVMe for OSDs. The performance will likely not match dedicated local NVMe drives, especially for latency-sensitive operations. The mmap concern needs verification. It's a trade-off between centralized storage management/scalability offered by Ceph and the raw performance/simplicity of local disks. This is seen in practice, for example, when deploying Kafka on Kubernetes platforms that use Ceph for persistent volumes.

Kafka with Tiered Storage using Ceph RGW

Concept

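This is becoming a more common and often recommended pattern. Apache Kafka supports tiered storage through KIP-405 (early access in Kafka 3.6, production-ready in 3.9). Tiered storage offloads older, less frequently accessed log segments from expensive local broker storage to a cheaper, scalable remote store. Kafka defines a pluggable RemoteStorageManager interface but does not ship an S3 implementation, so a plugin is required. Because Ceph RGW provides an S3-compatible interface, it is a suitable candidate for this remote tier when paired with an S3-capable plugin.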
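The sketch below, written as Python that renders properties text, shows the configuration involved. The broker and topic keys come from KIP-405:

- remote.log.storage.system.enable
- remote.log.storage.manager.class.name
- remote.log.storage.manager.class.path
- remote.storage.enable
- local.retention.ms
- retention.ms

`rsm.config.` is the default value of remote.log.storage.manager.impl.prefix, under which settings are passed through to the plugin. The plugin class, its classpath, and the endpoint/bucket keys below are hypothetical placeholders for whichever S3-compatible RemoteStorageManager plugin is used with Ceph RGW. A real deployment may need further settings not shown here.

```python
HOUR_MS = 60 * 60 * 1000
DAY_MS = 24 * HOUR_MS


def broker_props(rsm_class: str, rsm_class_path: str, rsm_settings: dict[str, str]) -> dict[str, str]:
    """Broker-side settings that enable tiered storage with a given RSM plugin."""
    props = {
        "remote.log.storage.system.enable": "true",
        "remote.log.storage.manager.class.name": rsm_class,
        "remote.log.storage.manager.class.path": rsm_class_path,
    }
    # Keys under the (default) rsm.config. prefix are handed to the plugin.
    props.update({f"rsm.config.{k}": v for k, v in rsm_settings.items()})
    return props


def topic_props(local_hours: int, total_days: int) -> dict[str, str]:
    """Topic-level settings: keep recent data locally, older data only in the remote tier."""
    return {
        "remote.storage.enable": "true",
        "local.retention.ms": str(local_hours * HOUR_MS),
        "retention.ms": str(total_days * DAY_MS),  # total retention, local + remote
    }


def to_properties(props: dict[str, str]) -> str:
    """Render key=value lines in a stable (sorted) order."""
    return "\n".join(f"{k}={v}" for k, v in sorted(props.items()))


if __name__ == "__main__":
    broker = broker_props(
        "com.example.S3RemoteStorageManager",  # hypothetical plugin class
        "/opt/kafka/plugins/s3-rsm/*",  # hypothetical plugin location
        {
            "endpoint": "http://rgw.example.internal:8080",  # hypothetical key and RGW URL
            "bucket": "kafka-tiered",  # hypothetical key and bucket name
        },
    )
    print(to_properties(broker))
    print(to_properties(topic_props(local_hours=6, total_days=30)))
```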

How it Works

- Primary Storage: Kafka brokers continue to use local disks (preferably fast SSDs/NVMe) for active log segments, ensuring low latency for writes and recent reads.
- Remote Tier: Once a log segment is rolled (closed), Kafka's tiered storage mechanism copies it to the Ceph RGW (S3) bucket. Local copies are deleted once they exceed the topic's local retention limits (local.retention.ms or local.retention.bytes).
- Consumer Reads: If a consumer needs to read data from a segment that has been moved to Ceph RGW, Kafka fetches it from RGW, potentially caching it locally for a short period.
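The lifecycle above can be modeled in a few lines of Python. This is a simplified sketch, not Kafka's RemoteLogManager. It assumes every rolled segment has already been uploaded and applies only a time-based local retention (Kafka also supports local.retention.bytes). It also ignores remote log metadata and any plugin-side caching. The `Segment` type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    base_offset: int
    last_offset: int        # inclusive
    max_timestamp_ms: int   # newest record timestamp in the segment
    active: bool = False    # the segment currently being appended to


def tier_locations(segments: list[Segment], now_ms: int, local_retention_ms: int) -> dict[int, tuple[str, ...]]:
    """Where each segment lives, keyed by base offset."""
    where = {}
    for seg in segments:
        if seg.active:
            where[seg.base_offset] = ("local",)           # never uploaded while open
        elif now_ms - seg.max_timestamp_ms > local_retention_ms:
            where[seg.base_offset] = ("remote",)          # uploaded, local copy expired
        else:
            where[seg.base_offset] = ("local", "remote")  # uploaded, local copy retained
    return where


def read_from(segments: list[Segment], where: dict[int, tuple[str, ...]], offset: int) -> str:
    """Which tier serves a consumer fetch at `offset`."""
    for seg in segments:
        if seg.base_offset <= offset <= seg.last_offset:
            return "local disk" if "local" in where[seg.base_offset] else "Ceph RGW"
    raise ValueError(f"offset {offset} is outside the log")


if __name__ == "__main__":
    hour = 3_600_000
    now = 100 * hour
    segs = [
        Segment(0, 999, now - 10 * hour),
        Segment(1000, 1999, now - 1 * hour),
        Segment(2000, 2499, now, active=True),
    ]
    where = tier_locations(segs, now, local_retention_ms=6 * hour)
    print(read_from(segs, where, 500))   # Ceph RGW
    print(read_from(segs, where, 1500))  # local disk
```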

Potential Advantages

- Cost Savings: Reduces the need for large, expensive local storage on brokers. Object storage (like Ceph RGW) is generally more cost-effective for long-term retention.
- Scalability & Elasticity: Storage capacity for older data scales with the Ceph cluster (by adding OSDs), decoupled from broker compute resources.
- Performance for Active Data: Retains high performance for writes and reads of active data by using local disks.
- Simplified Broker Storage Management: Brokers need to manage less local data.

Challenges and Considerations

- Read Latency for Old Data: Accessing data tiered to Ceph RGW will incur higher latency than reading from local disk, involving network calls to the RGW and then to RADOS. Prefetching and caching mechanisms in Kafka's tiered storage implementation aim to mitigate this.
- Network Bandwidth: Moving data to and from Ceph RGW consumes network bandwidth.
- Configuration Complexity: Setting up and configuring tiered storage requires careful planning of tiering policies, RGW performance tuning, and monitoring.
- Not for Active Logs: This approach is specifically for older data, not for the primary, active log segments where Kafka's low-latency writes and reads occur.

Summary

Using Ceph RGW as a remote tier for Kafka's tiered storage is a highly practical and increasingly adopted solution. It balances performance for hot data with cost-effective, scalable storage for cold data. This is often the most recommended way to integrate Kafka with Ceph.

Comparing Solutions

| Feature | Kafka on CephFS (Primary Logs) | Kafka on Ceph RBD (Primary Logs) | Kafka Tiered Storage to Ceph RGW | Local Disk (Baseline) |
|---|---|---|---|---|
| Primary Use | Not Recommended | Possible with caveats | Older data | Active data |
| Performance | Low (MDS bottleneck, latency) | Medium (network latency, Ceph perf) | High (local) for active, Low (RGW) for tiered | Very High (esp. NVMe) |
| Complexity | High | High | Medium (adds tiered mgmt) | Low to Medium |
| Stability Risk | High (MDS, mmap) | Medium (Ceph perf, mmap check) | Low (well-defined interface) | Low |
| Scalability | Ceph scale | Ceph scale | Ceph RGW scale for cold data | Per-broker, manual effort |
| Cost Efficiency | Depends on Ceph | Depends on Ceph | Good (cheap object storage) | Higher for all-hot storage |

Conclusion

While it's technically possible to run Apache Kafka's primary log storage on CephFS or Ceph RBD, these approaches come with significant challenges, particularly concerning performance, stability (for CephFS), and complexity.

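- CephFS is generally not recommended for Kafka's primary logs due to MDS overhead and mmap concerns.
- Ceph RBD is a more viable option than CephFS but requires a high-performance Ceph cluster (ideally all-flash) and careful tuning, and it may still not match the performance of local NVMe drives. The implications of combining Kafka's replication with Ceph's replication, and potential mmap issues, need thorough evaluation.
- The most practical and increasingly popular way to combine Kafka and Ceph is by using Ceph RGW as a backend for Kafka's native Tiered Storage feature. This approach leverages the strengths of both systems: fast local storage for Kafka's active data and cost-effective, scalable object storage via Ceph RGW for older, archived data.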

The most practical and increasingly popular way to combine Kafka and Ceph is by using Ceph RGW as a backend for Kafka's native Tiered Storage feature. This approach leverages the strengths of both systems: fast local storage for Kafka's active data and cost-effective, scalable object storage via Ceph RGW for older, archived data.

Ultimately, the decision depends on specific requirements, existing infrastructure, and operational expertise. For most use cases demanding high performance from Kafka, dedicated local storage for active logs, possibly augmented with tiered storage to Ceph RGW for long-term retention, offers the best balance.

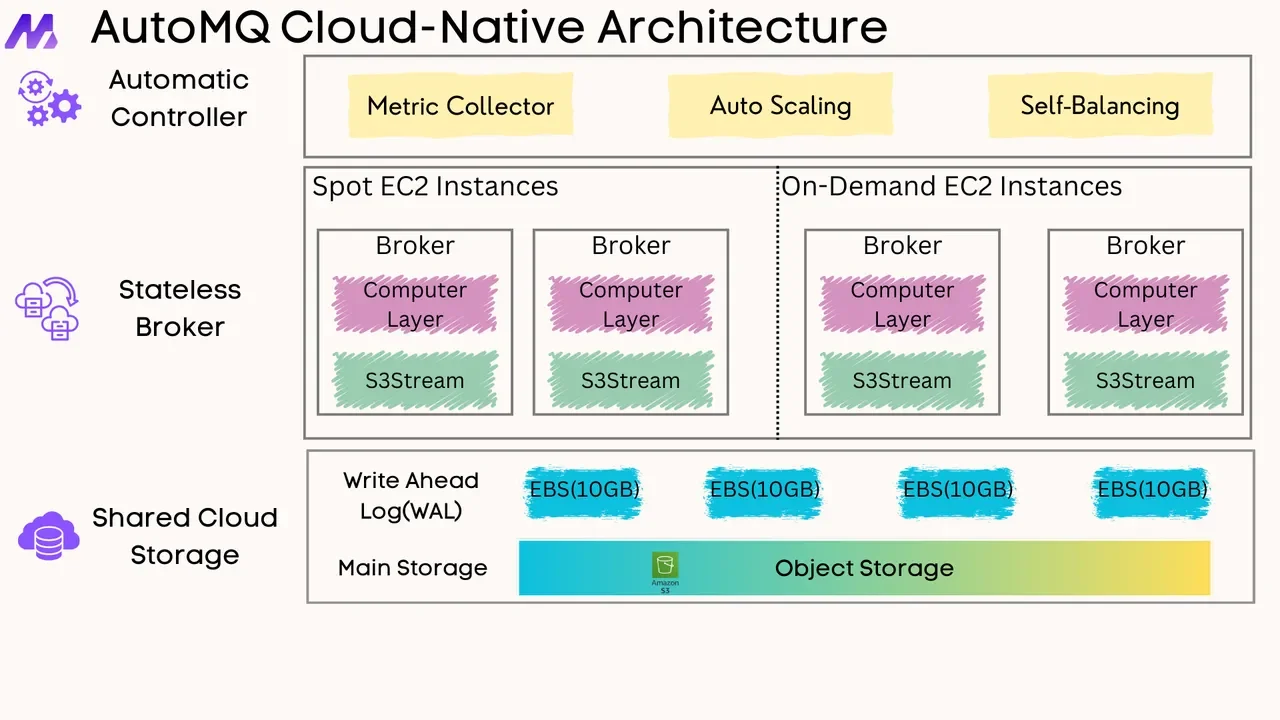

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ's source code is now available on GitHub. Big companies worldwide are using AutoMQ. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in Data Streaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging