Apache Kafka defined data streaming 15 years ago, but its disk-based architecture wasn't built for the cloud. AutoMQ keeps the Kafka API you trust and makes Kafka diskless.

Kafka's disk-based architecture creates hidden costs in the cloud: expensive storage replication, cross-AZ fees, slow scaling, and constant over-provisioning.

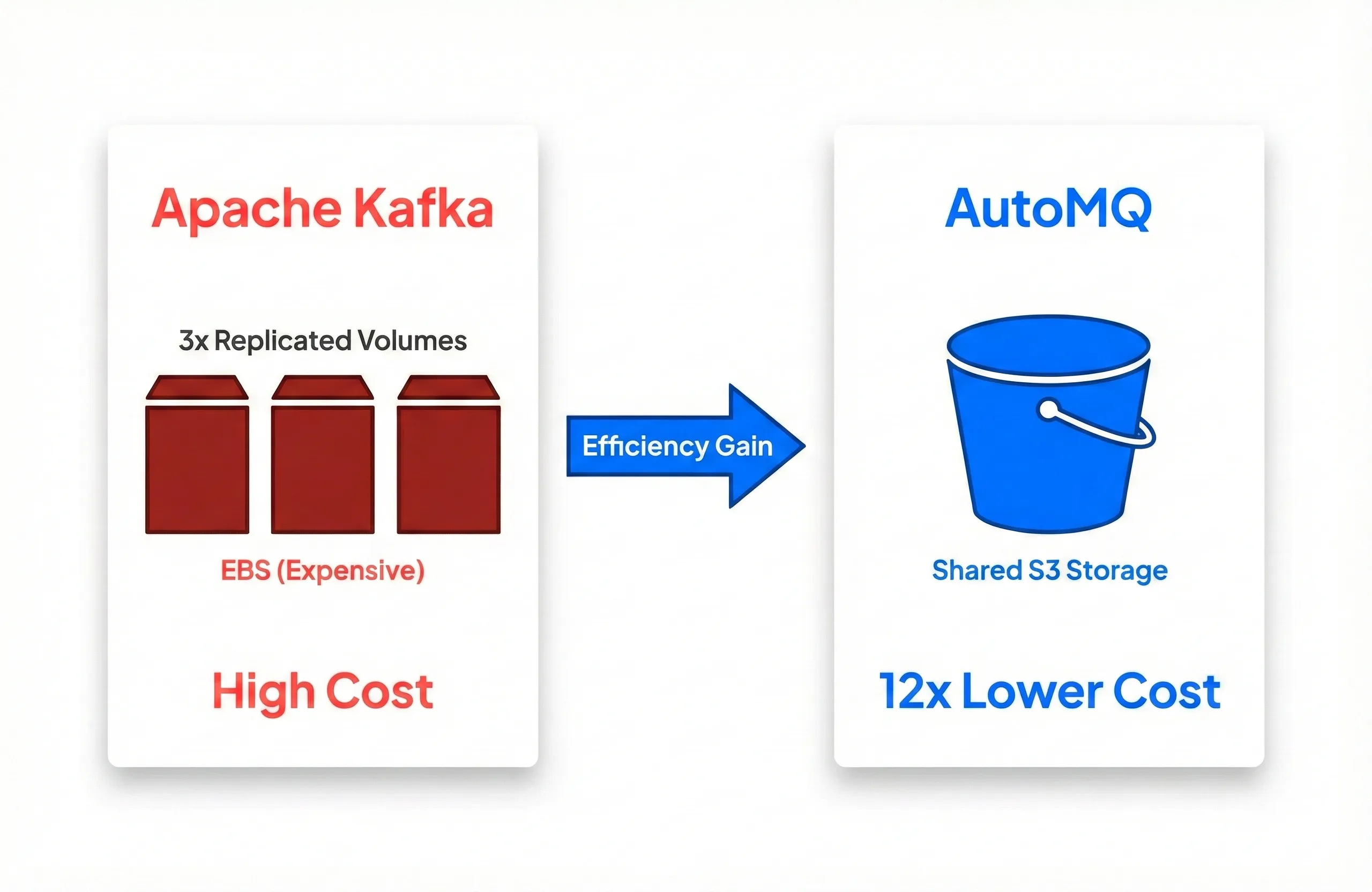

The Replication Tax: Kafka clusters typically run with a replication factor of 3, storing every byte three times on expensive EBS volumes.

Result: You're paying premium prices for data you rarely read.

S3-Native Storage — Data is written directly to object storage, no local disks needed.

Result: Over 90% lower storage costs, with 11 nines of durability built in.

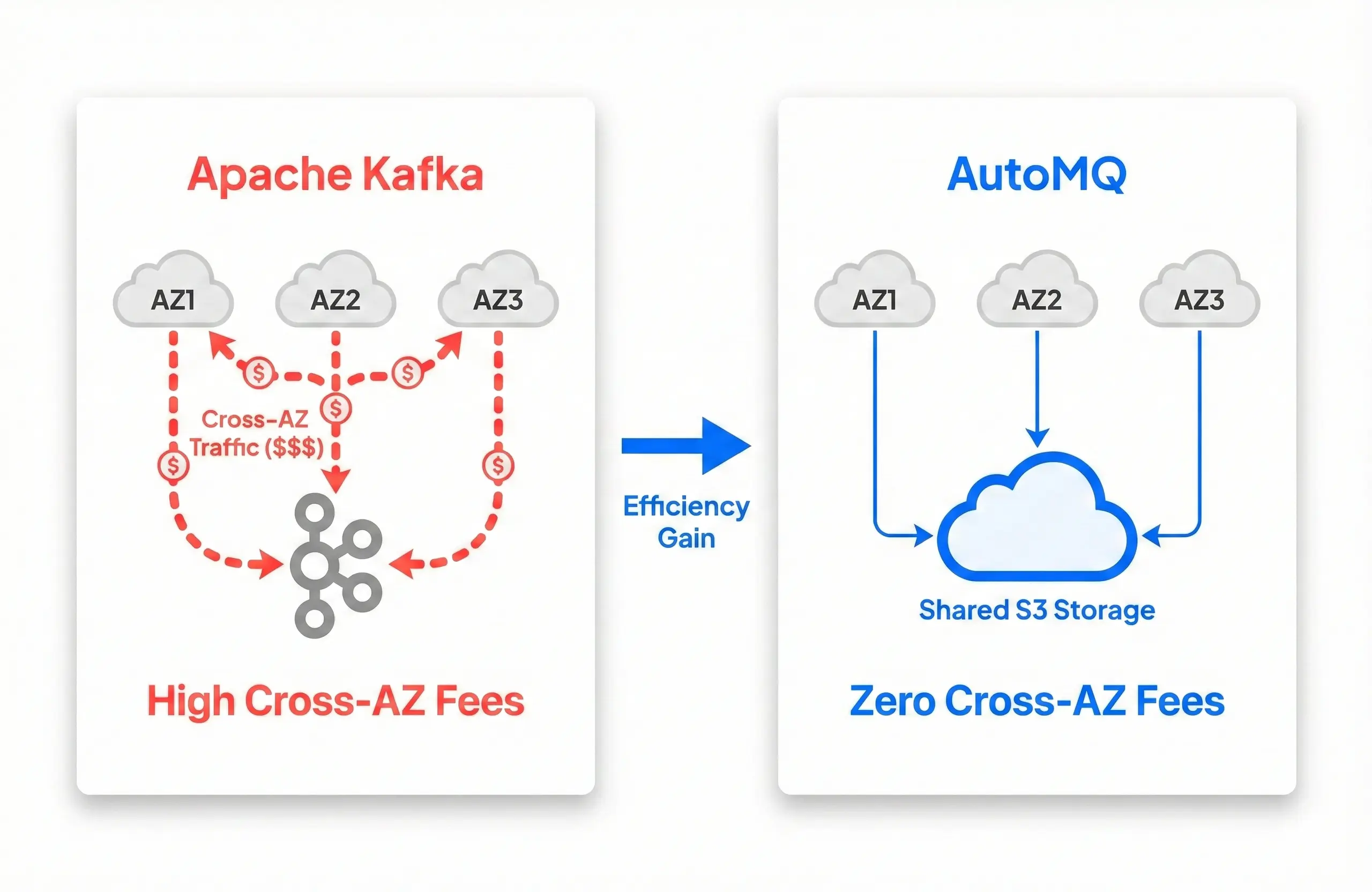

The Traffic Multiplier: A single message can cross AZ boundaries several times, from producer to leader, from leader to each consumer, and twice more for internal replication.

Result: Cross-AZ fees can exceed your compute costs.
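To make the multiplier concrete, here is a back-of-the-envelope model of a disk-based Kafka cluster. It is a sketch under stated assumptions, not a measurement: three AZs, a replication factor of 3 with one replica per AZ, leaders and clients spread evenly, and consumers reading from partition leaders (no rack-aware follower fetching). Under those assumptions a client's leader sits in another AZ two times out of three, while both follower copies always cross an AZ boundary. The `Workload` type and `kafka_cross_az_mib_s` function are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    write_mib_s: float  # producer throughput into the cluster
    read_mib_s: float   # total consumer throughput out of the cluster (all groups)


def kafka_cross_az_mib_s(w: Workload, azs: int = 3, replication_factor: int = 3) -> float:
    """Expected cross-AZ traffic for a disk-based Kafka cluster under a simple model.

    Illustrative assumptions: one replica per AZ (so replication_factor <= azs),
    leaders and clients spread evenly across AZs, and consumers fetching from the
    leader rather than a same-AZ follower.
    """
    if replication_factor > azs:
        raise ValueError("model assumes at most one replica per AZ")
    p_remote = (azs - 1) / azs  # chance a client's partition leader sits in another AZ
    produce = w.write_mib_s * p_remote  # producer -> leader
    replicate = w.write_mib_s * (replication_factor - 1)  # leader -> each follower, always remote
    consume = w.read_mib_s * p_remote  # leader -> consumers
    return produce + replicate + consume


total = kafka_cross_az_mib_s(Workload(write_mib_s=100, read_mib_s=300))
per_day_gib = total * 86_400 / 1024
print(round(total, 2), round(per_day_gib))  # 466.67 39375
```

With the 100 MiB/s write / 300 MiB/s read workload used in the savings calculator, this model estimates about 467 MiB/s of cross-AZ traffic, roughly 39,375 GiB per day. Follower fetching would shrink the consumer term, but the replication term remains.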

Single-AZ Traffic, Multi-AZ Durability — Reads and writes stay within one AZ; S3 handles cross-AZ replication for you.

Result: Near-zero cross-AZ data transfer fees.

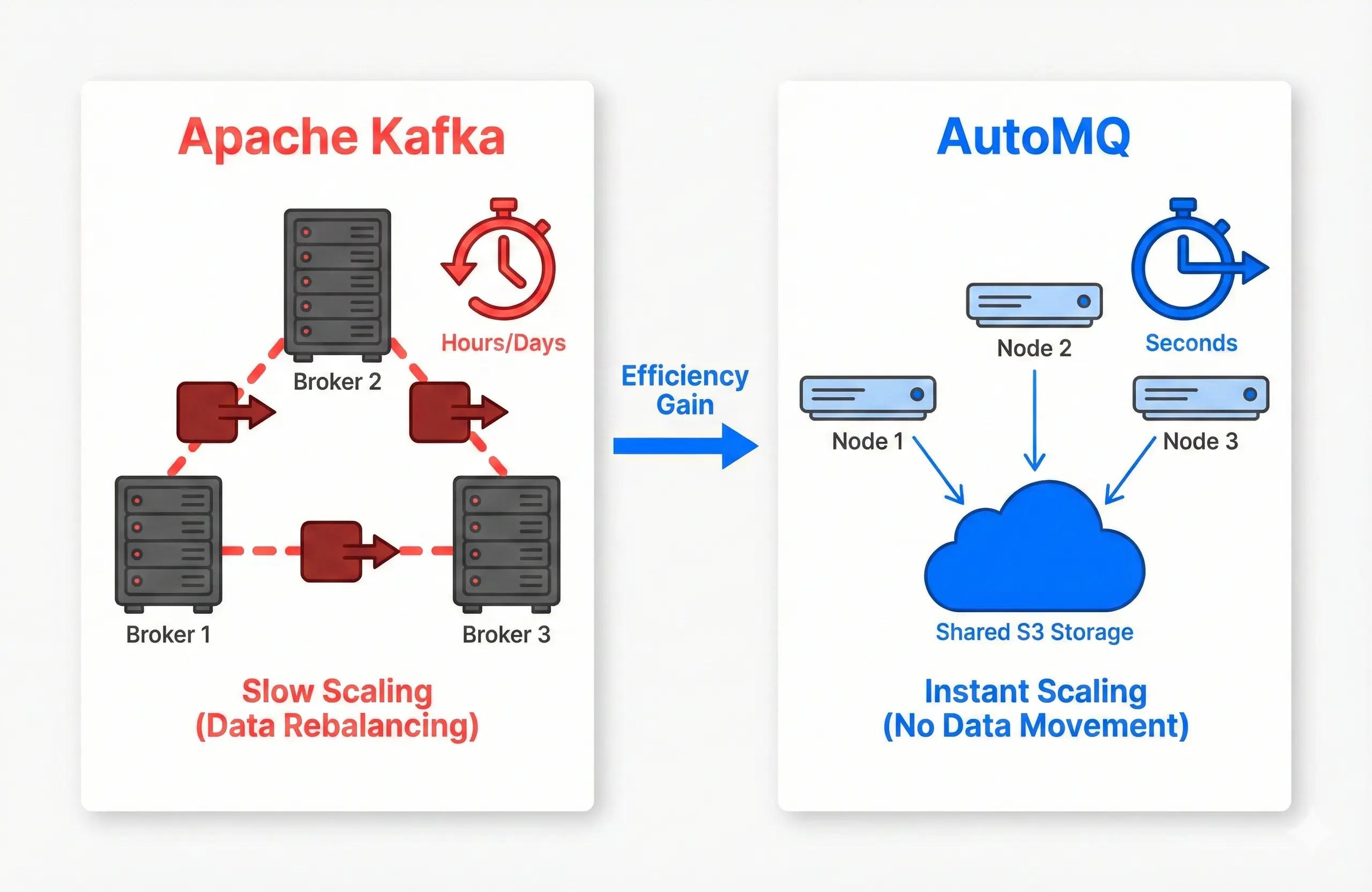

Coupled Compute & Storage — Adding brokers means rebalancing terabytes of data across the cluster.

Result: Hours of risky operations, often requiring maintenance windows.

Stateless Brokers — Partitions are reassigned by updating metadata, not moving data.

Result: Scale from 3 to 30 brokers in under 10 seconds.
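The difference between moving data and updating metadata can be shown with a deliberately tiny model. This is a hypothetical toy, not Kafka's reassignment tool or AutoMQ's balancer: each partition has a single owner (replication is ignored), placement is naive round-robin, and every name below is invented for the sketch. The same rebalance runs against local disks and against shared object storage, and the function reports how many bytes had to be copied.

```python
from dataclasses import dataclass

GiB = 1024**3


@dataclass
class ToyCluster:
    assignment: dict[int, int]       # partition id -> owning broker id (metadata)
    partition_bytes: dict[int, int]  # retained bytes per partition
    shared_storage: bool             # True: data lives in object storage, brokers hold no partition data

    def add_brokers(self, new_brokers: list[int]) -> int:
        """Spread partitions round-robin over old + new brokers; return bytes copied."""
        brokers = sorted(set(self.assignment.values()) | set(new_brokers))
        copied = 0
        for i, pid in enumerate(sorted(self.assignment)):
            target = brokers[i % len(brokers)]
            if target == self.assignment[pid]:
                continue
            if not self.shared_storage:
                copied += self.partition_bytes[pid]  # local disks: the data must follow the partition
            self.assignment[pid] = target  # both models: ownership changes in metadata
        return copied


def demo(shared_storage: bool) -> int:
    parts = range(6)
    cluster = ToyCluster({p: p % 3 for p in parts}, {p: 100 * GiB for p in parts}, shared_storage)
    return cluster.add_brokers([3, 4, 5]) // GiB


print(demo(shared_storage=False), demo(shared_storage=True))  # 300 0
```

Growing from three to six brokers moves three 100 GiB partitions. With local disks that means copying 300 GiB; with shared storage only the ownership map changes.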

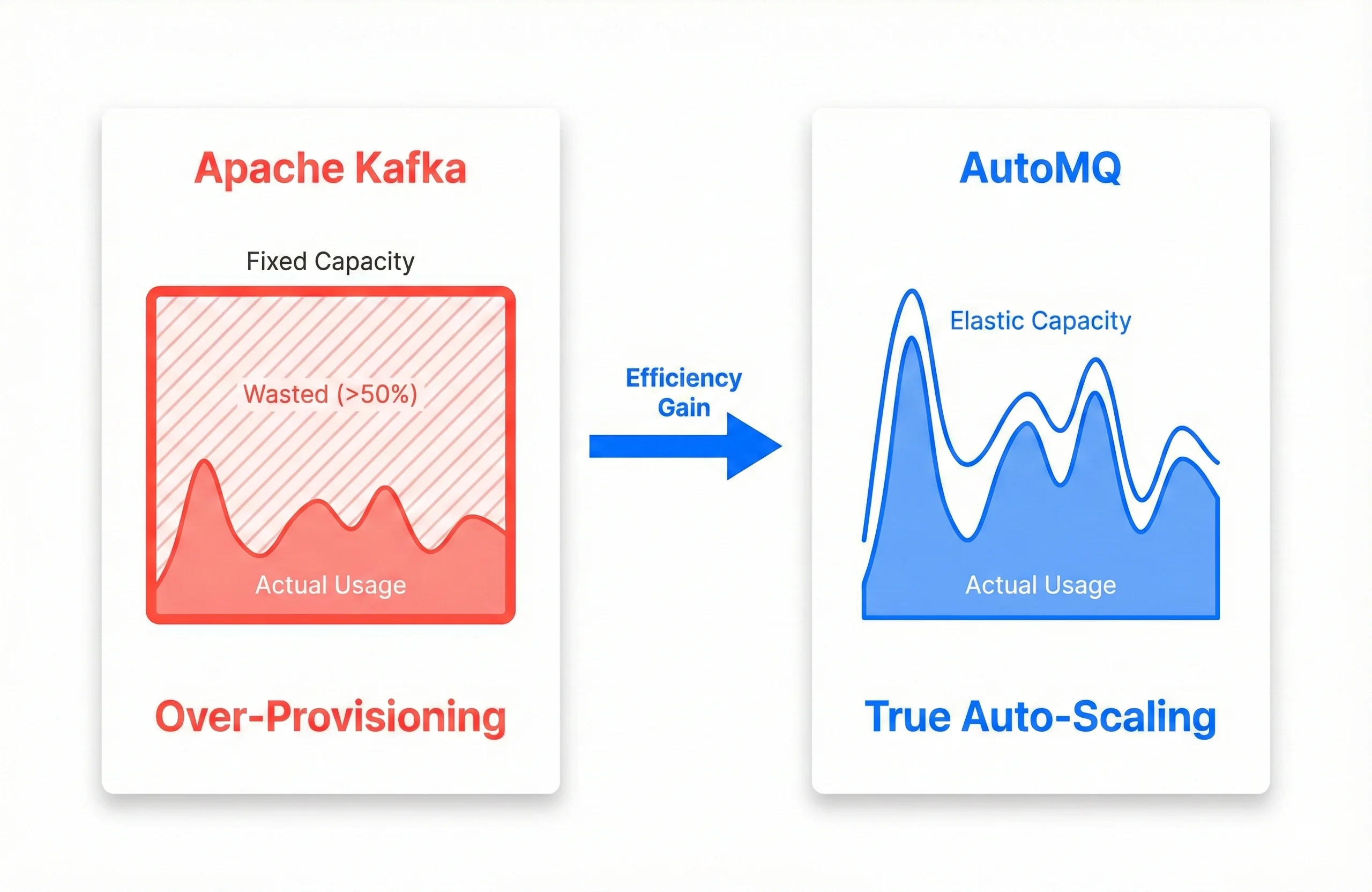

Capacity Guesswork — Slow scaling forces you to provision for peak traffic around the clock.

Result: Over 50% of provisioned resources sit idle most of the time.

Automatic Right-Sizing — AutoMQ scales compute up and down based on real-time traffic.

Result: Pay only for what you use, not what you might need.
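The arithmetic behind right-sizing can be sketched by comparing a fleet sized for peak all day with one re-sized every hour. Everything here is a hypothetical assumption for illustration, not AutoMQ's autoscaling policy: a per-broker capacity of 50 MiB/s, 20% headroom, a three-broker minimum, and a made-up daily traffic profile.

```python
import math


def brokers_needed(mib_s: float, per_broker_mib_s: float, headroom: float = 0.2, floor: int = 3) -> int:
    """Brokers required to carry mib_s with spare headroom, never fewer than floor."""
    return max(floor, math.ceil(mib_s * (1 + headroom) / per_broker_mib_s))


def broker_hours(hourly_mib_s: list[float], per_broker_mib_s: float) -> tuple[int, int]:
    """Compare a fleet sized for the peak all day with one re-sized every hour."""
    peak_fleet = brokers_needed(max(hourly_mib_s), per_broker_mib_s)
    static = peak_fleet * len(hourly_mib_s)
    elastic = sum(brokers_needed(t, per_broker_mib_s) for t in hourly_mib_s)
    return static, elastic


# Hypothetical day: 20 quiet hours at 40 MiB/s, 4 peak hours at 200 MiB/s.
profile = [40.0] * 20 + [200.0] * 4
static, elastic = broker_hours(profile, per_broker_mib_s=50.0)
print(static, elastic, f"{1 - elastic / static:.0%}")  # 120 80 33%
```

The three-broker floor limits the savings in this example; spikier traffic (a larger gap between average and peak) widens the difference.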

From operational burden to business agility—see how teams are transforming their Kafka infrastructure.

When Grab migrated to AutoMQ, they didn't just save money—they saved their team's energy. Instead of fighting fires and managing clusters, their engineers now focus on core business logic.

Benefit: Zero operational burden.

Geely, the automotive group behind Volvo, Lotus, and Smart, faced a critical issue: during a traffic surge, their self-managed Kafka couldn't scale fast enough, which cost them business.

With AutoMQ, capacity planning is obsolete. The system adapts to your traffic instantly.

Benefit: Elasticity that protects revenue.

AutoMQ's Diskless architecture is purpose-built to leverage cloud primitives: S3 for storage, stateless compute for elasticity, and near-zero cross-AZ traffic for network efficiency.

Result: Up to 90% reduction in total infrastructure costs across compute, storage, and network.

See how much you can save

Typical production workload: 100 MiB/s write · 300 MiB/s read · 5,000 partitions · 72h retention

* Pricing based on publicly listed prices (AWS us-east-1).
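The storage volumes behind the calculator's workload can be checked by hand. This sketch uses a hypothetical helper and assumes uncompressed data. It ignores EBS free-space headroom and any write-ahead buffer, and it counts only retained bytes, not prices.

```python
SECONDS_PER_HOUR = 3600
MIB_PER_GIB = 1024


def retained_gib(write_mib_s: float, retention_hours: float, copies: int = 1) -> float:
    """Data held once the retention window is full: rate x window x number of copies."""
    return write_mib_s * retention_hours * SECONDS_PER_HOUR * copies / MIB_PER_GIB


kafka_ebs = retained_gib(100, 72, copies=3)  # replication factor 3, each copy on broker disks
s3_logical = retained_gib(100, 72)  # one logical copy; S3 provides durability internally
print(kafka_ebs, s3_logical)  # 75937.5 25312.5
print(f"{kafka_ebs / 1024:.1f} TiB vs {s3_logical / 1024:.1f} TiB")  # 74.2 TiB vs 24.7 TiB
```

Because per-GiB prices differ between EBS and S3, the final cost ratio depends on the rates in use; this sketch covers only the volumes.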

Leading companies in automotive, fintech, and gaming run mission-critical streaming workloads on AutoMQ's Diskless architecture.


Available on Cloud Marketplaces

Subscribe to AutoMQ directly from your preferred cloud platform