Overview

Integrating Apache Kafka with Google Cloud Storage (GCS) allows organizations to build robust, scalable, and cost-effective data pipelines for long-term storage, archival, and batch analytics. This blog post explores how this integration works, covering key concepts, common approaches, best practices, and important considerations.

Understanding the Core Components

Before diving into the integration, let's briefly understand Apache Kafka and Google Cloud Storage.

Apache Kafka

Kafka is a distributed event streaming platform capable of handling trillions of events a day. At its core, Kafka stores streams of records (called events or messages) in categories called topics. Each topic is divided into one or more partitions, which are ordered, immutable sequences of records. Kafka brokers are the servers that form a Kafka cluster and manage the storage and replication of these partitions. Producers are client applications that publish (write) events to Kafka topics, while consumers subscribe to (read and process) these events. Kafka's distributed design provides fault tolerance and scalability.

![Apache Kafka Architecture [12]](/blog/apache-kafka-google-cloud-storage-streaming-integration-guide/1.png)

Google Cloud Storage (GCS)

Google Cloud Storage is an enterprise public cloud storage platform that allows worldwide storage and retrieval of any amount of data at any time. GCS combines the performance and scalability of Google's cloud with advanced security and sharing capabilities. Data in GCS is stored in buckets, which are containers for objects (files). GCS offers several storage classes (Standard, Nearline, Coldline, and Archive) to optimize costs based on data access frequency and retention needs.

![Google Cloud Storage Storage Classes [13]](/blog/apache-kafka-google-cloud-storage-streaming-integration-guide/2.png)

How Kafka Integrates with Google Cloud Storage

The primary mechanism for integrating Kafka with GCS is Kafka Connect, a framework for reliably streaming data between Apache Kafka and other systems. Specifically, a GCS Sink Connector is used to read data from Kafka topics and write it to GCS buckets.

Kafka Connect and the GCS Sink Connector

Kafka Connect runs as a separate, scalable service from the Kafka brokers. Connectors are plugins that implement the logic for data movement. A sink connector exports data from Kafka to a target system, in this case, GCS.

The GCS Sink Connector typically performs the following functions:

- Subscribes to one or more Kafka topics.
- Consumes records from these topics.
- Batches records based on size, time, or number of records.
- Formats the records into a specified file format (e.g., Avro, Parquet, JSON, CSV).
- Writes these batched records as objects to a designated GCS bucket, often partitioning them into logical directory structures within the bucket.
- Manages offsets: it commits the Kafka offsets of the records successfully written to GCS, ensuring data reliability.

The data flow is typically:

Kafka Producers -> Kafka Topics -> Kafka Connect (GCS Sink Connector) -> GCS Buckets
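
The steps above can be sketched as a toy model in Python. This is not a real connector API: `SinkTaskSketch`, the in-memory `bucket` dictionary, and the object naming scheme are all assumptions made for illustration. The sketch shows two ideas from the list: records are buffered and flushed in batches, and the offset is committed only after the write has succeeded.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    topic: str
    partition: int
    offset: int
    value: bytes


@dataclass
class SinkTaskSketch:
    """Toy model of a sink task handling one topic partition (hypothetical)."""
    flush_size: int
    bucket: dict = field(default_factory=dict)     # stands in for a GCS bucket
    committed: dict = field(default_factory=dict)  # (topic, partition) -> next offset
    _buffer: list = field(default_factory=list)

    def put(self, record: Record) -> None:
        self._buffer.append(record)
        if len(self._buffer) >= self.flush_size:
            self.flush()

    def flush(self) -> None:
        if not self._buffer:
            return
        first, last = self._buffer[0], self._buffer[-1]
        # The name is derived from topic, partition, and starting offset, so a
        # retry after a crash overwrites the same object instead of adding a copy.
        name = f"{first.topic}/partition={first.partition}/{first.offset:010d}.jsonl"
        self.bucket[name] = b"\n".join(r.value for r in self._buffer)
        # Commit only after the write above has succeeded.
        self.committed[(first.topic, first.partition)] = last.offset + 1
        self._buffer.clear()


task = SinkTaskSketch(flush_size=3)
for i in range(7):
    task.put(Record("orders", 0, i, f'{{"id": {i}}}'.encode()))

print(sorted(task.bucket))
print(task.committed)
```

After seven records with a batch size of three, two objects exist and the seventh record is still buffered, so the committed offset points at the first record that has not yet reached storage.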

Architecture Overview

A typical deployment involves:

- An Apache Kafka cluster.
- A Kafka Connect cluster (which can be a single worker for development or multiple workers for production).
- The GCS Sink Connector plugin installed on the Kafka Connect workers.
- A GCS bucket with appropriate permissions for the connector.

The GCS Sink Connector tasks run within the Kafka Connect workers. These tasks pull data from Kafka, buffer it, and then flush it to GCS. The number of tasks can be configured to parallelize the data transfer.
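
To see why adding tasks only helps up to a point, here is a small Python sketch of spreading topic partitions over tasks. The real assignment is made by Kafka's consumer group protocol, so the round-robin logic and the function name `assign_partitions` are simplifying assumptions. The point it illustrates is that a partition is read by one task at a time, so tasks beyond the partition count have nothing to do.

```python
def assign_partitions(num_partitions: int, tasks_max: int) -> list[list[int]]:
    """Spread partition ids round-robin over at most tasks_max tasks."""
    active = min(num_partitions, tasks_max)
    tasks = [[] for _ in range(active)]
    for p in range(num_partitions):
        tasks[p % active].append(p)
    return tasks


print(assign_partitions(6, 4))  # four tasks share six partitions
print(assign_partitions(3, 8))  # only three of eight tasks get work
```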

Key Configuration Options

Configuring the GCS Sink Connector involves several important parameters:

Connection and Authentication

- Kafka Bootstrap Servers: The address of the Kafka brokers.
- GCS Bucket Name: The target bucket in GCS.
- GCS Credentials: Authentication is typically handled via Google Cloud service accounts. The connector needs a service account key with permissions to write objects to the specified GCS bucket (e.g., `roles/storage.objectCreator`, or `roles/storage.objectAdmin` if overwriting is needed). Using Application Default Credentials (ADC) is a common practice on Google Cloud infrastructure.

Data Formatting and Serialization

The connector needs to know how to serialize the data from Kafka topics (which are often byte arrays) into a file format suitable for GCS. Common formats include:

- Avro: A binary serialization format that relies on schemas. Excellent for schema evolution and integration with Schema Registry.
- Parquet: A columnar storage format optimized for analytical workloads. Also schema-based.
- JSON: Text-based, human-readable format. Can be schema-less or schema-based (JSON Schema).
- CSV: Simple text-based format, good for tabular data.

Configuration options include format.class (specifying the format plugin) and format-specific settings like avro.codec or parquet.codec for compression.
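
As a rough illustration, the following Python snippet builds a connector definition of the kind that is submitted to the Kafka Connect REST API (`POST /connectors`). The class names and the `gcs.*` property names follow one widely used GCS sink connector and should be treated as assumptions: other connectors use different keys, so check the documentation of the plugin you actually deploy. The connector name, topic, bucket, and credentials path are placeholders.

```python
import json

connector = {
    "name": "gcs-sink-orders",  # placeholder connector name
    "config": {
        # Assumed class and property names; they vary between connector vendors.
        "connector.class": "io.confluent.connect.gcs.GcsSinkConnector",
        "tasks.max": "2",
        "topics": "orders",
        "gcs.bucket.name": "my-kafka-archive",              # placeholder bucket
        "gcs.credentials.path": "/etc/secrets/gcs-key.json",  # placeholder path
        "format.class": "io.confluent.connect.gcs.format.avro.AvroFormat",
        "avro.codec": "snappy",
        "flush.size": "1000",
    },
}

# Kafka Connect expects a JSON body with "name" and "config" keys.
payload = json.dumps(connector, indent=2)
print(payload)
```

Note that the Kafka bootstrap servers are normally set in the Connect worker configuration rather than in the connector definition.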

Data Partitioning in GCS

How data is organized into objects and directories within the GCS bucket is crucial for efficient querying and cost management. Connectors typically support various partitioners:

- Time-based partitioning: Organizes data by time (e.g., `dt=YYYY-MM-DD/hr=HH/`). This is very common for data lakes and facilitates time-based queries. Configuration often involves `partitioner.class` set to a time-based partitioner, `path.format` to define the directory structure, and `locale`, `timezone`, and `timestamp.extractor` (e.g., `Record` or `RecordField` for event time).
- Field-based partitioning: Partitions data based on the value of a field within the Kafka record.
- Default partitioning: Often based on Kafka topic and partition.

Proper partitioning helps query engines like BigQuery or Spark to prune data and scan only relevant objects, significantly improving performance and reducing costs.
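
A minimal Python sketch of time-based partitioning follows. It maps a record timestamp (epoch milliseconds, in UTC) to a Hive-style object prefix. The `topics/` root and the function name `object_prefix` are assumptions for illustration; real connectors build the path from their own settings.

```python
from datetime import datetime, timezone


def object_prefix(topic: str, timestamp_ms: int) -> str:
    """Build a dt=/hr= prefix from a record timestamp in epoch milliseconds."""
    ts = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    return f"topics/{topic}/dt={ts:%Y-%m-%d}/hr={ts:%H}/"


print(object_prefix("orders", 1_700_000_000_000))
# topics/orders/dt=2023-11-14/hr=22/
```

Because the prefix depends only on the record's own timestamp, reprocessing the same record always yields the same location, which is what makes this kind of partitioner deterministic.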

Flush Settings

These settings control when the connector writes buffered records from Kafka to GCS objects:

- `flush.size` (or `file.max.records`): The number of records to buffer before writing a new object to GCS.
- `rotate.interval.ms` (or `file.flush.interval.ms`): The maximum time to wait before writing a new object, even if `flush.size` isn't reached.
- Some connectors might also have `rotate.schedule.interval.ms` for scheduled rotations based on wall-clock time, useful for closing files on a predictable schedule. Because this depends on processing time rather than record content, it may weaken exactly-once guarantees in some connectors.

Balancing these settings is important. Too frequent flushes create many small files (the "small file problem"), which can be inefficient for GCS and downstream query engines. Too infrequent flushes increase latency and memory usage on Connect workers.
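
The interaction between a record-count limit and a time limit can be sketched in Python. This is a simplified model, assuming rotation is driven by record timestamps and that a file is closed when the next record arrives after the interval has elapsed; actual connectors differ in the details. The function name `rotate_points` is hypothetical.

```python
def rotate_points(timestamps_ms, flush_size, rotate_interval_ms):
    """Return the record indexes (exclusive end) at which a file is closed."""
    cuts = []
    count = 0
    file_start = None  # timestamp of the first record in the open file
    for i, ts in enumerate(timestamps_ms):
        if file_start is not None and ts - file_start >= rotate_interval_ms:
            # Interval elapsed: close the open file before adding this record.
            cuts.append(i)
            count = 0
            file_start = None
        if file_start is None:
            file_start = ts
        count += 1
        if count >= flush_size:
            # Record-count limit reached: close the file including this record.
            cuts.append(i + 1)
            count = 0
            file_start = None
    return cuts


print(rotate_points([0, 100, 5000, 5100, 5200, 5300], flush_size=3, rotate_interval_ms=1000))
# [2, 5]
```

In this run the first file closes early with two records because of the time limit, the second closes at three records because of the count limit, and the last record stays buffered.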

Schema Management

When using schema-based formats like Avro or Parquet, integrating with a Schema Registry is highly recommended. The Schema Registry stores schemas for Kafka topics and handles schema evolution.

- The GCS Sink Connector can retrieve schemas from the Schema Registry to interpret Kafka records and write them in the correct format to GCS.
- Configurations like `value.converter.schema.registry.url` point the connector to the Schema Registry.
- `schema.compatibility` settings define how the connector handles schema changes (e.g., `NONE`, `BACKWARD`, `FORWARD`, `FULL`). Incompatible schema changes might lead to new files being created or errors if not handled properly. Some connectors might create new GCS objects when the schema evolves for formats like Avro to ensure schema consistency within a file.
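
The idea of starting a new object whenever the schema changes can be illustrated with a short Python sketch. It assumes each record carries a schema version number and simply splits the stream into consecutive runs that share a version; it is not how any particular connector is implemented, and `split_by_schema` is a hypothetical name.

```python
from itertools import groupby


def split_by_schema(records):
    """Split (schema_version, payload) pairs into runs with one version each."""
    return [
        (version, [payload for _, payload in run])
        for version, run in groupby(records, key=lambda r: r[0])
    ]


stream = [(1, "a"), (1, "b"), (2, "c"), (2, "d"), (1, "e")]
print(split_by_schema(stream))
# [(1, ['a', 'b']), (2, ['c', 'd']), (1, ['e'])]
```

Each run would become its own file, so every file contains records of exactly one schema version. A stream that flips back and forth between versions would therefore produce many small files.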

Advanced Use Cases

GCS as a Data Lake Staging Area

Kafka can stream raw data from various sources (databases via CDC, logs, IoT devices) into GCS via the sink connector. This data in GCS then serves as the landing/staging zone for a data lake. From here, data can be processed by tools like:

- BigQuery: Load data from GCS into BigQuery tables or query it directly using BigQuery external tables, especially if data is partitioned in a Hive-compatible manner.
- Dataproc/Spark: Run Spark jobs on Dataproc clusters to transform, enrich, and analyze data stored in GCS. Spark natively supports reading various file formats from GCS.
- Dataflow: Build serverless data processing pipelines with Dataflow that can read from GCS.

Change Data Capture (CDC) to GCS

Using tools like Debezium, changes from databases (inserts, updates, deletes) can be streamed into Kafka topics. The GCS Sink Connector can then write these CDC events to GCS.

- Handling the CDC `op` (operation type), `before`, and `after` fields correctly in GCS is important. Often, CDC events are flattened or transformed by Single Message Transforms (SMTs) in Kafka Connect before being written.
- Representing updates and deletes in GCS for a data lake usually involves strategies like periodic compaction or using table formats (e.g., Apache Iceberg, Hudi, Delta Lake) on top of GCS that can handle row-level updates/deletes. The GCS sink itself might just append these change events.
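
What flattening a change event means can be shown with a small Python sketch. It assumes a Debezium-style envelope with `op`, `before`, `after`, and `ts_ms` fields, and it is only loosely modeled on what a flattening SMT does. The output field names `__op`, `__deleted`, and `__ts_ms` are chosen for illustration.

```python
from typing import Optional


def flatten_change_event(event: dict) -> Optional[dict]:
    """Turn a change envelope into a single flat row, or None if unrecognized."""
    op = event.get("op")
    if op in ("c", "u", "r"):      # create, update, snapshot read
        row = dict(event["after"])
        row["__deleted"] = False
    elif op == "d":                # delete: only the old row image exists
        row = dict(event["before"])
        row["__deleted"] = True
    else:
        return None
    row["__op"] = op
    row["__ts_ms"] = event.get("ts_ms")
    return row


update = {"op": "u", "before": {"id": 7, "qty": 1}, "after": {"id": 7, "qty": 3}, "ts_ms": 1000}
delete = {"op": "d", "before": {"id": 7, "qty": 3}, "after": None, "ts_ms": 2000}
print(flatten_change_event(update))
print(flatten_change_event(delete))
```

Keeping the delete as a row with a marker, rather than dropping it, lets a later compaction job or table format work out which rows are no longer current.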

Tiered Storage and Archival

While Kafka itself has tiered storage capabilities that can offload older segments to GCS, the GCS Sink Connector focuses on exporting data. For data exported to GCS, lifecycle management policies are crucial for long-term archival. Data can be moved from Standard to Archive storage classes for cost savings, and eventually deleted based on retention policies.
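
A lifecycle policy of this kind can be written as a small JSON document. The Python sketch below builds one with the standard library. The rule layout follows the GCS lifecycle configuration format as I understand it, while the age thresholds (30, 365, and 2555 days) are arbitrary values picked for illustration, not recommendations.

```python
import json

lifecycle = {
    "rule": [
        {   # after 30 days, move Standard objects to Nearline
            "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
            "condition": {"age": 30, "matchesStorageClass": ["STANDARD"]},
        },
        {   # after a year, move Nearline objects to Archive
            "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
            "condition": {"age": 365, "matchesStorageClass": ["NEARLINE"]},
        },
        {   # after roughly seven years, delete
            "action": {"type": "Delete"},
            "condition": {"age": 2555},
        },
    ]
}

lifecycle_json = json.dumps(lifecycle, indent=2)
print(lifecycle_json)
```

The resulting document would then be applied to the bucket with your usual tooling (console, CLI, or infrastructure-as-code).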

Best Practices

Adhering to best practices ensures a robust and efficient Kafka-to-GCS pipeline:

Performance Optimization

- Tune `tasks.max`: Adjust the number of connector tasks based on the number of Kafka topic partitions and available resources in the Connect cluster.
- Batching and Compression: Use appropriate `flush.size` and `rotate.interval.ms` settings to create reasonably sized objects in GCS (ideally tens to hundreds of MBs). Enable compression (e.g., Snappy, Gzip, Zstd for Parquet/Avro) to reduce storage costs and improve I/O.
- Converter Choice: Native converters (e.g., optimized Avro/Parquet converters) are generally more performant than more generic ones if data is already in a suitable format.
- Avoid Small Files: Consolidate small files in GCS if necessary using other tools or by adjusting flush settings carefully.
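
A back-of-the-envelope calculation helps when choosing a record-count threshold for a target object size. The Python sketch below is only an estimate under stated assumptions: it treats the average record size as known and uses a hypothetical `compression_ratio` parameter, meaning uncompressed size divided by compressed size.

```python
def estimate_flush_size(target_object_mb: float,
                        avg_record_bytes: float,
                        compression_ratio: float = 1.0) -> int:
    """Estimate how many records yield an object of roughly the target size."""
    target_bytes = target_object_mb * 1024 * 1024
    return max(1, int(target_bytes * compression_ratio / avg_record_bytes))


# 128 MB objects from 1 KiB records, without and with 4x compression.
print(estimate_flush_size(128, 1024))       # 131072
print(estimate_flush_size(128, 1024, 4.0))  # 524288
```

Real record sizes and compression ratios vary over time, so it is worth comparing the estimate with the object sizes that actually land in the bucket and adjusting.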

Data Reliability and Consistency

- Exactly-Once Semantics (EOS): Achieving EOS ensures that each Kafka message is written to GCS exactly once, even in the face of failures. Some GCS sink connectors support EOS, typically requiring:
  - A deterministic partitioner (e.g., time-based partitioner where the timestamp extractor is from the Kafka record itself).
  - Specific connector configurations ensuring idempotent writes or careful offset management.
  - Kafka Connect worker configurations that support EOS.
- Error Handling and Dead Letter Queues (DLQ): Configure error tolerance (`errors.tolerance`) and DLQ settings (`errors.deadletterqueue.topic.name`). If a record cannot be processed and written to GCS (e.g., due to deserialization issues or data format violations), it can be routed to a DLQ topic in Kafka for later inspection, preventing the connector task from failing. Retries (`gcs.part.retries`, `retry.backoff.ms`) are also important for transient GCS issues.
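
The following Python sketch illustrates the dead letter queue idea. The `error_handling` dictionary uses Kafka Connect's framework-level `errors.*` properties with a placeholder topic name. The `route` function is a hypothetical stand-in for what the framework does internally: records that fail to parse are set aside with some error context instead of stopping the whole batch.

```python
import json

# Error-handling properties to merge into a sink connector's configuration.
error_handling = {
    "errors.tolerance": "all",
    "errors.deadletterqueue.topic.name": "dlq-gcs-sink",  # placeholder topic
    "errors.deadletterqueue.context.headers.enable": "true",
    "errors.log.enable": "true",
}


def route(raw_values):
    """Separate parseable JSON records from ones destined for the DLQ."""
    good, dead_letters = [], []
    for raw in raw_values:
        try:
            good.append(json.loads(raw))
        except (json.JSONDecodeError, UnicodeDecodeError) as exc:
            dead_letters.append({"value": raw, "error": type(exc).__name__})
    return good, dead_letters


good, dead = route([b'{"id": 1}', b"not json", b'{"id": 2}'])
print(good)
print(dead)
```

With `errors.tolerance` left at its default of `none`, the first bad record would fail the task instead.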

Cost Management

- GCS Storage Classes: Use appropriate GCS storage classes based on access patterns. Data initially landed might go to Standard, then transition to Nearline/Coldline/Archive using GCS lifecycle policies.
- Object Size: Larger objects are generally more cost-effective for GCS operations and query performance than many small objects.
- Compression: Reduces storage footprint and egress costs.
- Data Retention: Implement GCS lifecycle rules to delete or archive old data that is no longer needed in hotter storage tiers.

Security

- IAM Permissions: Follow the principle of least privilege for the service account used by the connector. Grant only the necessary GCS permissions on the specific bucket (e.g., `storage.objects.create`, plus `storage.objects.delete` if overwriting is needed).
- Encryption:
  - In Transit: Ensure Kafka Connect uses TLS to communicate with Kafka brokers and GCS (HTTPS is default for GCS APIs).
  - At Rest: GCS encrypts all data at rest by default. For more control, Customer-Managed Encryption Keys (CMEK) can be used with GCS. Configure the connector or bucket appropriately if CMEK is required.
- Secrets Management: Store sensitive configurations like service account keys securely, potentially using Kafka Connect's support for externalized secrets management.

Monitoring and Logging

- Kafka Connect Metrics: Monitor Kafka Connect JMX metrics to track connector health, throughput, errors, and lag. Tools can scrape these metrics (e.g., Prometheus). Some managed Kafka services expose these via their cloud monitoring solutions.
- GCS Sink Specific Metrics: Look for metrics related to GCS write operations, such as number of objects written, bytes written, GCS API errors, and GCS write latencies if exposed by the connector.
- Logging: Configure appropriate logging levels for the connector to capture diagnostic information.
- Google Cloud Monitoring: Utilize Google Cloud Monitoring for GCS bucket metrics (e.g., storage size, object counts, request rates).

Common Issues and Troubleshooting

- Permissions Errors: Connector tasks failing due to insufficient GCS IAM permissions for the service account.
- Authentication Issues: Incorrect or expired service account keys.
- Data Format/Schema Mismatches: Errors during deserialization or when writing to GCS due to unexpected data formats or schema evolution conflicts. Using a Schema Registry and compatible evolution strategies helps.
- Small File Problem: Incorrect flush settings leading to a large number of small files in GCS, impacting performance and cost.
- Task Failures and Restarts: Investigate Connect worker logs and task statuses. Often related to network issues, GCS API limits, or unhandled record processing errors.
- GCS API Rate Limiting: For very high-throughput scenarios, GCS API request limits might be hit. This usually manifests as increased latency or errors. Retries with backoff in the connector can help, but adjusting batch sizes or the number of tasks might be needed.
- Connectivity Issues: Ensure Kafka Connect workers can reach both the Kafka cluster and GCS endpoints. Check firewall rules and network configurations.
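
Retries with backoff, mentioned above for rate limiting and transient failures, follow a common pattern that can be sketched in Python. This is a generic illustration, not the retry logic of any specific connector: `TransientError`, `with_backoff`, and the default delays are all assumptions. The sketch doubles the delay ceiling on each attempt and sleeps for a random time below it (often called full jitter).

```python
import random
import time


class TransientError(Exception):
    """Stands in for a retryable failure such as a rate-limit response."""


def with_backoff(operation, max_attempts=5, base_delay_s=0.5,
                 max_delay_s=30.0, sleep=time.sleep):
    """Call operation, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            ceiling = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))


calls = {"n": 0}


def flaky_upload():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("rate limited")
    return "ok"


# Pass a no-op sleep so the example finishes instantly.
result = with_backoff(flaky_upload, sleep=lambda s: None)
print(result, calls["n"])
```

Here the upload fails twice and succeeds on the third attempt. If retries are exhausted regularly, that usually points to a capacity problem that backoff alone will not solve.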

Conclusion

Integrating Apache Kafka with Google Cloud Storage using the GCS Sink Connector provides a powerful way to offload event streams for durable storage, batch processing, and archival. By carefully configuring the connector, following best practices for performance, reliability, cost, and security, and understanding how to manage data in GCS, organizations can build scalable and efficient data pipelines that leverage the strengths of both Kafka and GCS. This enables a wide range of analytics and data warehousing use cases on Google Cloud.

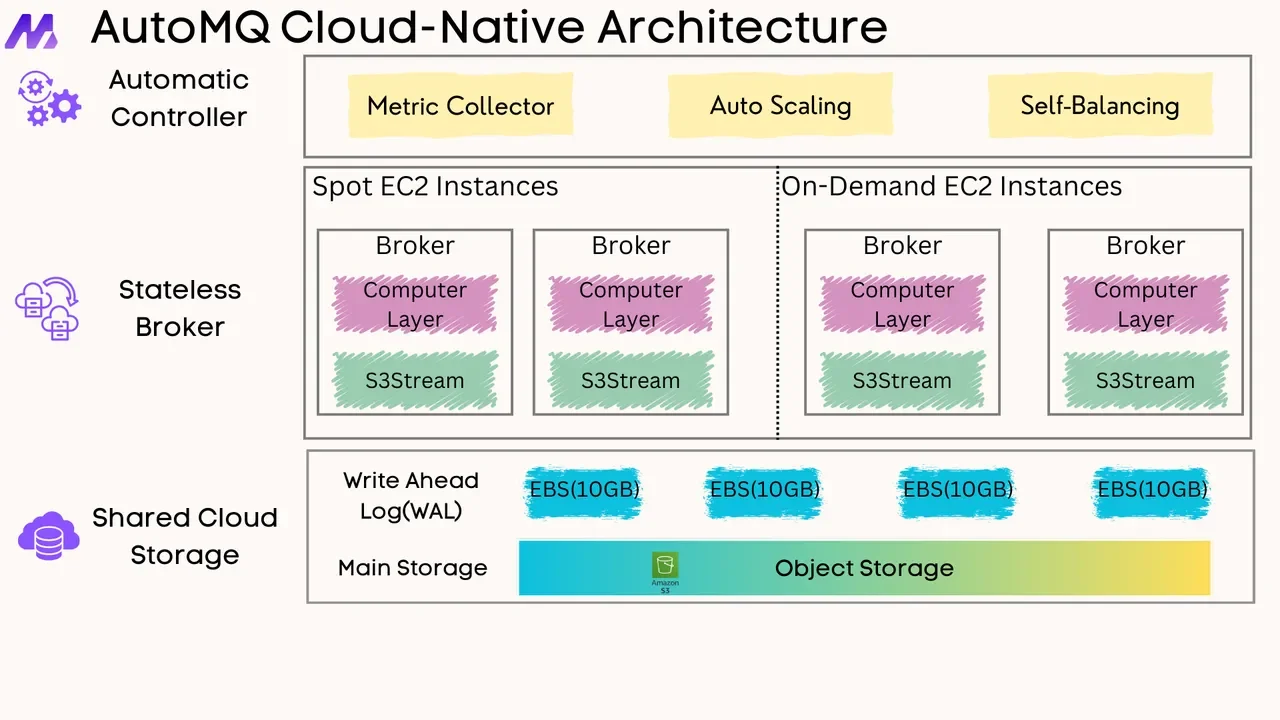

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging