Overview

Apache Kafka has become the backbone for real-time event streaming, enabling businesses to process vast amounts of data on the fly. MinIO, on the other hand, offers a high-performance, S3-compatible object storage solution that excels in storing large volumes of unstructured data. Integrating these two powerful technologies allows organizations to build robust, scalable, and cost-effective data pipelines. This blog post explores how Kafka can be effectively integrated with MinIO, covering the mechanisms, best practices, and common challenges.

Understanding the Core Components

Before diving into the integration, let's briefly touch upon Kafka and MinIO.

Apache Kafka

Apache Kafka is a distributed event streaming platform capable of handling trillions of events a day. Its core components include:

- Brokers: Servers that form a Kafka cluster, storing data.
- Topics: Categories or feeds to which records (messages) are published.
- Partitions: Topics are divided into partitions, allowing for parallelism and scalability.
- Producers: Client applications that publish records to Kafka topics.
- Consumers: Client applications that subscribe to topics and process the published records.
- Kafka Connect: A framework for reliably streaming data between Kafka and other systems, such as databases, search indexes, and object storage like MinIO.

![Apache Kafka Architecture [14]](/blog/apache-kafka-minio-integration-streaming-object-storage/1.png)

MinIO

MinIO is an open-source, high-performance object storage server compatible with the Amazon S3 API. It's designed for private cloud infrastructure and is well-suited for storing large quantities of data, including backups, archives, and data lakes. Key features include scalability, data redundancy through erasure coding, and robust security mechanisms.

![MinIO Architecture [15]](/blog/apache-kafka-minio-integration-streaming-object-storage/2.png)

Why Integrate Kafka with MinIO?

Integrating Kafka with MinIO addresses several important use cases:

- Long-Term Data Archival and Retention: Kafka brokers typically retain data for a limited period due to storage costs and performance considerations. MinIO provides a cost-effective solution for long-term archival of Kafka messages, ensuring data durability and compliance with retention policies.
- Building Data Lakes: Data streamed through Kafka can be landed in MinIO, creating a raw data lake. This data can then be processed by various analytics engines (like Apache Spark) for batch processing, machine learning model training, and ad-hoc querying.
- Cost-Effective Tiered Storage: MinIO can serve as a cheaper storage tier for older Kafka data, freeing up more expensive storage on Kafka brokers while keeping the data accessible.
- Backup and Disaster Recovery: Offloading Kafka data to MinIO provides a reliable backup mechanism.
- Decoupling Stream Processing from Batch Analytics: Kafka handles real-time streams, while MinIO stores the data for subsequent batch analysis, allowing each system to perform optimally.

The Primary Integration Mechanism: Kafka Connect and S3 Sink Connectors

The most common and recommended way to integrate Kafka with MinIO is by using Kafka Connect along with an S3-compatible sink connector.

Kafka Connect Framework

Kafka Connect is a JVM-based service that runs as a separate cluster from Kafka brokers. It operates with:

- Connectors: Plugins that manage data transfer. Source connectors ingest data from external systems into Kafka, while sink connectors export data from Kafka to external systems.
- Tasks: Connectors are broken down into one or more tasks that run in parallel to move the data.
- Converters: Handle the serialization and deserialization of data between Kafka Connect's internal format and the format required by the source/sink system.
- Transforms: Allow for simple message modifications en route.

S3 Sink Connectors

Several S3 sink connectors are available that can write data from Kafka topics to S3-compatible object storage like MinIO. These connectors typically offer a rich set of features:

- MinIO Specific Configuration:
  - Endpoint URL: You'll need to configure the connector with MinIO's service endpoint (e.g., using parameters like `store.url` or a specific S3 endpoint override property).
  - Path-Style Access: MinIO often requires path-style S3 access, which usually needs to be enabled in the connector's configuration (e.g., `s3.path.style.access.enabled=true`).
  - Credentials: Authentication is typically handled by providing MinIO access keys and secret keys through the connector's properties or standard AWS SDK credential provider chains.

- Data Formats: Connectors support writing data in various formats, including:
  - Apache Avro: A compact binary format that supports schema evolution. Often used with a Schema Registry.
  - Apache Parquet: A columnar storage format optimized for analytics. Also supports schema evolution and is often used with a Schema Registry.
  - JSON: A human-readable text format. Can be schema-less or used with JSON Schema.
  - CSV (Comma Separated Values): Simple text-based format.
  - Byte Array: Writes raw bytes without conversion, useful for pre-serialized data.

- Compression: To save storage space and improve I/O, connectors support compression codecs like Gzip, Snappy, Zstandard, etc., depending on the chosen data format (e.g., `parquet.codec`, `avro.codec`).
- Partitioning in MinIO: How data from Kafka topics and partitions is organized into objects and prefixes (directories) in MinIO is crucial. S3 sink connectors provide partitioners that help organize data in a way that's efficient for querying with tools that understand Hive-style partitioning:
  - Default Partitioner: Typically mirrors Kafka's partitioning (e.g., `topics/<TOPIC_NAME>/partition=<KAFKA_PARTITION_NUMBER>/...`).
  - Time-Based Partitioner: Organizes data by time (e.g., year, month, day, hour) based on message timestamps or wall-clock time. This is very common for data lake scenarios (e.g., `path.format='year'=YYYY/'month'=MM/'day'=dd/'hour'=HH`). Parameters like `locale` and `timezone` are important here.
  - Field-Based Partitioner: Partitions data based on the value of one or more fields within the Kafka message.

- Flush Behavior: Connectors batch messages before writing them to MinIO. Configuration parameters control this:
  - `flush.size`: Number of records after which to flush data.
  - `rotate.interval.ms` or `rotate.schedule.interval.ms`: Maximum time interval after which to flush data, even if `flush.size` isn't met. Tuning these is key to managing the small file problem.

- Schema Management and Evolution: When using formats like Avro or Parquet, integrating with a Schema Registry service is essential. The S3 sink connector (along with Kafka Connect's converters) will retrieve schemas from the registry to serialize data correctly. Key settings include `key.converter.schema.registry.url` and `value.converter.schema.registry.url`. Proper schema compatibility settings (e.g., `BACKWARD`, `FORWARD`, `FULL`) in the Schema Registry are crucial for handling schema evolution without breaking downstream consumers or the sink connector. Issues can arise if schemas evolve in incompatible ways, such as a `SchemaProjectorException` if a field used by a partitioner is removed.
- Exactly-Once Semantics (EOS): Achieving EOS when writing to S3-compatible storage like MinIO is challenging: individual S3 object writes are atomic, but there is no native multi-object transaction of the kind databases offer. Some S3 sink connectors provide EOS guarantees under specific conditions:
  - Deterministic Partitioning: The connector must be able to determine the exact S3 object path for a given Kafka record, even across retries or task restarts. This often means using deterministic partitioners and avoiding settings like `rotate.schedule.interval.ms`, which can break determinism because it depends on wall-clock time that differs across tasks and restarts.
  - Atomic Uploads: Connectors rely on mechanisms such as S3 multipart uploads, where an object becomes visible only once the upload completes, or on writing to a temporary location and then moving the object to its final destination, so that readers never see a partially written object.
  - Failure Handling: Connectors are designed to handle task failures and restarts idempotently, ensuring records are not duplicated or lost in MinIO.
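The options above can be pulled together into one connector definition. The following is a minimal sketch, assuming the Confluent S3 sink connector (its property names match the ones mentioned in this section); the function name, connector name, topics, bucket, endpoint, and credentials are all illustrative placeholders, and other S3 sink connectors use different keys.

```python
import json


def build_minio_sink_config(name, topics, bucket, endpoint, access_key, secret_key):
    """Return a Kafka Connect connector definition that writes topics to MinIO.

    Property names assume the Confluent S3 sink connector; check the
    documentation of the connector you actually deploy.
    """
    config = {
        "connector.class": "io.confluent.connect.s3.S3SinkConnector",
        "storage.class": "io.confluent.connect.s3.storage.S3Storage",
        "topics": ",".join(topics),
        "tasks.max": "1",
        # MinIO-specific settings: endpoint override, path-style access, credentials
        "store.url": endpoint,
        "s3.bucket.name": bucket,
        "s3.region": "us-east-1",  # placeholder region; some SDKs require one
        "s3.path.style.access.enabled": "true",
        "aws.access.key.id": access_key,
        "aws.secret.access.key": secret_key,
        # Output format and compression
        "format.class": "io.confluent.connect.s3.format.parquet.ParquetFormat",
        "parquet.codec": "snappy",
        # Time-based partitioning driven by the record timestamp
        "partitioner.class": "io.confluent.connect.storage.partitioner.TimeBasedPartitioner",
        "path.format": "'year'=YYYY/'month'=MM/'day'=dd/'hour'=HH",
        "partition.duration.ms": "3600000",
        "locale": "en-US",
        "timezone": "UTC",
        "timestamp.extractor": "Record",
        # Flush behavior: whichever limit is reached first closes the object
        "flush.size": "100000",
        "rotate.interval.ms": "600000",
    }
    return {"name": name, "config": config}


if __name__ == "__main__":
    definition = build_minio_sink_config(
        "minio-sink", ["orders"], "kafka-archive",
        "http://minio.example.internal:9000", "ACCESS_KEY", "SECRET_KEY",
    )
    print(json.dumps(definition, indent=2))
```

The printed JSON is the shape that the Kafka Connect REST API accepts when creating a connector via a POST to `/connectors`. Parquet output also needs a schema-aware converter, which is left out here to keep the sketch short.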

Alternative Integration Approaches

While Kafka Connect is the primary method, other approaches exist:

Kafka Native Tiered Storage (KIP-405)

KIP-405 introduced an official API in Kafka for tiered storage, allowing brokers to offload older log segments to external storage systems like S3-compatible object stores. This is different from Kafka Connect, as it's a broker-level feature.

- How it works: Log segments are copied to the remote tier (e.g., MinIO) and can be read back by consumers as if they were still on local broker disk (with some latency implications).
- MinIO as a Backend: MinIO can serve as the S3-compatible backend for KIP-405 if a suitable remote storage manager plugin is used that supports generic S3 endpoints, path-style access, and credentials. Some open-source plugins are designed for this.
- Comparison to Connect: KIP-405 keeps data in Kafka's log segment format in the remote tier, potentially making it directly queryable by Kafka-native tools. Kafka Connect transforms data into formats like Parquet or Avro, which are more suitable for general data lake analytics tools.
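To make the broker-level nature of tiered storage concrete, here is a small sketch that assembles the broker and topic settings involved. The property keys are standard Kafka tiered storage settings; the plugin class name passed in is a hypothetical placeholder, and the MinIO endpoint, path-style, and credential settings are omitted because they are specific to whichever remote storage manager plugin is used.

```python
def tiered_storage_settings(rsm_class, local_retention_ms, total_retention_ms):
    """Return (broker_settings, topic_settings) for Kafka tiered storage.

    rsm_class is the remote storage manager plugin class; any value passed
    here is an assumption about the plugin you install.
    """
    if local_retention_ms > total_retention_ms:
        raise ValueError("local retention cannot exceed total retention")
    broker = {
        "remote.log.storage.system.enable": "true",
        "remote.log.storage.manager.class.name": rsm_class,
    }
    topic = {
        "remote.storage.enable": "true",
        # How long segments stay on broker disk before only the remote copy remains
        "local.retention.ms": str(local_retention_ms),
        # Total retention across local and remote tiers
        "retention.ms": str(total_retention_ms),
    }
    return broker, topic


def to_properties(settings):
    """Render settings as key=value lines, sorted for stable output."""
    return "\n".join(f"{key}={value}" for key, value in sorted(settings.items()))


if __name__ == "__main__":
    broker, topic = tiered_storage_settings(
        "com.example.tiered.S3RemoteStorageManager",  # hypothetical plugin class
        local_retention_ms=60 * 60 * 1000,            # 1 hour on broker disk
        total_retention_ms=30 * 24 * 60 * 60 * 1000,  # 30 days overall
    )
    print(to_properties(broker))
    print(to_properties(topic))
```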

Custom Applications (Kafka Clients + MinIO SDK)

For highly specific requirements or when the overhead of Kafka Connect is undesirable, you can write custom applications:

- Producer/Consumer Logic: A Kafka consumer application can read messages from Kafka topics.
- MinIO SDK: Within the consumer, use the MinIO SDK (available for Java, Python, Go, etc.) to write the message data to MinIO buckets.
- Pros: Maximum flexibility in data transformation, error handling, and target object naming.
- Cons: Requires more development effort, and you become responsible for reliability, scalability, exactly-once processing (if needed), and operational management, which Kafka Connect largely handles.
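The core loop of such a custom application can be sketched as follows. This is an illustration rather than a production design: the Kafka client and the MinIO SDK are deliberately injected as plain callables (`put_object` and `commit` are hypothetical names), so the batching and offset logic is visible without tying the example to a particular client library.

```python
def object_key(topic, partition, start_offset):
    """Deterministic object name derived from the first offset in the batch."""
    return f"{topic}/partition={partition}/{start_offset:020d}.jsonl"


def archive(records, put_object, commit, batch_size=1000):
    """Batch records per (topic, partition) and upload each full batch.

    records:    iterable of (topic, partition, offset, value_bytes) tuples
    put_object: callable(key, payload_bytes), e.g. a wrapper around an
                object storage SDK call
    commit:     callable(topic, partition, next_offset), invoked only after
                the upload succeeded, which gives at-least-once delivery
    Returns the list of object keys that were uploaded.
    """
    pending = {}
    uploaded = []
    for topic, partition, offset, value in records:
        batch = pending.setdefault((topic, partition), [])
        batch.append((offset, value))
        if len(batch) >= batch_size:
            key = object_key(topic, partition, batch[0][0])
            payload = b"\n".join(v for _, v in batch) + b"\n"
            put_object(key, payload)
            # Commit only this partition's progress; other partitions may
            # still hold buffered records that have not been uploaded yet.
            commit(topic, partition, offset + 1)
            uploaded.append(key)
            batch.clear()
    return uploaded


if __name__ == "__main__":
    store = {}
    demo = [("orders", 0, i, f'{{"id": {i}}}'.encode()) for i in range(4)]
    keys = archive(demo, store.__setitem__, lambda t, p, o: None, batch_size=2)
    print(keys)
```

Partially filled batches stay buffered in this sketch; a real application would also flush on a timer and on shutdown, which is part of the reliability work that Kafka Connect otherwise handles.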

Best Practices for Kafka-MinIO Integration

Connector Configuration & Tuning

- Tune `flush.size`, `rotate.interval.ms`, and `partition.duration.ms` (for TimeBasedPartitioner) carefully. Small values can lead to many small files in MinIO, impacting query performance and MinIO's own efficiency. Large values might increase latency for data availability in MinIO and memory usage in Connect tasks.
- Set `tasks.max` appropriately for parallel processing, usually matching the number of partitions for the topics being sunk or a multiple thereof.
- Configure Dead Letter Queues (DLQs): Use settings like `errors.tolerance`, `errors.log.enable`, and `errors.deadletterqueue.topic.name` to handle problematic messages (e.g., deserialization errors, schema mismatches) without stopping the connector.
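As a rough illustration of the tuning points above, the sketch below derives a `flush.size` from an average record size and a target object size, and adds the parallelism and DLQ settings. The function name and the input numbers are assumptions for illustration; `flush.size` counts records before compression, so the objects actually written will usually be smaller than the target.

```python
def tuning_overrides(avg_record_bytes, target_object_bytes, topic_partitions, dlq_topic):
    """Return connector properties for flush sizing, parallelism, and a DLQ."""
    if avg_record_bytes <= 0:
        raise ValueError("avg_record_bytes must be positive")
    # Records needed to reach the target (uncompressed) object size
    flush_size = max(1, target_object_bytes // avg_record_bytes)
    return {
        "flush.size": str(flush_size),
        # One task per partition as a starting point
        "tasks.max": str(topic_partitions),
        # Route bad records to a dead letter queue instead of failing the task
        "errors.tolerance": "all",
        "errors.log.enable": "true",
        "errors.deadletterqueue.topic.name": dlq_topic,
        "errors.deadletterqueue.context.headers.enable": "true",
    }


if __name__ == "__main__":
    # Example: 1 KiB records, 128 MiB target objects, 12 partitions
    overrides = tuning_overrides(1024, 128 * 1024 * 1024, 12, "dlq.minio-sink")
    for key, value in overrides.items():
        print(f"{key}={value}")
```

With 1 KiB records and a 128 MiB target, the computed `flush.size` is 131072 records. A time-based rotation setting is still needed alongside it so that low-traffic partitions eventually flush.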

MinIO Configuration and Data Organization

- Address the Small File Problem: Besides connector tuning, MinIO itself has optimizations for small objects (e.g., inline data for objects under a certain threshold, typically around 128KB). However, it's generally better to configure the sink connector to produce reasonably sized objects (e.g., 64MB-256MB) for optimal S3 performance.
- Use Hive-style Partitioning: If querying data with engines like Spark or Presto, ensure the S3 sink connector writes data in Hive-compatible partition structures (e.g., `s3://mybucket/mytopic/year=2024/month=05/day=26/...`).
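To show what a Hive-compatible layout looks like in practice, the following sketch derives an object key from a record's topic, partition, starting offset, and timestamp. The `<topic>+<partition>+<start offset>` file name and the `topics` prefix reflect my understanding of how the Confluent S3 sink names objects and should be treated as assumptions; the function itself is purely illustrative.

```python
from datetime import datetime, timezone


def hive_style_key(topic, kafka_partition, start_offset, timestamp_ms,
                   topics_dir="topics", extension="parquet"):
    """Build an object key with year=/month=/day=/hour= prefixes (UTC)."""
    ts = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    encoded_partition = f"year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}"
    # Zero-padded start offset keeps objects lexicographically ordered
    filename = f"{topic}+{kafka_partition}+{start_offset:010d}.{extension}"
    return f"{topics_dir}/{topic}/{encoded_partition}/{filename}"


if __name__ == "__main__":
    when = datetime(2024, 5, 26, 13, 0, tzinfo=timezone.utc)
    print(hive_style_key("orders", 3, 42, int(when.timestamp() * 1000)))
```

Because every component of the key comes from the record itself rather than from the time of writing, query engines can prune partitions by prefix and a retried write lands on the same object name.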

Security

- Secure Kafka and Kafka Connect: Implement authentication (e.g., SASL) and authorization (ACLs) for Kafka. Secure the Kafka Connect REST API and worker configurations.
- TLS for MinIO Communication: Ensure MinIO is configured with TLS. The Kafka Connect workers' JVM must trust MinIO's TLS certificate, especially if it's self-signed or from a private CA. This usually involves importing the certificate into the JVM's default truststore (`cacerts`) or a custom truststore specified via JVM system properties (e.g., `-Djavax.net.ssl.trustStore`). Disabling certificate validation is not recommended for production.
- MinIO Access Control: Create dedicated MinIO users/groups and policies for the S3 sink connector, granting only necessary permissions (e.g., `s3:PutObject`, `s3:GetObject`, `s3:ListBucket` on the target bucket and prefixes).
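A least-privilege policy for the sink can be sketched as below. It uses the standard S3 policy document structure, which MinIO accepts; the bucket and prefix names are placeholders, and the function name is illustrative. Depending on the connector, extra multipart-related actions such as `s3:AbortMultipartUpload` may also be needed, so treat the action list as a starting point.

```python
import json


def sink_policy(bucket, prefix):
    """Return an S3-style policy limited to one bucket and key prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted at bucket level
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object reads and writes are limited to the sink's prefix
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(sink_policy("kafka-archive", "topics"), indent=2))
```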

Monitoring

- Kafka Connect Metrics: Monitor Kafka Connect worker and task MBeans via JMX. Key generic sink task metrics include `sink-record-read-rate`, `put-batch-max-time-ms`, `offset-commit-success-percentage`, and error rates. Specific S3 sink connectors might expose additional MBeans.
- MinIO Metrics: Monitor MinIO using its Prometheus endpoint for metrics like `minio_http_requests_duration_seconds_bucket` (for PUT operations), error rates, disk usage, and network traffic.
- Log Aggregation: Centralize logs from Kafka Connect workers for easier troubleshooting.

Data Lifecycle Management in MinIO

- Utilize MinIO's object lifecycle management policies to automatically transition older data to different storage classes (if applicable within MinIO's setup) or expire/delete data that is no longer needed. Rules can be prefix-based, aligning with the partitioning scheme used by the S3 sink (e.g., expire objects under `topics/my-topic/year=2023/` after a certain period).
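A prefix-based expiration rule of this kind can be expressed as a small document in the S3 lifecycle configuration shape. The sketch below only builds that document; the rule ID, prefix, and retention period are placeholders, and how the configuration is applied (MinIO client, console, or an S3 SDK) depends on your tooling.

```python
import json


def expiration_rule(rule_id, prefix, days):
    """Return one lifecycle rule that expires objects under a prefix."""
    if days < 1:
        raise ValueError("days must be at least 1")
    return {
        "ID": rule_id,
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        "Expiration": {"Days": days},
    }


def lifecycle_configuration(rules):
    """Wrap rules in the top-level lifecycle configuration structure."""
    return {"Rules": list(rules)}


if __name__ == "__main__":
    config = lifecycle_configuration([
        expiration_rule("expire-2023", "topics/my-topic/year=2023/", 365),
    ])
    print(json.dumps(config, indent=2))
```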

Common Challenges and Solutions

- Small Files in MinIO:
  - Challenge: Leads to poor query performance and inefficient storage.
  - Solution: Tune the S3 sink connector's `flush.size` and `rotate.interval.ms`. Consider periodic compaction jobs on MinIO using tools like Apache Spark.

- Configuration Errors:
  - Challenge: Incorrect MinIO endpoint, credentials, path-style access settings, or S3 region (even if a placeholder for MinIO, some SDKs might require it) can cause connection failures.
  - Solution: Double-check all connection parameters. Ensure the Kafka Connect worker can resolve and reach the MinIO endpoint.

- Schema Evolution Issues:
  - Challenge: Changes in Kafka message schemas (especially with Avro/Parquet) can break the S3 sink if not handled gracefully (e.g., `SchemaProjectorException`).
  - Solution: Use a Schema Registry, define appropriate schema compatibility rules (e.g., `BACKWARD` for sinks), and test schema changes thoroughly. Ensure converters and connector configurations for schema handling are correct.

- Converter Installation/Classpath Issues:
  - Challenge: `ClassNotFoundException` for converters (e.g., an Avro converter needed for Parquet output) if not correctly installed in Kafka Connect's plugin path.
  - Solution: Ensure all necessary converter JARs and their dependencies are correctly deployed to each Kafka Connect worker and that the `plugin.path` is configured correctly.

- Performance Bottlenecks:
  - Challenge: Kafka Connect tasks not keeping up with Kafka topic production rates.
  - Solution: Scale out Kafka Connect workers, increase `tasks.max` for the connector, optimize connector configurations (batch sizes, compression), and ensure sufficient network bandwidth between Connect and MinIO. Monitor MinIO performance for any server-side bottlenecks.

- Idempotency and EOS Complications:
  - Challenge: Ensuring exactly-once delivery to S3-compatible storage is complex. Misconfiguration can lead to data loss or duplication.
  - Solution: Use S3 sink connectors that explicitly support EOS. Carefully configure partitioners and rotation policies to maintain determinism. Understand the connector's specific mechanisms for achieving atomicity and handling retries.
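Why determinism matters for the last item can be shown with a toy simulation. The sketch below models the object store as an in-memory dictionary and replays the same batch after a simulated task restart; it is not how any particular connector is implemented, and all names are illustrative.

```python
def write_batch(store, topic, partition, start_offset, records, suffix=""):
    """Write one batch as a single object; the key decides idempotency."""
    key = f"{topic}/partition={partition}/{start_offset:010d}{suffix}"
    store[key] = list(records)  # a whole-object PUT replaces any previous object
    return key


def simulate_retry(deterministic):
    """Write a batch, 'crash' before the offset commit, then retry it.

    Returns the total number of records stored afterwards.
    """
    store = {}
    batch = ["r100", "r101", "r102"]
    for attempt in (1, 2):
        # A non-deterministic name (e.g. based on wall-clock time or attempt)
        # produces a second object holding the same records.
        suffix = "" if deterministic else f"-attempt{attempt}"
        write_batch(store, "orders", 0, 100, batch, suffix)
    return sum(len(records) for records in store.values())


if __name__ == "__main__":
    print("deterministic naming:", simulate_retry(True))
    print("non-deterministic naming:", simulate_retry(False))
```

With deterministic names the retry overwrites the same object and three records remain; with attempt-dependent names the store ends up with six, which is the duplication that wall-clock-based rotation can cause.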

Conclusion

Integrating Apache Kafka with MinIO provides a powerful and flexible solution for managing the lifecycle of real-time data. By leveraging Kafka Connect and S3 sink connectors, organizations can seamlessly offload event streams to MinIO for long-term storage, archival, and advanced analytics. While challenges exist, particularly around performance tuning, schema management, and achieving exactly-once semantics, careful planning, proper configuration, and adherence to best practices can lead to a robust and scalable data infrastructure. This combination empowers businesses to unlock the full value of their data, from real-time insights to historical analysis.

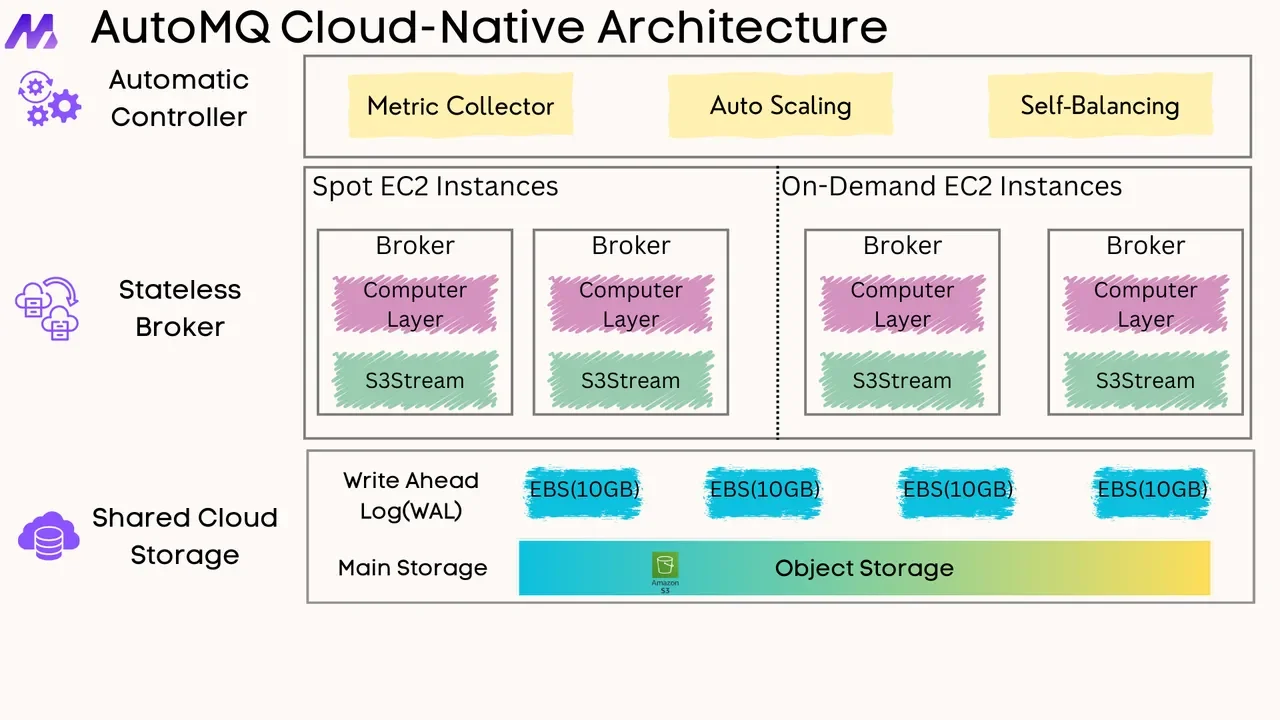

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging