Introduction

In the landscape of data engineering, two fundamental approaches dictate how we handle and process information: batch processing and stream processing. While both aim to transform raw data into valuable insights, they differ significantly in their methodologies, performance characteristics, and ideal use cases. This post provides a detailed comparison to help you understand their core distinctions and choose the right strategy for your data challenges.

A Quick Look at Batch Data Processing

Batch data processing is a traditional method where data is collected, stored, and then processed in large, predetermined groups or "batches" [3]. This processing happens at scheduled intervals—perhaps hourly, daily, or overnight—rather than instantaneously. Key characteristics include its suitability for large, finite (bounded) datasets, high latency as results are available only after a batch completes, and its operational efficiency for tasks that don't require immediate output [4, 5]. Common technologies associated with batch processing include Apache Hadoop MapReduce and Apache Spark (when used in its batch mode) [7, 8].

A Glimpse into Stream Data Processing

Stream data processing, in contrast, handles data in motion. It continuously ingests, processes, and analyzes data as it is generated or received, typically with very low latency—often in milliseconds or seconds [10]. This paradigm is designed for unbounded, continuous data flows originating from sources like application logs, IoT sensors, or financial transactions [11]. The core idea is to derive insights and react to events in near real-time [12]. Prominent technologies in this space include Apache Kafka, Apache Flink, and Apache Spark Streaming [16, 17, 18].

![Batch and Stream Processing Overview [23]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099c26057f1e37192a5871_67480fef30f9df5f84f31d36%252F685e66c7b05359450e1600d8_sGob.png)

Batch vs. Stream Processing: A Detailed Comparative Analysis

Understanding the nuances between batch and stream processing is crucial for designing effective data systems. Let's delve into a detailed comparison across several key dimensions.

Data Nature: Bounded vs. Unbounded

A primary distinction lies in the nature of the data each paradigm handles.

Batch processing is designed for bounded data, meaning datasets that have a defined start and end. Think of it as processing a complete file, a day's worth of transactions, or all historical sales records up to a certain point [3]. The entire dataset is available before processing begins.

Stream processing deals with unbounded data—continuous, never-ending sequences of events or data points [10]. There's no defined end to the dataset; data is constantly arriving. This requires a different mindset, as the system can't wait for all data to arrive before processing.
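To make the bounded/unbounded distinction concrete, here is a minimal Python sketch. The function names and the `itertools.count` source are illustrative assumptions, not part of any particular framework: the batch function can return one final answer because its input ends, while the streaming function has to emit an updated answer after every event.

```python
from itertools import count, islice
from typing import Iterable, Iterator, Sequence


def batch_total(records: Sequence[int]) -> int:
    # Bounded input: the whole dataset exists up front,
    # so a single pass produces one final answer.
    return sum(records)


def running_totals(events: Iterable[int]) -> Iterator[int]:
    # Unbounded input: there is no "end" to wait for,
    # so emit an updated result after every event.
    total = 0
    for value in events:
        total += value
        yield total


day_of_sales = [5, 3, 7]            # a complete, finite batch
final = batch_total(day_of_sales)

endless = count(1)                  # hypothetical never-ending source: 1, 2, 3, ...
first_four = list(islice(running_totals(endless), 4))
```

Calling `sum()` on `endless` would never return, which is the practical reason a streaming system cannot wait for all data before producing results.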

Time Sensitivity: Latency and Throughput

Latency refers to the delay between data input and the availability of processed output, while throughput is the amount of data processed over a given time.

Batch processing inherently has high latency. Since data is processed in large chunks at scheduled intervals, insights are only available after the entire batch job completes. This could be minutes, hours, or even longer [3, 13]. However, batch systems can achieve very high throughput for these large, scheduled tasks, as they are optimized to process massive volumes efficiently when they run.

Stream processing is characterized by very low latency, aiming for near real-time output [13]. The goal is to process events as they arrive, making results available within milliseconds or seconds. While individual event processing is fast, managing extremely high throughput in streaming systems, especially while maintaining state and fault tolerance, presents significant engineering challenges [15].
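A small worked example may help show where the latency gap comes from. The numbers below (an hourly job that takes five minutes, and a 50 ms per-event processing delay) are purely illustrative assumptions, not measurements of any system.

```python
def batch_latencies(arrival_times, job_start, job_duration):
    """Seconds each record waits until the batch job's output is available."""
    finished_at = job_start + job_duration
    return [finished_at - t for t in arrival_times]


def stream_latencies(arrival_times, per_event_delay):
    """Each event is handled on arrival, so its latency is the processing delay."""
    return [per_event_delay for _ in arrival_times]


# Records arrive 0 s, 600 s and 3000 s into the hour (assumed values).
arrivals = [0, 600, 3000]

# Assumed batch schedule: job starts at 3600 s and runs for 300 s.
batch = batch_latencies(arrivals, job_start=3600, job_duration=300)

# Assumed streaming delay: 0.05 s per event.
stream = stream_latencies(arrivals, per_event_delay=0.05)
```

In the batch case the earliest record waits longest, because every record's result appears only when the whole job finishes.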

Processing Approach: Scheduled vs. Continuous

The mode of operation further differentiates these two paradigms.

Batch processing operates on a scheduled or periodic basis. Jobs are triggered at specific times (e.g., end of day) or when a certain volume of data accumulates [1]. This makes resource planning predictable.

Stream processing is continuous and event-driven. Processing logic is constantly active, reacting to data as it flows into the system [12]. This necessitates resources being always available to handle incoming data.
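The two trigger styles can be sketched as follows. This is a simplified illustration with hypothetical class and function names: the batch side only runs its job once a volume threshold is reached, while the streaming side invokes its handler for every event as it arrives.

```python
class VolumeTriggeredBatch:
    """Buffers records and runs the job only when enough have accumulated."""

    def __init__(self, threshold, job):
        self.threshold = threshold
        self.job = job
        self.buffer = []
        self.results = []

    def add(self, record):
        self.buffer.append(record)
        if len(self.buffer) >= self.threshold:
            # Trigger: process the whole accumulated batch at once.
            self.results.append(self.job(self.buffer))
            self.buffer = []


def process_continuously(events, handler):
    # Event-driven: the handler runs once per event, with no waiting.
    return [handler(event) for event in events]


batcher = VolumeTriggeredBatch(threshold=3, job=sum)
for record in range(1, 8):          # records 1..7
    batcher.add(record)

doubled = process_continuously([1, 2, 3], lambda x: x * 2)
```

After seven records the batcher has run two jobs and still holds one record that waits for the next trigger, whereas the continuous handler has already produced an output for every input.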

Statefulness: Managing Context

State refers to information or context that a processing system needs to remember from past data to process current data.

Batch jobs are often designed to be stateless, meaning each batch is processed independently without knowledge of previous batches. If state is required (e.g., for historical aggregations), it's typically loaded from an external data store at the beginning of the job and potentially updated at the end.

Stream processing frequently involves stateful operations. For example, calculating a running sum, detecting patterns over a time window, or enriching an event with historical user data all require maintaining and accessing state. Managing state reliably and efficiently in a distributed streaming environment is a complex challenge.
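The difference in how state is handled can be sketched like this. The dictionary standing in for an external data store and the `RunningSum` class are assumptions made for illustration; real engines keep streaming state in managed, fault-tolerant state backends.

```python
from collections import defaultdict


def run_batch_job(batch, store):
    # Load state from the (stand-in) external store at the start of the job.
    totals = dict(store)
    for user, amount in batch:
        totals[user] = totals.get(user, 0) + amount
    # Write the updated state back at the end of the job.
    store.update(totals)
    return store


class RunningSum:
    """Streaming operator that keeps per-key state between events."""

    def __init__(self):
        self.state = defaultdict(int)

    def on_event(self, user, amount):
        self.state[user] += amount
        return self.state[user]


external_store = {"alice": 10}
run_batch_job([("alice", 5), ("bob", 2)], external_store)

operator = RunningSum()
outputs = [
    operator.on_event("alice", 1),
    operator.on_event("alice", 4),
    operator.on_event("bob", 2),
]
```

The batch job touches state only at its boundaries, while the streaming operator reads and updates state on every event, which is why that state must survive failures.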

Resilience: Fault Tolerance Mechanisms

How systems handle failures is critical.

Batch processing fault tolerance often relies on restarting failed jobs. If a task within a batch fails, the entire job or a significant portion of it might be re-run [7]. Since the input data is bounded and persisted, rerunning is feasible, though it can extend the overall processing time. Checkpointing can also be used to save intermediate states.

Stream processing fault tolerance must be more sophisticated due to its continuous nature and low-latency requirements. Techniques include checkpointing distributed state, message acknowledgments, and upstream data replay capabilities (often from a durable message queue like Apache Kafka) [17, 18]. The goal is to recover quickly from failures without losing data or significantly impacting latency. Achieving exactly-once processing semantics (ensuring each message is processed precisely once, even with failures) is a key, but challenging, aspect [19].
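The combination of checkpointing and upstream replay can be illustrated with a toy example. This is a single-process sketch under strong assumptions (an in-memory list stands in for a durable log such as a Kafka topic, and the checkpoint is a plain tuple); it is not how any specific engine implements recovery.

```python
class CheckpointedCounter:
    """Sums values from a replayable log, snapshotting (offset, state) periodically."""

    def __init__(self, checkpoint_every):
        self.checkpoint_every = checkpoint_every
        self.offset = 0            # next log position to read
        self.total = 0             # operator state
        self.checkpoint = (0, 0)   # last snapshot: (offset, total)

    def process(self, log, fail_at=None):
        while self.offset < len(log):
            if fail_at is not None and self.offset == fail_at:
                raise RuntimeError("simulated crash")
            self.total += log[self.offset]
            self.offset += 1
            if self.offset % self.checkpoint_every == 0:
                # Offset and state are saved together, so they stay consistent.
                self.checkpoint = (self.offset, self.total)

    def recover(self):
        # Roll back to the snapshot; the log is then replayed from that offset.
        self.offset, self.total = self.checkpoint


log = [1, 2, 3, 4, 5]
counter = CheckpointedCounter(checkpoint_every=2)
try:
    counter.process(log, fail_at=3)   # crashes after processing 3 events
except RuntimeError:
    counter.recover()                 # back to offset 2, total 3
counter.process(log)                  # replay from the checkpointed offset
```

Because state and offset are restored as a pair, the event at offset 2 is replayed without being counted twice in the final total, which is the effect that exactly-once state semantics aim for.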

Growth: Scalability Considerations

Systems need to adapt to growing data volumes and processing demands.

Batch processing scalability typically involves adding more compute resources (CPUs, memory, nodes) to the cluster that executes the batch jobs. The scaling might be planned in anticipation of batch window execution and potentially scaled down afterward to save costs [1, 2].

Stream processing scalability requires the ability to dynamically scale out (add more processing units) and scale in (remove units) in response to fluctuating data rates and processing loads, often in an automated fashion. This elasticity is crucial for maintaining performance and managing costs effectively [20].
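As a rough illustration of elastic scaling, the sketch below derives a worker count from the current event rate. The function name, the per-worker capacity, and the min/max bounds are all hypothetical; production autoscalers usually also consider consumer lag, cooldown periods, and state redistribution costs.

```python
import math


def desired_workers(events_per_second, capacity_per_worker, min_workers, max_workers):
    """Workers needed for the current rate, clamped to the allowed range."""
    needed = math.ceil(events_per_second / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


# Assumed capacity: each worker handles 1000 events/s; between 1 and 10 workers allowed.
normal_load = desired_workers(2500, 1000, min_workers=1, max_workers=10)
quiet_period = desired_workers(100, 1000, min_workers=1, max_workers=10)
traffic_spike = desired_workers(50000, 1000, min_workers=1, max_workers=10)
```

The same rule scales out during spikes and scales in during quiet periods, which is what keeps both latency and cost under control.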

Analytical Depth: Data Analysis Capabilities

The type of analysis performed also differs.

Batch processing allows for deep, complex analysis on large, complete datasets. Since the entire dataset is available, intricate algorithms, multi-pass computations, and large-scale aggregations can be performed. It's well-suited for historical analysis, reporting, and training complex machine learning models [5].

Stream processing typically focuses on simpler, faster analytics on individual events or data within defined windows (e.g., the last 5 minutes). While complex event processing (CEP) and real-time machine learning inference are possible, the emphasis is on speed and reacting to current conditions. Analyzing very large historical contexts in a purely streaming fashion can be challenging [11].
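Windowed analytics can be sketched with a tumbling (fixed, non-overlapping) window. The example below is a simplified illustration that assumes events arrive in timestamp order and uses a hypothetical 5-minute window; real engines additionally handle late and out-of-order events, typically with watermarks.

```python
def tumbling_window_sums(events, window_seconds):
    """Yield (window_start, sum) each time a window closes.

    Assumes (timestamp, value) pairs arrive in timestamp order.
    """
    current_start = None
    current_sum = 0
    for timestamp, value in events:
        start = (timestamp // window_seconds) * window_seconds
        if current_start is None:
            current_start = start
        elif start != current_start:
            # An event for a later window arrived: close and emit the current one.
            yield current_start, current_sum
            current_start, current_sum = start, 0
        current_sum += value
    if current_start is not None:
        # Flush the last open window (only reached if the source ends).
        yield current_start, current_sum


events = [(10, 1), (290, 2), (300, 5), (610, 4)]   # (seconds, value)
windows = list(tumbling_window_sums(events, window_seconds=300))
```

Only one window's worth of state is held at any time, which is what lets this style of analysis keep up with an endless stream.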

Implementation: Complexity and Development Effort

The effort required to build and maintain these systems varies.

Batch processing systems can be relatively simple to design and implement for well-defined, repetitive tasks. The logic is often straightforward (extract, transform, load), and the ecosystem of tools is mature [4].

Stream processing systems are generally considered more complex to develop and operate [15]. Dealing with continuous data flow, state management, event time processing, fault tolerance in a distributed environment, and ensuring data ordering and consistency require specialized expertise and careful design.

Economics: Cost Implications

The financial aspect is always a key consideration.

Batch processing can be more cost-effective for certain workloads because compute resources can be provisioned only when needed (during the batch window) and potentially de-provisioned afterward, or utilize lower-cost spot instances [6].

Stream processing may incur higher operational costs due to the need for continuously running infrastructure and resources to handle data as it arrives [13]. However, the business value derived from real-time insights can often justify these costs.

Suitability: Typical Use Case Divergence

Their differing characteristics make them suitable for different types of tasks.

Batch processing is ideal for tasks where latency is acceptable and large volumes of data need to be processed periodically. Examples include: payroll and billing systems, end-of-day financial reporting, large-scale ETL for data warehousing, and training comprehensive machine learning models on historical data [4, 5].

Stream processing excels where immediate insights and actions are critical. Examples include: real-time fraud detection, live monitoring of systems and applications, IoT data processing for immediate response, personalized recommendations, and algorithmic trading [11, 14].

Bridging the Gap: Hybrid Architectures (Lambda and Kappa)

Recognizing that many real-world scenarios benefit from both historical depth and real-time insights, hybrid architectures have emerged.

Lambda Architecture

The Lambda architecture combines batch and stream processing to serve a wide range of query needs [21]. It consists of three layers:

Batch Layer: Pre-computes comprehensive views from all historical data.

Speed (Streaming) Layer: Processes recent data in real-time to provide low-latency updates.

Serving Layer: Merges results from both batch and speed layers to answer queries.

While robust, the Lambda architecture's main drawback is the complexity of maintaining two separate processing paths and codebases.
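The role of the serving layer can be sketched as a merge of two views. The dictionaries and page names below are hypothetical stand-ins: one view is assumed to come from the last completed batch run, the other from the speed layer covering events since that run.

```python
def query(key, batch_view, speed_view):
    # Serving layer: combine the precomputed historical count
    # with the low-latency count of recent events.
    return batch_view.get(key, 0) + speed_view.get(key, 0)


# Assumed page-view counts up to the last batch run.
batch_view = {"page_a": 1000, "page_b": 250}

# Assumed counts for events that arrived after that run.
speed_view = {"page_a": 12, "page_c": 3}

page_a_total = query("page_a", batch_view, speed_view)
page_c_total = query("page_c", batch_view, speed_view)
```

Both views must compute the same metric with separately maintained logic, which is where the cost of two codebases shows up in practice.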

Kappa Architecture

The Kappa architecture offers a simplification by using a single stream processing engine for both real-time processing and historical reprocessing (by replaying data from a durable log like Kafka). This reduces complexity by having a unified codebase and technology stack. However, reprocessing very large volumes of historical data via streaming can be resource-intensive and time-consuming depending on the system's capabilities [22].
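The Kappa idea of reprocessing by replay can be sketched as follows. This is a toy illustration in which an in-memory list stands in for the durable log and the function and event fields are hypothetical: the same processing function serves live traffic and historical reprocessing, and changed logic is applied by replaying the log from the beginning.

```python
def count_by_type(events, include=lambda event: True):
    """Single processing path, used for both live events and replays."""
    counts = {}
    for event in events:
        if include(event):
            counts[event["type"]] = counts.get(event["type"], 0) + 1
    return counts


# Stand-in for a durable, replayable log.
log = [
    {"type": "click"},
    {"type": "view"},
    {"type": "click"},
    {"type": "heartbeat"},
]

# Original logic: count every event.
v1 = count_by_type(log)

# Revised logic: ignore heartbeats, then replay the full log to rebuild the result.
v2 = count_by_type(log, include=lambda event: event["type"] != "heartbeat")
```

No separate batch job is needed to correct the historical result, but the replay has to read the whole log, which is the reprocessing cost noted above.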

Conclusion

The choice between batch and stream data processing is not always an either/or decision. Batch processing remains a cornerstone for handling large-scale, periodic computations efficiently, while stream processing unlocks the power of real-time data for immediate insights and actions. As data velocities and the demand for instant responses grow, streaming architectures are gaining prominence. However, understanding the fundamental differences in their approach to data handling, latency, state management, and fault tolerance—as detailed in this comparison—is key to selecting the most appropriate strategy, or combination of strategies, to meet specific business and technical requirements. Modern data platforms often strive to provide unified capabilities, but the underlying principles of batch and stream processing continue to inform how we build and manage data-intensive applications.

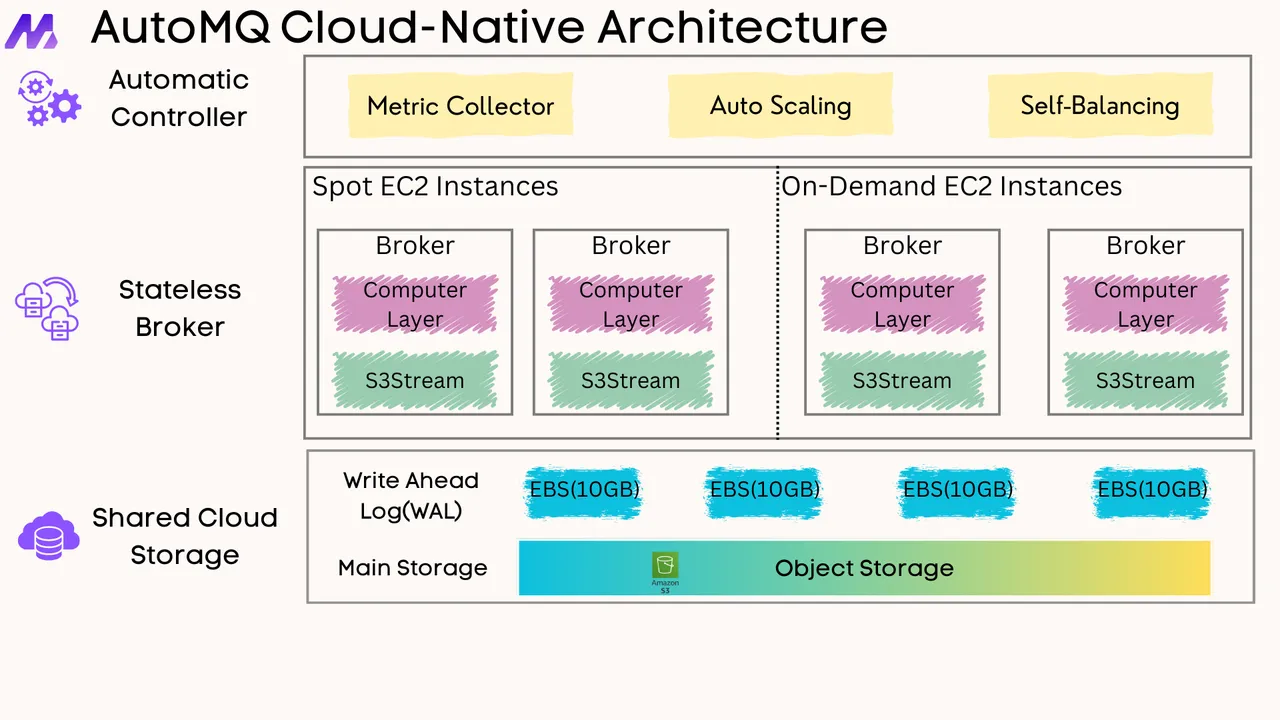

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging
