Overview

How we allocate resources such as CPU, memory, and storage directly affects performance, cost, and reliability. Two primary strategies dominate this landscape: elastic scaling and fixed resource allocation. Understanding the trade-offs of each is crucial for designing efficient and cost-effective systems. This blog covers both approaches, compares them side by side, and discusses best practices and common challenges.

![Comparison of Two Strategies [15]](/blog/elastic-scaling-vs-fixed-resource-allocation-choosing-the-right-strategy/1.png)

What is Fixed Resource Allocation?

Fixed resource allocation, also known as static allocation, involves provisioning a predetermined amount of resources for an application or system based on anticipated peak load. This capacity is then reserved and remains constant, regardless of actual real-time demand.

How it Works:

The process typically starts with capacity planning, where future needs are forecasted based on historical data, business projections, or performance testing. Once the required resources are determined, they are allocated to the system. For instance, a web server might be provisioned with 4 CPU cores and 16 GB of RAM, and these resources remain dedicated to it.
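As a rough illustration of the capacity-planning step, the sketch below turns a forecast peak into a server count. The function name, the requests-per-second figures, and the 20% headroom are hypothetical values chosen for the example, not numbers from a real system.

```python
import math


def plan_fixed_capacity(peak_rps: float, rps_per_server: float, headroom: float = 0.2) -> int:
    """Return the number of servers to provision for the forecast peak plus headroom.

    All inputs are assumptions supplied by the planner:
    peak_rps        forecast peak load in requests per second
    rps_per_server  load a single server sustains (e.g. from performance testing)
    headroom        extra fraction provisioned above the forecast peak
    """
    if rps_per_server <= 0:
        raise ValueError("rps_per_server must be positive")
    return math.ceil(peak_rps * (1 + headroom) / rps_per_server)


# Hypothetical forecast: 1800 req/s at peak, 250 req/s per server, 20% headroom.
print(plan_fixed_capacity(peak_rps=1800, rps_per_server=250))  # 9
```

Once computed, this number stays constant until the next capacity review, which is exactly what distinguishes fixed allocation from the elastic approach.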

Key Characteristics:

- Predictable Costs: With fixed resources, costs are generally predictable, as you pay for a set amount of capacity upfront or on a recurring basis.

- Simplicity in Management (Initially): Once set up, managing fixed resources can seem straightforward as there's no dynamic adjustment to worry about.

- Guaranteed Capacity: Resources are always available up to the provisioned limit, which can be beneficial for applications with consistent, known demands.

What is Elastic Scaling?

Elastic scaling, often referred to as autoscaling or dynamic resource allocation, is the ability of a system to automatically adjust its allocated resources in response to real-time workload changes. Resources can be added (scaled out or up) during demand spikes and removed (scaled in or down) during lulls.

![Elastic Scaling: Response to Real-time Workload Changes [14]](/blog/elastic-scaling-vs-fixed-resource-allocation-choosing-the-right-strategy/2.png)

How it Works:

Elastic scaling relies on continuous monitoring of key performance metrics such as CPU utilization, memory usage, network traffic, or queue lengths. Predefined scaling policies trigger actions when these metrics cross certain thresholds.

- Horizontal Scaling (Scaling Out/In): Involves adding more instances of a resource (e.g., more virtual machines or containers) or removing them. This is common for stateless applications.

- Vertical Scaling (Scaling Up/Down): Involves increasing the capacity of existing instances (e.g., adding more CPU or RAM to a virtual machine) or decreasing it. This can be useful for stateful applications or those with licensing constraints per instance.
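To make the horizontal case concrete, here is a minimal sketch of a proportional scaling rule. It follows the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)), but the function itself, its parameter names, and the default limits are illustrative assumptions rather than any platform's API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional horizontal scaling, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
```

Vertical scaling would instead keep the instance count fixed and change the size of each instance, which this sketch does not model.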

Scaling Triggers:

- Metric-based: Scaling occurs when a specific metric crosses a threshold (e.g., CPU > 75%).

- Schedule-based: Resources are adjusted based on predictable time patterns (e.g., scaling up during business hours).

- Predictive Scaling: Uses machine learning and historical data to forecast future demand and proactively adjust resources.

- Event-driven Scaling: Resources are scaled based on events, such as the number of messages in a queue (e.g., using Kubernetes Event-driven Autoscaling, KEDA).
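The schedule-based and event-driven triggers above can be sketched as two small functions whose results are combined by taking the larger value. The business-hours window, replica counts, and messages-per-replica figure are hypothetical, and this is plain Python rather than KEDA's or any cloud provider's configuration format.

```python
import math
from datetime import time


def schedule_based(now: time, business_replicas: int = 6, off_hours_replicas: int = 2) -> int:
    """Schedule trigger: more replicas during an assumed 09:00-18:00 business window."""
    in_business_hours = time(9, 0) <= now < time(18, 0)
    return business_replicas if in_business_hours else off_hours_replicas


def event_driven(queue_length: int, messages_per_replica: int = 100) -> int:
    """Event trigger: one replica per `messages_per_replica` queued messages."""
    return math.ceil(queue_length / messages_per_replica)


def combined_target(now: time, queue_length: int, max_replicas: int = 20) -> int:
    """Take the larger of the two targets, capped at max_replicas."""
    return min(max_replicas, max(schedule_based(now), event_driven(queue_length)))


print(combined_target(time(22, 0), queue_length=950))  # 10: backlog dominates at night
print(combined_target(time(10, 0), queue_length=50))   # 6: schedule dominates by day
```

Taking the maximum is one simple way to combine triggers; it ensures a backlog is never starved just because the schedule says demand should be low.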

Key Characteristics:

- Cost Efficiency (Pay-as-you-go): Users typically pay only for the resources consumed, which can lead to significant cost savings for workloads with variable demand.

- Performance Optimization: Ensures applications have sufficient resources to handle load, maintaining responsiveness and preventing slowdowns.

- Improved Availability: Can automatically replace unhealthy instances or scale to absorb sudden surges, enhancing system reliability.

Side-by-Side Comparison: Elastic Scaling vs. Fixed Allocation

Let's compare these two approaches across several critical dimensions:

| Feature | Elastic Scaling | Fixed Resource Allocation |
|---|---|---|
| Cost Efficiency | Pay-per-use model; potentially lower costs for variable workloads. Reduces wasted resources from overprovisioning. Emergency scaling costs can be significantly lower. | Predictable, often higher fixed costs, as capacity is provisioned for peak load, leading to potential underutilization during off-peak times. |
| Performance | Adapts to load changes, maintaining performance under varying conditions. Can introduce slight latency during scaling events (e.g., cold starts). Deployment speed can be very fast (seconds to minutes). | Consistent performance up to the provisioned limit. Can suffer performance degradation or outages if demand exceeds fixed capacity. Deployment can be slower (minutes to hours for physical changes). |
| Resource Utilization | Optimized to match demand, minimizing idle resources. Optimal CPU utilization often maintained. | Often leads to underutilization during non-peak hours or overutilization (and thus poor performance) if demand exceeds capacity. |
| Management Complexity | Requires initial setup and tuning of scaling policies, monitoring, and potentially complex configurations. Automation reduces ongoing manual intervention. | Simpler initial setup but requires careful upfront capacity planning. Manual intervention for adjustments. |
| Reliability & Availability | Can enhance reliability by automatically replacing failed instances and handling unexpected surges. Better fault isolation in microservice environments. Uptime can be higher. | Reliability depends on the adequacy of the fixed provisioning. Susceptible to overload if demand spikes beyond capacity. |
| Workload Suitability | Ideal for dynamic, unpredictable workloads with fluctuating demand (e.g., e-commerce sites, event-driven applications, batch processing). | Best for stable, predictable workloads with consistent resource needs (e.g., some legacy systems, applications with known peak loads). |
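The cost and utilization rows of the table can be illustrated with a small worked example. The 24-hour demand profile and the price of 10 cents per unit-hour are made up for illustration, and the elastic case is idealized: it assumes the same unit price as fixed capacity and perfectly granular scaling with no lag, which real platforms do not guarantee.

```python
def fixed_cost_cents(hourly_demand, cents_per_unit_hour):
    """Fixed allocation: pay for peak-sized capacity in every hour."""
    return max(hourly_demand) * len(hourly_demand) * cents_per_unit_hour


def elastic_cost_cents(hourly_demand, cents_per_unit_hour):
    """Idealized elastic scaling: pay only for the units used in each hour."""
    return sum(hourly_demand) * cents_per_unit_hour


def fixed_utilization(hourly_demand):
    """Fraction of the peak-sized fixed capacity that is actually used."""
    return sum(hourly_demand) / (max(hourly_demand) * len(hourly_demand))


# Hypothetical day: quiet night, busy daytime, short evening peak, late taper.
demand = [2] * 8 + [6] * 8 + [10] * 4 + [4] * 4
PRICE_CENTS = 10

print(fixed_cost_cents(demand, PRICE_CENTS))    # 2400
print(elastic_cost_cents(demand, PRICE_CENTS))  # 1200
print(fixed_utilization(demand))                # 0.5
```

With a perfectly flat demand profile the two costs coincide, which is why fixed allocation remains reasonable for stable workloads.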

Best Practices

For Elastic Scaling:

- Monitor Extensively: Continuously monitor key performance metrics and scaling events to understand application behavior and refine policies.

- Set Appropriate Min/Max Limits: Define minimum instances to handle baseline load and maximum instances to control costs.

- Use Cooldown Periods: Prevent rapid, successive scaling actions ("flapping") by implementing cooldown periods after a scaling event.

- Implement Health Checks: Ensure that load balancers only distribute traffic to healthy instances and that autoscaling groups can replace unhealthy ones.

- Test Scaling Policies: Regularly test how your application scales under various load conditions to validate policies and identify bottlenecks.

- Optimize Application Initialization: For applications that scale horizontally, ensure new instances can start quickly to minimize delays during scale-out events.

- Leverage Predictive Scaling: If your workload has predictable patterns and your platform supports it, use predictive autoscaling to provision capacity before it's needed.

- Tag Resources: Use tags for cost allocation and management to track spending associated with dynamically scaled resources.
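Two of the practices above, min/max limits and cooldown periods, are easy to show together. The class below is a simplified sketch: the thresholds, the one-replica step size, and the 300-second cooldown are assumed values, and production autoscalers typically offer richer policies than this.

```python
from dataclasses import dataclass


@dataclass
class CooldownScaler:
    """Threshold scaler with min/max limits and a cooldown after each action."""
    min_replicas: int = 2
    max_replicas: int = 10
    scale_out_above: float = 75.0   # CPU %, assumed threshold
    scale_in_below: float = 30.0    # CPU %, assumed threshold
    cooldown_seconds: float = 300.0
    replicas: int = 2
    last_action_at: float = float("-inf")

    def step(self, cpu_percent: float, now: float) -> int:
        """Evaluate one metric sample taken at time `now` (in seconds)."""
        if now - self.last_action_at < self.cooldown_seconds:
            return self.replicas  # still cooling down: ignore the sample
        target = self.replicas
        if cpu_percent > self.scale_out_above:
            target = min(self.max_replicas, self.replicas + 1)
        elif cpu_percent < self.scale_in_below:
            target = max(self.min_replicas, self.replicas - 1)
        if target != self.replicas:
            self.replicas = target
            self.last_action_at = now
        return self.replicas


scaler = CooldownScaler()
print(scaler.step(90, now=0))    # 3: scale out
print(scaler.step(90, now=60))   # 3: inside cooldown, no action
print(scaler.step(90, now=300))  # 4: cooldown elapsed, scale out again
```

The cooldown check runs before any threshold logic, so a noisy metric cannot trigger back-to-back actions, and the min/max clamp bounds both baseline capacity and worst-case cost.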

For Fixed Resource Allocation:

- Accurate Demand Forecasting: Invest in thorough capacity planning and demand forecasting techniques to minimize over- or under-provisioning.

- Regular Capacity Reviews: Periodically review resource utilization and performance metrics to determine if the allocated capacity is still appropriate.

- Maintain a Buffer: Consider provisioning a small buffer above the expected peak load to handle minor, unexpected fluctuations, but be mindful of costs.

- Performance Testing: Regularly test the system at its provisioned capacity to ensure it meets performance targets under peak load.

- Right-Sizing: Continuously analyze if the allocated resources (CPU, memory, storage types) are the right fit for the workload's actual needs, not just its historical allocation.
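A capacity review that combines right-sizing with a buffer might look like the sketch below. It takes observed CPU usage samples, finds a high percentile using the nearest-rank method, and adds a buffer on top. The sample values, the 95th percentile, and the 15% buffer are assumptions for illustration only.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_cores(core_usage_samples, buffer=0.15, pct=95):
    """Recommend whole CPU cores: high-percentile usage plus a buffer, rounded up."""
    return math.ceil(percentile(core_usage_samples, pct) * (1 + buffer))


# Hypothetical samples of cores in use on a server currently allocated 4 cores.
usage = [1.0, 1.2, 1.1, 1.5, 2.0, 1.3, 1.4, 1.6, 1.8, 2.4]
print(recommend_cores(usage))  # 3
```

Here the review suggests 3 cores would cover observed demand with margin, so the 4-core allocation from the earlier web server example could be revisited.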

Common Issues and Challenges

Elastic Scaling Challenges:

- Scaling Delay (Lag): Reactive autoscaling can have a lag between when a metric threshold is breached and when new resources become available and operational. This can be critical for applications needing instant responsiveness.

- Cold Starts: For serverless functions or newly provisioned instances, "cold starts" (the time taken to initialize the runtime and application code) can introduce latency.

- Configuration Complexity: Defining effective autoscaling policies, thresholds, and cooldown periods can be complex and may require iterative tuning.

- Cost Management: While generally cost-effective, poorly configured autoscaling (e.g., overly aggressive scale-out, no maximum limits) can lead to unexpected cost overruns or "bill shock".

- Flapping: If scaling policies are too sensitive or cooldowns too short, the system might repeatedly scale out and in, leading to instability and unnecessary costs.

- Achieving True Elasticity: For stateful applications or those with licensing constraints, achieving seamless elasticity can be more challenging and may require specific architectural considerations.

- Integration with Legacy Systems: Integrating dynamic scaling with older, less flexible systems can be difficult.
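The scaling delay described in the first item of this list can be quantified with a toy simulation. The model below is deliberately simplified and entirely hypothetical: time advances in discrete ticks, the scaler reacts only after observing a breach, new capacity arrives `delay_ticks` later, and scale-in is not modeled.

```python
def simulate_reactive_lag(demand, start_capacity, delay_ticks):
    """Return the unmet demand per tick for a reactive scaler with provisioning lag."""
    if delay_ticks < 1:
        raise ValueError("delay_ticks must be at least 1")
    capacity = start_capacity
    pending = None  # (tick at which it becomes usable, new capacity)
    shortfall = []
    for tick, load in enumerate(demand):
        # Capacity requested earlier becomes usable once its ready tick is reached.
        if pending is not None and pending[0] <= tick:
            capacity = pending[1]
            pending = None
        shortfall.append(max(0, load - capacity))
        # React to an observed breach by requesting capacity that matches the load.
        if load > capacity and pending is None:
            pending = (tick + delay_ticks, load)
    return shortfall


spike = [4, 4, 10, 10, 10, 10]
print(simulate_reactive_lag(spike, start_capacity=4, delay_ticks=2))  # [0, 0, 6, 6, 0, 0]
print(simulate_reactive_lag(spike, start_capacity=4, delay_ticks=1))  # [0, 0, 6, 0, 0, 0]
```

Even with the shortest delay, the tick in which the spike first appears goes unserved, which is the gap that predictive or schedule-based scaling tries to close.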

Fixed Resource Allocation Challenges:

- Over-provisioning: Allocating resources for peak load that rarely occurs leads to wasted capacity and unnecessary costs.

- Under-provisioning: Insufficient resources can lead to poor performance, application failures, and a negative user experience when demand exceeds capacity. This can also cause service level agreement (SLA) violations.

- Inflexibility: Fixed allocations cannot easily adapt to sudden or unexpected changes in demand, making it difficult to respond to business opportunities or unforeseen events.

- Capacity Planning Difficulties: Accurately predicting future demand is challenging. Errors in forecasting can lead directly to over- or under-provisioning.

- Bottlenecks in Manual Provisioning: Manually adjusting fixed resources can be slow and error-prone, especially in fast-moving environments.

Advanced Concepts Influencing Resource Allocation

- Serverless Computing: An evolution of elastic scaling where developers don't manage servers at all. Resources are provisioned and scaled automatically by the cloud provider on a per-request basis, often scaling to zero when not in use.

- Containerization and Orchestration (e.g., Kubernetes): Technologies like Kubernetes provide sophisticated mechanisms for managing and scaling containerized applications, including Horizontal Pod Autoscaler (HPA), Vertical Pod Autoscaler (VPA), and event-driven scalers like KEDA. These tools allow for fine-grained, automated resource adjustments.

- Hybrid Resource Allocation Models: Some systems employ a hybrid approach, combining fixed and elastic strategies. For example, a baseline capacity might be provisioned using reserved instances (fixed, cost-effective for predictable load), with elastic scaling used to handle demand above this baseline. AI and machine learning techniques are also being used to create sophisticated hybrid scheduling and resource allocation models, especially for complex workloads like distributed AI training.

- Infrastructure as Code (IaC): Tools like Terraform allow for defining and managing infrastructure (including scaling policies and resource allocations) in a declarative, version-controlled manner, which aids in consistent and repeatable deployments for both fixed and elastic environments.
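The hybrid model in the list above, a reserved baseline plus elastic capacity for demand beyond it, lends itself to a simple cost search. In this sketch the demand profile and both prices (6 cents per reserved unit-hour, 10 cents per on-demand unit-hour) are invented for illustration; real discounts and commitment terms vary by provider.

```python
def hybrid_cost_cents(hourly_demand, baseline, reserved_cents, on_demand_cents):
    """Cost of a reserved baseline (paid every hour) plus on-demand burst above it."""
    reserved = baseline * len(hourly_demand) * reserved_cents
    burst_units = sum(max(0, d - baseline) for d in hourly_demand)
    return reserved + burst_units * on_demand_cents


def best_baseline(hourly_demand, reserved_cents, on_demand_cents):
    """Brute-force the baseline size (0..peak) with the lowest total cost."""
    candidates = range(0, max(hourly_demand) + 1)
    return min(
        candidates,
        key=lambda b: hybrid_cost_cents(hourly_demand, b, reserved_cents, on_demand_cents),
    )


# Hypothetical 24-hour demand profile, in capacity units.
demand = [2] * 8 + [6] * 8 + [10] * 4 + [4] * 4

baseline = best_baseline(demand, reserved_cents=6, on_demand_cents=10)
print(baseline)                                    # 4
print(hybrid_cost_cents(demand, baseline, 6, 10))  # 976
print(hybrid_cost_cents(demand, 0, 6, 10))         # 1200 (purely on-demand)
print(hybrid_cost_cents(demand, 10, 6, 10))        # 1440 (purely reserved at peak)
```

Under these assumed prices, a reserved unit pays off only if it is used in at least 60% of hours, so the cheapest baseline sits between the two pure strategies.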

Impact on System Architecture and Total Cost of Ownership (TCO)

The choice between elastic and fixed resource allocation significantly impacts system architecture. Elastic systems often favor stateless microservices that can be easily scaled horizontally. Architectures need to be designed for failure and dynamic discovery of services. Fixed allocation might be simpler for monolithic, stateful applications but can lead to scalability bottlenecks.

Total Cost of Ownership (TCO) is also heavily influenced. Elastic scaling aims to reduce TCO by minimizing upfront investment in hardware and aligning operational costs with actual usage (pay-as-you-go). This shifts CapEx to OpEx. Fixed allocation can lead to higher TCO due to overprovisioning if demand is variable, or higher opportunity costs if underprovisioning constrains growth. However, for highly predictable, sustained workloads, long-term fixed reservations can sometimes offer lower TCO than purely on-demand elastic resources.

Conclusion

Both elastic scaling and fixed resource allocation have their place. Elastic scaling offers unparalleled flexibility, cost efficiency for variable workloads, and high availability, but comes with potential complexity in configuration and management. Fixed resource allocation provides predictability and simplicity for stable workloads but risks inefficiencies from over/under-provisioning and inflexibility.

The optimal choice often depends on the specific workload characteristics, performance requirements, budget constraints, and the organization's operational maturity. Increasingly, hybrid approaches that combine the stability of fixed resources for baseline loads with the flexibility of elastic scaling for peaks are emerging as a balanced solution. As cloud technologies evolve, the trend is towards more intelligent, automated, and fine-grained resource management, pushing the boundaries of what "elasticity" truly means.

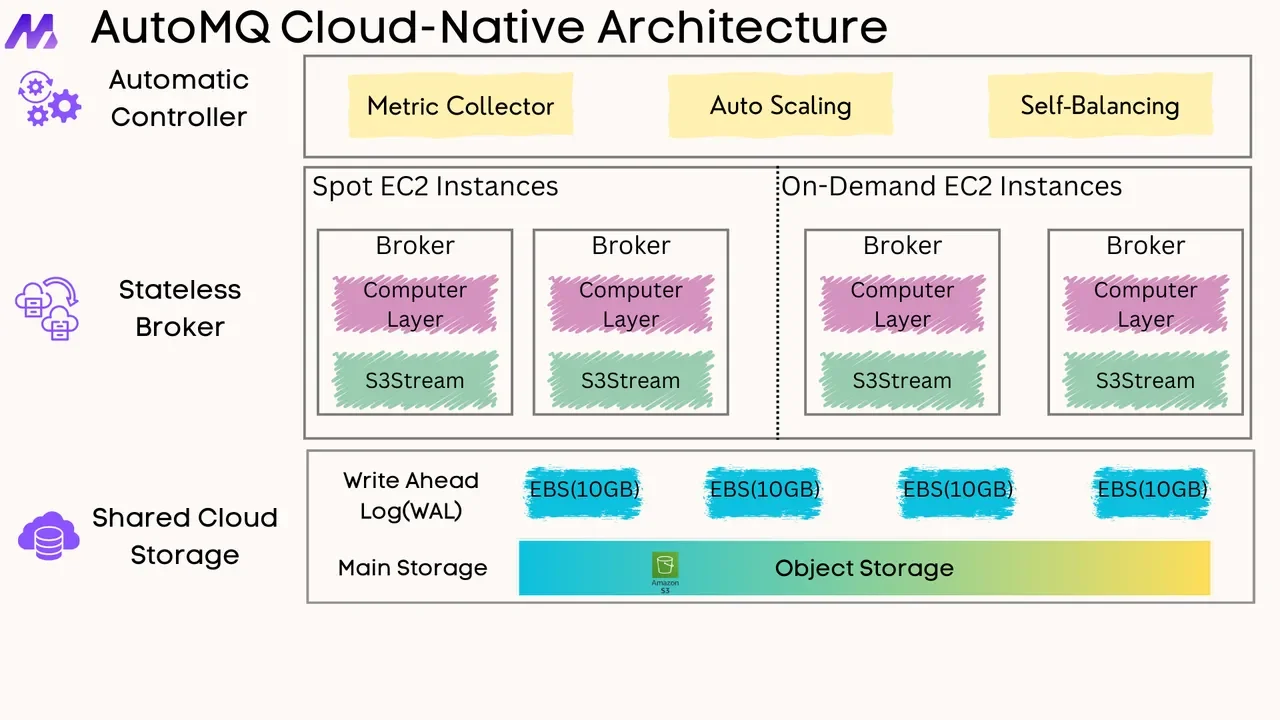

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging