Overview

Horizontal and vertical scalability are fundamental strategies for enhancing system performance, each with distinct mechanisms, use cases, and trade-offs. Horizontal scalability involves adding more machines or nodes to distribute workloads (scaling out), while vertical scalability upgrades existing hardware resources like CPU or RAM (scaling up). The choice between these approaches depends on factors such as workload predictability, cost, fault tolerance, and long-term growth requirements. This blog provides a detailed analysis of both strategies.

Core Concepts

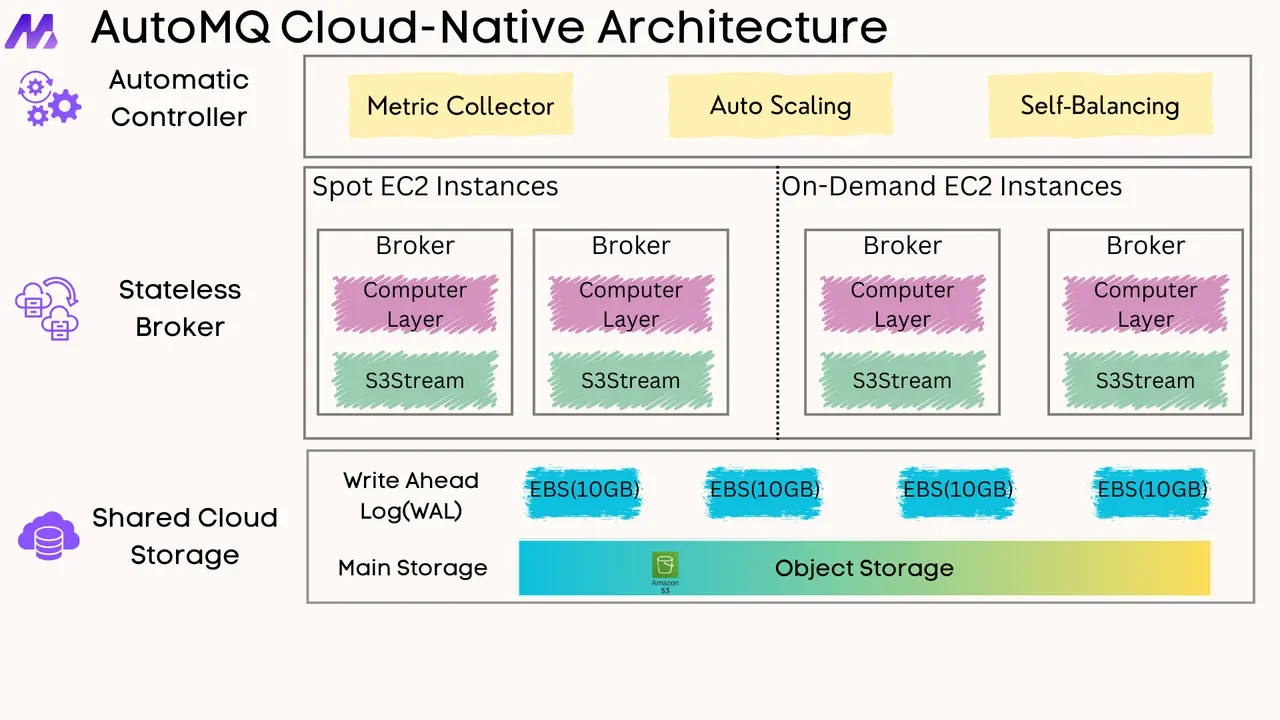

Horizontal scalability expands system capacity by adding nodes to a cluster, enabling distributed processing. For example, AutoMQ, a cloud-native alternative to Kafka, offloads durability to S3 and EBS, which simplifies adding nodes. This approach is ideal for cloud-native applications requiring elastic resource allocation.

Horizontal scaling excels in distributed environments. For instance, Criteo's advertising platform uses horizontal scaling to manage global traffic across thousands of servers, ensuring fault tolerance during regional outages. Microservices architectures benefit from this approach, as seen in Airbnb's transition from vertical scaling on AWS EC2 instances to horizontal scaling for search and booking services.

![Horizontal Scalability[3]](/blog/horizontal-scalability-vs-vertical-scalability/1.png)

Vertical scalability enhances individual machine capabilities, such as upgrading an 8 vCPU server to 32 vCPUs. However, vertical scaling faces physical limits: a server cannot exceed its maximum RAM or CPU capacity, which makes it less suitable for exponentially growing systems.

Vertical scaling suits compute-intensive tasks. Financial institutions often vertically scale transaction databases to handle peak trading volumes without rearchitecting systems.

![Vertical Scalability[15]](/blog/horizontal-scalability-vs-vertical-scalability/2.png)

Comparative Analysis

| Criteria | Horizontal Scaling | Vertical Scaling |
|---|---|---|
| Resource Allocation | Adds nodes; distributes load | Upgrades CPU/RAM on existing hardware |
| Cost Efficiency | Higher initial infrastructure costs | Lower short-term costs; hits hardware ceilings |
| Fault Tolerance | High (no single point of failure) | Low (downtime during upgrades) |
| Complexity | Requires load balancing and data consistency tools | Simple to implement |
| Use Cases | Dynamic workloads (e.g., social media, IoT) | Predictable workloads (e.g., legacy systems) |

Best Practices and Industry Applications

Hybrid Approaches

A hybrid strategy, sometimes referred to as diagonal scaling, combines both approaches so that each component of an application is scaled in the way that suits it best. Companies like Uber and Airbnb use it to optimize specific components; for instance, Uber can ensure the low latency required for real-time location tracking through vertical scaling of those specific services, while using cost-effective horizontal scaling for the computationally intensive but less latency-sensitive trip-matching algorithms. Scaling different parts of the system up (vertically, for power) or out (horizontally, for capacity) as needed helps meet diverse performance demands and is particularly useful for managing costs and resources during rapid growth phases.
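One simple way to reason about combining the two approaches is "scale up until the largest machine is not enough, then scale out". The sketch below illustrates that rule only; the function name `plan_capacity`, the `Plan` type, and the list of machine sizes are hypothetical and do not describe how any particular company plans capacity.

```python
import math
from dataclasses import dataclass


@dataclass
class Plan:
    nodes: int
    vcpus_per_node: int


def plan_capacity(required_vcpus: int, sizes: list[int]) -> Plan:
    """Scale up first; scale out once the largest size is not enough."""
    if required_vcpus <= 0:
        raise ValueError("required_vcpus must be positive")
    if not sizes:
        raise ValueError("sizes must not be empty")
    ordered = sorted(sizes)
    # Vertical step: pick the smallest single machine that covers the demand.
    for size in ordered:
        if size >= required_vcpus:
            return Plan(nodes=1, vcpus_per_node=size)
    # Horizontal step: demand exceeds the largest machine, so add nodes of that size.
    largest = ordered[-1]
    return Plan(nodes=math.ceil(required_vcpus / largest), vcpus_per_node=largest)


# Hypothetical machine sizes (vCPUs), chosen only for illustration.
SIZES = [8, 32, 224]

if __name__ == "__main__":
    print(plan_capacity(20, SIZES))   # fits on one 32 vCPU machine
    print(plan_capacity(500, SIZES))  # needs several of the largest machines
```

A real planner would also weigh memory, price per vCPU, and redundancy requirements; a single large node, for example, is still a single point of failure.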

Automation Tools

Automation tools are essential for managing the complexities of scaling applications, whether vertically, horizontally, or using a hybrid approach, by enabling dynamic resource adjustment based on real-time demand. Examples include Infrastructure as Code (IaC) tools like Terraform and Ansible for provisioning and configuring infrastructure, and container orchestration platforms like Kubernetes, which offer sophisticated autoscaling features for both stateless and stateful applications.
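To make "dynamic resource adjustment based on real-time demand" concrete, the sketch below applies the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), with a tolerance band around the target. It is a simplified illustration, not the Kubernetes implementation; the function name and the default bounds are assumptions made for this example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # illustrative lower bound
    max_replicas: int = 10,   # illustrative upper bound
    tolerance: float = 0.1,   # skip scaling when within 10% of the target
) -> int:
    """Return the replica count suggested by the ratio of observed to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within the tolerance band: keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

The tolerance band and the upper bound matter in practice: without them, small metric fluctuations trigger constant resizing, and a traffic spike can scale the deployment past what the budget or downstream systems can absorb.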

Stateful Services

Stateful applications are harder to scale horizontally because each instance needs to maintain or access shared, consistent session data, so simply duplicating instances is problematic without careful data management. Mechanisms like Apache Kafka's In-Sync Replicas (ISR) help preserve data consistency and fault tolerance during scaling: each partition maintains a set of replicas that are fully caught up with the partition leader, so committed messages are not lost and a consistent view of the data is maintained even as the system expands or contracts.
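The ISR idea can be sketched as a toy model: a message counts as committed only once every in-sync replica has it, so the committed position (the high watermark) is the smallest log end offset across the leader and its in-sync followers. The code below is a simplified illustration and not Kafka's implementation. In particular, Kafka decides ISR membership with a time-based check (`replica.lag.time.max.ms`), while this sketch uses a hypothetical offset-based threshold `max_lag`; the class and broker names are also made up.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    leader_leo: int = 0  # leader's log end offset
    follower_leo: dict[str, int] = field(default_factory=dict)
    max_lag: int = 2     # hypothetical offset-based lag limit (Kafka uses time)

    def in_sync_followers(self) -> list[str]:
        """Followers whose lag behind the leader is within the limit."""
        return [
            name
            for name, leo in self.follower_leo.items()
            if self.leader_leo - leo <= self.max_lag
        ]

    def high_watermark(self) -> int:
        """Offset up to which every in-sync replica has the data."""
        isr_offsets = [self.follower_leo[n] for n in self.in_sync_followers()]
        return min([self.leader_leo, *isr_offsets])


if __name__ == "__main__":
    p = Partition(leader_leo=10, follower_leo={"b1": 10, "b2": 9, "b3": 4})
    print(p.in_sync_followers())  # b3 lags too far and drops out of the ISR
    print(p.high_watermark())     # limited by the slowest in-sync follower
```

Because a lagging replica is dropped from the set rather than waited on, one slow broker does not stall commits, while every replica that remains in the set holds all committed data.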

Common Challenges and Mitigations

Horizontal Scaling

- Network Latency: Distributed systems incur extra latency from communication between nodes. To reduce it, load balancers distribute requests efficiently, while caching systems store frequently accessed data for faster retrieval. Data sharding and edge computing further minimize delays by dividing data and processing it closer to users, respectively.

- Data Consistency: To ensure data consistency in horizontally scaled systems, consensus protocols (e.g., Paxos or Raft) are used to make all nodes agree on the data's state. Distributed transaction protocols, such as two-phase commit or Sagas, manage operations across multiple servers to maintain integrity. Additionally, various replication strategies and consistency models (ranging from strong to eventual consistency) are chosen based on application requirements to synchronize data copies across the distributed environment.
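Data sharding, mentioned above, raises a practical question: which node owns which key, and how much data moves when a node is added? Consistent hashing is one widely used answer, though the text above does not prescribe it. The sketch below is a minimal illustration; the class name `HashRing`, the node names, and the virtual-node count are assumptions made for this example.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Stable hash so that placement is the same across processes."""
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes, vnodes=64):
        self.vnodes = vnodes  # virtual nodes per physical node, for smoother balance
        self._points = []     # sorted list of (hash, node) positions on the ring
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._points, (_hash(f"{node}#{i}"), node))

    def owner(self, key: str) -> str:
        """First ring position at or after the key's hash, wrapping around."""
        idx = bisect.bisect_left(self._points, (_hash(key), ""))
        return self._points[idx % len(self._points)][1]


if __name__ == "__main__":
    ring = HashRing(["node-a", "node-b", "node-c"])
    keys = [f"user-{i}" for i in range(1000)]
    before = {k: ring.owner(k) for k in keys}
    ring.add_node("node-d")  # scale out by one node
    moved = sum(1 for k in keys if ring.owner(k) != before[k])
    print(f"{moved} of {len(keys)} keys moved to the new node")
```

With naive `hash(key) % node_count` sharding, adding a node remaps most keys. On the ring, a key changes owner only if one of the new node's positions lands between the key and its previous owner, so every key that moves goes to the new node and the rest stay put.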

Vertical Scaling

- Hardware Limits: The availability of instances with high vCPU counts, such as Google Cloud's N2D series offering configurations up to 224 virtual CPUs, allows businesses to significantly enhance the processing power of a single server to handle very demanding workloads. This robust capacity for vertical scaling means organizations can postpone reaching the absolute physical limits of a single machine, providing substantial headroom for growth before needing to consider more complex horizontal scaling architectures.

- Downtime: Live migration technologies, exemplified by tools like VMware vMotion, enable the seamless transfer of a running virtual machine from one physical host to another without interrupting its operation or user access. This capability is crucial during vertical scaling events, such as hardware upgrades or maintenance, as it allows the VM to be moved to a more powerful server or a different host with minimal to zero perceived downtime for end-users, thereby ensuring business continuity.

Strategic Considerations

- Start Vertical, Scale Horizontal: Begin with vertical scaling for simplicity, then transition to horizontal as demand grows.

- Cost-Benefit Analysis: Horizontal scaling's long-term elasticity often outweighs its upfront costs for high-growth companies.

- Geographic Distribution: Horizontal scaling supports multi-region deployments, critical for global platforms like Netflix, which uses both strategies to stream content worldwide.

Conclusion

Horizontal and vertical scalability offer complementary benefits, with horizontal excelling in elasticity and fault tolerance, and vertical providing simplicity for static workloads. Modern systems increasingly adopt hybrid models. The optimal strategy depends on workload patterns, growth projections, and infrastructure constraints, necessitating continuous evaluation as systems evolve.

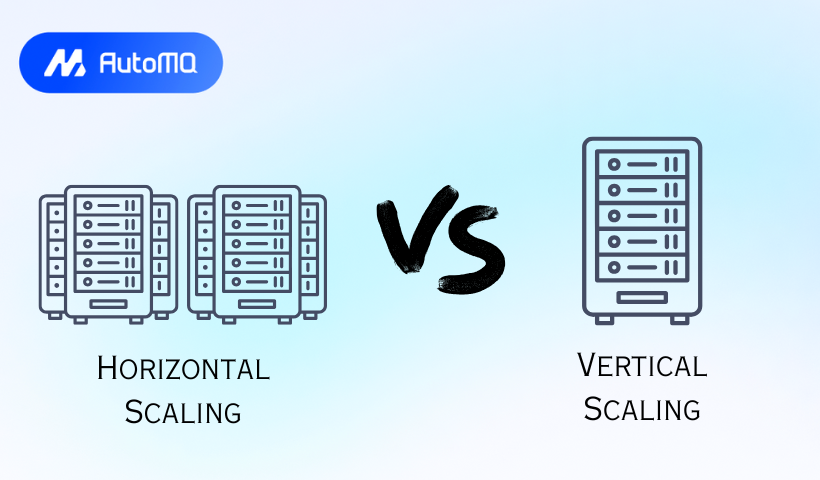
