Overview

Apache Kafka has become a cornerstone technology for building real-time data pipelines and streaming applications. At the heart of any Kafka implementation are the client libraries that allow applications to interact with Kafka clusters. This guide covers Kafka clients, their configuration, and best practices for performance, reliability, and security.

Understanding Kafka Clients

Kafka clients are software libraries that enable applications to communicate with Kafka clusters. They provide the necessary APIs to produce messages to topics and consume messages from topics, forming the foundation for building distributed applications and microservices.

Types of Kafka Clients

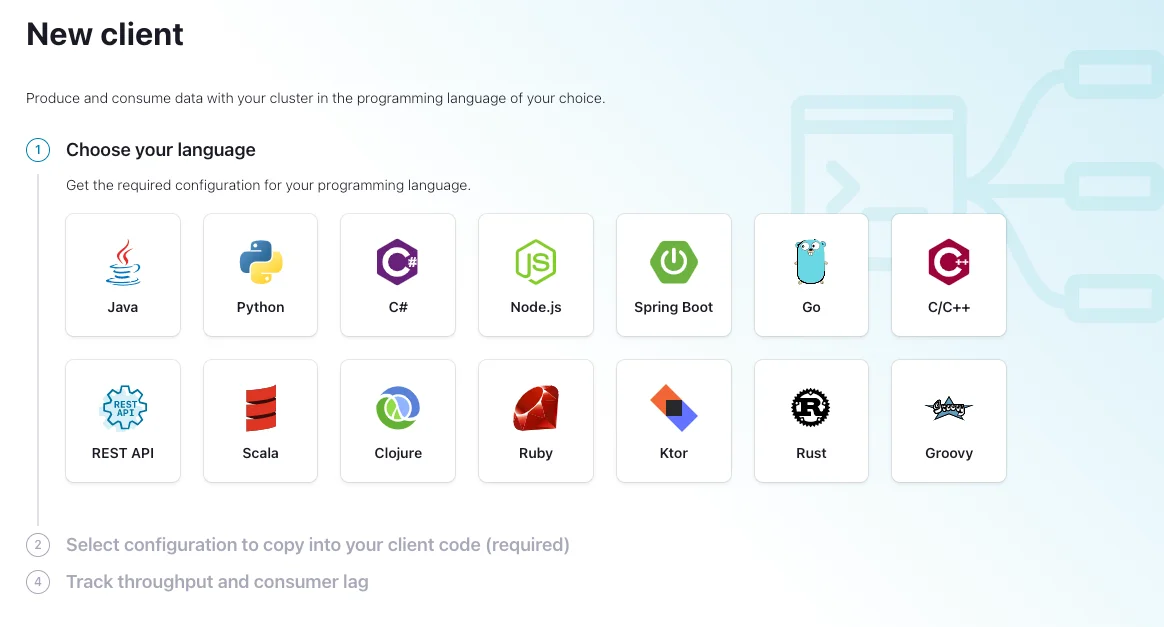

The official Confluent-supported clients include:

- Java: The original and most feature-complete client, supporting producer, consumer, Streams, and Connect APIs
- C/C++: Based on librdkafka, supporting admin, producer, and consumer APIs
- Python: A Python wrapper around librdkafka
- Go: A Go implementation built on librdkafka
- .NET: For .NET applications
- JavaScript: For Node.js applications

These client libraries follow Confluent's release cycle, ensuring enterprise-level support for organizations using Confluent Platform.

Producer Clients: Concepts and Configuration

Producers are responsible for publishing data to Kafka topics. Their performance and reliability directly impact the entire streaming pipeline.

Key Producer Configurations

Several configuration parameters significantly influence producer behavior:

- Batch Size and Linger Time
- Acknowledgments
- Retry Mechanism
- Idempotence and Transactions
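
To make these settings concrete, here is a minimal sketch of a producer configuration written as a plain Python dictionary, which is the form librdkafka-based clients such as the Python client accept. The broker address and every numeric value are illustrative assumptions, not tuned recommendations, and `idempotence_problems` is a hypothetical helper that only checks the documented requirements for idempotent producers (`acks=all`, retries enabled, at most 5 in-flight requests per connection).

```python
# Illustrative producer settings; the values are assumptions, not recommendations.
producer_config = {
    "bootstrap.servers": "localhost:9092",  # hypothetical broker address
    "batch.size": 65536,         # upper bound, in bytes, for one batch of records
    "linger.ms": 10,             # wait up to 10 ms for a batch to fill before sending
    "acks": "all",               # wait for all in-sync replicas to acknowledge
    "retries": 5,                # resend on transient failures
    "enable.idempotence": True,  # broker discards duplicates caused by retries
    "max.in.flight.requests.per.connection": 5,
}


def idempotence_problems(config):
    """Return the settings that conflict with enable.idempotence=True."""
    problems = []
    if not config.get("enable.idempotence"):
        return problems
    if str(config.get("acks")) not in ("all", "-1"):
        problems.append("acks must be 'all'")
    if int(config.get("retries", 1)) < 1:
        problems.append("retries must be greater than 0")
    if int(config.get("max.in.flight.requests.per.connection", 5)) > 5:
        problems.append("max.in.flight.requests.per.connection must be <= 5")
    return problems


print(idempotence_problems(producer_config))
```

Raising `linger.ms` and `batch.size` generally trades a little latency for throughput, while `acks=all` with idempotence favors durability over raw speed.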

Producer Best Practices

For optimal producer performance, consider these best practices:

- Throughput Optimization
- Error Handling
- Resource Allocation
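
One way to approach producer error handling is a delivery callback. The sketch below assumes the confluent-kafka Python client, which calls the callback with `(err, msg)` and passes `err=None` on success. `DeliveryTracker` is a hypothetical name introduced for this example, and it only counts and logs outcomes; what to do with a failed record (retry, dead-letter topic, alert) is left to the application.

```python
import logging

logger = logging.getLogger("producer")


class DeliveryTracker:
    """Delivery callback that counts outcomes and logs failures."""

    def __init__(self):
        self.delivered = 0
        self.failed = 0

    def __call__(self, err, msg):
        # The client passes err=None when the broker acknowledged the record.
        if err is not None:
            self.failed += 1
            logger.error("delivery to %s failed: %s", msg.topic(), err)
        else:
            self.delivered += 1
```

With confluent-kafka, an instance could be passed as `producer.produce("orders", value=b"...", on_delivery=tracker)`, where `"orders"` is a placeholder topic. Callbacks only fire from `producer.poll()` or `producer.flush()`, so calling `flush()` before shutdown helps avoid losing buffered records silently.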

Consumer Clients: Concepts and Configuration

Consumers read messages from Kafka topics and process them. Proper consumer configuration ensures efficient data processing and prevents issues like consumer lag.

Key Consumer Configurations

Important consumer configuration parameters include:

- Group Management
- Offset Management
- Performance Settings
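
As a rough illustration of how these three areas map to settings, the following sketch builds a consumer configuration dictionary. `build_consumer_config` is a hypothetical helper, and the broker address and all values are assumptions chosen for readability rather than recommendations.

```python
def build_consumer_config(group_id, manual_commit=True):
    """Return an illustrative consumer configuration for the given group."""
    return {
        "bootstrap.servers": "localhost:9092",  # hypothetical broker address
        # Group management
        "group.id": group_id,            # consumers sharing this id split the partitions
        "session.timeout.ms": 45000,     # missing heartbeats this long marks the consumer dead
        "max.poll.interval.ms": 300000,  # max gap between polls before a rebalance is triggered
        # Offset management
        "auto.offset.reset": "earliest",  # start position when no committed offset exists
        "enable.auto.commit": not manual_commit,
        # Performance
        "fetch.min.bytes": 1024,  # broker waits for this much data before answering a fetch
    }


config = build_consumer_config("orders-service")
print(config["enable.auto.commit"])
```

If message processing is slow, `max.poll.interval.ms` is usually the first setting to review, because exceeding it removes the consumer from the group.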

Consumer Best Practices

For reliable and efficient consumers, implement these best practices:

- Partition Management
- Offset Commit Strategy
- Error Handling
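
A common commit strategy is at-least-once processing: commit an offset only after the message has been handled. The sketch below shows that ordering with plain callables so it does not depend on any particular client. `consume_at_least_once`, `handle`, `commit`, and `on_error` are hypothetical names for this example.

```python
def consume_at_least_once(messages, handle, commit, on_error):
    """Process messages in order, committing each one only after it is handled.

    Returns the number of messages that were processed and committed.
    """
    processed = 0
    for msg in messages:
        try:
            handle(msg)
        except Exception as exc:
            # Leave the offset uncommitted so the message is redelivered
            # after a restart or rebalance, then stop processing.
            on_error(msg, exc)
            break
        commit(msg)
        processed += 1
    return processed
```

With the confluent-kafka Python client and `enable.auto.commit` set to false, `commit` could be `lambda m: consumer.commit(message=m, asynchronous=False)`. Committing every message synchronously is simple but slow; committing once per batch is a common compromise. Because a message can be redelivered, handlers should be idempotent.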

Security Best Practices

Security is paramount when implementing Kafka clients in production environments. Key security considerations include:

- Authentication
- Authorization
- Secret Management
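
The sketch below shows one possible way to combine authentication and secret management: SASL over TLS with credentials read from the environment instead of source code. The property names follow librdkafka-based clients, the environment variable names are hypothetical, and SCRAM-SHA-512 is only one of several mechanisms a cluster may use. Authorization is enforced on the broker side (for example through ACLs), so it does not appear in the client settings.

```python
import os


def security_config_from_env(env=None):
    """Build SASL_SSL client settings from environment variables.

    The variable names KAFKA_SASL_USERNAME and KAFKA_SASL_PASSWORD are
    assumptions made for this example.
    """
    env = os.environ if env is None else env
    required = ("KAFKA_SASL_USERNAME", "KAFKA_SASL_PASSWORD")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return {
        "security.protocol": "SASL_SSL",     # authenticate with SASL, encrypt with TLS
        "sasl.mechanism": "SCRAM-SHA-512",   # assumed mechanism; must match the cluster
        "sasl.username": env["KAFKA_SASL_USERNAME"],
        "sasl.password": env["KAFKA_SASL_PASSWORD"],
    }
```

The returned dictionary can be merged into a producer or consumer configuration. In practice the environment would typically be populated by a secret manager or the deployment platform rather than by hand.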

Performance Tuning and Monitoring

Achieving optimal performance requires careful monitoring and tuning of Kafka clients.

Performance Optimization Strategies

- JVM Tuning (for Java clients)
- Network Configuration
- Compression Settings
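
Compression interacts with batching because producers compress whole batches rather than single records. The small experiment below uses only the Python standard library, not a Kafka client, to show why: similar messages compress much better together than one at a time. The message shape is invented for the example, and gzip stands in for whichever `compression.type` (gzip, snappy, lz4, or zstd) a producer is configured with.

```python
import gzip
import json

# 200 hypothetical, similar-looking events.
messages = [
    json.dumps({"user_id": i, "event": "page_view", "path": "/products"}).encode()
    for i in range(200)
]

# Compress each message on its own, then all of them as a single batch.
individually = sum(len(gzip.compress(m)) for m in messages)
as_one_batch = len(gzip.compress(b"\n".join(messages)))

print(f"compressed one by one: {individually} bytes")
print(f"compressed as a batch: {as_one_batch} bytes")
```

The exact numbers depend on the payload, but the batch is consistently smaller, which is one reason larger `batch.size` and `linger.ms` values tend to improve the compression ratio.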

Monitoring Kafka Clients

Implement comprehensive monitoring for early detection of issues:

- Key Metrics to Watch
- Monitoring Tools
- Alerting
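
Consumer lag is one of the most useful client metrics: for each partition it is the log end offset minus the group's committed offset. The sketch below computes lag from two offset maps and flags partitions above a threshold. The function names, the sample offsets, and the threshold are assumptions for illustration, and a partition with no committed offset is treated as starting from 0, which is a simplification.

```python
def partition_lag(end_offsets, committed_offsets):
    """Lag per partition = log end offset - committed offset (never negative)."""
    return {
        partition: max(end - committed_offsets.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


def lag_alerts(lag, threshold):
    """Return the partitions whose lag exceeds the alert threshold."""
    return sorted(p for p, value in lag.items() if value > threshold)


end_offsets = {0: 1500, 1: 980, 2: 2210}  # hypothetical log end offsets
committed = {0: 1490, 1: 980, 2: 1200}    # hypothetical committed offsets

lag = partition_lag(end_offsets, committed)
print(lag)                             # {0: 10, 1: 0, 2: 1010}
print(lag_alerts(lag, threshold=500))  # [2]
```

Alerting on lag that keeps growing over several intervals is often more useful than alerting on a single absolute value, since short spikes are normal during bursts.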

Common Issues and Troubleshooting

Even with best practices in place, issues can arise. Common problems include:

- Broker Not Available
- Leader Not Available
- Offset Out of Range
- In-Sync Replica Alerts
- Slow Production/Consumption
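
As an example of reasoning about one of these problems, an offset-out-of-range condition typically occurs when a consumer asks for an offset that retention has already deleted. The consumer then falls back to its `auto.offset.reset` policy. The function below is a simplified model of that decision, not client code; `resolve_fetch_offset` and its parameters are hypothetical names.

```python
def resolve_fetch_offset(requested, log_start, log_end, reset_policy="latest"):
    """Model where a consumer resumes when its requested offset may be invalid."""
    if log_start <= requested <= log_end:
        return requested  # offset still exists (or is the next one to be written)
    if reset_policy == "earliest":
        return log_start  # reprocess from the oldest retained record
    if reset_policy == "latest":
        return log_end    # skip ahead to new records only
    raise ValueError(f"offset {requested} out of range and no reset policy applies")


# Committed offset 50, but retention has removed everything before offset 100.
print(resolve_fetch_offset(50, log_start=100, log_end=500, reset_policy="earliest"))  # 100
print(resolve_fetch_offset(50, log_start=100, log_end=500, reset_policy="latest"))    # 500
```

Choosing `earliest` risks reprocessing, while `latest` risks skipping records, so the right policy depends on whether duplicates or gaps are less harmful for the application.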

Client Development Best Practices

When developing applications that use Kafka clients, follow these best practices:

- Version Compatibility
- Connection Management
- Error Handling
- Testing and Validation
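
For application-level error handling, such as retrying a failed downstream call or recreating a client after a fatal error, exponential backoff with jitter is a common pattern. The generator below is a minimal sketch of it. The name `backoff_delays` and all default values are assumptions, and it is separate from the retries that Kafka clients already perform internally through settings like `retries` and `retry.backoff.ms`.

```python
import random


def backoff_delays(attempts, base=0.1, cap=5.0, jitter=0.2, rng=None):
    """Yield one delay in seconds per attempt: exponential, capped, with jitter."""
    rng = rng or random.Random()
    for attempt in range(attempts):
        delay = min(cap, base * 2 ** attempt)
        # Spread retries by up to +/- `jitter` (a fraction of the delay) so that
        # many clients do not retry at the same instant.
        yield delay * (1 + rng.uniform(-jitter, jitter))


for delay in backoff_delays(4, jitter=0.0):
    print(f"would sleep {delay:.1f}s before the next attempt")
```

In real code each delay would be passed to `time.sleep` between attempts, and the loop would stop as soon as an attempt succeeds. Injecting `rng` keeps the function deterministic in tests.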

Web User Interfaces for Kafka Management

Several web UI tools can simplify Kafka cluster management:

- Conduktor
- Redpanda Console
- Apache Kafka Tools

These tools can complement your client applications by providing visibility into cluster operations and simplifying management tasks.

For more options, see Top 12 Free Kafka GUI Tools.

Conclusion

Kafka clients form the foundation of any successful Kafka implementation. By understanding their configuration options and following best practices, you can ensure reliable, secure, and high-performance data streaming applications.

Key takeaways include:

- Select appropriate client libraries based on your programming language and requirements
- Configure producers and consumers with careful attention to performance, reliability, and security parameters
- Implement proper error handling and monitoring
- Follow security best practices to protect data and access
- Regularly test and validate client applications under various conditions
- Use management tools to gain visibility and simplify operations

By adhering to these guidelines, you'll be well-positioned to leverage the full potential of Apache Kafka in your data streaming architecture.

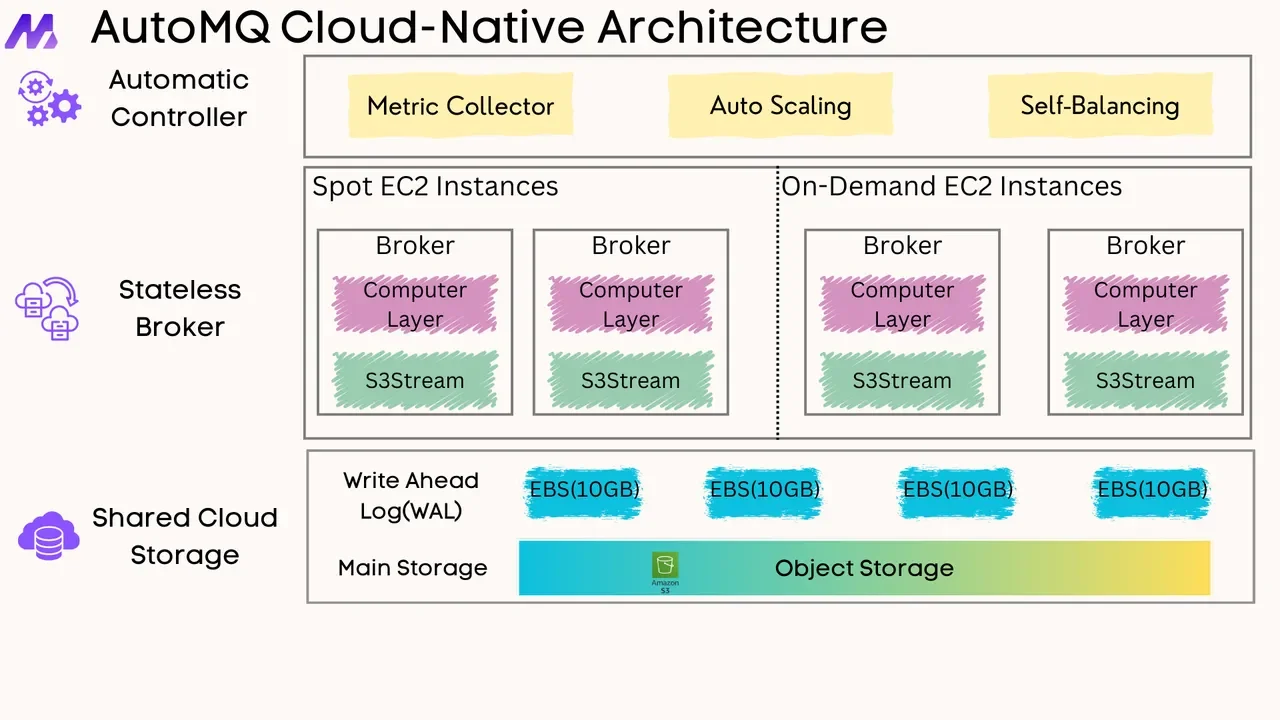
