Introduction

Apache Kafka is the central nervous system for countless modern data architectures. It is the backbone for event-driven systems, real-time analytics, and critical data pipelines. As organizations evolve, the need to migrate a Kafka cluster—whether for hardware upgrades, cloud adoption, or strategic platform consolidation—becomes inevitable. While the benefits are clear, the process is fraught with risk. A misstep during migration can lead to data loss, corruption, or inconsistency, causing catastrophic downstream failures.

For a software engineer, a Kafka migration is not merely an infrastructure task; it is a critical exercise in preserving the sanctity of your data. This guide provides a comprehensive framework for ensuring data integrity and consistency throughout the migration process. We will explore core concepts, proven strategies, and technical best practices to help you navigate this complex challenge successfully.

Core Concepts: Integrity and Consistency in a Kafka Context

Before diving into migration strategies, it is essential to establish a clear understanding of what we are trying to protect.

Data Integrity

Data Integrity refers to the accuracy, completeness, and validity of data over its entire lifecycle. In the context of a Kafka migration, it means ensuring that:

No Messages are Lost: Every message produced to the source cluster successfully arrives in the destination cluster.

No Messages are Corrupted: The content of each message remains unchanged from producer to consumer. This is where schema management becomes crucial for preventing serialization or deserialization errors.

No Unintended Duplicates are Processed: While at-least-once delivery is a common Kafka pattern, a migration should not introduce unexpected duplicates that downstream systems cannot handle.

Data Consistency

Data Consistency, particularly in distributed systems, is about ensuring that all stakeholders have the same view of the data. For Kafka, this primarily relates to ordering. Kafka only guarantees message order within a single partition [1]. Therefore, during a migration, preserving this partition-level ordering is paramount. If a consumer processes messages from a partition in a different order than they were produced, it could lead to incorrect state calculations, flawed analytics, and broken business logic.

The primary goal of a migration is to move from a source cluster (A) to a destination cluster (B) while upholding these two principles, ideally with minimal disruption to the applications that depend on the data streams.

The Blueprint for Success: Pre-Migration Planning

Thorough planning is the single most important factor in a successful migration. Rushing this phase is a recipe for disaster.

A. Audit Your Current Kafka Ecosystem

You cannot migrate what you do not understand. Begin by creating a detailed inventory of your source environment:

Topics and Partitions: Document all topics, their partition counts, replication factors, and configuration overrides.

Producers and Consumers: Identify every application that produces to or consumes from the cluster. Document their configurations, including

ackssettings for producers and group IDs for consumers.Data and Schemas: Understand the nature of the data. Is it governed by a schema registry? If so, the registry and its schemas must also be part of the migration plan.

Throughput and Latency: Profile your current workload. What are the peak and average message rates and sizes? What are the end-to-end latency requirements? This data is vital for provisioning the destination cluster correctly.

B. Define Migration Goals and Constraints

Not all migrations are equal. You must define the acceptable trade-offs for your specific use case:

Downtime: Is any downtime acceptable? Can you afford a maintenance window to stop producers and consumers? For most critical systems, the answer is no, which necessitates a zero-downtime strategy.

Risk Tolerance: What is the business impact of data loss or inconsistency? This will dictate how much you invest in validation and verification.

Rollback Plan: If the migration fails, how do you revert to the source cluster? A well-defined rollback procedure is a non-negotiable safety net.

Choosing Your Migration Strategy

With a solid plan in place, you can choose the right technical strategy. There are three primary approaches, each with distinct trade-offs.

| - | Stop-the-World | Dual-Write | Mirroring / Replication |

|---|---|---|---|

| Description | All producers and consumers are stopped. Data is copied. Applications are reconfigured and restarted against the new cluster. | Producers are modified to write to both the source and destination clusters simultaneously for a period. Consumers are gradually migrated. | An external tool is used to continuously replicate data from the source cluster to the destination cluster in real-time. |

| Pros | Simple to execute; conceptually easy to reason about. | Lower downtime than Stop-the-World; allows for a phased consumer cutover. | Zero or near-zero downtime; decouples migration from application logic; robust and scalable. |

| Cons | High downtime; not feasible for critical, 24/7 applications. | Complex producer logic; risk of data divergence if one write fails; difficult to manage. | Requires a dedicated replication tool; can introduce latency; requires careful offset management. |

| Best For | Non-production environments; applications with scheduled maintenance windows. | Scenarios where modifying producer applications is trivial and the number of producers is small. | Most production migrations, especially for critical systems with a no-downtime requirement. |

For the vast majority of production use cases, Mirroring is the recommended strategy. It provides the best balance of reliability, scalability, and minimal service disruption. Tools like Apache Kafka's MirrorMaker 2 are designed specifically for this purpose [2].

Executing a Mirrored Migration: A Step-by-Step Guide

Using a mirroring approach, the migration process can be broken down into distinct, manageable phases.

Phase 1: Setup and Initial Replication

Provision the Destination: Set up the new Kafka cluster (B) and any dependent services like schema registries. Ensure it has the capacity to handle your production load.

Deploy the Mirroring Tool: Configure and start the mirroring process. Data will begin flowing from cluster A to cluster B. At this point, all producers and consumers are still interacting only with cluster A.

Validate the Replication: Do not assume the replication is perfect. Use tooling to verify that message counts on cluster B are matching those on A for the mirrored topics. Check for errors in the mirroring tool's logs.

Phase 2: The Critical Cutover

This is the most delicate part of the migration, where you move your applications. The key is to migrate consumers first, then producers.

Consumer Offset Synchronization: To prevent reprocessing or skipping messages, consumers must start reading from the new cluster at the exact point they left off in the old cluster. MirrorMaker 2 helps automate this by translating consumer group offsets from cluster A to equivalent offsets on cluster B and storing them in a

checkpoints.internaltopic [2]. Ensure this feature is enabled and working correctly.Deploy and Validate New Consumers: Deploy new instances of your consumer applications configured to read from cluster B. It's a best practice to run them in a "dry run" or "passive" mode initially. They should consume and process messages without performing any side effects (e.g., writing to a database). This allows you to validate that the data arriving at cluster B is correct and complete before making the final switch.

The Consumer Cutover: Once you have validated the data on cluster B, perform the cutover. This typically involves a rolling restart of your consumer applications, with the new configuration pointing to cluster B. The consumers will fetch their translated offsets and begin processing from the correct position.

The Producer Cutover: After all consumers are successfully running against cluster B, you can switch the producers. This is often a simpler configuration change. Once producers start writing to cluster B, the migration is functionally complete.

Decommissioning: Do not immediately shut down the source cluster. Let the system run for a period (e.g., a day or a week) to ensure stability. Once you are fully confident in the new cluster, you can stop the mirroring process and decommission the old infrastructure.

Essential Best Practices for Maintaining Integrity

Underpinning any migration strategy are technical best practices that act as a safety net.

Enforce Producer Idempotency

An idempotent producer, when configured with enable.idempotence=true , ensures that messages are written to a partition exactly once per producer session [1]. During a migration, retries and recovery scenarios are common. Idempotency prevents these situations from creating duplicate messages, providing a powerful guarantee of data integrity. This should be considered a mandatory setting for all critical data producers.

Manage Schemas Centrally

If your data has a structure, a schema registry is non-negotiable. It ensures that producers and consumers agree on the format of the data. During a migration, you must also migrate your schemas. A centralized registry ensures that a producer writing to cluster B uses the same schema that a consumer reading from cluster B expects, preventing data corruption from deserialization failures.

Monitor, Monitor, Monitor

You cannot over-monitor a migration. Track key metrics on both clusters throughout the process:

Consumer Lag: Is lag increasing on either cluster?

Broker Health: CPU, memory, disk, and network I/O.

Replication Metrics: The throughput and latency of your mirroring tool.

Application-Level Metrics: Are business KPIs (e.g., orders processed, events handled) remaining stable?

An anomaly in any of these metrics is an early warning that something is wrong.

Test Your Rollback Plan

A rollback plan you haven't tested is not a plan; it's a hope. In your staging environment, practice failing the migration and executing the rollback procedure. This might involve re-pointing producers back to cluster A and having consumers fail-over. This test will build confidence and uncover any flaws in your process before you touch production.

Conclusion

Migrating a production Kafka cluster is a high-stakes operation, but it does not have to be a high-risk one. By grounding your approach in the core principles of data integrity and consistency, you can transform a daunting task into a manageable engineering project.

Success hinges on a clear, methodical process: begin with a thorough audit and plan, choose a strategy that fits your downtime requirements (usually mirroring), and execute the cutover in a controlled, consumer-first sequence. Reinforce this process with technical best practices like idempotent producers and centralized schema management. By treating your data with the respect it deserves, you can ensure a seamless migration that delivers on its promises without compromising the information that powers your business.

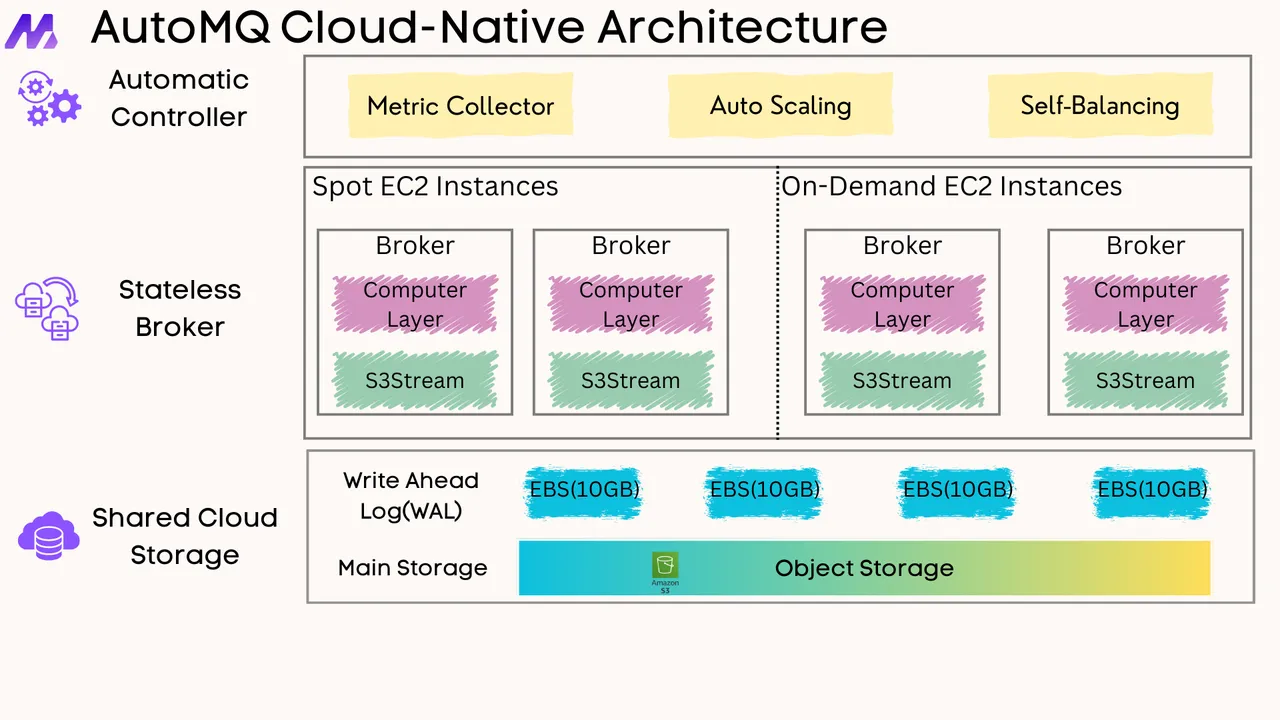

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)