Introduction

In today's data-driven world, Apache Kafka has become the backbone of real-time data pipelines for thousands of companies. It's the central nervous system that powers everything from financial transactions to live feature updates in mobile applications. But as Kafka's role becomes more critical, a single question looms large for any architect: what happens if my Kafka cluster goes down? A regional cloud outage, a network failure, or a simple human error could bring business to a halt.

This is where multi-datacenter replication comes in. To build truly resilient systems, you need to replicate your Kafka clusters across different geographical regions. The two most common architectures for this are Active-Passive and Active-Active.

Choosing between them is one of the most significant architectural decisions you'll make. It's a trade-off between availability, cost, complexity, and data consistency. This blog post provides a comprehensive comparison of these two topologies, exploring how they work, their pros and cons, and the key factors to consider when making your choice.

![Two Architectures of Kafka Replication Topologies [5]](/blog/kafka-replication-topologies-active-passive-vs-active-active/1.png)

The "Why" of Cross-Cluster Replication

Before diving into the topologies, it's crucial to understand why Kafka's built-in replication isn't enough for disaster recovery. Kafka is, by design, a distributed and fault-tolerant system. When you create a topic, you set a replication factor, which means Kafka stores copies (replicas) of your topic's partitions on multiple brokers within the same cluster. If one broker fails, another replica takes over as partition leader. With producers using `acks=all` and a suitable `min.insync.replicas` setting, no acknowledged data is lost and downtime is minimal. This is known as intra-cluster replication.
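To make the intra-cluster guarantee concrete, the helper below computes how many broker failures a single partition can absorb for a given replication factor and `min.insync.replicas` setting. It is a back-of-the-envelope sketch of our own, not a Kafka API. It assumes producers use `acks=all`, all replicas start in sync, and unclean leader election is disabled.

```python
def replication_tolerance(replication_factor: int, min_insync_replicas: int) -> dict:
    """Illustrative helper (not part of any Kafka API): broker failures one
    partition can absorb inside a single cluster.

    Assumes acks=all producers, all replicas initially in sync, and
    unclean leader election disabled.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("require 1 <= min.insync.replicas <= replication.factor")
    return {
        # acks=all writes are rejected once fewer than min.insync.replicas
        # replicas remain in sync, so this many failures still allow writes.
        "failures_tolerated_for_writes": replication_factor - min_insync_replicas,
        # Each acknowledged record reached at least min.insync.replicas
        # replicas, so losing one fewer than that cannot erase it.
        "failures_tolerated_without_data_loss": min_insync_replicas - 1,
    }


# A common production choice: replication.factor=3, min.insync.replicas=2.
print(replication_tolerance(3, 2))
# {'failures_tolerated_for_writes': 1, 'failures_tolerated_without_data_loss': 1}
```

Every replica counted here lives in the same cluster, so none of these guarantees help when the whole region becomes unavailable.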

However, this only protects you from failures within a single datacenter or cloud region. If a catastrophic event like a region-wide network outage or a natural disaster occurs, your entire cluster could become unavailable. To protect against this, you need cross-cluster replication, which involves running independent Kafka clusters in separate geographical regions and continuously copying data between them.

The most common open-source tool for this is Apache Kafka's MirrorMaker 2. It is designed to replicate topics, consumer group offsets, topic configurations, and ACLs from a source cluster to a target cluster, forming the foundation for multi-region architectures.

Deep Dive: The Active-Passive Topology (Design for Disaster Recovery)

The Active-Passive model is the most straightforward approach to disaster recovery. It is conceptually simple: you have one primary (active) cluster that serves all your application traffic and a secondary (passive) cluster in a different region that acts as a hot standby.

How It Works

In a typical Active-Passive setup, data flows in only one direction. A replication tool like MirrorMaker 2 continuously reads data from the active cluster and writes it to the passive one. The passive cluster does not handle any producer or consumer traffic directly; it exists only as a replica, waiting in the wings.
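As a rough sketch, a one-way MirrorMaker 2 flow like this could be described in a properties file along the following lines. The Python helper only renders the file. The cluster aliases (`primary`, `backup`) and hostnames are placeholders. The property names are standard MirrorMaker 2 settings, but check them against the documentation for your Kafka version.

```python
def mm2_active_passive_config(primary: str, primary_bootstrap: str,
                              backup: str, backup_bootstrap: str,
                              topics: str = ".*") -> str:
    """Render a minimal MirrorMaker 2 properties file for a one-way
    (primary -> backup) flow. Aliases and hosts are placeholders."""
    props = {
        "clusters": f"{primary}, {backup}",
        f"{primary}.bootstrap.servers": primary_bootstrap,
        f"{backup}.bootstrap.servers": backup_bootstrap,
        # Enable the single replication flow and choose which topics to copy.
        f"{primary}->{backup}.enabled": "true",
        f"{primary}->{backup}.topics": topics,
        # Keep the reverse flow off: the passive side only receives data.
        f"{backup}->{primary}.enabled": "false",
        # Emit consumer-group checkpoints and sync translated offsets to the
        # backup so consumers can resume there after a failover.
        "emit.checkpoints.enabled": "true",
        "sync.group.offsets.enabled": "true",
        # Keep topic configurations aligned between the two clusters.
        "sync.topic.configs.enabled": "true",
    }
    return "\n".join(f"{key} = {value}" for key, value in props.items())


print(mm2_active_passive_config("primary", "primary-kafka:9092",
                                "backup", "backup-kafka:9092"))
```

The rendered text could be saved as, for example, `mm2.properties` and passed to Kafka's `bin/connect-mirror-maker.sh`. With the default replication policy, replicated topics appear on the backup cluster as `primary.<topic>`.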

The Failover Process:

If the primary cluster becomes unavailable, the disaster recovery plan is initiated. This involves a series of steps:

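- Stop Replication: The replication process from the (now unavailable) active cluster is stopped to prevent any inconsistencies.
- Redirect Traffic: Your producers and consumers are reconfigured to point to the passive cluster. This can be done by changing application configurations and restarting them, or by updating DNS records. Consumers should resume from offsets translated for the passive cluster rather than from their old positions. With MirrorMaker 2's default replication policy, replicated topics also carry the source cluster's alias as a prefix (for example, `primary.orders`), so topic subscriptions may need to change as well.
- Promote to Active: The passive cluster is now officially the new active cluster and begins serving all application traffic.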
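Offset translation deserves a closer look. Offsets in the passive cluster do not line up one-to-one with offsets in the active cluster. MirrorMaker 2 therefore records periodic offset syncs, which are pairs of upstream and downstream offsets. In practice you would rely on `sync.group.offsets.enabled` or on `RemoteClusterUtils.translateOffsets` from the Java `connect-mirror-client` library. The function below is only a simplified model of the idea, not MirrorMaker 2's code. It anchors on the newest sync at or before the committed offset and errs toward re-reading records rather than skipping any.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple


def translate_offset(offset_syncs: List[Tuple[int, int]],
                     committed_upstream: int) -> Optional[int]:
    """Simplified model (not MirrorMaker 2's actual code): map a committed
    offset on the active cluster to a starting offset on the passive one.

    offset_syncs: (upstream_offset, downstream_offset) pairs for one
    partition, sorted by upstream offset.
    """
    upstream_offsets = [up for up, _ in offset_syncs]
    # Position of the newest sync at or before the committed offset.
    i = bisect_right(upstream_offsets, committed_upstream) - 1
    if i < 0:
        # No anchor point: the consumer falls back to auto.offset.reset.
        return None
    sync_up, sync_down = offset_syncs[i]
    if committed_upstream == sync_up:
        # Exact match: resume at the replicated copy of the same record.
        return sync_down
    # The synced record was already consumed, but records between it and the
    # committed offset have unknown downstream positions, so resume just
    # after the sync point and accept some re-reads.
    return sync_down + 1


# Replication started when the upstream log was already at offset 1000.
syncs = [(1000, 0), (1100, 100), (1250, 250)]
print(translate_offset(syncs, 1100))  # 100 (exact match)
print(translate_offset(syncs, 1180))  # 101 (upstream 1101..1179 may be re-read)
print(translate_offset(syncs, 500))   # None
```

Because the translated position can land before where the consumer really stopped, consumers on the passive cluster may reprocess some records, which is one more reason to make them idempotent.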

The Failback Process:

Once the primary datacenter is restored, you need a plan to fail back. This is often more complex than the initial failover. A carefully orchestrated failback ensures that any data written to the secondary cluster during the outage is replicated back to the primary before it resumes its active role. This process must be handled meticulously to prevent data loss.
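The core condition for a safe failback can be sketched as a gate check. Writes to the secondary must stop, and a reverse replication flow (secondary to primary) must have copied everything the secondary received. The inputs are hypothetical: for example, end offsets read with an admin client and the reverse replicator's progress, keyed by `(topic, partition)`.

```python
from typing import Dict, Tuple

Partition = Tuple[str, int]  # (topic, partition)


def failback_gate(backup_end_offsets: Dict[Partition, int],
                  reverse_replicated: Dict[Partition, int],
                  backup_writes_stopped: bool) -> Tuple[bool, str]:
    """Hypothetical check: return (safe, reason) for moving clients back to
    the restored primary.

    reverse_replicated holds, per partition, the next backup-cluster offset
    the reverse flow would copy (everything below it is already on the primary).
    """
    if not backup_writes_stopped:
        # Records written after this check could never reach the primary.
        return False, "stop producers on the backup cluster first"
    behind = sorted(tp for tp, end in backup_end_offsets.items()
                    if reverse_replicated.get(tp, 0) < end)
    if behind:
        return False, f"reverse replication still catching up on {behind}"
    return True, "all backup data is on the primary; safe to fail back"


ends = {("orders", 0): 500, ("orders", 1): 320}
print(failback_gate(ends, {("orders", 0): 500, ("orders", 1): 300}, True))
print(failback_gate(ends, {("orders", 0): 500, ("orders", 1): 320}, True))
```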

Pros and Cons

The advantages of an Active-Passive topology lie in its simplicity. It is easier to design and manage, which translates to lower operational costs. The clear, one-way data flow is easy to reason about, and because writes only happen in one location at a time, there is no risk of data conflicts that require complex resolution logic.

However, this model has significant drawbacks. The failover and failback processes can be complex, often requiring manual intervention under high-pressure circumstances. The passive infrastructure remains largely idle, leading to poor resource utilization. Most importantly, this architecture results in a higher RTO (Recovery Time Objective), because failover is not instantaneous. It also has a non-zero RPO (Recovery Point Objective): because replication is asynchronous, records that had not yet been copied to the passive cluster when the outage began may be lost. Finally, it cannot use geo-routing to reduce user latency, since all clients must connect to the single active cluster.
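The RPO cost of asynchronous replication is easy to estimate. Anything produced during the replication-lag window exists only on the active cluster when it fails. The helper and the workload numbers below are illustrative, not measurements.

```python
import math


def data_at_risk(produce_rate_msgs_per_sec: float,
                 avg_message_bytes: int,
                 replication_lag_sec: float) -> tuple:
    """Illustrative estimate of (messages, bytes) that exist only on the
    active cluster.

    Everything produced within the last `replication_lag_sec` seconds has not
    reached the passive cluster yet, so it is lost or stranded if the active
    cluster fails at that moment.
    """
    messages = math.ceil(produce_rate_msgs_per_sec * replication_lag_sec)
    return messages, messages * avg_message_bytes


# Illustrative workload: 20,000 msg/s, ~1 KiB messages, 2.5 s of replication lag.
msgs, size = data_at_risk(20_000, 1_024, 2.5)
print(msgs, size)  # 50000 51200000
```

In this example roughly 50,000 messages (about 51 MB) would be at risk, so replication lag effectively sets your RPO.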

When to Choose Active-Passive

The Active-Passive topology is an excellent choice for:

- Core Disaster Recovery: When your primary goal is to have a solid backup plan in case of a major outage.
- Cost-Conscious Environments: When you cannot justify the cost and complexity of a fully active-active system.
- Systems with Tolerance for Downtime: Applications where a recovery time of minutes to hours is acceptable and the potential loss of a few seconds of data is tolerable.

Deep Dive: The Active-Active Topology (Design for High Availability)

The Active-Active topology is a more advanced and complex architecture where two or more clusters in different regions are simultaneously serving live traffic. Both clusters accept writes from local producers and are read from by local consumers.

How It Works

In an Active-Active setup, data replication is bidirectional. Each cluster acts as both a source and a target for replication. For example, data produced in the US region is consumed by local applications and also replicated to the EU region, and vice versa. This requires a careful setup to prevent infinite replication loops. Tools typically handle this by prefixing replicated topics with their origin. MirrorMaker 2's default replication policy, for instance, copies a US topic named `orders` into the EU cluster as `us.orders` (assuming the US cluster's alias is `us`) and never replicates it back to its origin.
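The loop-prevention idea can be modeled in a few lines. This is a simplified sketch inspired by MirrorMaker 2's default replication policy, where remote topics are named `<source-alias>.<topic>`, and by its check that skips topics which originated on the target cluster. The aliases `us` and `eu` are hypothetical, and the real implementation handles more cases.

```python
SEPARATOR = "."  # separator used by the default replication policy


def remote_topic(source_alias: str, topic: str) -> str:
    """Name a replicated topic after its source cluster, e.g. 'us.orders'."""
    return f"{source_alias}{SEPARATOR}{topic}"


def topic_source(topic: str):
    """Return the alias a topic was replicated from, or None if it is local."""
    prefix, sep, _ = topic.partition(SEPARATOR)
    return prefix if sep else None


def should_replicate(topic: str, target_alias: str) -> bool:
    """Skip any topic whose replication chain already passed through the target."""
    while True:
        source = topic_source(topic)
        if source is None:
            return True  # a local topic: safe to copy
        if source == target_alias:
            return False  # copying it would send data back where it came from
        topic = topic.split(SEPARATOR, 1)[1]  # step to the upstream topic name


def replicate(source_alias: str, source_topics: set, target_alias: str) -> set:
    """Topics that one replication pass creates on the target cluster."""
    return {remote_topic(source_alias, t) for t in source_topics
            if should_replicate(t, target_alias)}


# Hypothetical cluster aliases "us" and "eu", each with a local "orders" topic.
us, eu = {"orders"}, {"orders"}
for _ in range(2):  # a second round shows the topic sets are stable
    eu |= replicate("us", us, "eu")
    us |= replicate("eu", eu, "us")
print(sorted(us), sorted(eu))  # ['eu.orders', 'orders'] ['orders', 'us.orders']
```

Without the `should_replicate` check, `us.orders` would travel back to the US as `eu.us.orders`, then to the EU as `us.eu.us.orders`, and so on indefinitely.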

To direct traffic, you typically use either a geo-routing load balancer or build location awareness directly into your client applications. This allows a user in Europe to be served by the EU cluster, providing significantly lower latency.
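Location awareness in the client can be as simple as mapping the client's region to a preferred cluster and falling back when that region is unhealthy. The endpoints, region names, and the source of the health information below are hypothetical placeholders. The returned string is what you would pass as the client's `bootstrap.servers`.

```python
from typing import Iterable

# Hypothetical regional endpoints; replace with your own listeners.
REGION_BOOTSTRAP = {
    "us": "kafka-us.example.com:9092",
    "eu": "kafka-eu.example.com:9092",
}

# Hypothetical preference order: local region first, then the other one.
FALLBACK_ORDER = {
    "us": ["us", "eu"],
    "eu": ["eu", "us"],
}


def choose_bootstrap(client_region: str, healthy_regions: Iterable[str]) -> str:
    """Pick the bootstrap.servers value for a client in client_region."""
    healthy = set(healthy_regions)
    # Unknown regions simply try every known cluster in declaration order.
    for region in FALLBACK_ORDER.get(client_region, list(REGION_BOOTSTRAP)):
        if region in healthy:
            return REGION_BOOTSTRAP[region]
    raise RuntimeError("no healthy Kafka region available")


print(choose_bootstrap("eu", {"us", "eu"}))  # kafka-eu.example.com:9092
print(choose_bootstrap("eu", {"us"}))        # kafka-us.example.com:9092
```

A client that falls back like this lands on a cluster with different offsets, so the idempotency concerns discussed below still apply.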

The Challenge of Conflict Resolution:

The biggest challenge in an Active-Active model is maintaining data consistency. Since writes can occur in multiple locations at once, you can run into conflicts. For example, what happens if the same record is updated in both the US and EU clusters at nearly the same time? Several strategies exist to handle this, but none are perfect:

- Last Write Wins (LWW): You can use timestamps to determine the "latest" version of a record with a given key. However, this relies on closely synchronized clocks across datacenters, which is a difficult problem in itself: clock skew can let an older write win.
- Geo-Partitioning: You can designate a "primary" cluster for a specific subset of data. For instance, all data related to European customers is written exclusively to the EU cluster, even if the request originates from the US. This avoids conflicts but adds complexity to the application logic.
- Idempotent Consumers: Cross-cluster replication is typically at-least-once, and offsets translated between clusters are approximate. A consumer can therefore see the same message more than once, for example after a replicator retry or after failing over from one region's cluster to the other. Your consumers must be designed to be idempotent, meaning they can process the same message multiple times without causing errors or incorrect results.
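Two of these strategies can be combined in a small consumer-side sketch. The first is last-write-wins keyed on `(timestamp, region)`, where the region name breaks ties so every cluster picks the same winner. The second is an event-ID set that turns redelivered messages into no-ops. The `Update` fields, including the unique `event_id`, are hypothetical. In production the seen-ID set would need to be durable and bounded, for example with a time-based expiry.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Update:
    """Hypothetical message shape for this sketch."""
    key: str            # business key, e.g. a customer ID
    value: str
    timestamp_ms: int   # writer's wall clock; LWW is only as good as clock sync
    region: str         # tie-breaker so every cluster picks the same winner
    event_id: str       # unique per logical event; used for deduplication


class IdempotentLwwStore:
    """Applies updates from any region; duplicates and older writes are no-ops."""

    def __init__(self) -> None:
        self.state: Dict[str, Update] = {}
        self.seen: Set[str] = set()  # must be durable and bounded in production

    def apply(self, update: Update) -> bool:
        """Return True if the update changed the stored value."""
        if update.event_id in self.seen:
            return False  # redelivered message: already handled
        self.seen.add(update.event_id)
        current = self.state.get(update.key)
        newer = current is None or (
            (update.timestamp_ms, update.region) > (current.timestamp_ms, current.region)
        )
        if newer:
            self.state[update.key] = update
        return newer


us_write = Update("customer-42", "plan=pro", 1_000, "us", "evt-1")
eu_write = Update("customer-42", "plan=free", 1_005, "eu", "evt-2")

# Each region sees the two writes in a different order, plus a duplicate.
us_store, eu_store = IdempotentLwwStore(), IdempotentLwwStore()
for u in (us_write, eu_write, eu_write):
    us_store.apply(u)
for u in (eu_write, us_write):
    eu_store.apply(u)
print(us_store.state["customer-42"].value, eu_store.state["customer-42"].value)
# plan=free plan=free
```

Both stores converge on `plan=free` because its timestamp is 5 ms later. A gap that small may be smaller than the clock skew between datacenters, which is exactly why LWW can pick the wrong winner.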

Pros and Cons

The primary benefits of an Active-Active architecture are exceptional availability and performance. It offers a near-zero RTO; if one region fails, traffic is almost instantly rerouted to a healthy one. A near-zero RPO is also possible, though not always guaranteed. This model provides low latency for a globally distributed user base and ensures excellent resource utilization since all infrastructure is live and serving traffic.

On the other hand, the drawbacks are significant. This topology is extremely complex to design, implement, and operate safely, leading to higher infrastructure and operational costs. The high risk of data conflicts and inconsistencies requires very careful system design and sophisticated application logic to manage. There is also potential for increased latency if writes need to be routed to a designated primary region to avoid conflicts.

When to Choose Active-Active

The Active-Active topology is reserved for the most critical applications that require:

- Maximum Availability: Systems where even a few minutes of downtime is unacceptable.
- Global Low Latency: Applications with a large, geographically dispersed user base that requires fast response times.
- Sufficient Engineering Resources: Organizations with the technical expertise and operational maturity to manage the inherent complexity and risks.

How to Choose: A Head-to-Head Comparison

The decision ultimately comes down to your business needs and technical capabilities. There is no one-size-fits-all answer. Use the table below as a guide to evaluate the trade-offs.

| Factor | Active-Passive | Active-Active |
|---|---|---|
| Primary Use Case | Disaster Recovery | High Availability, Geo-Latency |
| RTO (Recovery Time) | Minutes to Hours | Seconds to Near-Zero |
| RPO (Data Loss) | Seconds to Minutes | Near-Zero to Seconds |
| Implementation Complexity | Moderate | Very High |
| Operational Overhead | Low | High |
| Infrastructure Cost | Lower (idle resources) | Higher (all resources active) |
| Data Conflict Risk | None | High |
| Application Requirements | Can be stateful | Must be idempotent; stateless services are far easier to run |

To make the right choice, ask yourself these questions:

- What is our business tolerance for downtime? If the answer is "zero," you may need to invest in an Active-Active solution.
- How much data can we afford to lose? If even a few seconds of data loss is catastrophic, an Active-Passive model's RPO might be too high.
- Do we have a global user base? If so, the low-latency benefits of an Active-Active architecture could provide a significant competitive advantage.
- Do we have the operational maturity and engineering talent to manage the complexity of an Active-Active system, including conflict resolution and idempotent services?
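One way to turn these questions into a first-pass checklist is sketched below. The thresholds are assumptions loosely read off the comparison table, not industry standards. A real decision also weighs cost.

```python
from dataclasses import dataclass

# Assumed boundaries, loosely based on the comparison table; tune them.
ACTIVE_PASSIVE_MIN_RTO_MINUTES = 5.0  # assumed fastest realistic failover
ACTIVE_PASSIVE_MIN_RPO_SECONDS = 5.0  # assumed typical replication lag


@dataclass
class Requirements:
    max_downtime_minutes: float      # tolerated downtime (RTO)
    max_data_loss_seconds: float     # tolerated data-loss window (RPO)
    global_user_base: bool           # treated here as a reason to prefer active-active
    can_operate_active_active: bool  # skills for conflicts, idempotency, routing


def suggest_topology(req: Requirements) -> str:
    """Hypothetical heuristic mirroring the questions above."""
    wants_active_active = (
        req.max_downtime_minutes < ACTIVE_PASSIVE_MIN_RTO_MINUTES
        or req.max_data_loss_seconds < ACTIVE_PASSIVE_MIN_RPO_SECONDS
        or req.global_user_base
    )
    if not wants_active_active:
        return "active-passive"
    if req.can_operate_active_active:
        return "active-active"
    # Requirements point to active-active, but the team is not ready for it.
    return "active-passive for now; close the operational gap first"


print(suggest_topology(Requirements(60, 60, False, False)))  # active-passive
```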

Best Practices for Any Multi-Datacenter Strategy

Regardless of which topology you choose, implementing a multi-datacenter Kafka architecture is a serious undertaking. Here are some universal best practices:

- Monitor Everything: You need robust monitoring and alerting for your entire setup. Keep a close eye on replication lag, the delay between a message being produced in the source cluster and appearing in the replica. High lag directly widens the window of data you would lose in a failover, undermining the point of having a replica. Also monitor broker health, topic throughput, and consumer group lag in all clusters.
- Automate Your Playbooks: The failover process should be as automated as possible. Manual failovers performed under pressure are prone to human error. Script and test your procedures relentlessly.
- Test, Test, and Test Again: Don't wait for a real disaster to find out if your recovery plan works. Regularly conduct disaster recovery drills to test your failover and failback procedures. This builds muscle memory and uncovers weaknesses in your system.
- Design for Idempotency: Even in an Active-Passive setup, retries can happen during a failover event. Designing idempotent producers and consumers from the start is a cornerstone of building robust distributed systems.
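Replication lag is straightforward to compute per partition once you have the source cluster's end offsets and the replicator's progress. The sketch below flags partitions that trail by more than a record-count threshold. Where the two offset maps come from (an admin client, your replicator's metrics) is left as an assumption. MirrorMaker 2 also reports a time-based `replication-latency-ms` metric, which may map more directly onto an RPO target.

```python
from typing import Dict, List, Tuple

Partition = Tuple[str, int]  # (topic, partition)


def lagging_partitions(source_end_offsets: Dict[Partition, int],
                       replicated_offsets: Dict[Partition, int],
                       max_lag_records: int) -> List[Tuple[Partition, int]]:
    """Return (partition, lag) pairs whose lag exceeds max_lag_records.

    replicated_offsets: per partition, the next source offset the replicator
    would copy (hypothetical input, e.g. from the replicator's own progress).
    A partition with no recorded progress is treated as starting from 0.
    """
    report = []
    for tp, end in sorted(source_end_offsets.items()):
        lag = end - replicated_offsets.get(tp, 0)
        if lag > max_lag_records:
            report.append((tp, lag))
    return report


source = {("orders", 0): 10_500, ("orders", 1): 9_800}
replicated = {("orders", 0): 10_480, ("orders", 1): 7_300}
print(lagging_partitions(source, replicated, max_lag_records=1_000))
# [(('orders', 1), 2500)]
```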

Conclusion

Choosing between Active-Passive and Active-Active Kafka topologies is a fundamental architectural decision with long-term consequences. The Active-Passive model offers a solid, cost-effective solution for disaster recovery, accepting a trade-off of some downtime and potential data loss in a worst-case scenario. The Active-Active model, while far more complex and expensive, provides the gold standard for high availability and low latency for global applications.

The right choice is not purely technical; it's a business decision rooted in your specific requirements for availability, performance, and cost. By carefully evaluating these trade-offs and understanding the operational commitments involved, you can build a resilient Kafka architecture that keeps your data flowing, no matter what happens.

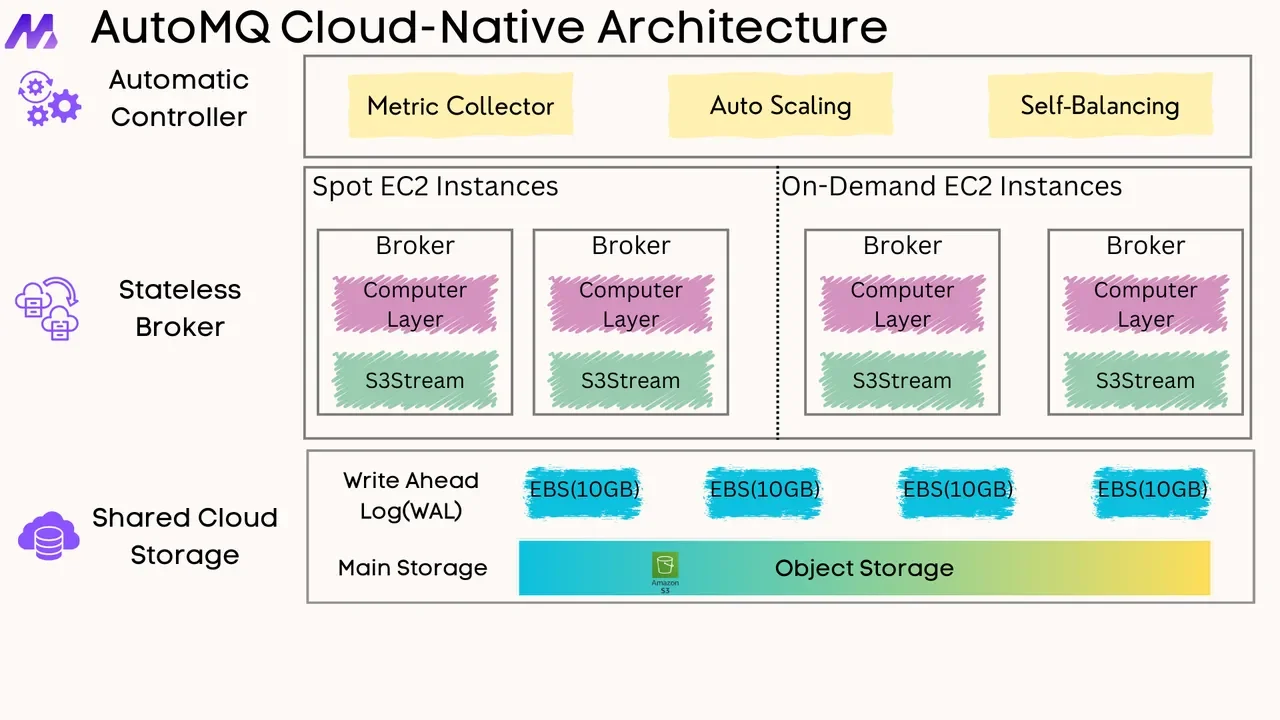

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS: 10x more cost-effective, no cross-AZ traffic cost, autoscaling in seconds, and single-digit millisecond latency. AutoMQ's source code is now available on GitHub, and big companies worldwide are using it. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in DataStreaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging