Overview

Kubernetes has become the de facto standard for container orchestration. However, working directly with a full-scale production cluster for development and testing can be cumbersome, expensive, and risky. Local Kubernetes tools address this by allowing developers to run lightweight, self-contained Kubernetes clusters on their personal machines. This facilitates rapid iteration, offline development, and isolated testing of applications before deploying them to a production environment.

This blog post dives into three prominent tools in this space: Minikube, k3s, and kind. We'll explore their core concepts, architecture, installation, resource consumption, performance, common issues, and best practices, culminating in a side-by-side comparison.

Minikube: The Established Local Cluster

Minikube has long been a standard solution for running a single-node Kubernetes cluster locally. Its primary goals are to be the best tool for local Kubernetes application development and to support all Kubernetes features that fit.

Core Concepts and Architecture

Minikube typically runs a Kubernetes cluster inside a virtual machine (VM) on your laptop or PC, although it can also run the cluster in a Docker container or directly on the host (bare metal, Linux only). It supports various hypervisors, such as VirtualBox, Hyper-V, KVM, and Parallels, as well as Docker as a container-based driver. This driver-based architecture offers flexibility, but the choice of driver affects performance and resource usage. By default, it sets up a single-node cluster, but multi-node configurations are also possible.

![Using Minikube to Create a Cluster [22]](/blog/minikube-vs-k3s-vs-kind-comparison-local-kubernetes-development/1.png)

Key Features

- Platform Agnostic: Supports Windows, macOS, and Linux.
- Add-ons: Provides a rich set of add-ons for easily enabling features like the Kubernetes Dashboard, ingress controllers, and storage provisioners.
- Multiple Kubernetes Versions: Allows users to specify the Kubernetes version for their cluster.
- LoadBalancer Support: Offers `minikube tunnel` for services of type LoadBalancer.
- Filesystem Mounts: Facilitates mounting local directories into the cluster for easier development.

Installation and Basic Usage

Installation involves downloading the Minikube binary and ensuring a hypervisor or Docker is installed.

A typical startup command is:

```shell
minikube start --driver=virtualbox --memory=4096 --cpus=2
```

This starts a cluster using VirtualBox with 4GB of RAM and 2 CPUs. Once it is running, `kubectl` can be used to interact with the cluster, and `minikube dashboard` opens the Kubernetes Dashboard in a browser.

Resource Requirements

Minikube's resource requirements vary based on the driver and workload.

- Minimum: 2 CPUs, 2GB RAM, 20GB free disk space.
- Recommended for typical use: 4GB+ RAM, 2+ CPUs. A comparative test showed Minikube (Docker driver) using around 536-680 MiB of RAM for the cluster itself on an 8-CPU, 15GB host.

Common Issues and Troubleshooting

- Resource Constraints: Ensure sufficient RAM/CPU are allocated to Minikube and the underlying VM or container.
- Driver Issues: Hypervisor or Docker driver misconfigurations can cause startup failures. Check driver compatibility and installation.
- Network Problems: Issues with VM networking or VPN interference.

`minikube logs` and `kubectl describe` are useful for diagnostics.

Best Practices

- Allocate sufficient resources (CPU, memory, disk) based on your workload.
- Use the Docker driver on Linux for better performance if a VM is not strictly needed.
- Utilize `minikube mount` for rapid code changes without rebuilding images.
- Leverage `minikube docker-env` to build images directly into Minikube's Docker daemon, speeding up local iteration.
- Keep Minikube and `kubectl` updated.
- Use add-ons for common services like ingress and the dashboard.
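`minikube docker-env` works by printing shell `export` statements that point your Docker CLI at the daemon inside Minikube, which is why it is normally used as `eval $(minikube docker-env)`. As a minimal sketch, the Go program below parses that bash-style output into a map so you can see which variables change. The `sample` text is illustrative only, modeled on the command's format; the IP address, port, and certificate path on your machine will differ.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// parseDockerEnv extracts KEY=value pairs from `export KEY="value"` lines,
// skipping blank lines and comments.
func parseDockerEnv(output string) map[string]string {
	env := make(map[string]string)
	sc := bufio.NewScanner(strings.NewReader(output))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if !strings.HasPrefix(line, "export ") {
			continue // comments and blank lines carry no settings
		}
		key, value, ok := strings.Cut(strings.TrimPrefix(line, "export "), "=")
		if !ok {
			continue
		}
		env[key] = strings.Trim(value, `"`)
	}
	return env
}

// sample is illustrative output; real values differ per machine.
const sample = `export DOCKER_TLS_VERIFY="1"
export DOCKER_HOST="tcp://192.168.49.2:2376"
export DOCKER_CERT_PATH="/home/dev/.minikube/certs"
export MINIKUBE_ACTIVE_DOCKERD="minikube"

# To point your shell to minikube's docker-daemon, run:
# eval $(minikube -p minikube docker-env)`

func main() {
	env := parseDockerEnv(sample)
	fmt.Println("DOCKER_HOST =", env["DOCKER_HOST"])
}
```

Images built after running `eval $(minikube docker-env)` land directly in Minikube's image store, so a pod can use them without a registry as long as its `imagePullPolicy` does not force a pull (for example, `IfNotPresent` or `Never`).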

k3s: The Lightweight Kubernetes Distribution

k3s, developed by Rancher (now part of SUSE), is a lightweight, fully conformant Kubernetes distribution designed for production workloads in resource-constrained environments, remote locations, and IoT devices. Its small footprint also makes it an excellent choice for local development.

Core Concepts and Architecture

k3s achieves its small footprint by removing non-essential components (legacy and alpha features, non-default admission controllers) and replacing others with leaner alternatives. For example, it uses SQLite as the default datastore instead of etcd (etcd, PostgreSQL, and MySQL are supported for HA). It also bundles containerd, Flannel, CoreDNS, Traefik (ingress), and a local path provisioner into a single binary of less than 100MB.

The architecture consists of k3s server nodes, which run the control plane, and k3s agent nodes, which run workloads. For local use, a single-server setup is common.

![k3s Architecture [23]](/blog/minikube-vs-k3s-vs-kind-comparison-local-kubernetes-development/2.png)

Key Features

- Small Binary Size: Less than 100MB.
- Low Resource Usage: Can run on as little as 512MB RAM and 1 CPU.
- ARM Support: Excellent for Raspberry Pi and other ARM-based devices.
- Simplified Operations: Easy to install and manage.
- CNCF Certified: Fully conformant Kubernetes distribution.
- Built-in Components: Includes ingress (Traefik), a service load balancer (Klipper), and a local storage provisioner.

Installation and Basic Usage

Installation is typically a one-liner:

```shell
curl -sfL https://get.k3s.io | sh -
```

This installs k3s as a service, and the kubeconfig file is placed at `/etc/rancher/k3s/k3s.yaml`. To add agent nodes:

```shell
curl -sfL https://get.k3s.io | K3S_URL=https://myserver:6443 K3S_TOKEN=mynodetoken sh -
```

For local development, k3d, a helper utility, is often used to run k3s clusters in Docker.
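The agent join command above is driven by two environment variables. The Go sketch below, a hypothetical helper that is not part of k3s, builds them: `K3S_URL` points at the server's Kubernetes API on port 6443, and `K3S_TOKEN` is the node token, which a default install stores on the server at `/var/lib/rancher/k3s/server/node-token`. `myserver` and `mynodetoken` are the same placeholders used in the command above.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"net/url"
	"strings"
)

// agentEnv returns the two variables the k3s install script reads when
// joining an agent: K3S_URL (the server's API endpoint on port 6443) and
// K3S_TOKEN (the server's node token).
func agentEnv(server, token string) ([]string, error) {
	if server == "" {
		return nil, errors.New("server host must not be empty")
	}
	if token == "" {
		return nil, errors.New("K3S_TOKEN must not be empty")
	}
	// JoinHostPort also brackets IPv6 addresses correctly.
	u := url.URL{Scheme: "https", Host: net.JoinHostPort(server, "6443")}
	return []string{"K3S_URL=" + u.String(), "K3S_TOKEN=" + token}, nil
}

func main() {
	// Placeholders from the article's example command.
	env, err := agentEnv("myserver", "mynodetoken")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("curl -sfL https://get.k3s.io | %s sh -\n", strings.Join(env, " "))
}
```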

Resource Requirements

- Minimum for Server: 512MB RAM, 1 CPU.
- Minimum for Agent: 512MB RAM, with about 75MB of RAM per agent. A comparative test of k3d (which runs k3s) showed memory usage of around 423-502 MiB.

Common Issues and Troubleshooting

- Permissions: Ensure the installation script is run with appropriate permissions.
- Hostname Uniqueness: Each node in a k3s cluster must have a unique hostname.
- Database Locking: SQLite can experience locking in some scenarios; for more robust HA, consider an external datastore.
- Logs can be found in `/var/log/k3s.log` (OpenRC) or via `journalctl -u k3s` (systemd).

Best Practices

- For edge/IoT, leverage its small footprint and ARM support.
- Use the embedded SQLite for simple single-server setups; consider etcd or an external SQL database for HA production clusters.
- Regularly review security configurations and apply hardening guides if used in production.
- For local development and CI, tools like k3d simplify managing k3s clusters in Docker containers.
- Understand that some non-core Kubernetes features might be removed; test application compatibility if migrating from full Kubernetes.
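The datastore advice above maps onto a small set of `k3s server` options: no flag keeps the default SQLite, `--cluster-init` starts an HA cluster with embedded etcd, and `--datastore-endpoint` points k3s at an external MySQL, PostgreSQL, or etcd. The Go sketch below only selects those flags; the `Datastore` type and `serverArgs` helper are illustrative names of our own, and the PostgreSQL connection string is a made-up example.

```go
package main

import (
	"errors"
	"fmt"
)

// Datastore selects the backing store for a k3s server.
type Datastore int

const (
	SQLite       Datastore = iota // default; suited to a single server
	EmbeddedEtcd                  // HA using k3s's embedded etcd
	External                      // external MySQL, PostgreSQL, or etcd
)

// serverArgs returns `k3s server` arguments for the chosen datastore.
func serverArgs(ds Datastore, endpoint string) ([]string, error) {
	switch ds {
	case SQLite:
		return []string{"server"}, nil // no flag needed: SQLite is the default
	case EmbeddedEtcd:
		return []string{"server", "--cluster-init"}, nil
	case External:
		if endpoint == "" {
			return nil, errors.New("an external datastore needs an endpoint")
		}
		return []string{"server", "--datastore-endpoint=" + endpoint}, nil
	default:
		return nil, fmt.Errorf("unknown datastore %d", ds)
	}
}

func main() {
	// Hypothetical PostgreSQL connection string, for illustration only.
	dsn := "postgres://k3s:secret@db.example.internal:5432/k3s"
	for _, ds := range []Datastore{SQLite, EmbeddedEtcd, External} {
		args, err := serverArgs(ds, dsn)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println("k3s", args)
	}
}
```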

Kind: Kubernetes IN Docker

kind (Kubernetes IN Docker) is a tool primarily designed for testing Kubernetes itself, but it is also widely used for local development and CI/CD. It runs Kubernetes clusters by using Docker containers as "nodes."

Core Concepts and Architecture

Each node in a kind cluster is a Docker container that runs the kubelet and is bootstrapped with kubeadm. Because nodes are just containers, clusters are very fast to create and destroy, and multi-node clusters are easy to set up, which is a significant advantage for testing scenarios that require them.

![Kind Architecture [24]](/blog/minikube-vs-k3s-vs-kind-comparison-local-kubernetes-development/3.png)

Key Features

- Fast Cluster Creation/Deletion: Ideal for CI pipelines and ephemeral development environments.
- Multi-node Clusters: Natively supports creating multi-node clusters (including HA control planes) with simple configuration.
- Kubernetes Version Flexibility: Easily test against different Kubernetes versions by specifying the node image.
- CNCF Certified: Produces conformant Kubernetes clusters.
- Offline Support: Can operate without an internet connection if node images are pre-pulled.

Installation and Basic Usage

Installation involves downloading the kind binary. Docker must be installed.

To create a cluster:

```shell
kind create cluster
```

To create a multi-node cluster, a simple YAML configuration file can be used:

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
```

Save it as `kind-multi-node.yaml`, then run `kind create cluster --config kind-multi-node.yaml`.

Local Docker images are loaded into the cluster with `kind load docker-image my-custom-image:latest`.

Resource Requirements

kind is generally lightweight.

- No official minimums are strictly defined, but kind relies on Docker, so Docker's resource allocation is key.
- Anecdotal evidence suggests an idle single-node kind cluster uses ~30% of a CPU core, and a 1 control-plane + 3 worker setup might use 40-60% on a 4-CPU Docker Desktop allocation.
- A comparative test showed kind using ~463-581 MiB of RAM for the cluster itself. It's generally considered lighter than Minikube's Docker driver setup.

Common Issues and Troubleshooting

- Docker Resources: Insufficient CPU, memory, or disk allocated to Docker can cause failures. Docker Desktop users on Mac/Windows should ensure Docker has at least 6-8GB of RAM for kind.
- Networking: Because of Docker networking, accessing cluster services from the host can require `extraPortMappings` in the kind configuration.
- `kubectl` Version Skew: Ensure `kubectl` is not too skewed from the Kubernetes version running in kind.
- `kind export logs` is the primary command for gathering troubleshooting information.
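"Not too skewed" has a concrete meaning: Kubernetes' version skew policy supports `kubectl` within one minor version (older or newer) of the API server. The Go sketch below encodes that rule for version strings such as those reported by `kubectl version`; `majorMinor` and `withinSkew` are our own illustrative helpers, and patch numbers and pre-release suffixes are ignored.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// majorMinor parses versions such as "v1.30.2" or "1.29" into major and minor numbers.
func majorMinor(v string) (int, int, error) {
	parts := strings.SplitN(strings.TrimPrefix(v, "v"), ".", 3)
	if len(parts) < 2 {
		return 0, 0, fmt.Errorf("invalid version %q", v)
	}
	major, err := strconv.Atoi(parts[0])
	if err != nil {
		return 0, 0, fmt.Errorf("invalid major in %q: %w", v, err)
	}
	minor, err := strconv.Atoi(parts[1])
	if err != nil {
		return 0, 0, fmt.Errorf("invalid minor in %q: %w", v, err)
	}
	return major, minor, nil
}

// withinSkew reports whether a kubectl version is within one minor version
// (older or newer) of the API server version.
func withinSkew(client, server string) (bool, error) {
	cMajor, cMinor, err := majorMinor(client)
	if err != nil {
		return false, err
	}
	sMajor, sMinor, err := majorMinor(server)
	if err != nil {
		return false, err
	}
	diff := cMinor - sMinor
	return cMajor == sMajor && diff >= -1 && diff <= 1, nil
}

func main() {
	// Example versions; compare against the output of `kubectl version`.
	for _, pair := range [][2]string{{"v1.30.2", "v1.29.4"}, {"v1.31.0", "v1.29.4"}} {
		ok, err := withinSkew(pair[0], pair[1])
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("kubectl %s vs server %s: supported=%v\n", pair[0], pair[1], ok)
	}
}
```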

Best Practices

- Leverage fast startup/teardown for CI/CD and ephemeral testing environments.
- Use declarative configuration files for reproducible single-node or multi-node clusters, especially for specifying Kubernetes versions, port mappings, and extra mounts.
- Use `kind load docker-image` to quickly get locally built images into your cluster nodes without pushing to a registry.
- For CI, pin the kind node image version for consistency.
- Ensure Docker is allocated sufficient resources, especially when running multi-node clusters or resource-intensive workloads.
- Clean up unused clusters (`kind delete cluster`) to free up resources.
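Pinning means referring to a specific node image rather than a floating tag. As a sketch, the Go helper below (`isPinned`, a name of our own) treats a reference as pinned when it carries a `sha256` digest or an explicit tag other than `latest`; `kindest/node:v1.29.2` is used only as an example version. A pinned image can then be passed with `kind create cluster --image <ref>` or set per node with the `image` field in a kind config file.

```go
package main

import (
	"fmt"
	"strings"
)

// isPinned reports whether an image reference fixes a specific version:
// either a sha256 digest or an explicit tag other than "latest".
func isPinned(ref string) bool {
	name, digest, hasDigest := strings.Cut(ref, "@")
	if hasDigest {
		return strings.HasPrefix(digest, "sha256:") && len(digest) > len("sha256:")
	}
	// Only a ':' after the last '/' marks a tag; earlier ones are registry ports.
	slash := strings.LastIndex(name, "/")
	colon := strings.LastIndex(name, ":")
	if colon <= slash {
		return false
	}
	tag := name[colon+1:]
	return tag != "" && tag != "latest"
}

func main() {
	for _, ref := range []string{"kindest/node:v1.29.2", "kindest/node:latest", "kindest/node"} {
		fmt.Printf("%-22s pinned=%v\n", ref, isPinned(ref))
	}
}
```

A digest is the stricter choice, since a tag can in principle be re-pushed while a digest always identifies the same image content.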

Side-by-Side Comparison

| Feature | Minikube | k3s (via k3d for local) | kind |
|---|---|---|---|
| Primary Goal | Local app development | Lightweight K8s for edge, IoT, dev, CI | Testing K8s, local dev, CI |
| Underlying Tech | VM, Docker container, bare metal (Linux) | Lightweight binary (SQLite default); k3d runs it in Docker | Docker containers as nodes |
| Installation | Binary + hypervisor/Docker | Single binary / simple script (k3d for local Docker setup) | Single binary + Docker |
| Startup Time | Slower (VM), moderate (Docker) | Fastest (especially via k3d) | Very fast |
| Resource Usage | Higher (VM), moderate (Docker) | Lowest | Low |
| Multi-node | Yes (experimental, can be complex) | Yes (easy via k3d) | Yes (core feature, easy config) |
| Add-ons/Ecosystem | Rich (dashboard, ingress, etc.) | Lean, built-in essentials (Traefik) | Minimal; requires manual setup (e.g., ingress) |
| Persistent Storage | HostPath, built-in provisioners | local-path-provisioner by default | Docker volumes; manual config for advanced setups |
| Networking | `minikube tunnel` for LoadBalancer | Flannel, Klipper LB built in | Docker networking; port mapping needed |
| OS Support | macOS, Windows, Linux | Linux native; macOS/Windows via k3d or a VM | macOS, Windows, Linux (via Docker) |
| K8s Version Control | Yes | Releases track upstream Kubernetes versions; k3d allows selection | Yes (via node image) |
| Community/Support | Kubernetes SIG, mature | Rancher/SUSE, growing (strong in edge) | Kubernetes SIG, strong for K8s testing |

When to Choose Which Tool

Choose Minikube if:

- You need a stable, well-established tool with a wide range of built-in add-ons and features like the dashboard.
- You prefer or require an isolated VM-based environment (though Docker is also an option).
- You are a beginner looking for a guided introduction to Kubernetes.
- You need straightforward `minikube tunnel` support for LoadBalancer services.

Choose k3s (often with k3d for local use) if:

- You need the absolute lightest resource footprint and fastest startup times.
- You are developing for edge, IoT, or other resource-constrained environments.
- You want a simple, production-ready, and conformant Kubernetes distribution with sensible defaults.
- You appreciate a single binary for easier management and deployment.

Choose kind if:

- Your primary need is fast creation and teardown of multi-node clusters, especially for CI/CD pipelines or testing Kubernetes controllers/operators.
- You want to test different Kubernetes versions easily.
- You are comfortable with Docker and with managing some components, such as ingress controllers, manually.
- You need to run Kubernetes inside Docker, for example in CI setups or environments where nested virtualization is unavailable.

For data streaming application development, any of these tools can host a simple Kafka setup for local testing. kind and Minikube are explicitly mentioned in some guides for setting up local development clusters for such purposes. The choice will depend on your familiarity and the specific requirements listed above. For instance, some vendor documentation for deploying their gateway locally specifically mentions Minikube as a requirement for their example setup.

Conclusion

Minikube, k3s, and kind each offer valuable capabilities for local Kubernetes development. Minikube provides a feature-rich, stable environment. k3s offers an extremely lightweight, production-grade distribution ideal for resource-constrained scenarios and rapid local setups (often via k3d). kind excels at quickly spinning up conformant multi-node clusters for testing and CI.

Understanding their architectural differences, resource implications, and feature sets, as outlined in this comparison, will empower you to select the tool that best aligns with your development workflow, resource availability, and specific project needs.

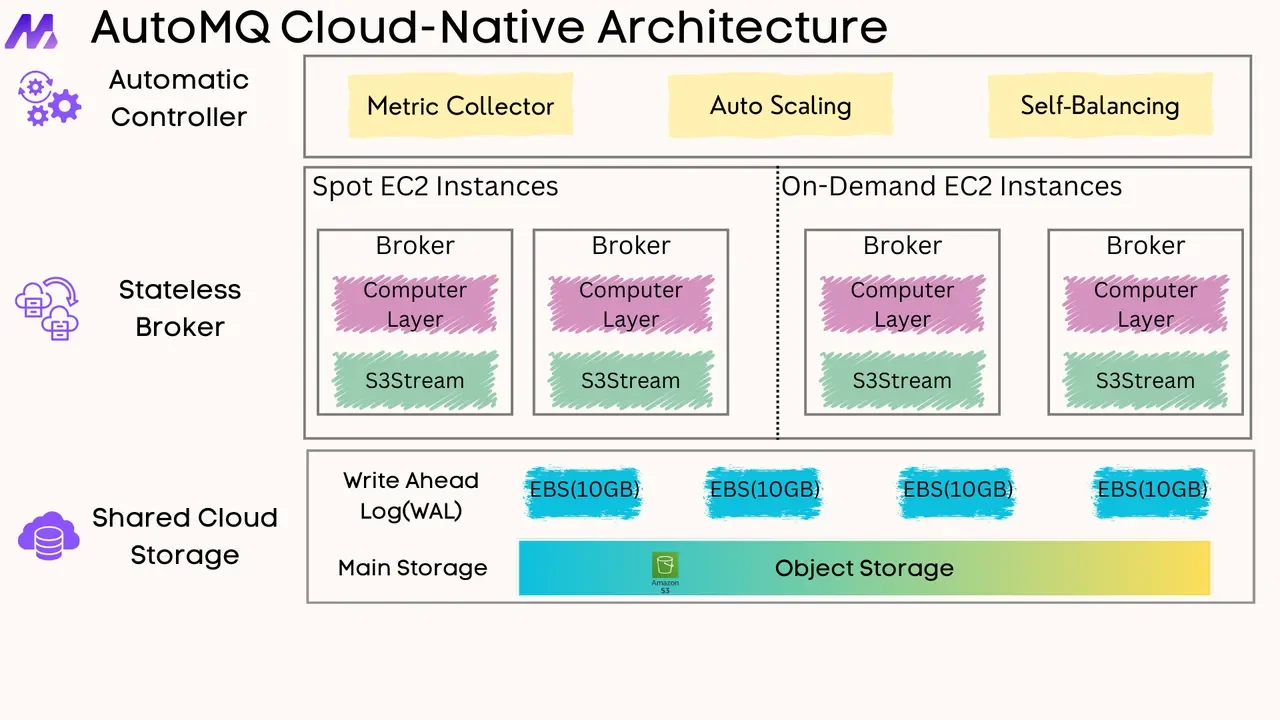

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS: 10x more cost-effective, no cross-AZ traffic cost, autoscaling in seconds, and single-digit-millisecond latency. AutoMQ is now source-available on GitHub, and large companies worldwide use it. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in DataStreaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging