Overview

The world is awash in data, and the tide is only rising. In 2025, the sheer volume, velocity, and variety of data being generated continue to demand robust and sophisticated data engineering practices. Data engineering, the discipline of designing, building, and maintaining the systems and architectures that collect, store, process, and analyze data at scale, has become the bedrock of modern data-driven organizations. As businesses increasingly rely on data for everything from operational efficiency to AI-driven innovation, the choice of data engineering tools is more critical than ever. This post explores some of the top data engineering tools shaping the landscape in 2025.

Data Ingestion & Integration (ELT/ETL, CDC, Streaming)

These tools collect data from various sources (databases, SaaS applications, APIs, logs) and load it into target systems. The trend is toward ELT (Extract, Load, Transform) over traditional ETL, especially with cloud data warehouses, alongside growing demand for real-time streaming through Change Data Capture (CDC).

![Data Ingestion Overview [25]](/blog/top-data-engineering-tools-2025-data-integration-storage-processing/1.png)
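To make the CDC-based ELT pattern concrete, here is a minimal Python sketch of what the load side of a CDC pipeline does: it replays an ordered stream of insert, update, and delete events onto a copy of a source table, starting from an initial snapshot. The `ChangeEvent` shape and the `apply_changes` helper are hypothetical; real CDC tools use their own event formats and also deal with schema changes, transactions, and delivery guarantees.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical, simplified CDC event (real tools emit richer envelopes)."""
    op: str                 # "insert", "update", or "delete"
    key: Any                # primary key of the affected row
    row: Optional[dict]     # row image after the change; None for deletes
    lsn: int                # source log position, used to replay in commit order


def apply_changes(table: Dict[Any, dict], events: List[ChangeEvent]) -> Dict[Any, dict]:
    """Replay change events onto a target table keyed by primary key (mutates `table`)."""
    for event in sorted(events, key=lambda e: e.lsn):
        if event.op in ("insert", "update"):
            table[event.key] = dict(event.row)  # upsert: the latest change wins
        elif event.op == "delete":
            table.pop(event.key, None)          # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown op: {event.op!r}")
    return table


# Start from an initial snapshot (the backfill), then apply the change stream.
target = {1: {"id": 1, "name": "Ada"}}
events = [
    ChangeEvent("update", 1, {"id": 1, "name": "Ada L."}, lsn=11),
    ChangeEvent("insert", 2, {"id": 2, "name": "Grace"}, lsn=10),
    ChangeEvent("delete", 1, None, lsn=12),
]
print(apply_changes(target, events))  # {2: {'id': 2, 'name': 'Grace'}}
```

Sorting by log position is what keeps the replica consistent when events arrive out of order, and because every operation is an upsert or a delete, replaying the same batch after a failure yields the same final table.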

Apache Kafka:

- Overview: A distributed event streaming platform capable of handling high-volume, real-time data feeds. It uses a publish-subscribe model with durable, fault-tolerant storage.
- Pros: Highly scalable, low latency, robust ecosystem, strong for microservices and event-driven architectures.
- Cons: Can be complex to manage and operate at scale without managed services; its traditional architecture couples compute and storage, which can lead to scaling challenges in some self-managed scenarios.
- Use Cases: Real-time analytics, log aggregation, event sourcing, feeding data lakes and warehouses.
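Kafka's publish-subscribe model rests on a few ideas: records with the same key land in the same partition, each partition is an append-only log, and each consumer group tracks its own offset into that log. The pure-Python sketch below models only those ideas. `ToyLog` is hypothetical and is not Kafka's client API (against a real cluster you would use a client library such as `kafka-python` or `confluent-kafka`), and it hashes keys with `zlib.crc32` for simplicity, whereas Kafka's default partitioner uses murmur2.

```python
import zlib
from collections import defaultdict


class ToyLog:
    """Hypothetical toy model of a Kafka-style topic (not Kafka's client API).

    Each partition is an append-only list; each consumer group keeps its own
    committed offset per partition, so groups read independently.
    """

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]
        # committed[group][p] is the next offset that group will read from partition p
        self.committed = defaultdict(lambda: [0] * num_partitions)

    def publish(self, key, value):
        # Same key -> same partition, which preserves per-key ordering.
        # Assumption: crc32 stands in for Kafka's murmur2-based default partitioner.
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def poll(self, group, partition, max_records=10):
        start = self.committed[group][partition]
        batch = self.partitions[partition][start:start + max_records]
        self.committed[group][partition] = start + len(batch)  # commit after reading
        return batch


log = ToyLog(num_partitions=3)
p, _ = log.publish("user-42", "login")
log.publish("user-42", "click")
print(log.poll("analytics", p))  # [('user-42', 'login'), ('user-42', 'click')]
print(log.poll("analytics", p))  # []: this group's offset has moved past both records
print(log.poll("archiver", p))   # a second group still sees both records
```

Because reading only advances a per-group offset instead of deleting data, many downstream systems (a warehouse loader, a search indexer, an archiver) can consume the same stream at their own pace.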

Fivetran:

- Overview: A popular managed ELT service that automates data movement from hundreds of sources to cloud data warehouses. It focuses on pre-built, maintenance-free connectors.
- Pros: Ease of use, wide range of connectors, automated schema migration, reliable.
- Cons: Pricing based on Monthly Active Rows (MAR) can become expensive for high-volume or frequently changing data; less flexibility than custom solutions.
- Use Cases: Centralizing SaaS application data, database replication, populating data warehouses for BI.
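Because billing follows Monthly Active Rows, the main cost driver is how many distinct rows change in a month rather than how many times each one changes. The sketch below counts MAR under that simplified reading (one count per distinct table and primary key per calendar month). `monthly_active_rows` is a hypothetical helper, and Fivetran's actual billing rules are more detailed, so check its pricing documentation before budgeting.

```python
from collections import defaultdict
from datetime import date


def monthly_active_rows(changes):
    """Hypothetical MAR estimate: distinct (table, primary_key) pairs changed per month.

    Simplified model: a row counts once per calendar month no matter how often
    it changes within that month.
    """
    active = defaultdict(set)
    for table, pk, changed_on in changes:
        active[(changed_on.year, changed_on.month)].add((table, pk))
    return {month: len(keys) for month, keys in sorted(active.items())}


changes = [
    ("orders", 1, date(2025, 1, 3)),
    ("orders", 1, date(2025, 1, 20)),  # same row again in January: still counted once
    ("orders", 2, date(2025, 1, 21)),
    ("orders", 1, date(2025, 2, 1)),   # counted again in February
]
print(monthly_active_rows(changes))  # {(2025, 1): 2, (2025, 2): 1}
```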

Airbyte:

- Overview: An open-source data integration platform with a rapidly growing list of connectors, offering both self-hosted and cloud versions.
- Pros: Open-source and extensible (Connector Development Kit, or CDK), large connector library, flexible pricing for the cloud version.
- Cons: Can be resource-intensive to self-host; some connectors may be less mature than commercial alternatives; the UI/UX is still evolving.
- Use Cases: Similar to Fivetran; especially suitable for teams needing more control or custom connector development.
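Airbyte connectors communicate through the Airbyte protocol: a source writes newline-delimited JSON messages, and data rows travel as `RECORD` messages carrying the stream name, the row payload, and an `emitted_at` timestamp in epoch milliseconds. The sketch below builds such messages by hand to show the shape a custom connector produces. `record_messages` is a hypothetical helper; in practice the CDK generates these messages for you, and other message types such as `STATE`, `SPEC`, and `CATALOG` are omitted here.

```python
import json
import time
from typing import Iterable, Iterator, Optional


def record_messages(stream: str, rows: Iterable[dict],
                    emitted_at_ms: Optional[int] = None) -> Iterator[str]:
    """Hypothetical helper: yield one JSON line per row, shaped like an Airbyte RECORD message."""
    ts = emitted_at_ms if emitted_at_ms is not None else int(time.time() * 1000)
    for row in rows:
        message = {"type": "RECORD",
                   "record": {"stream": stream, "data": row, "emitted_at": ts}}
        yield json.dumps(message)


# A source connector writes these lines to stdout; the platform routes them to a destination.
for line in record_messages("users", [{"id": 1, "email": "a@example.com"}], emitted_at_ms=0):
    print(line)
```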

AWS Glue:

- Overview: A fully managed ETL service from Amazon Web Services that makes it easy to prepare and load data for analytics. It includes a data catalog, ETL job authoring, and scheduling.
- Pros: Serverless, pay-as-you-go, integrates well with other AWS services, automatic schema detection via crawlers.
- Cons: Has a learning curve; jobs are primarily Spark-based, which can be overkill for simple tasks; managing dependencies for custom scripts can be tricky.
- Use Cases: ETL for data in S3, data preparation for Redshift, building a centralized data catalog.

Informatica Intelligent Data Management Cloud (IDMC):

- Overview: A comprehensive, AI-powered, cloud-native platform offering a suite of data management services, including data integration, API management, application integration, and master data management (MDM).
- Pros: Enterprise-grade, extensive connectivity, strong data governance and quality features, recognized as a leader by industry analysts.
- Cons: Can be complex; pricing may be high for smaller organizations; some users report challenges with error reporting or with the performance of specific connectors.
- Use Cases: Enterprise-wide data integration, cloud data warehousing, application integration, master data management.

Estuary Flow:

- Overview: A platform for real-time data integration that unifies batch and streaming workloads, handling historical backfills and CDC within the same pipeline with low latency.
- Pros: Unified batch and streaming, real-time SQL transformations, schema enforcement and versioning, developer-friendly with both a UI and a CLI.
- Cons: As a newer entrant, its ecosystem and community may be smaller than those of more established tools.
- Use Cases: Real-time data warehousing, operational analytics, building event-driven applications.

Data Storage & Management (Cloud Data Warehouses, Lakehouses)

These systems are the heart of the data architecture, providing scalable and efficient storage and query capabilities.

Snowflake:

- Overview: A cloud-native data platform covering data warehousing, data lakes, data engineering, data science, and data application development, with an architecture that separates storage, compute, and services.
- Pros: Excellent scalability (independent scaling of storage and compute), multi-cloud support, easy data sharing, broad ecosystem support, robust security features.
- Cons: Can be expensive if compute resources are not managed carefully; initial setup and optimization can require expertise.
- Use Cases: Cloud data warehousing, data lake augmentation, BI and reporting, data sharing, data applications.
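Most of Snowflake's compute cost comes from virtual warehouses, which consume credits at a rate set by their size (doubling with each size step) and are billed per second with a 60-second minimum each time a warehouse resumes. The sketch below, a hypothetical estimator that covers standard warehouses only, shows why many short, separately resumed runs can cost far more than the same work batched together. Run time includes any idle period before auto-suspend, and the dollar price per credit depends on edition, cloud, and region, so it is left out.

```python
from typing import Sequence

# Credits per hour for standard virtual warehouses; each size step doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16, "2XL": 32, "3XL": 64, "4XL": 128}


def warehouse_credits(size: str, run_seconds: Sequence[float]) -> float:
    """Hypothetical estimator: credits for a list of resume-to-suspend run periods.

    Each run is billed per second, with a 60-second minimum per resume.
    """
    rate = CREDITS_PER_HOUR[size]
    billed_seconds = sum(max(s, 60) for s in run_seconds)
    return rate * billed_seconds / 3600


# 100 tiny jobs that each resume an XS warehouse for 10 s (each billed as 60 s)...
print(round(warehouse_credits("XS", [10] * 100), 3))  # 1.667
# ...versus the same 1,000 s of work done in one batched run.
print(round(warehouse_credits("XS", [1000]), 3))      # 0.278
```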

Google BigQuery:

- Overview: A serverless, highly scalable, and cost-effective multicloud data warehouse with built-in ML, geospatial analysis, and BI capabilities.
- Pros: Serverless (no infrastructure to manage), excellent performance for large queries, strong integration with the Google Cloud ecosystem and AI tools (Gemini in BigQuery), support for open table formats.
- Cons: The pricing model, while flexible (on-demand billing by bytes processed or capacity-based slot pricing), takes some understanding to optimize costs.
- Use Cases: Large-scale analytics, real-time analytics with streaming, machine learning with BigQuery ML, BI dashboards.
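Under on-demand pricing, BigQuery bills by bytes processed. Because storage is columnar, a query reads only the columns it references, and on a partitioned table a filter on the partitioning column limits the scan to matching partitions; adding `LIMIT` generally does not reduce the bytes processed. The back-of-the-envelope sketch below shows why `SELECT *` over a whole table costs far more than a query that names two columns and filters to one week. `estimate_scanned_bytes` and the table sizes are hypothetical; for a real figure, run the query as a dry run and multiply by your region's current on-demand price.

```python
from typing import Mapping, Sequence

TiB = 2**40


def estimate_scanned_bytes(column_bytes: Mapping[str, int], selected: Sequence[str],
                           partitions_total: int, partitions_read: int) -> int:
    """Hypothetical rough estimate of bytes processed on a partitioned, columnar table.

    Assumes data is spread evenly across partitions and ignores clustering,
    caching, and minimum-billing rules.
    """
    selected_bytes = sum(column_bytes[c] for c in selected)  # only referenced columns are read
    return selected_bytes * partitions_read // partitions_total  # only surviving partitions are read


# Hypothetical 40 TiB events table, partitioned by day over one year.
columns = {"event_ts": 2 * TiB, "user_id": 1 * TiB, "payload": 37 * TiB}
full_scan = estimate_scanned_bytes(columns, list(columns), 365, 365)    # SELECT * over all days
pruned = estimate_scanned_bytes(columns, ["event_ts", "user_id"], 365, 7)  # 2 columns, last 7 days
print(f"{full_scan / TiB:.1f} TiB vs {pruned / TiB:.3f} TiB")
```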

Amazon Redshift:

- Overview: A fully managed, petabyte-scale cloud data warehouse service from AWS, designed for high performance and cost-effectiveness.
- Pros: Integrates deeply with the AWS ecosystem; offers RA3 instances with managed storage for independent scaling of compute and storage, concurrency scaling, materialized views, and Redshift ML.
- Cons: Can require more tuning for optimal performance than some competitors; configuring workload management (WLM) effectively can be complex.
- Use Cases: BI and reporting, log analysis, real-time analytics (with streaming ingestion), data lake querying with Redshift Spectrum.

Databricks (Lakehouse Platform featuring Delta Lake):

- Overview: A unified analytics platform built around Apache Spark that popularized the "lakehouse" concept, combining the benefits of data lakes and data warehouses using Delta Lake.
- Delta Lake: An open-source storage layer that brings ACID transactions, scalable metadata handling, schema enforcement and evolution, and time travel to data lakes.
- Pros: Unified platform for data engineering, data science, and ML; excellent performance via Spark; robust data reliability with Delta Lake; collaborative notebooks.
- Cons: Can be perceived as expensive (DBU-based pricing); the platform can feel complex to some users.
- Use Cases: Large-scale ETL/ELT, streaming analytics, machine learning model development and deployment, building reliable data lakes.
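Delta Lake's ACID guarantees and time travel both come from its transaction log: each commit atomically records which data files were added or removed, and any past version of the table can be rebuilt by replaying the log up to that commit. The toy class below models only that idea with in-memory lists; `ToyTransactionLog` is hypothetical and does not reflect Delta's on-disk log format. With the real library on Spark, reading an older version looks like `spark.read.format("delta").option("versionAsOf", 0).load(path)`.

```python
class ToyTransactionLog:
    """Hypothetical toy model of a Delta-style transaction log.

    Each commit is a list of ("add" | "remove", file) actions; table version v
    is whatever set of files results from replaying commits 0..v.
    """

    def __init__(self):
        self.commits = []

    def commit(self, actions):
        # Validate first so a bad commit leaves the log untouched (all-or-nothing).
        for op, _ in actions:
            if op not in ("add", "remove"):
                raise ValueError(f"unknown action: {op!r}")
        self.commits.append(list(actions))
        return len(self.commits) - 1  # the new version number

    def files_as_of(self, version=None):
        """Time travel: replay the log up to `version` (default: latest)."""
        last = len(self.commits) - 1 if version is None else version
        live = set()
        for actions in self.commits[: last + 1]:
            for op, path in actions:
                if op == "add":
                    live.add(path)
                else:
                    live.discard(path)
        return live


log = ToyTransactionLog()
v0 = log.commit([("add", "part-0.parquet"), ("add", "part-1.parquet")])
# An UPDATE rewrites part-1 as part-2 in a single atomic commit.
v1 = log.commit([("remove", "part-1.parquet"), ("add", "part-2.parquet")])
print(sorted(log.files_as_of()))    # ['part-0.parquet', 'part-2.parquet']
print(sorted(log.files_as_of(v0)))  # ['part-0.parquet', 'part-1.parquet']
```

Readers never see a half-applied update: a version either includes the whole remove/add pair or neither half of it.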

Apache Iceberg & Apache Hudi:

- Overview: Open table formats that bring ACID transactions, schema evolution, and time travel to data lakes, similar to Delta Lake. They are engine-agnostic, supporting engines such as Spark, Trino, and Flink.
- Pros: Enable data warehouse capabilities on data lakes, help avoid vendor lock-in, and improve data reliability and manageability. Hudi offers specific features such as copy-on-write and merge-on-read table types and advanced indexing.
- Cons: Still evolving; adoption requires understanding their specific semantics and integration points.
- Use Cases: Building open data lakehouses, modernizing existing data lakes, real-time analytics on lake data.
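Hudi's two table types trade write cost against read cost. In copy-on-write, an update rewrites the data file that holds the affected records, so reads stay a plain scan; in merge-on-read, updates are appended to log files and merged with the base files at query time until compaction folds them in. The toy classes below model that trade-off with in-memory dicts standing in for files; they are hypothetical illustrations, not Hudi's API or file layout.

```python
class CopyOnWriteTable:
    """Hypothetical toy: updates rewrite the base 'file'; reads are a plain scan."""

    def __init__(self, rows):
        self.base = dict(rows)        # stands in for a columnar base file
        self.files_rewritten = 0

    def upsert(self, key, value):
        self.base = {**self.base, key: value}  # write amplification: rewrite the whole file
        self.files_rewritten += 1

    def read(self):
        return dict(self.base)


class MergeOnReadTable:
    """Hypothetical toy: updates append to a log; reads merge base + log until compaction."""

    def __init__(self, rows):
        self.base = dict(rows)
        self.log = []                 # appended (key, value) updates

    def upsert(self, key, value):
        self.log.append((key, value))  # cheap append-only write

    def read(self):
        merged = dict(self.base)
        for key, value in self.log:    # read-time merge cost grows with the log
            merged[key] = value
        return merged

    def compact(self):
        self.base, self.log = self.read(), []


rows = {"a": 1, "b": 2}
cow, mor = CopyOnWriteTable(rows), MergeOnReadTable(rows)
for table in (cow, mor):
    table.upsert("b", 20)
    table.upsert("c", 3)
print(cow.read() == mor.read(), cow.files_rewritten, len(mor.log))  # True 2 2
```

Both tables return the same data; the difference is where the work happens, which is why write-heavy, near-real-time pipelines often lean toward merge-on-read and read-heavy analytics toward copy-on-write.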

Data Processing & Transformation

These tools are used to clean, reshape, aggregate, and enrich data, making it suitable for analysis.

![Data Transformation Process [26]](/blog/top-data-engineering-tools-2025-data-integration-storage-processing/2.png)

Apache Spark:

- Overview: A powerful open-source distributed processing engine for large-scale data workloads, supporting batch and real-time analytics through a unified API (Python, SQL, Scala, Java).
- Pros: High performance (especially with in-memory processing), versatile (SQL, streaming, ML, graph processing), large community, fault-tolerant.
- Cons: Self-hosted clusters can be complex to set up and manage; resource-intensive.
- Use Cases: Big data processing, ETL/ELT, machine learning pipelines, real-time stream processing.
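Part of Spark's performance on aggregations comes from combining data within each partition before shuffling it across the network. The pure-Python sketch below mimics that two-phase pattern for a word count; it is a conceptual model, not Spark code, and the function names are hypothetical. In PySpark the equivalent is roughly `sc.parallelize(lines).flatMap(str.split).map(lambda w: (w, 1)).reduceByKey(operator.add)`, where `reduceByKey` performs the map-side combine for you.

```python
from collections import Counter
from functools import reduce


def map_side_combine(partition):
    """Phase 1: aggregate within one partition (done locally on each executor in Spark)."""
    return Counter(word for line in partition for word in line.split())


def word_count(partitions):
    """Phase 2: merge the small per-partition results (the part that crosses the shuffle)."""
    partials = [map_side_combine(p) for p in partitions]  # sequential here; parallel in Spark
    return reduce(lambda a, b: a + b, partials, Counter())


partitions = [["to be or", "not to be"], ["to see"]]
print(word_count(partitions).most_common(2))  # [('to', 3), ('be', 2)]
```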

dbt (data build tool):

- Overview: A transformation workflow tool that enables data analysts and engineers to transform data in their warehouse more effectively using SQL. It brings software engineering best practices like version control, testing, and documentation to analytics code.
- Pros: SQL-first approach (accessible to analysts), promotes modular and reusable code, automated testing and documentation, strong community, integrates with major cloud data warehouses.
- Cons: Primarily focused on the "T" in ELT; orchestration is often handled by external tools (though dbt Cloud offers scheduling).
- Use Cases: Building analytics-ready data models, managing complex data transformations, implementing data quality tests.
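In dbt, a data test is a query that selects failing rows, and the test passes when it returns none; built-in generic tests such as `unique` and `not_null` are declared on a model's columns in YAML. The Python sketch below reproduces that "return the failures" logic on in-memory rows purely to illustrate the semantics. dbt itself compiles these tests to SQL that runs in the warehouse, and the function names here are hypothetical.

```python
from collections import Counter


def not_null_failures(rows, column):
    """Rows where `column` is missing or None; mirrors what dbt's `not_null` test selects."""
    return [r for r in rows if r.get(column) is None]


def unique_failures(rows, column):
    """Non-null values of `column` that occur more than once; mirrors dbt's `unique` test."""
    counts = Counter(r.get(column) for r in rows if r.get(column) is not None)
    return [value for value, n in counts.items() if n > 1]


orders = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 2, "customer_id": None},
    {"order_id": 2, "customer_id": 11},
]
checks = {
    "not_null(customer_id)": not_null_failures(orders, "customer_id"),
    "unique(order_id)": unique_failures(orders, "order_id"),
}
for name, failures in checks.items():
    # As in dbt, a test passes only when it returns zero failing rows.
    print(name, "PASS" if not failures else f"FAIL ({len(failures)})")
```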

Emerging Trends in Data Engineering Tools for 2025 and Beyond

The data engineering landscape is continuously evolving. Key trends for 2025 include:

- AI-Driven Data Engineering: AI and LLMs are increasingly embedded in data tools to assist with code generation (e.g., SQL, Python), automated code reviews, data quality anomaly detection, pipeline optimization, and even natural language querying.
- Serverless Architectures: More tools and platforms are adopting serverless paradigms, letting data engineers focus on logic rather than infrastructure management, with auto-scaling and potential cost savings.
- Real-Time Data Streaming: The demand for real-time insights continues to grow, driving wider adoption of streaming technologies and of tools that handle continuous data flows effectively.
- Data Products and Domain Ownership: The shift toward treating data as a product, often associated with Data Mesh, steers tool selection toward tools that support discoverability, quality, and clear ownership.
- Strengthened Data Governance & Privacy: With regulations like the EU AI Act, tools with robust governance, security, and privacy-enhancing features (e.g., automated classification, policy enforcement) are becoming non-negotiable.
- Cloud Cost Optimization: As cloud data footprints expand, tools that offer better visibility into costs and features for optimizing storage and compute are gaining importance.
- Open Table Formats as Standard: Apache Iceberg, Delta Lake, and Hudi are solidifying their role as the foundation for open, interoperable lakehouse architectures.

Conclusion

The data engineering landscape in 2025 is characterized by powerful tools and evolving architectural paradigms designed to manage increasingly complex data challenges. From real-time streaming and ELT automation to sophisticated data lakehouses and AI-driven capabilities, the right combination of tools can empower organizations to unlock significant value from their data assets. However, tool selection must be guided by clear business objectives, a strong understanding of core data concepts, and a commitment to best practices in data management and governance. As the field continues its rapid evolution, continuous learning and adaptation will be key for data engineering teams to thrive.

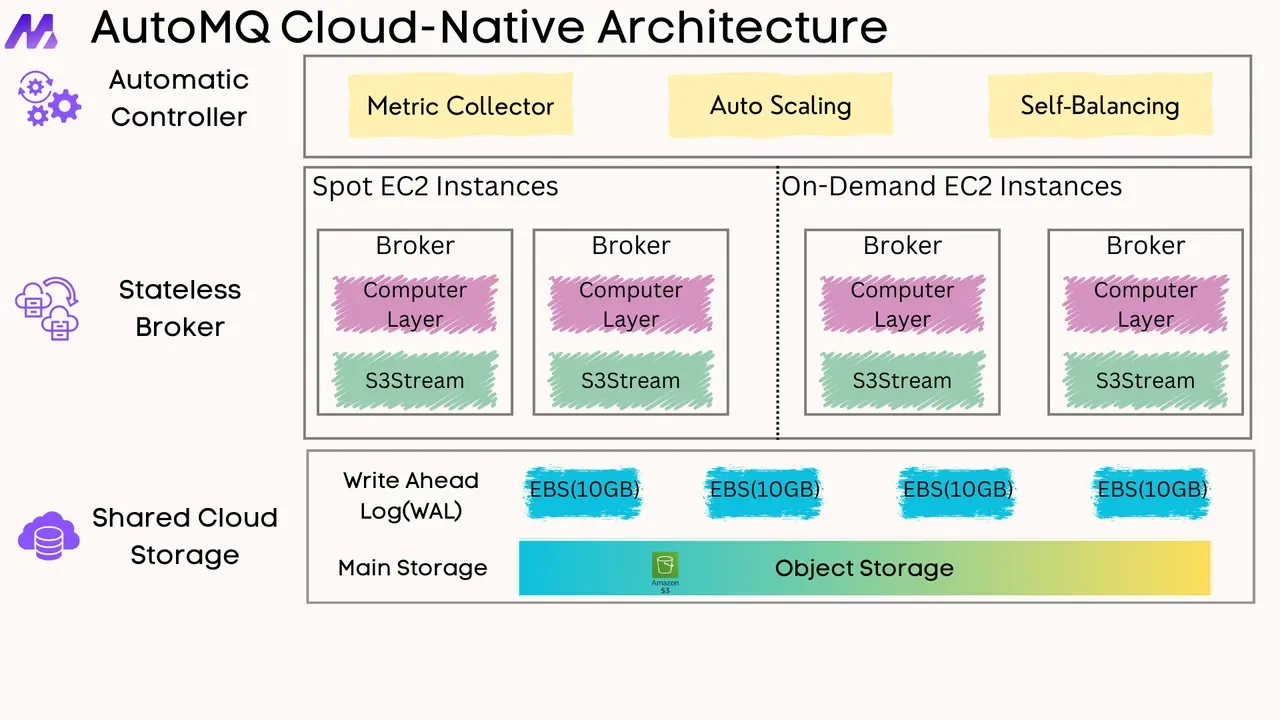

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS. It is 10x more cost-effective, incurs no cross-AZ traffic costs, autoscales in seconds, and delivers single-digit-millisecond latency. AutoMQ's source code is now available on GitHub, and big companies worldwide are using it. Check out the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in Data Streaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging