Overview

Event stream processing (ESP) is a data processing paradigm that involves analyzing and acting on continuous streams of data, known as events, in real time or near real time. This approach enables organizations to gain immediate insights and make timely decisions based on incoming data from various sources.

What is Event Stream Processing?

Event Stream Processing (ESP) is a technology designed to analyze and act on continuous flows of data, known as event streams, as they arrive. Unlike traditional batch processing, which collects and processes data in chunks at intervals, ESP processes events in motion, enabling immediate insights and responses. An "event" is a record of an action or occurrence, such as a website click, a sensor reading, a financial transaction, or a social media update. Event streams are often described as "unbounded data" because they have no predefined start or end and are continuously generated.

The core idea behind event stream processing is to derive value from data as soon as it's created. This is crucial in scenarios where the relevance of data diminishes rapidly with time. Key characteristics of event stream processing include:

- Real-time or Near Real-time Processing: Events are processed within milliseconds or seconds of their arrival.
- Continuous Data Flow: ESP systems are built to handle an unending sequence of events.
- Stateful Operations: Many ESP applications require maintaining state over time to detect patterns or anomalies across multiple events (e.g., calculating a running average or detecting a sequence of actions).
- Scalability and Fault Tolerance: ESP systems must be able to scale to handle high volumes of events and be resilient to failures to ensure continuous operation.
- Chronological Significance: The order and timing of events are often critical for accurate processing and analysis.
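To make the idea of a stateful operation concrete, here is a minimal Python sketch of the running average mentioned above. The `RunningAverage` class and the sample readings are illustrative assumptions, not part of any particular ESP framework; a real engine would also persist this state so it survives failures.

```python
from dataclasses import dataclass


@dataclass
class RunningAverage:
    """Hypothetical state holder: keeps only a count and a sum."""
    count: int = 0
    total: float = 0.0

    def update(self, value: float) -> float:
        # The state is updated once per event; past events are not stored.
        self.count += 1
        self.total += value
        return self.total / self.count


def running_averages(readings):
    """Yield the average so far after each reading arrives."""
    state = RunningAverage()
    for value in readings:
        yield state.update(value)


# Assumed sample data: three temperature readings arriving in order.
averages = list(running_averages([20.0, 22.0, 27.0]))
print(averages)
```

The point of the sketch is that the operator needs memory proportional to its state (two numbers here), not to the length of the stream.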

The significance of event stream processing in modern data architectures lies in its ability to power event-driven architectures (EDA). In an EDA, services react to events as they happen, leading to more responsive, scalable, and decoupled systems. This contrasts with request-driven architectures where services wait for explicit commands.
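The decoupling that an event-driven architecture provides can be sketched with a tiny in-process event bus. This is only an illustration under stated assumptions: `EventBus`, the `order_placed` topic, and both handlers are hypothetical names, and a production system would use a durable broker rather than in-memory callbacks.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Hypothetical in-memory bus: publishers never see the subscribers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Every handler registered for the topic reacts to the same event.
        for handler in self._subscribers.get(topic, []):
            handler(event)


emails: List[str] = []
stock = {"book": 3}


def send_receipt(event: dict) -> None:
    emails.append(f"receipt for order {event['order_id']}")


def reserve_stock(event: dict) -> None:
    stock[event["item"]] -= 1


bus = EventBus()
bus.subscribe("order_placed", send_receipt)
bus.subscribe("order_placed", reserve_stock)

# The producer only announces what happened; it issues no commands.
bus.publish("order_placed", {"order_id": 42, "item": "book"})
```

Adding a third reaction, such as fraud scoring, would mean registering one more subscriber, with no change to the code that publishes the event.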

![Overview of Event Stream Processing [29]](/blog/what-is-event-stream-processing/1.png)

Core Concepts and Terminology

Understanding event stream processing involves familiarity with several key concepts:

- Event: A data record representing an occurrence or action. Events are typically small, immutable, and carry a timestamp.
- Event Stream: An ordered, unbounded sequence of events of the same type or related types.
- Event Source/Producer: Applications or devices that generate events (e.g., IoT devices, web servers, mobile apps).
- Event Sink/Consumer: Applications or systems that consume and act upon the processed events (e.g., dashboards, alerting systems, databases).
- Event Broker (or Message Broker/Streaming Platform): A system that ingests event streams from producers, durably stores them, and makes them available to consumers or processing engines. Apache Kafka is a widely used example. Key components within brokers include:
  - Topics: Named channels to which events are published and from which they are consumed.
  - Partitions: Topics are often divided into partitions to enable parallelism and scalability. Ordering is typically guaranteed within a partition.
  - Offsets: A unique identifier for each event within a partition, indicating its position.
- Stream Processing Engine: The core component that executes the logic for transforming, analyzing, and enriching event streams. Examples include Apache Flink, Apache Spark Streaming, and Kafka Streams.
- Windowing: A technique used to divide an unbounded stream into finite "windows" for processing. Windows can be based on time (e.g., every 5 minutes) or count (e.g., every 100 events). Common window types include:
  - Tumbling Windows: Fixed-size, non-overlapping windows.
  - Sliding Windows: Fixed-size, overlapping windows.
  - Session Windows: Dynamically sized windows based on periods of activity followed by inactivity.
- State Management: The ability to store and update data derived from events over time. This is crucial for complex operations like aggregations, joins, or pattern detection.
- Watermarks: A mechanism in stream processing to estimate the progress of event time, helping to handle out-of-order events.
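Windowing and watermarks are easier to follow with a small example. The sketch below counts events in tumbling windows and uses a simple watermark (the largest event time seen so far, minus a fixed lateness allowance) to decide when a window can be closed. The window size, the lateness allowance, and the function name are assumptions chosen for illustration; real engines offer more refined watermark strategies.

```python
from collections import defaultdict

WINDOW_SIZE = 60       # seconds per tumbling window (assumed value)
ALLOWED_LATENESS = 10  # watermark trails the max event time by this much (assumed)


def tumbling_counts(events):
    """Yield (window_start, count) each time the watermark closes a window.

    `events` is an iterable of (event_time_seconds, payload) pairs that
    may arrive slightly out of order.
    """
    open_windows = defaultdict(int)  # window_start -> events counted so far
    max_event_time = float("-inf")

    for event_time, _payload in events:
        max_event_time = max(max_event_time, event_time)
        watermark = max_event_time - ALLOWED_LATENESS

        window_start = (event_time // WINDOW_SIZE) * WINDOW_SIZE
        if window_start + WINDOW_SIZE <= watermark:
            # The event's window is already closed, so it is dropped as late.
            continue
        open_windows[window_start] += 1

        # Emit every window whose end is at or before the watermark.
        for start in sorted(open_windows):
            if start + WINDOW_SIZE <= watermark:
                yield start, open_windows.pop(start)

    # End of the (finite) sample input: flush whatever is still open.
    for start in sorted(open_windows):
        yield start, open_windows[start]


sample = [(5, "a"), (50, "b"), (62, "c"), (58, "d"), (75, "e"), (30, "f"), (130, "g")]
results = list(tumbling_counts(sample))
print(results)
```

In the sample, the event at time 58 arrives after the one at 62 but is still counted, because the watermark has not yet passed the end of its window. The event at time 30 arrives after the watermark has moved past 60 and is discarded.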

Related Concepts

Event stream processing is part of a broader ecosystem of real-time data processing. It's important to distinguish it from, and understand its relationship with, related concepts:

- Complex Event Processing (CEP): CEP focuses on detecting patterns and relationships among multiple events from different streams to identify higher-level, more significant "complex events". While ESP often provides the foundation for CEP, CEP typically involves more sophisticated pattern matching and rule-based logic. For instance, detecting that "a customer added an item to their cart, then applied a discount code, then proceeded to checkout within 5 minutes" is a CEP task.
- Stream Analytics: This term is often used interchangeably with ESP but can also refer more broadly to the end-to-end process of collecting, processing, analyzing, and visualizing streaming data to extract insights. It emphasizes the analytical aspect and business intelligence derived from event streams.
- Real-time Databases: These are databases designed to ingest, process, and serve data with very low latency, often supporting continuous queries on changing data. While they can be part of an ESP pipeline (e.g., as a sink or a source for enrichment data), ESP itself is more focused on the in-flight processing of data streams.
- Message Queuing Systems: Systems like RabbitMQ are designed for reliable message delivery between applications. While event brokers used in ESP (like Apache Kafka) share some characteristics with message queues (e.g., pub-sub models), Kafka is specifically optimized for handling high-throughput, persistent streams of event data suitable for stream processing and replayability.
- Publish-Subscribe (Pub/Sub) Architecture: This is a messaging pattern where "publishers" send messages (events) to "topics" without knowing who the "subscribers" are. Subscribers express interest in specific topics and receive messages published to them. This pattern is fundamental to most ESP systems, enabling decoupling between event producers and consumers.
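The cart, discount, checkout example from the CEP entry above can be sketched as a small per-customer state machine. This is a deliberately simplified illustration: the action names, the tuple layout, and the rule that unrelated events in between are ignored are all assumptions, and dedicated CEP libraries support far richer pattern semantics.

```python
SEQUENCE = ("add_to_cart", "apply_discount", "checkout")  # assumed action names
MAX_SPAN = 300  # the 5-minute limit, in seconds


def detect_checkout_pattern(events):
    """Yield (customer_id, start_time, end_time) for each completed sequence.

    `events` is an iterable of (timestamp_seconds, customer_id, action)
    tuples, assumed to arrive in timestamp order.
    """
    progress = {}  # customer_id -> (index of next expected step, start time)

    for ts, customer, action in events:
        step, started = progress.get(customer, (0, None))

        if step > 0 and ts - started > MAX_SPAN:
            step, started = 0, None   # partial match has expired

        if action == SEQUENCE[0]:
            step, started = 1, ts     # start (or restart) a match
        elif step > 0 and action == SEQUENCE[step]:
            step += 1                 # next expected step observed
        # Any other action is ignored and leaves the partial match intact.

        if step == len(SEQUENCE):
            yield customer, started, ts
            progress.pop(customer, None)
        elif step > 0:
            progress[customer] = (step, started)
        else:
            progress.pop(customer, None)


sample = [
    (0, "alice", "add_to_cart"),
    (10, "bob", "add_to_cart"),
    (40, "alice", "view_item"),
    (90, "alice", "apply_discount"),
    (200, "alice", "checkout"),
    (250, "bob", "apply_discount"),
    (400, "bob", "checkout"),
]
matches = list(detect_checkout_pattern(sample))
print(matches)
```

Alice completes the sequence in 200 seconds and produces a match. Bob's checkout arrives 390 seconds after his first step, so his partial match expires and nothing is emitted.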

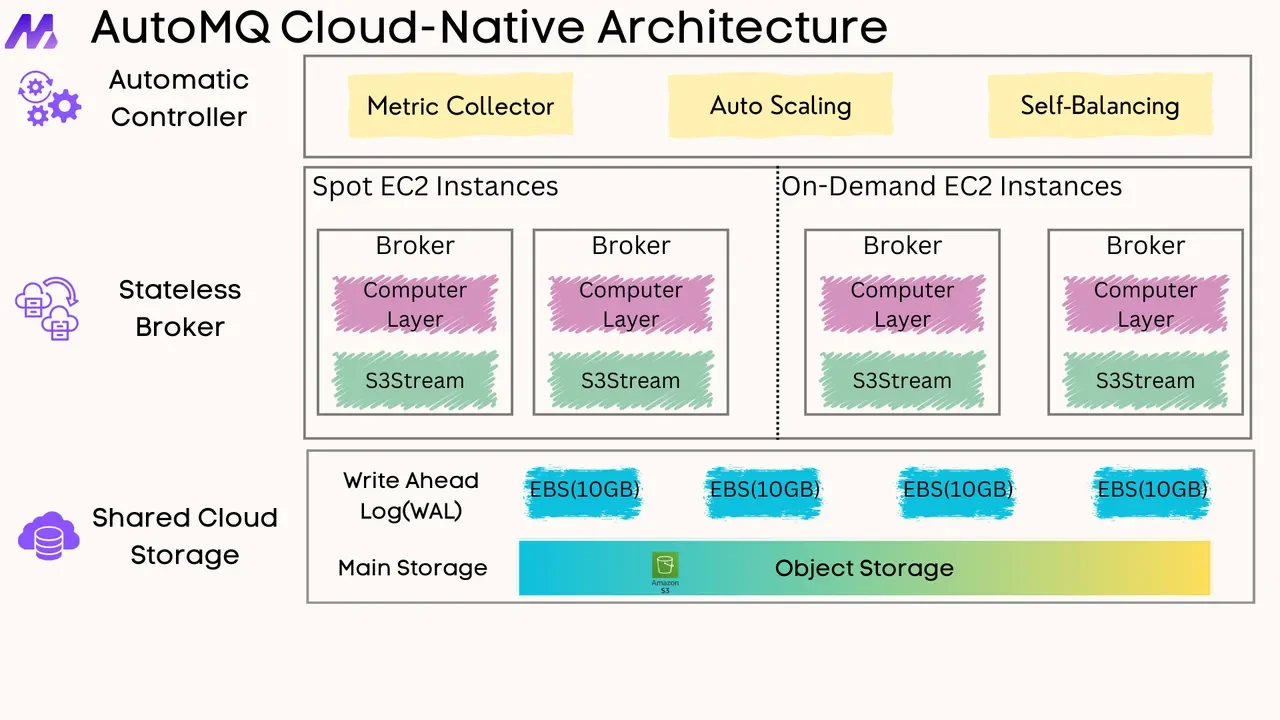

General Architectural Approach

A typical event stream processing system or pipeline follows a general architectural pattern, though specific implementations can vary. The main stages are:

- Event Sources: These are the origins of the data streams. Examples include:
  - IoT sensors (temperature, location, etc.)
  - Application logs (web server logs, microservice logs)
  - Database change data capture (CDC) streams
  - User activity on websites or mobile apps (clicks, views, transactions)
  - Social media feeds
- Event Ingestion Layer: This layer collects events from various sources and prepares them for the event broker. It might involve data validation, serialization (e.g., to Avro, JSON, Protobuf), and initial filtering.
- Event Broker: This is the backbone of the streaming platform, responsible for:
  - Receiving and durably storing massive volumes of event streams.
  - Organizing events into topics and partitions.
  - Allowing multiple consumers to read streams independently and at their own pace.
  - Providing fault tolerance and scalability for event data.
- Stream Processing Engine(s): This is where the core logic of analyzing, transforming, and acting on events resides. Processing engines consume streams from the broker, apply computations (filters, aggregations, joins, pattern detection), and may produce new, derived event streams. Common operations include:
  - Filtering: Selecting relevant events based on certain criteria.
  - Transformation/Enrichment: Modifying event data or augmenting it with information from other sources (e.g., joining with a static dataset or another stream).
  - Aggregation: Computing summaries over windows (e.g., counts, sums, averages).
  - Pattern Detection: Identifying specific sequences of events (often a CEP capability).
- Event Sinks (Destinations): Once events are processed, the results are sent to various destinations, such as:
  - Databases or Data Warehouses: For long-term storage and further batch analysis.
  - Real-time Dashboards: For visualization and monitoring.
  - Alerting Systems: To notify users or trigger actions based on critical events.
  - Other Applications/Microservices: To trigger downstream business processes.
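The stages above can be sketched end to end with plain Python generators: a source of sensor readings, a filter, an enrichment step, an aggregation, and a dictionary standing in for a dashboard sink. All names and data here are hypothetical, and the aggregation runs over a small finite sample rather than over windows, which is what a real pipeline on an unbounded stream would use.

```python
# Hypothetical static dataset used for enrichment.
SENSOR_LOCATIONS = {"s1": "warehouse", "s2": "office"}


def valid_readings(events):
    """Filtering: drop readings that carry no temperature."""
    for event in events:
        if event.get("temp_c") is not None:
            yield event


def enrich_with_location(events, locations):
    """Enrichment: join each reading with the static location table."""
    for event in events:
        yield {**event, "location": locations.get(event["sensor"], "unknown")}


def max_temp_by_location(events):
    """Aggregation: highest temperature seen per location."""
    result = {}
    for event in events:
        loc = event["location"]
        result[loc] = max(result.get(loc, event["temp_c"]), event["temp_c"])
    return result


# Source: an assumed sample of events, in arrival order.
readings = [
    {"sensor": "s1", "temp_c": 21.5},
    {"sensor": "s2", "temp_c": None},
    {"sensor": "s1", "temp_c": 24.0},
    {"sensor": "s3", "temp_c": 19.0},
    {"sensor": "s2", "temp_c": 22.5},
]

# Sink: a plain dict standing in for a dashboard or database table.
dashboard = max_temp_by_location(
    enrich_with_location(valid_readings(readings), SENSOR_LOCATIONS)
)
print(dashboard)
```

Because each stage is a generator, events flow through one at a time instead of being collected first, which loosely mirrors how a processing engine pipelines operators.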

Two common high-level architectural patterns that incorporate stream processing are:

- Lambda Architecture: This architecture combines batch processing and stream processing to handle massive datasets. The stream processing layer (the speed layer) provides real-time views, while the batch layer computes more comprehensive views on all data. A serving layer then merges results from both. It aims for a balance of low latency and accuracy but can be complex to maintain.
- Kappa Architecture: This is a simplification of the Lambda architecture that relies solely on a stream processing system to handle all data processing, both real-time and historical (by replaying streams). It uses the stream processing engine to recompute results as needed, aiming for a less complex system by using a single processing paradigm.
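The replay idea behind the Kappa architecture can be illustrated with an append-only log and two versions of the same processing logic. Everything in this sketch is an assumption made for illustration: `EventLog` is an in-memory stand-in for a durable broker partition, and the two `total_spend` functions are hypothetical business rules.

```python
class EventLog:
    """Hypothetical append-only log; an event's offset is its list position."""

    def __init__(self):
        self._events = []

    def append(self, event) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the event just written

    def read_from(self, offset=0):
        for position in range(offset, len(self._events)):
            yield position, self._events[position]


def build_view(log, process):
    """Recompute a view from scratch by replaying the log from offset 0."""
    view = {}
    for _offset, event in log.read_from(0):
        process(view, event)
    return view


def total_spend_v1(view, event):
    view[event["account"]] = view.get(event["account"], 0) + event["amount"]


def total_spend_v2(view, event):
    # Revised rule (assumed): refunds, stored as negative amounts, are ignored.
    if event["amount"] > 0:
        total_spend_v1(view, event)


log = EventLog()
for account, amount in [("a", 30), ("b", 20), ("a", -10), ("a", 5)]:
    log.append({"account": account, "amount": amount})

view_v1 = build_view(log, total_spend_v1)
view_v2 = build_view(log, total_spend_v2)  # same events, new logic, no batch layer
print(view_v1, view_v2)
```

When the processing logic changes, the historical result is rebuilt by replaying the retained stream through the new code, rather than by maintaining a separate batch pipeline as in the Lambda architecture.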

Comparison of Selected Open-Source Event Stream Processing Solutions

Several popular open-source stream processing frameworks and libraries are available, each with its strengths and ideal use cases.

| Feature | Apache Flink | Apache Spark Streaming | Kafka Streams | Apache Samza |
|---|---|---|---|---|
| Primary Paradigm | True stream processing (event-at-a-time) | Micro-batching (processes small batches of data) | Library for stream processing within Kafka | Stream processing framework, tightly coupled with Kafka and YARN/standalone |
| Latency | Very low (milliseconds) | Low (seconds to sub-seconds) | Low (milliseconds to seconds) | Low (milliseconds to seconds) |
| State Management | Advanced, built-in, highly fault-tolerant | Supports stateful operations using mapWithState | Robust state stores, RocksDB integration | Local state stores, RocksDB integration |
| Windowing | Rich windowing semantics (event time, processing time) | Time-based windowing | Flexible windowing (event time, processing time) | Time-based and session windowing |
| Processing Model | Operator-based, pipelined execution | Discretized Streams (DStreams), Structured Streaming | KStream (record stream), KTable (changelog stream) | Task-based processing |
| Data Guarantees | Exactly-once (with appropriate sinks/sources) | Exactly-once (with appropriate sinks/sources) | Exactly-once (within Kafka ecosystem) | At-least-once |
| Deployment | Standalone, YARN, Kubernetes, Mesos | Standalone, YARN, Kubernetes, Mesos | Deployed as part of your application (library) | YARN, Standalone |
| Ease of Use | Steeper learning curve, powerful API | Easier for those familiar with Spark | Simpler for Kafka-centric applications | Moderate complexity |
| Primary Use Cases | Complex event processing, real-time analytics, stateful applications | Stream analytics, ETL, machine learning on streams | Real-time applications and microservices built on Kafka | Large-scale stateful stream processing applications |

Cloud providers also offer managed event stream processing services, such as Google Cloud Dataflow and Azure Stream Analytics, which provide serverless or managed environments for running stream processing jobs, often integrating deeply with their respective cloud ecosystems.

Conclusion

Event Stream Processing has emerged as a critical capability for organizations aiming to leverage the full potential of their real-time data. By processing and analyzing events as they occur, event stream processing enables businesses to gain immediate insights, react swiftly to changing conditions, build responsive applications, and unlock new opportunities for innovation and efficiency. While it presents unique challenges in terms of scalability, state management, and fault tolerance, the advancements in stream processing technologies and adherence to best practices allow businesses to effectively harness the power of event streams. As the volume and velocity of data continue to grow, the importance of event stream processing in modern data architectures will only increase.
