Introduction

Modern applications often need to react to events as soon as they happen. Whether it is fraud detection, live dashboards, or continuous data integration, the foundation is the same: an event stream that never stops. Two open-source projects dominate this space. Apache Kafka offers a durable, distributed log that moves data between systems. Apache Flink executes real-time computations on that data. Although their names sometimes appear together in architectural diagrams, they solve different problems. This article explains each system, compares their designs, and shows how they complement each other in a complete streaming stack.

Apache Kafka in a Nutshell

Kafka is a publish-subscribe platform built around the idea of an append-only log. Data is organised into topics that are split into partitions for parallelism [1]. Producers write records to a chosen partition; consumers read records in the same order. Every partition keeps an ever-growing sequence of offsets, so any consumer can rewind and replay data on demand.
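The log model can be made concrete with a small in-memory sketch. This is a toy stand-in, not the Kafka client API: the class `ToyTopic` and its methods are hypothetical, and CRC32 is used here only as a convenient substitute for Kafka's own key hashing.

```python
import zlib


class ToyTopic:
    """In-memory stand-in for a topic: one append-only list per partition."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        # Hash the key so that records with the same key land in the same partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1  # (partition, offset of the new record)

    def read(self, partition, offset):
        # Reading never removes data, so any offset can be read again later.
        return self.partitions[partition][offset:]


topic = ToyTopic(num_partitions=3)
for i in range(4):
    topic.append("user-a", f"click-{i}")
    topic.append("user-b", f"view-{i}")

partition_a, last_offset = topic.append("user-a", "logout")

# Rewind to offset 0 and replay the whole partition.
replayed = topic.read(partition_a, 0)
user_a_values = [value for key, value in replayed if key == "user-a"]
```

Because every record for `user-a` goes to one partition, `user_a_values` comes back in write order no matter how many other keys share that partition.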

Architecture

Brokers store partitions, replicate them for fault tolerance, and serve read/write requests.

Leaders receive writes; followers replicate the same bytes and take over if the leader fails [2].

Producers batch and compress events before sending.

Consumers pull events and track their own offsets inside a consumer group. Kafka guarantees ordering only within a single partition, which lets different partitions be consumed in parallel [3].
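How a consumer group spreads partitions over its members can be sketched as follows. Kafka ships several assignment strategies; the function `assign_round_robin` below is a simplified, hypothetical version that only illustrates the rule that each partition has exactly one owner within a group.

```python
def assign_round_robin(partitions, members):
    """Give every partition to exactly one member of the group."""
    assignment = {member: [] for member in members}
    for index, partition in enumerate(sorted(partitions)):
        owner = members[index % len(members)]
        assignment[owner].append(partition)
    return assignment


partitions = [0, 1, 2, 3]

two_consumers = assign_round_robin(partitions, ["c1", "c2"])
six_consumers = assign_round_robin(partitions, ["c1", "c2", "c3", "c4", "c5", "c6"])

# Members beyond the partition count receive nothing and sit idle.
idle = [member for member, owned in six_consumers.items() if not owned]
```

The second call shows why the partition count caps the useful size of a group: with four partitions, the fifth and sixth consumers have nothing to read.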

Since KRaft mode became production-ready in version 3.3, Kafka can run without ZooKeeper, using an internal Raft quorum to manage metadata, which simplifies deployment and reduces moving parts [2].

![Apache Kafka Architecture [9]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/68f5ebc2f4aa6742a902e26a_67480fef30f9df5f84f31d36%252F685e62750df4a037e5bcd8c2_hRzi.png)

Primary Use Cases

High-throughput messaging between micro-services

Durable event sourcing and audit logs

Streaming ingestion for analytical engines

Buffering bursts so downstream services read at their own speed

Apache Flink in a Nutshell

Flink is a stream-processing engine that treats unbounded data as its natural input. It exposes a fluent DataStream API for Java & Scala, and a relational Table API / SQL interface for analysts.

Core Concepts

Streams and Operators – User functions (map, filter, window, join) form a directed acyclic graph that Flink executes in parallel.

State – Keyed operators keep per-key variables such as counters, sets, or machine-learning models. Flink manages that state transparently and stores it locally or inside RocksDB [5].

Event Time and Watermarks – Time is based on the event’s original timestamp, not the system clock. Watermarks track how far event time has progressed, so out-of-order and late arrivals are handled correctly.

Checkpoints and Savepoints – A barrier snapshot algorithm records operator state plus input offsets on a regular schedule, giving exactly-once recovery after failure [6].
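To illustrate event time and watermarks, here is a minimal sketch in plain Python rather than the Flink API. It assumes one-minute tumbling windows and a watermark that trails the highest timestamp seen by a fixed bound; the constants and the function `window_counts` are hypothetical, and Flink's real firing and lateness rules are more detailed.

```python
from collections import defaultdict

WINDOW_SIZE = 60        # seconds per tumbling window
MAX_OUT_OF_ORDER = 10   # assumed bound on out-of-orderness, in seconds


def window_counts(timestamps):
    """Count events per event-time window; fire a window once the watermark passes its end."""
    open_windows = defaultdict(int)   # window start -> count so far
    emitted = []                      # (window start, count) in firing order
    dropped_late = []                 # events that arrived after their window fired
    watermark = float("-inf")

    for timestamp in timestamps:      # events in arrival order, not timestamp order
        window_start = timestamp - timestamp % WINDOW_SIZE
        if window_start + WINDOW_SIZE <= watermark:
            dropped_late.append(timestamp)
        else:
            open_windows[window_start] += 1

        # Watermark: no event older than this is expected any more.
        watermark = max(watermark, timestamp - MAX_OUT_OF_ORDER)
        for start in sorted(open_windows):
            if start + WINDOW_SIZE <= watermark:
                emitted.append((start, open_windows.pop(start)))

    return emitted, dropped_late, dict(open_windows)


emitted, dropped_late, still_open = window_counts([5, 30, 62, 58, 71, 20, 130])
```

The event with timestamp 58 arrives after 62 but is still counted in the first window, because the watermark has not yet passed that window's end. The event with timestamp 20 arrives after the window has fired and is treated as late.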

![Flink Cluster Overview [8]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/68f5ebc2f4aa6742a902e26e_67480fef30f9df5f84f31d36%252F685e63e8a838da75867dcdad_Er8o.png)

Deployment Model

A JobManager coordinates scheduling and recovery; TaskManagers execute operators. Each TaskManager offers task slots, and each slot runs operator subtasks in their own threads. Flink can run on bare metal, YARN, or Kubernetes, either as a shared session cluster or as a dedicated per-application cluster. Resource limits, memory split, and network buffers are all configurable at startup.

Primary Use Cases

Real-time aggregations and alerting

Complex event processing (CEP) such as pattern detection

Streaming ETL pipelines that enrich, filter, and join live data

Unified batch and streaming jobs with the same API

Design Goals and Philosophies

| Aspect | Apache Kafka | Apache Flink |
|---|---|---|
| Goal | Durable transport of ordered events | Stateful computation on flowing data |
| Core Abstraction | Partitioned log on disk | Distributed operator graph in memory |
| Data Retention | Configurable hours, days, or forever | Only keeps operator state; source retains full history |
| Scaling Unit | Extra partitions and brokers | Higher parallelism and TaskManagers |
| Fault Tolerance | Replication plus client offset management [4] | Checkpointing with consistent state snapshots [6] |
| Strength | Move data reliably at terabyte scale | Compute low-latency, exactly-once analytics |

Kafka aims to be a dumb, fast, and durable log. Flink aims to be a smart, fault-tolerant calculator that sits beside that log. The projects rarely overlap, except where Kafka includes its own lightweight library for local processing, Kafka Streams. For heavy workloads, most teams still offload complex jobs to Flink.

Processing Model and State Management

Kafka’s brokers are stateless regarding message content: they write bytes to disk and replicate them. Any per-record logic runs inside clients. A client library may hold local state, but that state is limited to one process; it is backed up to changelog topics and restored by replaying them, which adds network overhead [2].

Flink, on the other hand, places state at the centre of its design. A keyed operator can hold gigabytes of data without the user writing any code to persist or reload it. Flink periodically snapshots the state, plus the Kafka offsets of every source, to object storage. If a node crashes, the job restarts from the last successful checkpoint, reads from the same offsets, and produces identical results – the essence of exactly-once semantics [6].
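The recovery argument can be checked with a toy simulation. The sketch below is plain Python, not Flink: a list stands in for one Kafka partition, a dictionary for keyed state, and the hypothetical function `run` snapshots state and source offset together every few records.

```python
import copy

events = ["a", "b", "a", "c", "a", "b", "c", "a"]   # stand-in for one Kafka partition


def run(source, state, offset, checkpoint_every, crash_at=None):
    """Count keys starting at `offset`; return (state, offset, last_checkpoint, crashed)."""
    checkpoint = (copy.deepcopy(state), offset)
    while offset < len(source):
        if crash_at is not None and offset == crash_at:
            return state, offset, checkpoint, True   # in-flight state is lost
        key = source[offset]
        state[key] = state.get(key, 0) + 1
        offset += 1
        if offset % checkpoint_every == 0:
            # Snapshot the state and the source position together.
            checkpoint = (copy.deepcopy(state), offset)
    return state, offset, checkpoint, False


# Reference run without failures.
expected, _, _, _ = run(events, {}, 0, checkpoint_every=3)

# This run crashes after five records; the last checkpoint was taken at offset 3.
_, _, (saved_state, saved_offset), crashed = run(
    events, {}, 0, checkpoint_every=3, crash_at=5
)

# Recovery: restore the snapshot and rewind the source to the saved offset.
recovered, _, _, _ = run(
    events, copy.deepcopy(saved_state), saved_offset, checkpoint_every=3
)
```

The two records processed between the checkpoint and the crash are read a second time, but because the state was rolled back to the same point, each record affects the final counts exactly once.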

Fault Tolerance in Practice

Kafka

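With acks=all, a write is acknowledged once the leader and the other in-sync replicas have appended it to their logs; min.insync.replicas sets how many replicas must be in sync for the write to be accepted.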

Producers can set acks=all and enable idempotence to eliminate duplicates [4].

Consumers commit offsets after processing to guarantee at-least-once delivery.
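Idempotent producing can be pictured as sequence-number deduplication on the broker side. The following is a simplified model, not broker code: `ToyPartition` and the producer id are hypothetical, and the real protocol also handles sequence gaps and producer epochs.

```python
class ToyPartition:
    """Toy broker-side log that drops retried records it has already stored."""

    def __init__(self):
        self.log = []
        self.last_sequence = {}   # producer id -> highest sequence number appended

    def append(self, producer_id, sequence, value):
        if sequence <= self.last_sequence.get(producer_id, -1):
            return "duplicate"    # a retry of something already in the log
        self.log.append(value)
        self.last_sequence[producer_id] = sequence
        return "appended"


partition = ToyPartition()
results = [
    partition.append("producer-1", 0, "payment-1"),
    # The acknowledgement is lost, so the producer retries the same record.
    partition.append("producer-1", 0, "payment-1"),
    partition.append("producer-1", 1, "payment-2"),
]
```

The retry is recognised by its sequence number, so the log ends up with each payment once even though the producer sent one of them twice.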

Flink

The checkpoint interval balances overhead and recovery time.

When Flink writes back to Kafka, its sink can open a transaction for each checkpoint, commit it once the checkpoint completes, and abort it if the job fails. That yields end-to-end exactly-once delivery between the two systems [7].
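The effect of tying sink transactions to checkpoints can be sketched with a toy sink. This is not Flink's sink interface: `ToyTransactionalSink` and its method names are hypothetical, and the real protocol is a two-phase commit with a separate pre-commit step that this sketch collapses into one.

```python
class ToyTransactionalSink:
    """Buffers writes per checkpoint and publishes them only on commit."""

    def __init__(self):
        self.committed = []   # what read-committed consumers downstream can see
        self.pending = []     # records written inside the open transaction

    def write(self, record):
        self.pending.append(record)

    def on_checkpoint_complete(self):
        # Commit: everything written since the last checkpoint becomes visible.
        self.committed.extend(self.pending)
        self.pending = []

    def on_failure(self):
        # Abort: discard uncommitted output; the job will replay and rewrite it.
        self.pending = []


sink = ToyTransactionalSink()
sink.write("r1")
sink.write("r2")
sink.on_checkpoint_complete()

sink.write("r3")
sink.on_failure()             # crash before the next checkpoint completes

# After restoring from the checkpoint, the job reprocesses its input from r3 onwards.
sink.write("r3")
sink.write("r4")
sink.on_checkpoint_complete()
```

Although `r3` was written twice, the first attempt was aborted with its transaction, so downstream readers see it only once.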

Performance and Scalability

Kafka’s throughput grows with the number of partitions. Each additional broker increases cumulative disk and network capacity. The main practical limits are the total number of partitions per cluster and disk IOPS [4].

Flink’s throughput grows with parallelism. Operators communicate through a high-performance network stack and exchange data via bounded buffers. When state becomes too large for heap, RocksDB spills it to local SSDs; incremental checkpoints then upload only the RocksDB files that changed since the previous checkpoint, reducing I/O cost.
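Incremental checkpointing can be pictured as a set difference over the state backend's files. The sketch below assumes immutable files identified by name, which is how RocksDB's SST files behave; the file names, sizes, and the function `incremental_upload` are hypothetical.

```python
def incremental_upload(previous_files, current_files):
    """Return (files to upload, files reused from the earlier checkpoint)."""
    to_upload = {
        name: size for name, size in current_files.items()
        if name not in previous_files
    }
    reused = sorted(name for name in current_files if name in previous_files)
    return to_upload, reused


# File name -> size in MB at two consecutive checkpoints.
checkpoint_1 = {"001.sst": 64, "002.sst": 64, "003.sst": 64}
checkpoint_2 = {"002.sst": 64, "003.sst": 64, "004.sst": 32}

to_upload, reused = incremental_upload(checkpoint_1, checkpoint_2)
full_size = sum(checkpoint_2.values())
incremental_size = sum(to_upload.values())
```

In this made-up case, the second checkpoint uploads 32 MB instead of 160 MB, because two of its three files are already in checkpoint storage.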

Both projects have matured to run at multi-petabyte scale, but each demands its own tuning discipline. Kafka administrators watch partition counts and flush times, while Flink operators watch checkpoint duration, back-pressure graphs, and memory ratios.

Integration Patterns

A canonical pipeline looks like this:

Producers ──► Kafka (raw topic) ──► Flink job ──► Kafka (enriched topic) ──► Consumers

Producers write raw events to a topic.

Flink’s Kafka source subscribes to the topic, and the job runs with exactly-once checkpointing enabled.

The job filters, joins, and aggregates.

Results go to a new topic, a database, or cloud storage.

Because Kafka retains raw data, the job can replay history whenever logic changes. Because Flink holds state and offsets in checkpoints, recovery is deterministic. The pipeline therefore combines durable replay with precise computation, a key requirement for use cases such as payment processing or user segmentation.

Best Practices for Production Kafka

Replication factor three and min.insync.replicas=2 prevent data loss during maintenance [4].

Even partition keys distribute load; avoid a single “hot” key.

Monitor under-replicated partitions and consumer lag to detect issues early [3].

Tune producer batching (linger.ms, batch.size) for a sweet spot between latency and throughput.

Encrypt connections with TLS and configure ACLs; the broker is otherwise open to any client [4].
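The replication advice above comes down to simple arithmetic, sketched here in Python. The helper `write_availability` is hypothetical and takes the worst case, where every broker that is down hosts a replica of the partition. The configuration keys are real Kafka producer settings, but the `linger.ms` and `batch.size` values are illustrative assumptions, not recommendations.

```python
def write_availability(replication_factor, min_insync_replicas, brokers_down):
    """Can a producer using acks=all still write to the partition?"""
    in_sync = replication_factor - brokers_down
    return in_sync >= min_insync_replicas


# Producer settings named in the list above; the two batching values are made up.
producer_settings = {
    "acks": "all",
    "enable.idempotence": "true",
    "linger.ms": "10",
    "batch.size": "65536",
}

one_broker_down = write_availability(3, 2, brokers_down=1)
two_brokers_down = write_availability(3, 2, brokers_down=2)
strict_one_down = write_availability(3, 3, brokers_down=1)
```

With replication factor three and min.insync.replicas=2, one broker can be taken down for maintenance while writes continue. Raising min.insync.replicas to three would block writes as soon as any replica is offline.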

Best Practices for Production Flink

Use event time plus watermarks for correct windows on out-of-order data.

Choose RocksDB state backend when keyed state is large; keep local SSDs fast.

Align checkpoint interval with recovery objectives and storage bandwidth [6].

Set a generous maxParallelism at job creation, so future scale-up reuses the same savepoint.

Watch the web UI for back-pressure; it pinpoints slow operators before latencies explode.
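The reason maxParallelism matters for rescaling is that keyed state is split into a fixed number of key groups, which are then handed out to subtasks in contiguous ranges. The following is a rough sketch of that scheme under stated assumptions: CRC32 stands in for Flink's own hash function, and the function names are hypothetical.

```python
import zlib
from collections import Counter


def key_group(key, max_parallelism):
    # Stand-in hash; Flink uses its own hash function, not CRC32.
    return zlib.crc32(key.encode("utf-8")) % max_parallelism


def operator_index(group, max_parallelism, parallelism):
    # Key groups are handed out as contiguous ranges, one range per subtask.
    return group * parallelism // max_parallelism


MAX_PARALLELISM = 128

# Number of key groups owned by each subtask before and after scaling from 2 to 4.
groups_before = Counter(
    operator_index(g, MAX_PARALLELISM, 2) for g in range(MAX_PARALLELISM)
)
groups_after = Counter(
    operator_index(g, MAX_PARALLELISM, 4) for g in range(MAX_PARALLELISM)
)

# A key's group depends only on max parallelism, never on current parallelism.
group_of_user = key_group("user-42", MAX_PARALLELISM)
```

Scaling up only moves whole key groups between subtasks, so state written at parallelism 2 can be redistributed at parallelism 4. Changing maxParallelism itself would change every key's group, which is why it is best fixed generously up front.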

When to Use Which Tool

| Scenario | Recommended Tool |
|---|---|
| Moving records between micro-services | Kafka |
| Retaining a complete, immutable event history | Kafka |
| Counting events per user per minute | Flink |
| Joining two high-volume streams on session windows | Flink |
| Buffering bursts then replaying at leisure | Kafka |
| Continuous ETL from raw to enriched topic | Kafka + Flink |

Kafka will not sort, filter, or aggregate records for you; that logic belongs in a consumer or a separate engine. Flink will not store months of unprocessed data; that responsibility rests with Kafka. Together, the systems cover the full lifecycle from ingestion to insight.

A Note on Simplicity

Many teams start with only Kafka; they wire a small consumer service to do light processing. Over time, business questions grow: late events break counts, joins span hours, state exceeds memory. At that point, a dedicated stream processor like Flink becomes attractive. Migrating logic from a hand-rolled consumer into Flink often reduces code size and improves correctness because time, state, and failure recovery are solved inside the framework.

Conclusion

Apache Kafka and Apache Flink are complementary technologies. Kafka excels at capturing and serving ordered event logs, while Flink excels at stateful, low-latency analytics on those logs. A modern streaming platform therefore uses both:

Kafka as the durable, partitioned backbone that decouples producers from consumers.

Flink as the distributed brain that detects patterns, aggregates metrics, and reacts in milliseconds.

By separating storage concerns from processing concerns, architects gain clear scaling levers and operational boundaries. Kafka’s design focuses on disk, replication, and client offsets. Flink’s design focuses on state, time, and exactly-once checkpoints. Understanding those differences enables teams to place each system where it shines and to deliver reliable, real-time data applications.

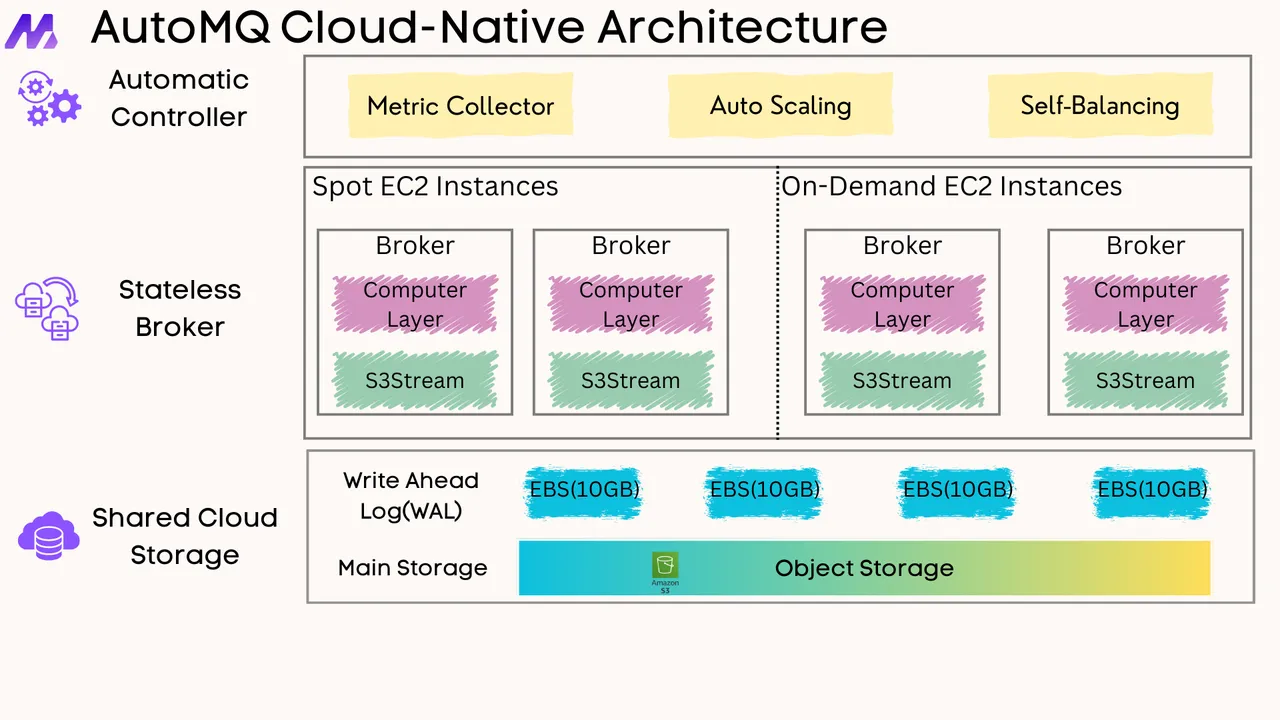

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)