Introduction

In modern enterprises, as data processing demands continue to grow, [AutoMQ][1] has become a critical component for real-time data processing due to its efficiency and low cost. However, with the expansion of cluster scale and increasing business complexity, ensuring the stability, high availability, and performance optimization of AutoMQ clusters becomes particularly important. Therefore, integrating a powerful and comprehensive monitoring system is essential for maintaining the healthy operation of AutoMQ clusters. The [Nightingale monitoring system][2] is an ideal choice for enterprises to monitor AutoMQ clusters due to its efficient data collection, flexible alert management, and rich visualization capabilities. By utilizing the Nightingale monitoring system, enterprises can grasp the operational status of AutoMQ clusters in real time, promptly identify and resolve potential issues, optimize system performance, and ensure business continuity and stability.

AutoMQ Overview

AutoMQ is a cloud-native stream processing system that maintains 100% compatibility with Apache Kafka while significantly enhancing cost-efficiency and elasticity by decoupling storage to object storage. Specifically, AutoMQ leverages S3Stream, a stream storage repository built on S3, offloading storage to shared cloud storage such as EBS and S3 provided by cloud providers. This approach delivers low-cost, low-latency, high-availability, high-durability, and virtually unlimited stream storage capacity. Compared to traditional Shared Nothing architectures, AutoMQ adopts a Shared Storage architecture, which significantly reduces storage and operational complexity while enhancing system elasticity and reliability.

AutoMQ's design philosophy and technical advantages make it an ideal choice for replacing existing Kafka clusters in enterprises. By adopting AutoMQ, enterprises can significantly reduce storage costs, simplify operations and maintenance, and achieve automatic scaling and self-balancing of clusters, thereby responding more efficiently to changes in business demands. Additionally, AutoMQ's architecture supports efficient cold read operations and zero-interruption services, ensuring stable operation under high load and burst traffic conditions. Its storage structure is as follows:

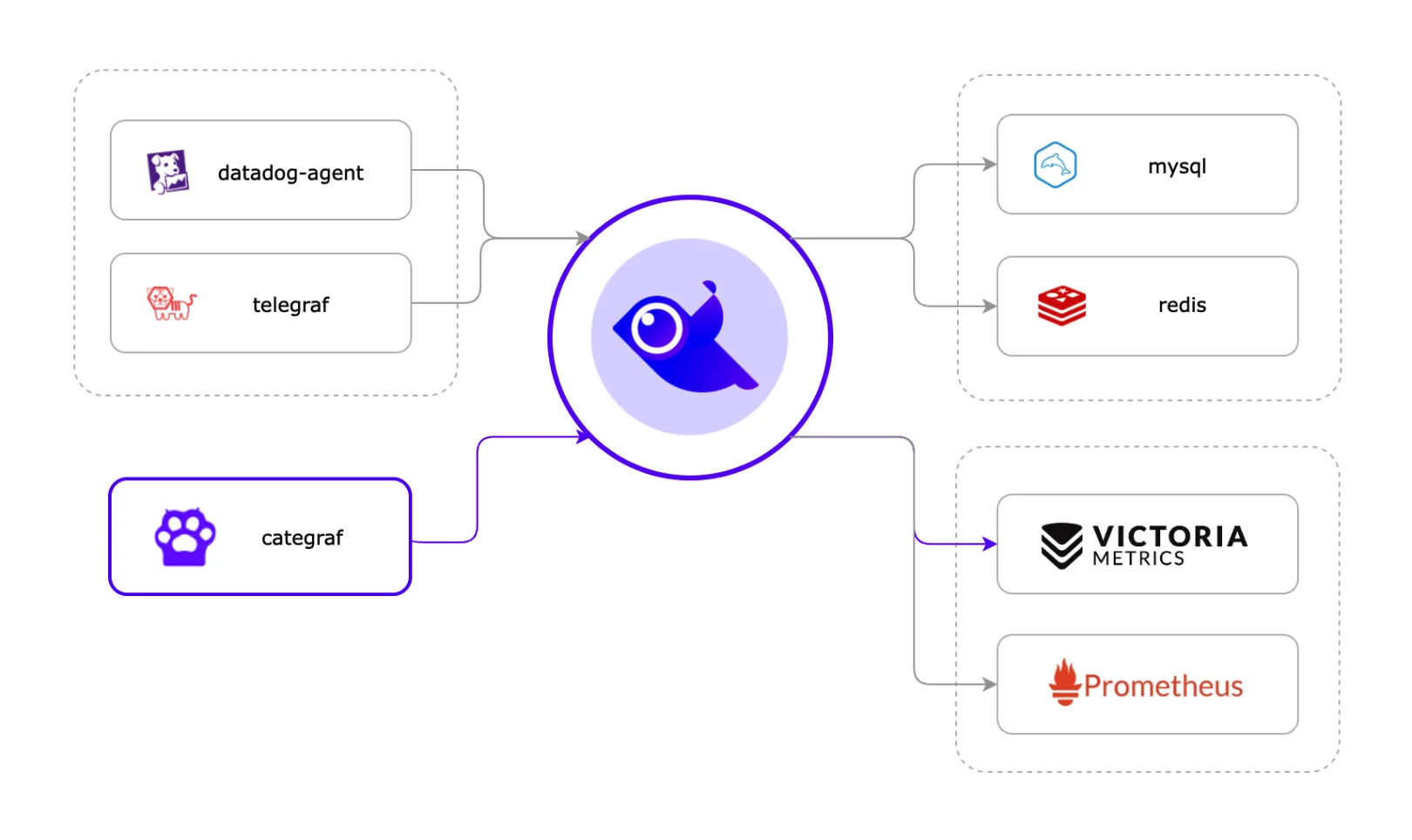

Overview of Nightingale

Nightingale Monitoring System (Nightingale) is an open-source, cloud-native observation and analysis tool that adopts an All-in-One design philosophy, integrating data collection, visualization, monitoring alerts, and data analysis. Its main advantages include efficient data collection capabilities, flexible alert strategies, and rich visualization features. Nightingale is tightly integrated with various cloud-native ecosystems, supports multiple data sources and storage backends, and provides low-latency, high-reliability monitoring services. By using Nightingale, enterprises can achieve comprehensive monitoring and management of complex distributed systems, quickly locate and resolve issues, thereby optimizing system performance and enhancing business continuity.

Prerequisites

To achieve cluster state monitoring, you need the following environment:

- Deploy an available AutoMQ node or cluster and open its metrics collection port
- Deploy Nightingale and its dependencies
- Deploy [Prometheus][4] to collect the metrics data

Deploy AutoMQ, Prometheus, and Nightingale

Deploy AutoMQ

To deploy the cluster, refer to the AutoMQ documentation: [Cluster Deployment | AutoMQ][5]. Before starting the deployment, add the following configuration parameters to enable the Prometheus scraping interface. Once the AutoMQ cluster is started with these parameters, each node additionally opens an HTTP interface from which AutoMQ monitoring metrics can be pulled. The metrics follow the Prometheus metrics format.

    bin/kafka-server-start.sh ... \
    --override s3.telemetry.metrics.exporter.type=prometheus \
    --override s3.metrics.exporter.prom.host=0.0.0.0 \
    --override s3.metrics.exporter.prom.port=8890 \
    ....

Once AutoMQ monitoring metrics are enabled, they can be pulled from any node over HTTP at http://{node_ip}:8890. An example response is shown below:

    ....
    kafka_request_time_mean_milliseconds{otel_scope_name="io.opentelemetry.jmx",type="DescribeDelegationToken"} 0.0 1720520709290
    kafka_request_time_mean_milliseconds{otel_scope_name="io.opentelemetry.jmx",type="CreatePartitions"} 0.0 1720520709290
    ...

For more information about the metrics, refer to the AutoMQ official documentation: [Metrics | AutoMQ][6].
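To make the response format concrete, here is a minimal Java sketch that splits one sample line into its metric name, labels, value, and timestamp. `PromLineParser` and `Sample` are hypothetical names introduced for illustration and are not part of AutoMQ or Prometheus. The sketch assumes simple lines like the ones in the example response (no escaped quotes inside label values, no `+Inf`/`-Inf` values).

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative parser for a single sample line of the Prometheus text format (hypothetical helper). */
class PromLineParser {

    /** One parsed sample: metric name, labels, value, and optional timestamp in milliseconds. */
    static final class Sample {
        final String name;
        final Map<String, String> labels;
        final double value;
        final Long timestampMs; // null when the line carries no timestamp

        Sample(String name, Map<String, String> labels, double value, Long timestampMs) {
            this.name = name;
            this.labels = labels;
            this.value = value;
            this.timestampMs = timestampMs;
        }
    }

    // Layout: name{labels} value [timestamp]; the label block and the timestamp are optional.
    private static final Pattern LINE = Pattern.compile(
            "^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\\{(.*)\\})?\\s+(\\S+)(?:\\s+(-?\\d+))?\\s*$");

    // key="value" pairs; escaped quotes inside values are not handled in this sketch.
    private static final Pattern LABEL = Pattern.compile("([a-zA-Z_][a-zA-Z0-9_]*)=\"([^\"]*)\"");

    static Sample parse(String line) {
        Matcher m = LINE.matcher(line.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a sample line: " + line);
        }
        Map<String, String> labels = new LinkedHashMap<>();
        if (m.group(2) != null) {
            Matcher lm = LABEL.matcher(m.group(2));
            while (lm.find()) {
                labels.put(lm.group(1), lm.group(2));
            }
        }
        double value = Double.parseDouble(m.group(3));
        Long timestampMs = m.group(4) == null ? null : Long.valueOf(m.group(4));
        return new Sample(m.group(1), labels, value, timestampMs);
    }

    public static void main(String[] args) {
        Sample s = parse("kafka_request_time_mean_milliseconds"
                + "{otel_scope_name=\"io.opentelemetry.jmx\",type=\"CreatePartitions\"} 0.0 1720520709290");
        System.out.println(s.name + " " + s.labels + " " + s.value + " " + s.timestampMs);
    }
}
```

A parser like this is only useful for quick spot checks of the endpoint; in the setup described here, Prometheus itself does the scraping and parsing.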

Deploy Prometheus

Prometheus can be deployed by downloading the binary package or by using Docker. The following describes these two deployment methods.

Binary Deployment

For convenience, you can put the installation steps in a script, adjust the Prometheus version as needed, and then run the script to complete the deployment. First, create the script, make it executable, and run it:

    cd /home
    vim install_prometheus.sh
    chmod +x install_prometheus.sh
    ./install_prometheus.sh

The script content is as follows:

    #!/bin/bash
    # download the selected Prometheus release and unpack it into /opt/prometheus
    version=2.45.3
    filename=prometheus-${version}.linux-amd64
    mkdir -p /opt/prometheus
    wget https://github.com/prometheus/prometheus/releases/download/v${version}/${filename}.tar.gz
    tar xf ${filename}.tar.gz
    cp -far ${filename}/* /opt/prometheus/
    # config as a service
    cat <<EOF >/etc/systemd/system/prometheus.service
    [Unit]
    Description="prometheus"
    Documentation=https://prometheus.io/
    After=network.target
    [Service]
    Type=simple
    ExecStart=/opt/prometheus/prometheus --config.file=/opt/prometheus/prometheus.yml --storage.tsdb.path=/opt/prometheus/data --web.enable-lifecycle --web.enable-remote-write-receiver
    Restart=on-failure
    SuccessExitStatus=0
    LimitNOFILE=65536
    StandardOutput=syslog
    StandardError=syslog
    SyslogIdentifier=prometheus
    [Install]
    WantedBy=multi-user.target
    EOF
    # register, start, and check the service
    systemctl enable prometheus
    systemctl restart prometheus
    systemctl status prometheus

Then modify the Prometheus configuration file to add a scrape job that collects observability data from AutoMQ, and restart Prometheus:

    vim /opt/prometheus/prometheus.yml
    systemctl restart prometheus

The configuration file content is as follows. Replace `{client_ip}` with the address where AutoMQ exposes observability data:

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: "prometheus"

static_configs:

- targets: ["localhost:9090"]

- job_name: "automq"

static_configs:

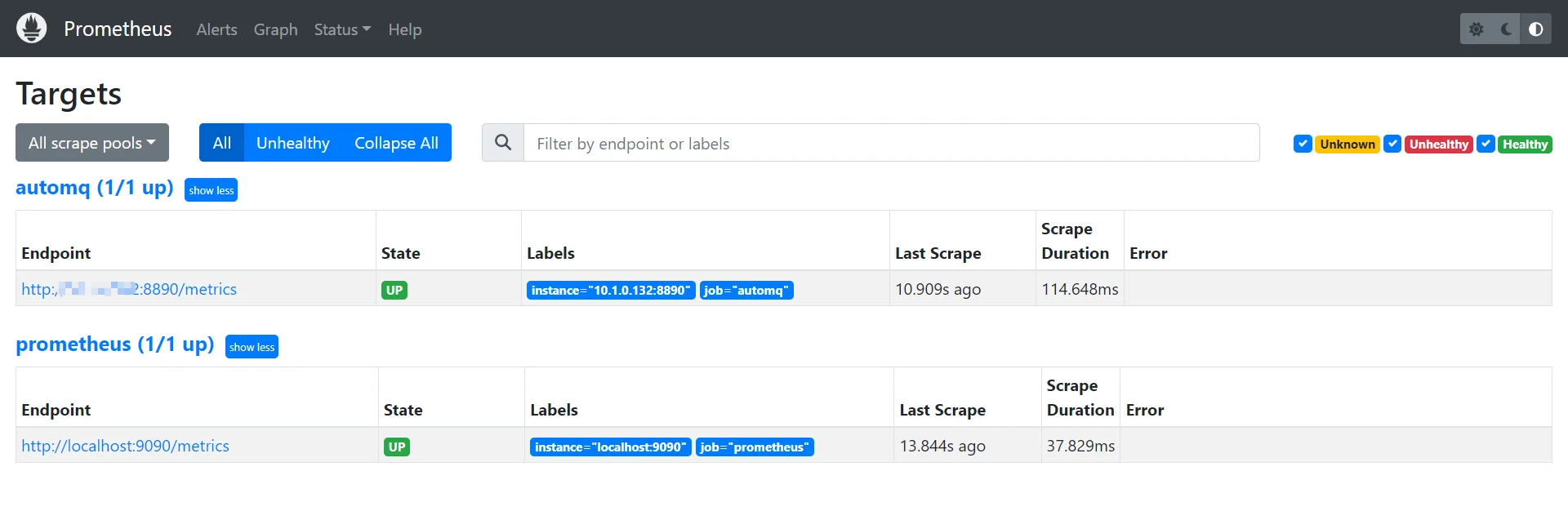

- targets: ["{client_ip}:8890"]After deployment, you can access Prometheus through a browser to check if it has successfully collected metrics data from AutoMQ by visiting `http://{client_ip}:9090/targets`:
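Besides the targets page, the scrape status can also be checked through the Prometheus HTTP API, because Prometheus records an `up` series for every scrape target. The following Java sketch only builds the instant-query URL for that check. `PromQueryUrl` is a hypothetical helper name, and the base URL `http://localhost:9090` and the job name `automq` are assumptions taken from the configuration in this article.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** Builds a Prometheus instant-query URL (hypothetical helper for illustration). */
class PromQueryUrl {

    /** Appends /api/v1/query with the URL-encoded PromQL expression to the base URL. */
    static String instantQueryUrl(String baseUrl, String promQl) {
        // Drop a trailing slash so the path is not doubled.
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return base + "/api/v1/query?query=" + URLEncoder.encode(promQl, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // up == 1 means the last scrape of the target succeeded; job name assumed to be "automq".
        System.out.println(instantQueryUrl("http://localhost:9090", "up{job=\"automq\"}"));
    }
}
```

Requesting the printed URL, for example with `curl`, returns a JSON document in which a value of `1` for a target indicates that its last scrape succeeded.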

Docker Deployment

If you already have a running Prometheus Docker container, please execute the command to remove the container:

    docker stop prometheus
    docker rm prometheus

Create a new configuration file and mount it when starting the container:

    mkdir -p /opt/prometheus
    vim /opt/prometheus/prometheus.yml

Start the Docker container:

    docker run -d \
    --name=prometheus \
    -p 9090:9090 \
    -v /opt/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml \
    -m 500m \
    prom/prometheus \
    --config.file=/etc/prometheus/prometheus.yml \
    --enable-feature=otlp-write-receiver \
    --web.enable-remote-write-receiver

This gives you a Prometheus service that collects AutoMQ metrics. For more information on integrating AutoMQ metrics with Prometheus, refer to: [Integrating Metrics with Prometheus | AutoMQ][7].

Deploy Nightingale

Nightingale can be deployed using the following three methods. For more detailed deployment instructions, refer to the [official documentation][8]:

- Deploy using Docker Compose
- Deploy using Binary
- Deploy using Helm

Next, I will proceed with the deployment using the binary method.

Download Nightingale

Visit the Nightingale [GitHub releases][9] page and select the appropriate version to download. This example uses version v7.0.0-beta.14. On an amd64 (x86-64) machine, you can run the following commands directly:

    cd /home
    wget https://github.com/ccfos/nightingale/releases/download/v7.0.0-beta.14/n9e-v7.0.0-beta.14-linux-amd64.tar.gz
    mkdir -p /home/flashcat
    tar -xzf /home/n9e-v7.0.0-beta.14-linux-amd64.tar.gz -C /home/flashcat
    cd /home/flashcat

Configure Dependencies

Nightingale relies on MySQL and Redis, so these environments need to be installed beforehand. You can deploy them using Docker or by executing commands directly. Refer to the following commands:

    # install mysql
    yum -y install mariadb*
    systemctl enable mariadb
    systemctl restart mariadb
    mysql -e "SET PASSWORD FOR 'root'@'localhost' = PASSWORD('1234');"
    # install redis
    yum install -y redis
    systemctl enable redis
    systemctl restart redis

Here, Redis is configured without a password, and the MySQL root password is set to 1234. If you use a different password, you must set it in the Nightingale configuration file so that Nightingale can connect to your database. Modify the Nightingale configuration file:

    vim /home/flashcat/etc/config.toml

    # postgres: host=%s port=%s user=%s dbname=%s password=%s sslmode=%s
    # postgres: DSN="host=127.0.0.1 port=5432 user=root dbname=n9e_v6 password=1234 sslmode=disable"
    # sqlite: DSN="/path/to/filename.db"
    DSN = "{username}:{password}@tcp(127.0.0.1:3306)/n9e_v6?charset=utf8mb4&parseTime=True&loc=Local&allowNativePasswords=true"
    # enable debug mode or not
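The MySQL DSN in this file follows the layout `user:password@tcp(host:port)/database?params`. As a small illustration of how the `{username}` and `{password}` placeholders map into that string, the Java sketch below assembles the DSN from its parts. `N9eDsn.mysqlDsn` is a hypothetical helper, not part of Nightingale, and `root`/`1234` are simply the example credentials used in this article.

```java
/** Assembles the MySQL DSN string used in config.toml (hypothetical helper for illustration). */
class N9eDsn {

    // Query parameters copied unchanged from the DSN line in the configuration file.
    private static final String PARAMS =
            "charset=utf8mb4&parseTime=True&loc=Local&allowNativePasswords=true";

    static String mysqlDsn(String user, String password, String host, int port, String database) {
        return user + ":" + password + "@tcp(" + host + ":" + port + ")/" + database + "?" + PARAMS;
    }

    public static void main(String[] args) {
        // Example credentials from this article; replace them with your own.
        System.out.println(mysqlDsn("root", "1234", "127.0.0.1", 3306, "n9e_v6"));
    }
}
```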

Import Database Tables

Execute the following command:

    mysql -uroot -p1234 < n9e.sql

Use a database tool to verify that the database tables have been imported successfully:

    > show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | n9e_v6             |
    +--------------------+
    > show tables;
    +-----------------------+
    | Tables_in_n9e_v6      |
    +-----------------------+
    | alert_aggr_view       |
    | alert_cur_event       |
    | alert_his_event       |
    | alert_mute            |
    | alert_rule            |
    | alert_subscribe       |
    | alerting_engines      |
    | board                 |
    | board_busigroup       |
    | board_payload         |
    | builtin_cate          |
    | builtin_components    |
    | builtin_metrics       |
    ······

Modify the Nightingale Configuration File

You need to modify the Nightingale configuration file to set up the Prometheus data source:

    vim /home/flashcat/etc/config.toml

    [[Pushgw.Writers]]
    # Url = "http://127.0.0.1:8480/insert/0/prometheus/api/v1/write"
    Url = "http://{client_ip}:9090/api/v1/write"

Start Nightingale

In the root directory of Nightingale located at /home/flashcat, execute: `./n9e`. Once successfully started, you can access it in your browser at http://{client_ip}:17000. The default login credentials are:

- Username: root
- Password: root.2020

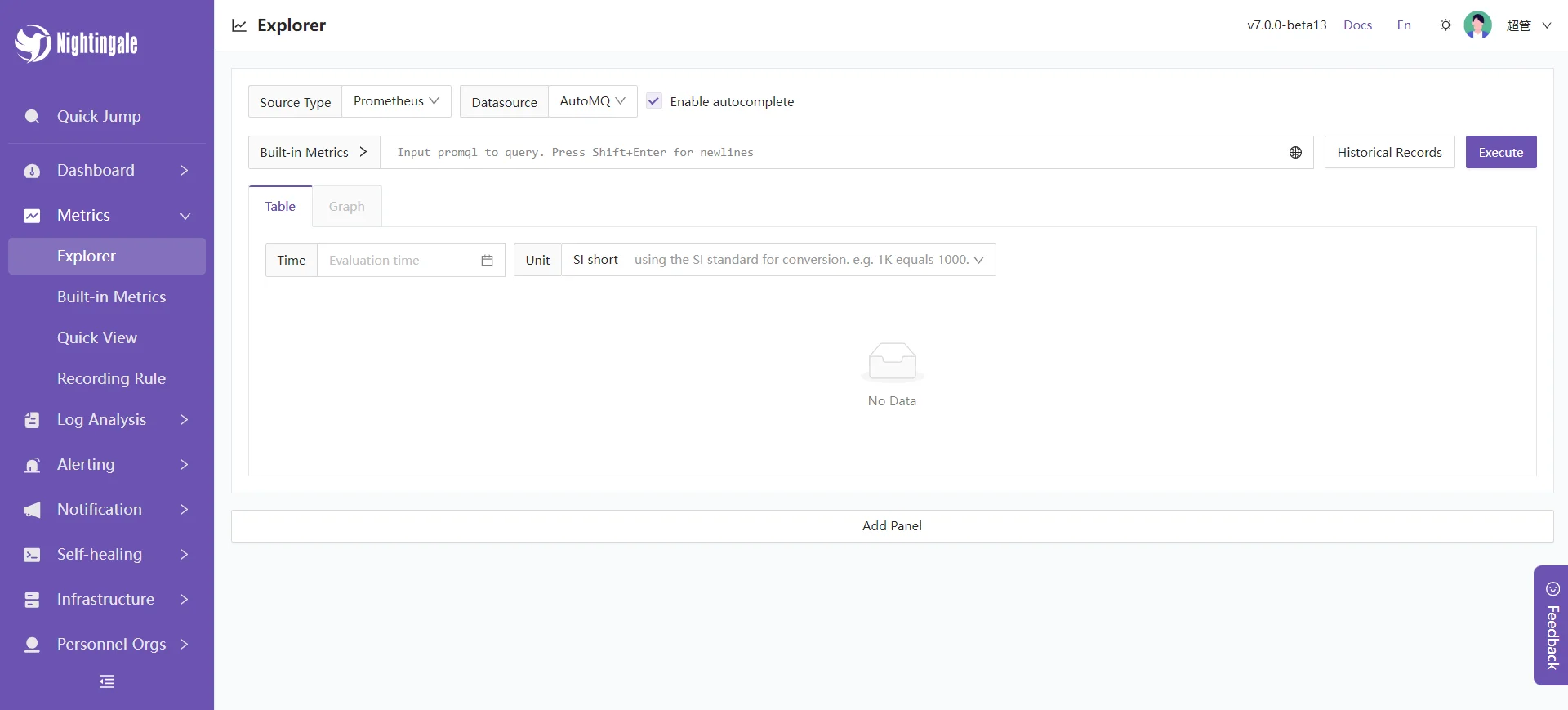

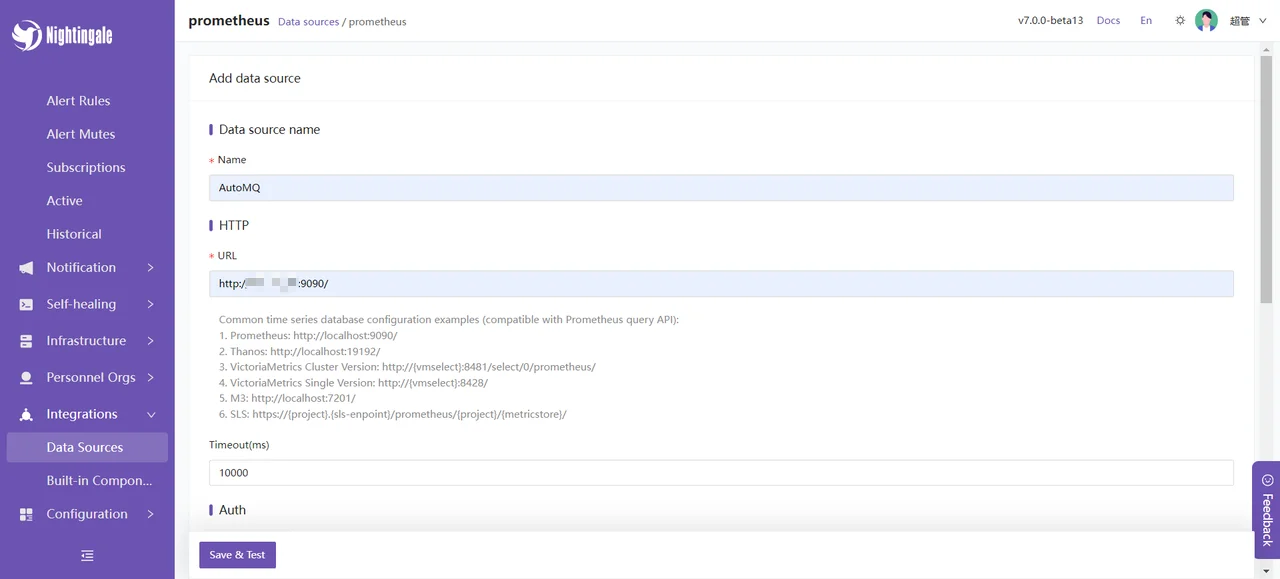

Connect to Prometheus Data Source

In the left sidebar, navigate to Integration -> Data Sources -> Prometheus.

At this point, our Nightingale monitoring deployment is complete.

Monitoring AutoMQ Cluster Status with Nightingale

Next, I will introduce some of the features Nightingale provides for monitoring AutoMQ, to help you understand what the integration offers.

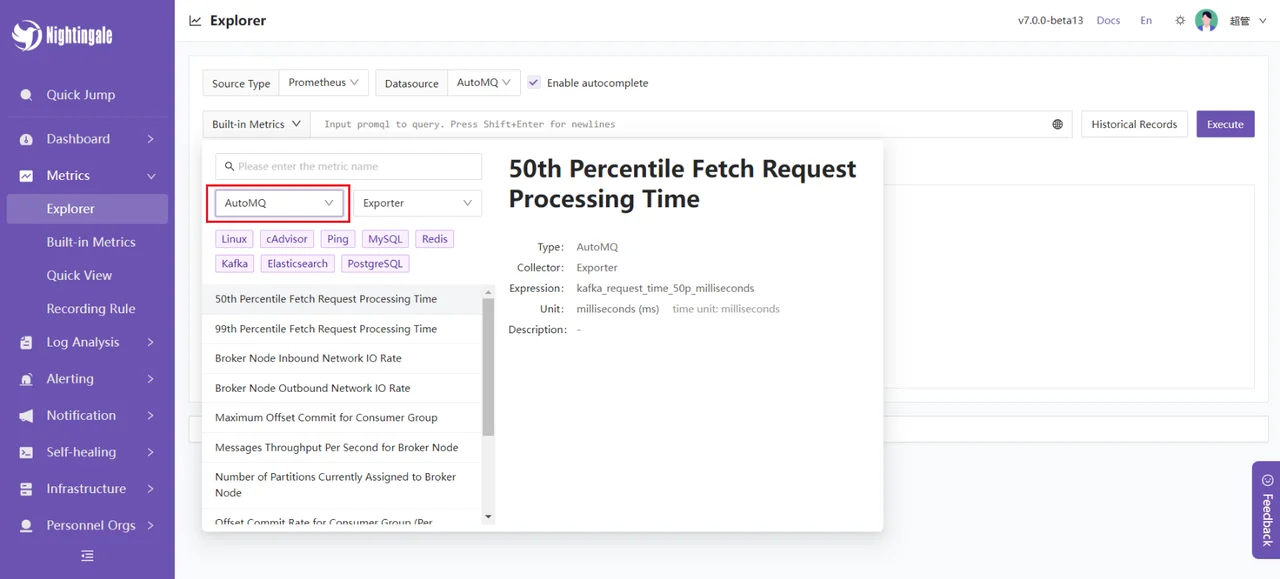

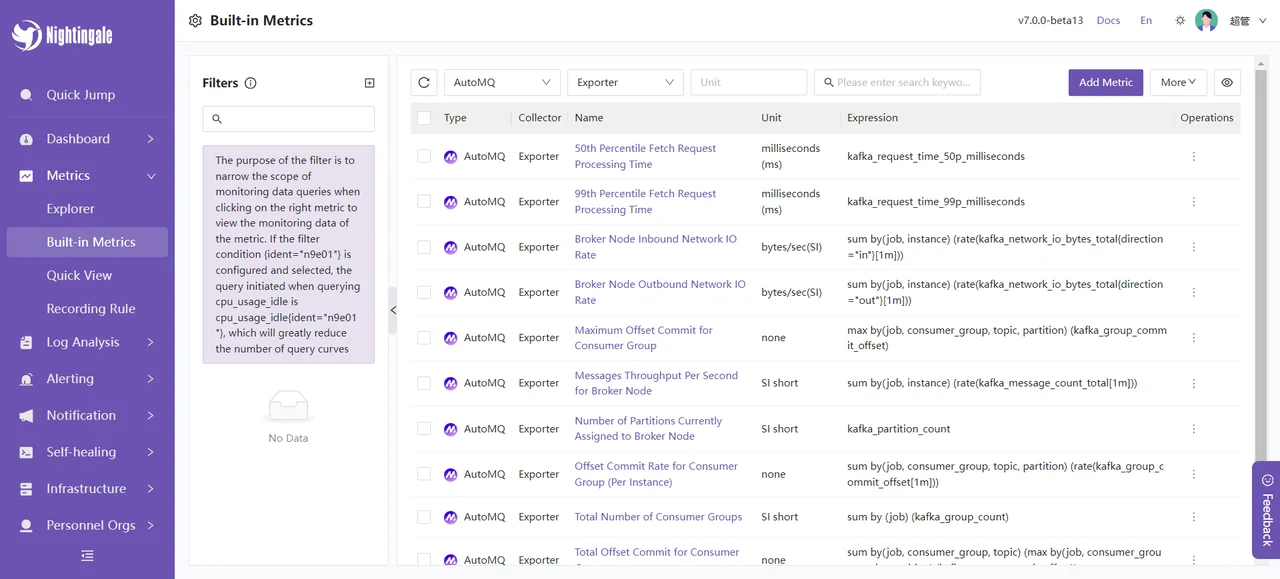

Instant Query

Select built-in AutoMQ metrics:

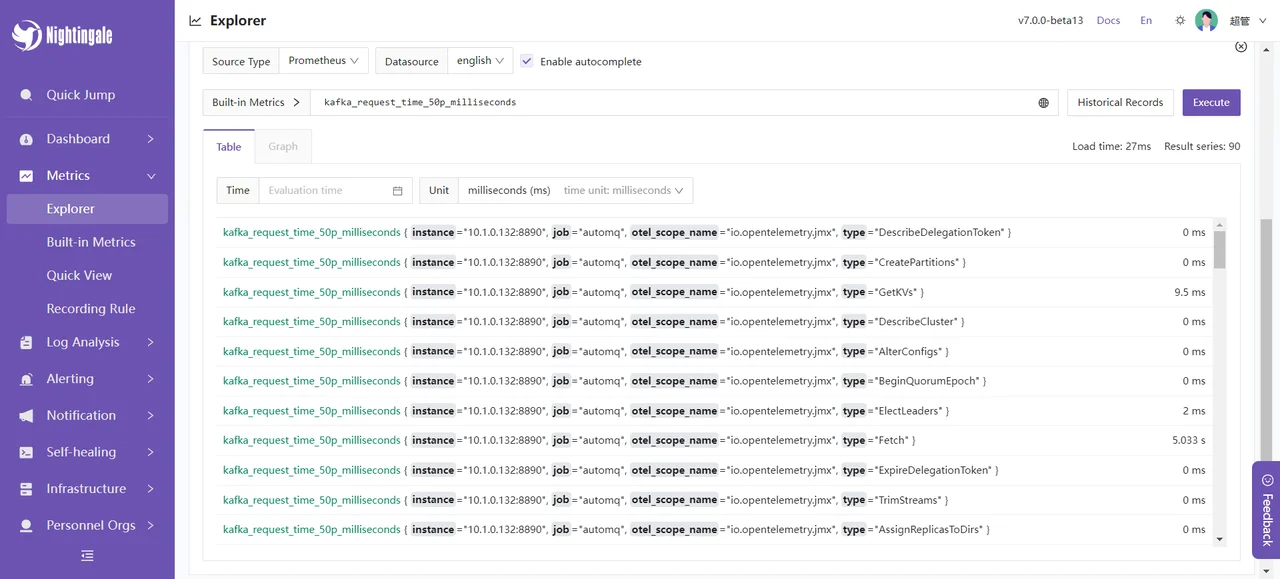

You can try querying some data, such as the median (50th percentile) fetch request processing time, using `kafka_request_time_50p_milliseconds`.

You can also customize some metrics and aggregate them using expressions.

Alert Function

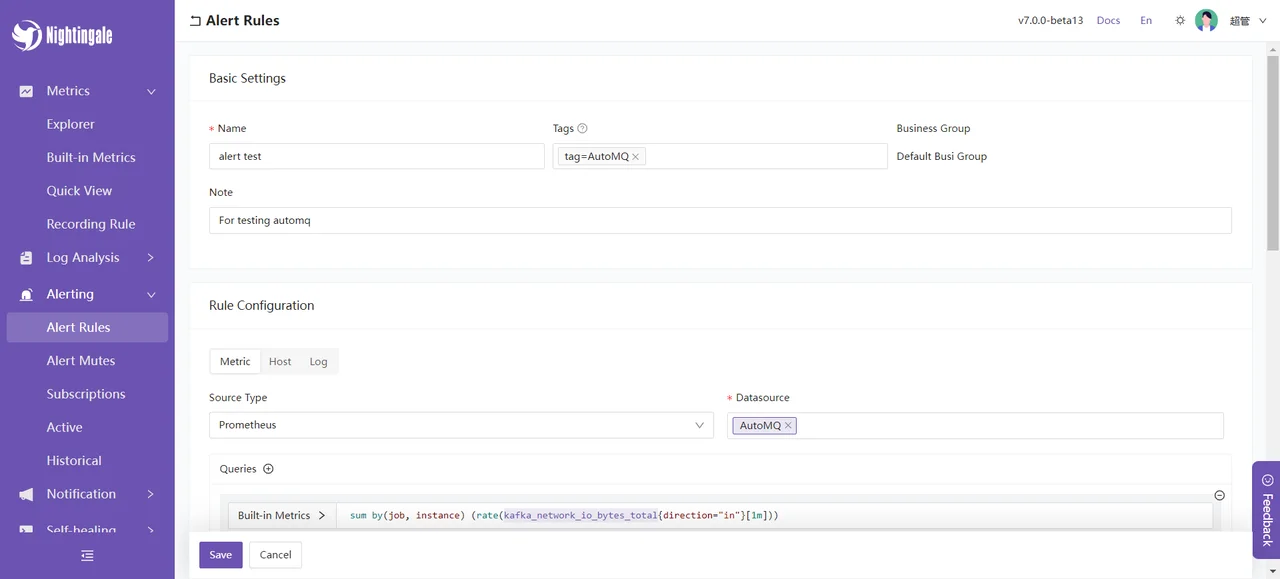

In the left sidebar, choose Alerts -> Alert Rules -> New Rule. For example, we can set an alert on `kafka_network_io_bytes_total`, which counts the total number of bytes sent or received by Kafka broker nodes over the network. Applying `rate` to this counter gives the inbound network I/O rate of the broker nodes. The expression is:

    sum by(job, instance) (rate(kafka_network_io_bytes_total{direction="in"}[1m]))

Configure the alert rule:

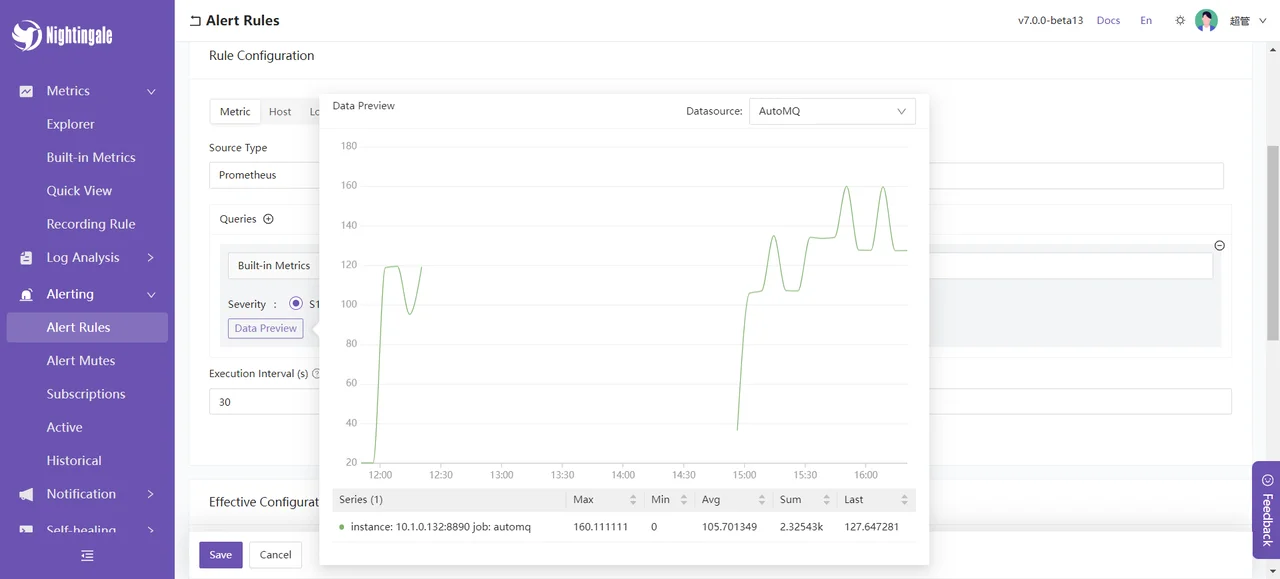

Data preview:

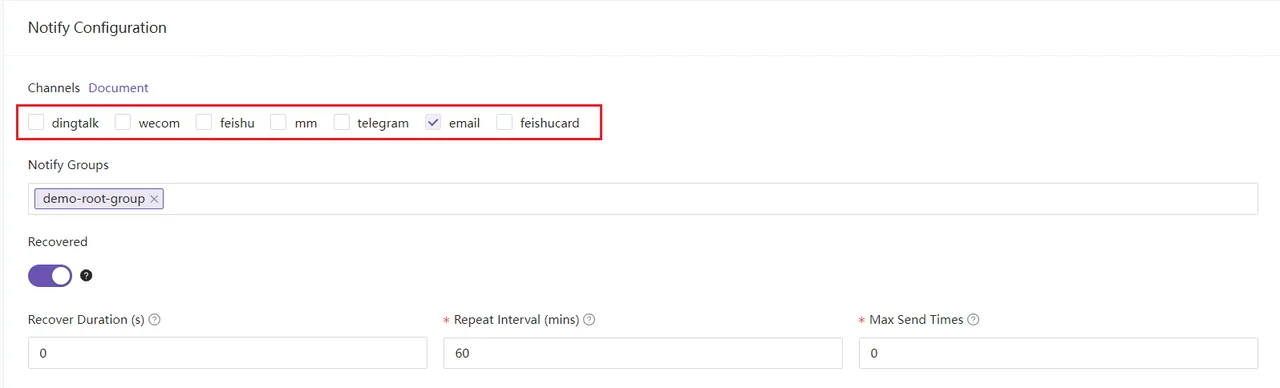
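To give an intuition for what the alert expression computes, the Java sketch below approximates it on in-memory data: a per-second rate from the first and last counter samples in a window, then a sum per instance. This is a deliberate simplification and not how Prometheus implements `rate()`, which also handles counter resets and extrapolates to the window boundaries. `RateSketch`, `Series`, and the sample values are hypothetical, and grouping is done by `instance` only.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Simplified illustration of sum by(instance) (rate(counter[window])); hypothetical names throughout. */
class RateSketch {

    /** First and last counter samples of one series inside the rate window. */
    static final class Series {
        final String instance;
        final double firstValue;
        final long firstTsMs;
        final double lastValue;
        final long lastTsMs;

        Series(String instance, double firstValue, long firstTsMs, double lastValue, long lastTsMs) {
            this.instance = instance;
            this.firstValue = firstValue;
            this.firstTsMs = firstTsMs;
            this.lastValue = lastValue;
            this.lastTsMs = lastTsMs;
        }
    }

    /** Per-second increase between the two samples (no reset handling, no extrapolation). */
    static double simpleRate(Series s) {
        double seconds = (s.lastTsMs - s.firstTsMs) / 1000.0;
        return (s.lastValue - s.firstValue) / seconds;
    }

    /** Adds up the per-series rates that share the same instance label. */
    static Map<String, Double> sumRateByInstance(List<Series> series) {
        Map<String, Double> totals = new LinkedHashMap<>();
        for (Series s : series) {
            totals.merge(s.instance, simpleRate(s), Double::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        // Two inbound byte counters on the same (made-up) broker over a 60-second window.
        List<Series> window = Arrays.asList(
                new Series("broker-1:8890", 0, 0L, 6_000_000, 60_000L),
                new Series("broker-1:8890", 0, 0L, 3_000_000, 60_000L));
        System.out.println(sumRateByInstance(window));
    }
}
```

The alert then fires when this per-instance bytes-per-second value crosses the threshold configured in the rule.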

You can also set up notifications for groups when an alert occurs:

After creating the alert, let's simulate a high-concurrency message processing scenario in which a total of 2,500,000 messages are sent to the AutoMQ nodes within a short period. The messages are sent with the Kafka SDK: 500 messages to each of 50 topics (25,000 messages per round), repeated 100 times. An example is as follows:

import org.apache.kafka.clients.admin.AdminClient;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.admin.NewTopic;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.ArrayList;

import java.util.List;

import java.util.Properties;

import java.util.concurrent.ExecutionException;

public class KafkaTest {

private static final String BOOTSTRAP_SERVERS = "http://{}:9092"; // your automq broker ip

private static final int NUM_TOPICS = 50;

private static final int NUM_MESSAGES = 500;

public static void main(String[] args) throws Exception {

KafkaTest test = new KafkaTest();

// test.createTopics(); // create 50 topics

for(int i = 0; i < 100; i++){

test.sendMessages(); // 25,000 messages will be sent each time, and 500 messages will be sent to each of 50 topics.

}

}

public void createTopics() {

Properties props = new Properties();

props.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, BOOTSTRAP_SERVERS);

try (AdminClient adminClient = AdminClient.create(props)) {

List<NewTopic> topics = new ArrayList<>();

for (int i = 1; i <= NUM_TOPICS; i++) {

topics.add(new NewTopic("Topic-" + i, 1, (short) 1));

}

adminClient.createTopics(topics).all().get();

System.out.println("Topics created successfully");

} catch (InterruptedException | ExecutionException e) {

e.printStackTrace();

}

}

public void sendMessages() {

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, BOOTSTRAP_SERVERS);

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {

for (int i = 1; i <= NUM_TOPICS; i++) {

String topic = "Topic-" + i;

for (int j = 1; j <= NUM_MESSAGES; j++) {

String key = "key-" + j;

String value = "{\"userId\": " + j + ", \"action\": \"visit\", \"timestamp\": " + System.currentTimeMillis() + "}";

ProducerRecord<String, String> record = new ProducerRecord<>(topic, key, value);

producer.send(record, (RecordMetadata metadata, Exception exception) -> {

if (exception == null) {

System.out.printf("Sent message to topic %s partition %d with offset %d%n", metadata.topic(), metadata.partition(), metadata.offset());

} else {

exception.printStackTrace();

}

});

}

}

System.out.println("Messages sent successfully");

}

}

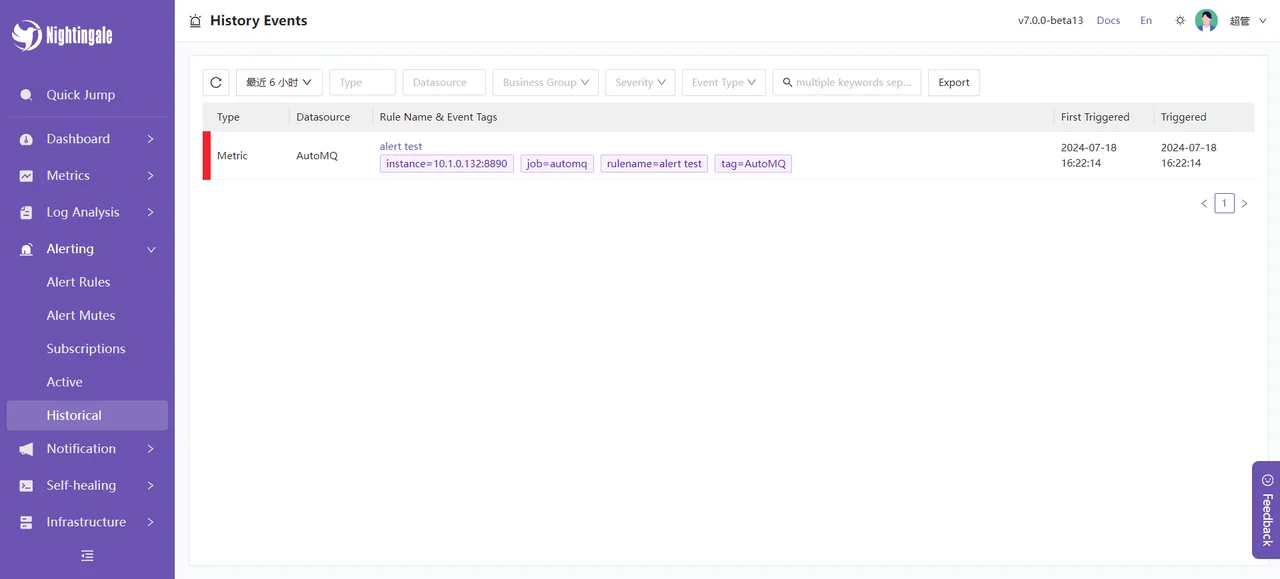

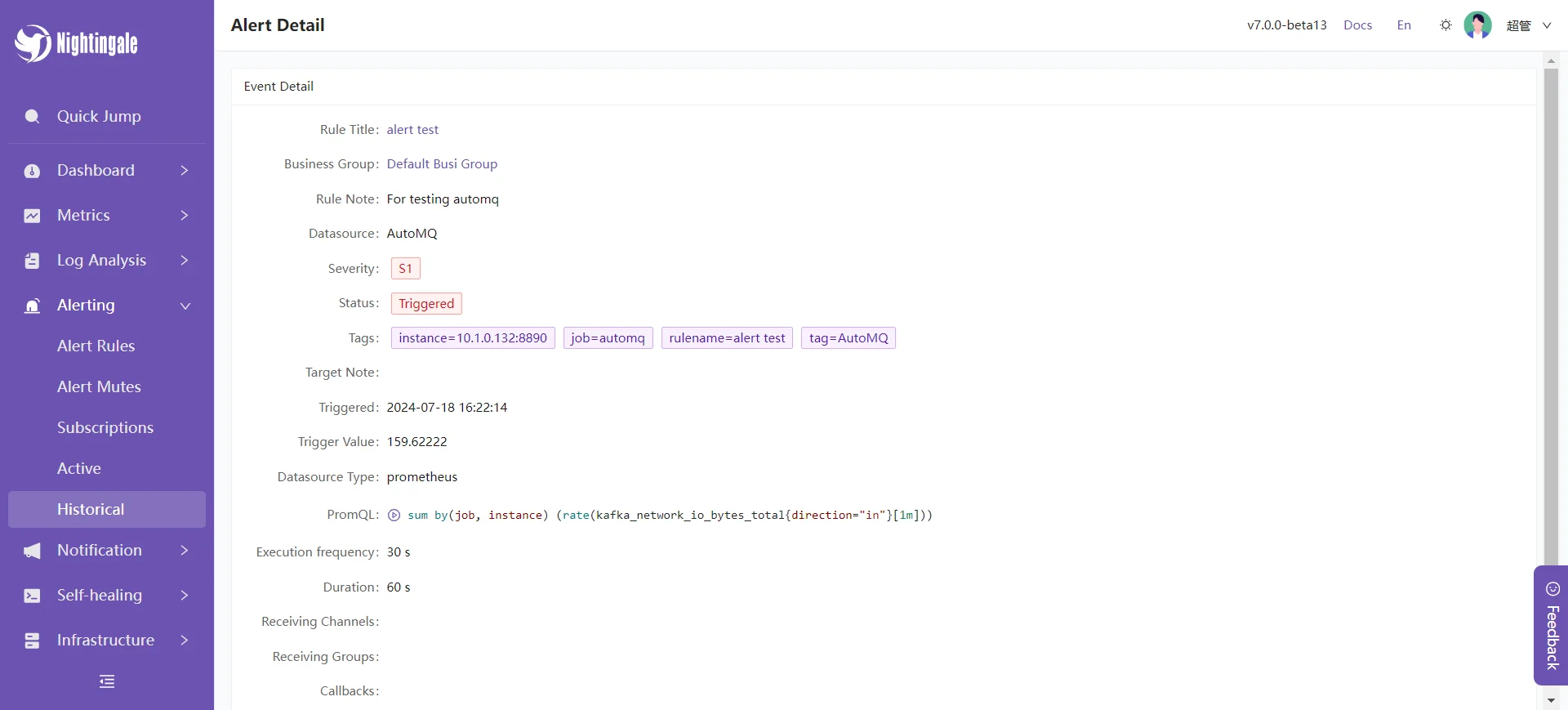

}Subsequently, we can view the alert information on the Nighthawk console:

Alert details:

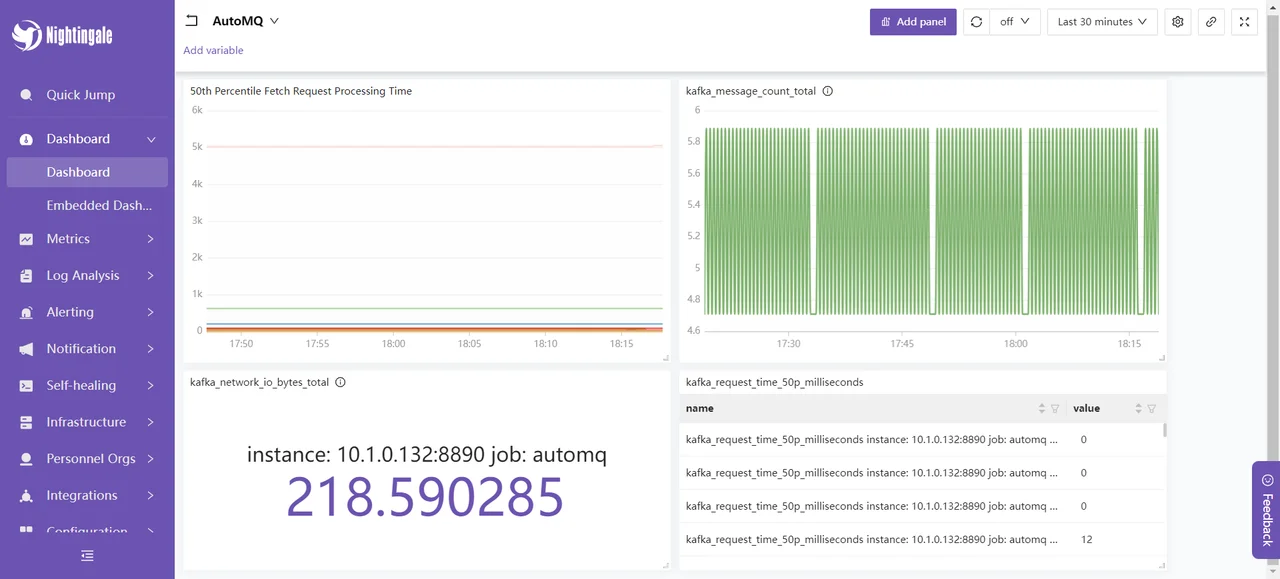

Dashboard

First, we can build our own dashboard from known metrics, for example a dashboard showing AutoMQ request processing time, total number of messages, and network I/O, as shown below:

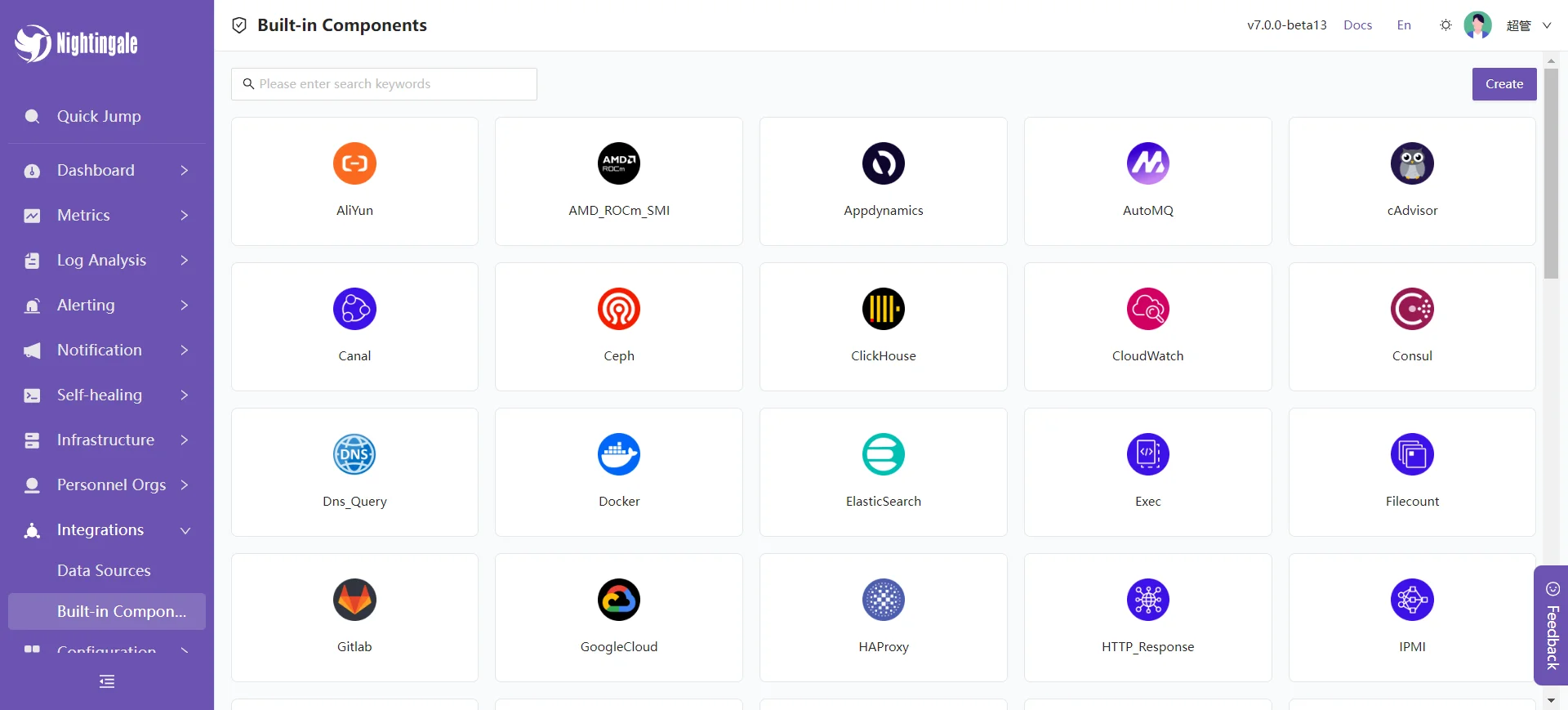

We can also use the official built-in dashboards for monitoring. In the left sidebar, go to Aggregation -> Template Center:

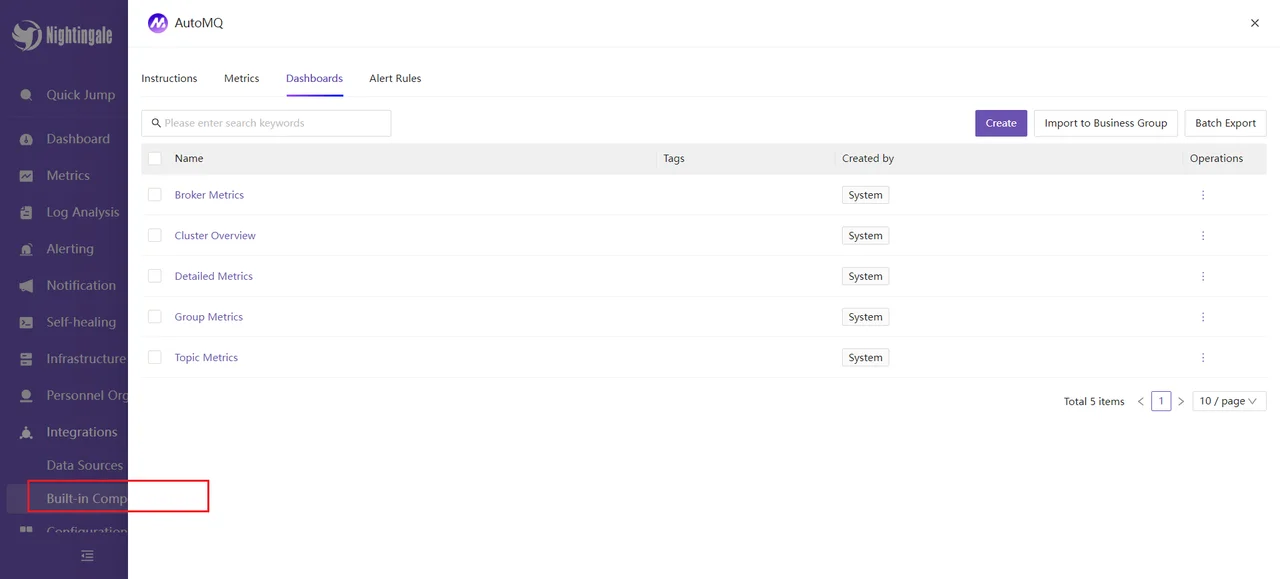

Select AutoMQ, and you will see several Dashboards available for use:

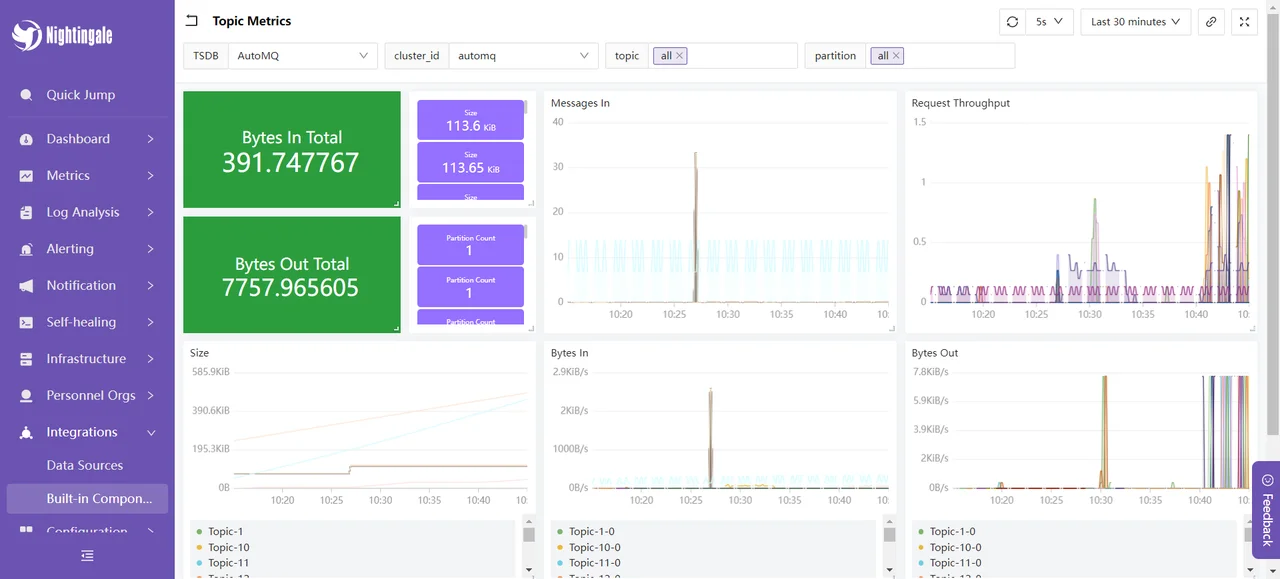

We choose the Topic Metrics dashboard, which displays the following content:

This dashboard shows the message input and output occupancy, message input and request rates, and message sizes of the AutoMQ cluster over a recent period. These metrics are used to monitor and optimize the performance and stability of the AutoMQ cluster:

- Message input and output occupancy: assesses the load from producers and consumers to ensure that the cluster can handle the message flow properly.
- Message input rate: tracks in real time how fast producers send messages, which helps identify potential bottlenecks or traffic spikes.
- Request rate: shows how frequently clients issue requests, which helps when tuning resource allocation and processing capacity.
- Message size: shows the average size of messages, which informs configuration adjustments for storage and network transmission efficiency.

Monitoring these metrics makes it possible to identify and resolve performance issues promptly and keep the AutoMQ cluster running efficiently and stably.

At this point, the integration process is complete. For more usage details, refer to Nightingale's [official documentation][10].
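As a rough illustration of how an average message size can be read from rate metrics, the Java sketch below divides an inbound byte rate by an inbound message rate. That the dashboard derives its panel this way is an assumption, and `MessageSizeSketch` and the sample numbers are hypothetical.

```java
/** Average message size derived from two rates (hypothetical helper; inputs are made-up numbers). */
class MessageSizeSketch {

    /** Bytes per message, or 0 when no messages arrived in the window. */
    static double averageMessageSizeBytes(double bytesInPerSec, double messagesInPerSec) {
        if (messagesInPerSec <= 0) {
            return 0.0; // no traffic: avoid dividing by zero
        }
        return bytesInPerSec / messagesInPerSec;
    }

    public static void main(String[] args) {
        // Example: 2,000,000 bytes/s arriving as 25,000 messages/s
        System.out.println(averageMessageSizeBytes(2_000_000, 25_000) + " bytes per message");
    }
}
```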

Summary

Through this article, we have detailed how to use the Nightingale monitoring system to comprehensively monitor the AutoMQ cluster. We started with the basic concepts of AutoMQ and Nightingale, gradually explaining how to deploy AutoMQ, Prometheus, and Nightingale, and configure monitoring and alerting rules. Through this integration, enterprises can gain real-time insight into the running status of the AutoMQ cluster, promptly identify and resolve potential issues, optimize system performance, and ensure business continuity and stability. The Nightingale monitoring system, with its powerful data collection capabilities, flexible alerting mechanisms, and rich visualization features, is an ideal choice for enterprises to monitor complex distributed systems. We hope this article provides valuable reference for your practical applications, aiding in more efficient and stable system operations.

References

[1] AutoMQ: https://www.automq.com/zh

[2] Nightingale Monitoring: https://flashcat.cloud/docs/content/flashcat-monitor/nightingale-v7/introduction/

[3] Nightingale Architecture: https://flashcat.cloud/docs/content/flashcat-monitor/nightingale-v7/introduction/

[4] Prometheus: https://prometheus.io/docs/prometheus/latest/getting_started/

[5] Cluster Deployment | AutoMQ: https://docs.automq.com/automq/getting-started/cluster-deployment-on-linux

[6] Metrics | AutoMQ: https://docs.automq.com/automq/observability/metrics

[7] Integrating Metrics with Prometheus: https://docs.automq.com/automq/observability/integrating-metrics-with-prometheus

[8] Deployment Instructions: https://flashcat.cloud/docs/content/flashcat-monitor/nightingale-v7/install/intro/

[9] Nightingale GitHub releases: https://github.com/ccfos/nightingale

[10] Nightingale Official Documentation: https://flashcat.cloud/docs/content/flashcat-monitor/nightingale-v7/overview/