Shared storage architecture of AutoMQ

AutoMQ's shared storage is highly flexible, allowing it to run on all cloud providers, including Azure. Depending on the cloud provider, we can select different storage options for optimal performance and cost efficiency.

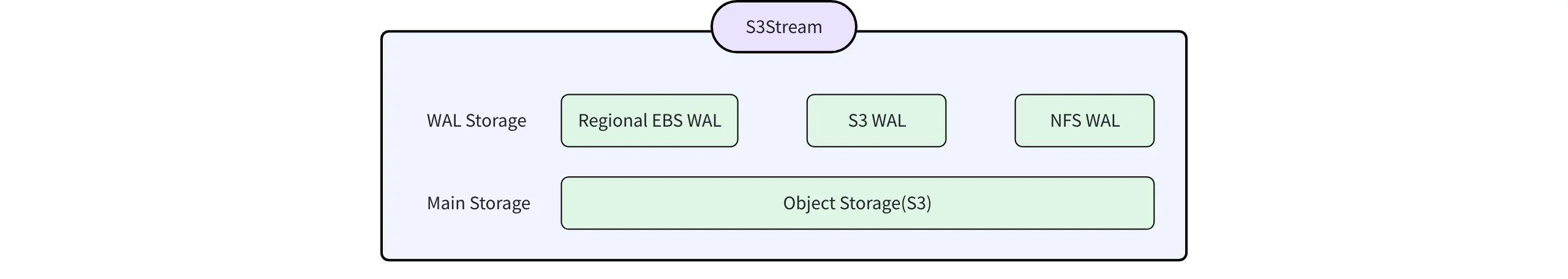

AutoMQ is built on object storage, which offers high throughput at low cost. To optimize latency and reduce the IOPS required by a partitioned system like Apache Kafka, AutoMQ adds a write-ahead log (WAL) storage layer on top of object storage, and customers can choose which storage service backs the WAL.

Typically, there are three options for WAL storage: block storage, file storage, and object storage. Yes, object storage can also serve as WAL storage with a low IOPS requirement. See [1] for more details about the storage architecture of AutoMQ.

Best practice on Azure

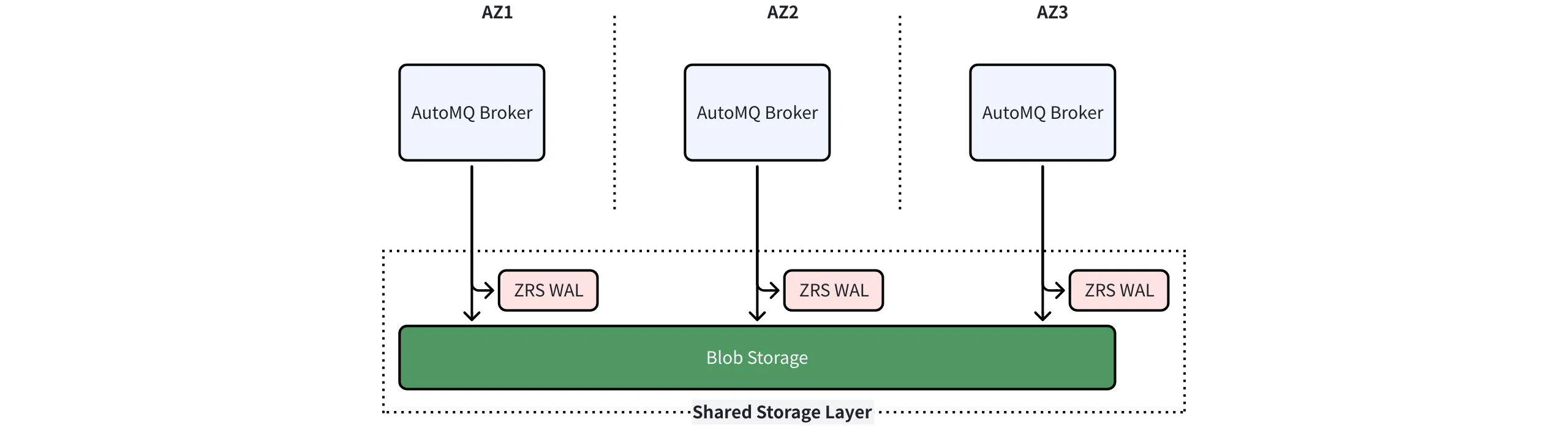

Azure provides zone-redundant storage (ZRS) for managed disks, which synchronously replicates your Azure managed disk across three Azure availability zones in the region you select. Each availability zone is a separate physical location with independent power, cooling, and networking. ZRS disks provide at least 99.9999999999% (12 9's) of durability over a given year. For more details, see [2].

Given the high performance of the ZRS disk, it is the best choice for AutoMQ's WAL storage. Below is the optimal deployment option for AutoMQ on Azure.

The benchmark setup

We chose the Dasv5[3] series for the server side to deploy the AutoMQ broker. Each Dasv5 virtual machine has 4 vCPUs and 16GB of memory.

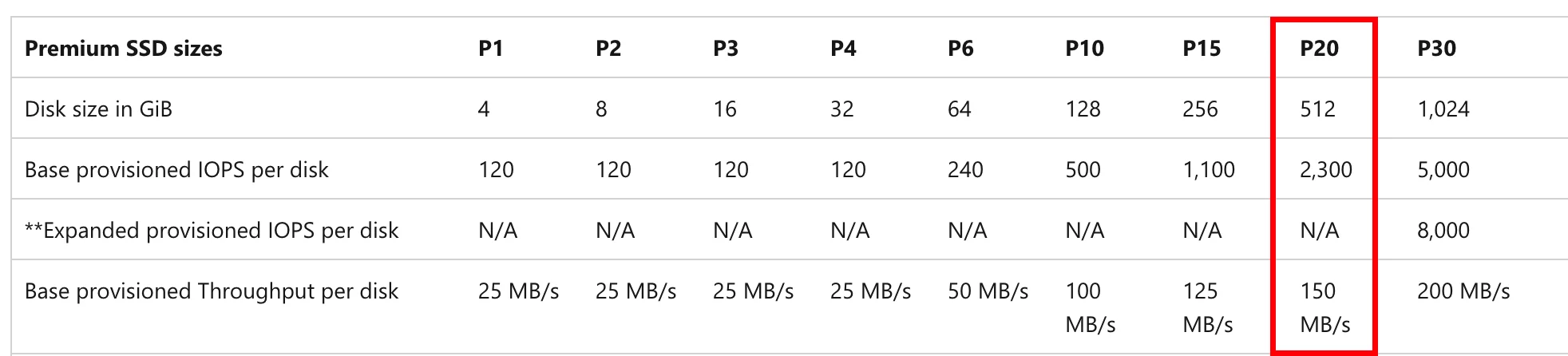

Each broker mounts a ZRS volume for WAL storage. To achieve 2000 IOPS and 150 MB/s throughput, we chose Premium SSD P20 (512 GB) for WAL storage. Note that WAL storage doesn't need anything close to 512 GB; a few GB per WAL is enough. However, unlike AWS gp3, Azure doesn't provide a generous baseline performance for smaller volumes, so we have to provision a larger disk to reach our target performance.
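The sizing decision above can be sketched as a small lookup: pick the smallest disk tier whose baseline IOPS and throughput both meet the target. The tier figures in the table below are assumptions taken from commonly published Azure Premium SSD baselines and should be checked against current Azure documentation; `DiskTier` and `smallest_tier` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class DiskTier:
    name: str
    size_gib: int
    iops: int
    throughput_mbps: int


# Assumed baseline figures for Azure Premium SSD tiers (verify before relying on them).
PREMIUM_SSD_TIERS = (
    DiskTier("P10", 128, 500, 100),
    DiskTier("P15", 256, 1100, 125),
    DiskTier("P20", 512, 2300, 150),
    DiskTier("P30", 1024, 5000, 200),
)


def smallest_tier(
    min_iops: int,
    min_throughput_mbps: int,
    min_size_gib: int = 0,
    tiers: Sequence[DiskTier] = PREMIUM_SSD_TIERS,
) -> Optional[DiskTier]:
    """Return the smallest tier that satisfies every requirement, or None."""
    for tier in sorted(tiers, key=lambda t: t.size_gib):
        if (
            tier.iops >= min_iops
            and tier.throughput_mbps >= min_throughput_mbps
            and tier.size_gib >= min_size_gib
        ):
            return tier
    return None


# WAL target from the text: 2000 IOPS and 150 MB/s, with only a few GB of capacity needed.
wal_tier = smallest_tier(min_iops=2000, min_throughput_mbps=150, min_size_gib=10)
print(wal_tier.name)  # P20
```

Under these assumed figures, capacity is never the binding constraint for the WAL; the performance targets alone push the choice up to P20.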

In conclusion, our cluster setup is as follows:

| Resource | Specification | Quantity |
|---|---|---|
| Virtual Machine | Dasv5 for 4C16G | 15 |
| ZRS | Premium SSD P20 | 15 |
| Blob Storage | Pay as you go | - |

On the client side, we use the AutoMQ perf tool[4] to perform separate 1GB/s write and read operations. You can also reproduce the benchmark using the OpenMessaging framework. Below are the workload and client configurations for OpenMessaging.

Workload Configuration:

    topics: 1
    partitionsPerTopic: 288
    messageSize: 65536
    producerRate: 16640
    producersPerTopic: 64
    subscriptionsPerTopic: 1
    consumerPerSubscription: 64

Client Configuration:

    name: automq
    driverClass: io.openmessaging.benchmark.driver.kafka.KafkaBenchmarkDriver
    replicationFactor: 1
    reset: false
    commonConfig: |
      bootstrap.servers=xxx:9092
      request.timeout.ms=120000
    producerConfig: |
      batch.size=0
    consumerConfig: |
      fetch.max.wait.ms=1000

To mitigate the impact of different clients on batch latency, we disabled the batch feature and used regular 64 KiB packets to simulate the client's batch results.
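As a quick sanity check on the workload numbers, the following sketch derives the aggregate write throughput from `messageSize` and `producerRate`. It assumes that `producerRate` is the total message rate across all producers and that load is spread evenly over the 15 brokers from the cluster table; both are assumptions for illustration, not statements about how the benchmark framework or AutoMQ schedules traffic.

```python
# Values copied from the workload configuration and the cluster setup table.
MESSAGE_SIZE_BYTES = 65536   # messageSize (64 KiB)
PRODUCER_RATE = 16640        # producerRate, assumed to be the aggregate messages/s
PRODUCERS = 64               # producersPerTopic
BROKERS = 15                 # number of Dasv5 brokers

bytes_per_sec = MESSAGE_SIZE_BYTES * PRODUCER_RATE
mib_per_sec = bytes_per_sec / 2**20
gib_per_sec = bytes_per_sec / 2**30

# Assumes perfectly even balancing across brokers and producers.
per_broker_mib_per_sec = mib_per_sec / BROKERS
per_producer_msgs_per_sec = PRODUCER_RATE / PRODUCERS

print(f"aggregate: {mib_per_sec:.0f} MiB/s ({gib_per_sec:.3f} GiB/s)")
print(f"per broker: {per_broker_mib_per_sec:.2f} MiB/s")
print(f"per producer: {per_producer_msgs_per_sec:.0f} msg/s")
```

With these inputs the aggregate comes to 1040 MiB/s, slightly above 1 GiB/s, and the even-split write rate per broker is roughly 69 MiB/s, which stays below the 150 MB/s target chosen for each WAL disk.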

The benchmark report

In this 1 GiB/s scenario, we focus primarily on latency and cost data.

Latency:

| 1GB/s | avg | P50 | P75 | P90 | P95 | P99 |
|---|---|---|---|---|---|---|
| Produce Latency (ms) | 9.508 | 8.125 | 10.655 | 13.281 | 16.179 | 27.538 |
| E2E Latency (ms) | 11.508 | 9.169 | 12.299 | 16.695 | 21.443 | 39.886 |

Latency is primarily affected by the ZRS volume: ZRS replicates data synchronously across three availability zones, so each write incurs cross-AZ latency. Our benchmark results show a P50 produce latency of about 8 ms, but outliers raise the P99 produce latency to about 27.5 ms.

Cost:

| 1GB/s | AutoMQ Brokers* | Blob API | Blob Storage (7 days) | Cross-AZ network traffic | Vendor fees | Total |
|---|---|---|---|---|---|---|
| Monthly cost | $3,510 | $1,101 | $12,029 | $0 | $5,804 | $22,509 |
| Hourly cost | $4.87 | $1.53 | $16.70 | $0 | $8.06 | $31.16 |

Our benchmark results indicate that in such a heavy traffic scenario (1 GiB/s write and 1 GiB/s read), the total AutoMQ cost is $31.16 per hour, or $14.46 per hour excluding blob storage costs.
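The two hourly figures quoted above follow directly from the hourly row of the cost table. A minimal sketch of that arithmetic, using `Decimal` to avoid floating-point rounding on currency values (the dictionary keys are just labels chosen here for readability):

```python
from decimal import Decimal

# Hourly cost components in USD, copied from the cost table.
hourly_cost = {
    "AutoMQ Brokers": Decimal("4.87"),
    "Blob API": Decimal("1.53"),
    "Blob Storage (7 days)": Decimal("16.70"),
    "Cross-AZ network traffic": Decimal("0"),
    "Vendor fees": Decimal("8.06"),
}

total_per_hour = sum(hourly_cost.values(), Decimal("0"))
excluding_blob_storage = total_per_hour - hourly_cost["Blob Storage (7 days)"]

print(total_per_hour)          # 31.16
print(excluding_blob_storage)  # 14.46
```

Blob storage is the largest single line item, a little over half of the hourly total, which is why the figure excluding it is reported separately.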

References

[1]. https://docs.automq.com/automq/architecture/s3stream-shared-streaming-storage/wal-storage

[4]. https://www.automq.com/blog/how-to-perform-a-performance-test-on-automq