Introduction

In modern data architectures, the challenge is no longer just about storing data, but about moving it efficiently and reacting to it in real time. Batch ETL jobs that run overnight are becoming relics in a world that demands immediate data synchronization for microservices, live analytics dashboards, and responsive user experiences. This is where Change Data Capture (CDC) comes in.

CDC is a set of software design patterns used to identify and capture changes made to data in a source database so that downstream systems can act on those changes. Instead of repeatedly copying an entire dataset, CDC provides a stream of just the incremental changes—the inserts, updates, and deletes—as they happen. This approach is foundational for building robust, scalable, and efficient real-time data pipelines [1].

While several methods for implementing CDC exist, they generally fall into three categories: Query-based , Trigger-based , and Log-based . This blog post will provide a concise overview of each before diving into a detailed comparison to help you choose the right approach for your needs.

![Change Data Capture in Kafka System [9]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099c3489b1cfc5798c8e6f_67480fef30f9df5f84f31d36%252F685e62f9b6f732e1179a8698_VU1A.png)

The Core Methods: A Concise Overview

At its core, CDC is about answering the question, "What has changed in my data since I last looked?" Each method provides a different way to answer this.

Query-Based CDC

This is the most straightforward approach. It involves periodically running a query against a source table to identify rows that have changed. This identification relies on a column within the table itself, typically a LAST_UPDATED timestamp or a version number. Its primary appeal is its simplicity, as it requires no special database features beyond a way to identify recent changes. A scheduler, such as a cron job, executes a query like SELECT * FROM my_table WHERE last_updated > 'last_run_time' at regular intervals to fetch new and updated records [2]. However, this simplicity comes at a cost: it cannot inherently detect rows that have been permanently deleted (hard deletes) and can put a significant read load on the database.

Trigger-Based CDC

This method uses native database triggers—stored procedures that execute automatically in response to data modification events ( INSERT , UPDATE , DELETE ). When a change occurs on a monitored table, a trigger fires and writes the change details into a separate, custom-built "shadow" or "audit" table. This audit table typically stores a copy of the changed row, the type of operation ( INSERT , UPDATE , or DELETE ), and a timestamp. Because this write to the audit table occurs synchronously within the same database transaction as the original change, it provides a complete record but also introduces performance overhead. A downstream process, such as a Kafka Connect JDBC connector, then polls this audit table to retrieve the change events.

Log-Based CDC

This is the most sophisticated and powerful approach. It works by reading directly from the database's transaction log (also known as a write-ahead log or WAL). This log is the database's own definitive record of every single transaction. Databases use this log to guarantee data integrity and for internal processes like crash recovery and replication [3]. By tapping into this existing mechanism, log-based CDC tools (like Debezium) can capture every event—including the "before" and "after" state of a row for an update—without interfering with the database's workload. This makes it a highly efficient and complete method for sourcing change data without ever touching the source tables directly.

Head-to-Head: A Detailed Comparison

The choice between CDC methods involves critical trade-offs in performance, reliability, and complexity. The table below provides a high-level comparison, which we will explore in more detail afterward.

| Feature | Query-Based CDC | Trigger-Based CDC | Log-Based CDC |

|---|---|---|---|

| Performance Impact | High: Frequent, heavy read queries can overload the source DB. | Very High: Adds write overhead and locks to every source transaction. | Very Low: Non-intrusive; reads from logs, not production tables. |

| Data Completeness | Poor: Misses hard deletes and intermediate updates between polls. | Good: Captures all DML events, but handling hard deletes requires complex logic. | Excellent: Captures every committed change perfectly, including hard deletes. |

| Latency | High: Dependent on polling frequency; never truly real-time. | Medium: Lower latency than querying, but still requires polling the shadow table. | Very Low: Near real-time, streaming directly from the transaction log. |

| Implementation Complexity | Low: Simple to set up with basic SQL knowledge. | Medium: Triggers are standard SQL, but managing them at scale is complex. | High: Requires deep DB knowledge, special permissions, and dedicated tools. |

| Schema Impact | Medium: Requires adding a timestamp/version column to tables. | High: Requires adding triggers and separate shadow tables to the schema. | None: No changes are required on the source database schema. |

| Maintenance Overhead | Low: The query is simple to maintain. | High: Triggers and shadow tables are brittle and need manual schema updates. | Medium: Requires monitoring a distributed CDC and streaming platform. |

Performance and Source System Impact

This is often the most critical differentiator.

Log-based CDC is the clear winner, as it is almost entirely non-intrusive. Reading from a log file that the database is already writing to for its own purposes adds negligible load [4].

Trigger-based CDC has the most severe performance impact. It adds a synchronous write operation to every transaction, increasing latency and lock contention on your production tables [5].

Query-based CDC places a heavy read load on the database. As tables grow, these frequent polling queries become increasingly expensive and can degrade performance for your primary application workloads.

Data Completeness and Reliability

Your ability to capture every change accurately is paramount.

Log-based CDC provides a mathematically complete, ordered record of every committed transaction. Because it reads the database's own source of truth, it reliably captures all inserts, updates, and hard deletes [3].

Trigger-based CDC can capture all DML events, but reliably propagating hard deletes is a known challenge. The trigger can record that a delete happened, but the downstream polling process needs special logic to interpret this and create a proper "tombstone" event in a system like Kafka [6].

Query-based CDC is the least reliable. First, it cannot detect hard deletes at all, as the row is simply gone. Second, it can miss intermediate updates. If a row is updated multiple times between polls, only the final state will be captured, losing valuable transaction history [2].

Latency and Real-Time Capability

Log-based CDC offers the lowest latency, providing a near real-time stream of events as transactions are committed to the log.

Trigger-based CDC , when paired with a polling mechanism to read its shadow table, has medium latency dictated by the polling interval.

Query-based CDC has the highest latency, as it is entirely dependent on the polling frequency. Setting a very low interval to reduce latency will dramatically increase the performance impact.

Implementation and Maintenance

Query-based CDC is the simplest to set up initially, requiring only a query and a scheduler. However, it requires altering your source tables to add a timestamp column.

Trigger-based CDC is more complex than querying, as it requires writing and deploying triggers and shadow tables. Its main challenge is maintenance: these database objects are brittle and must be updated manually whenever the source schema changes, creating a high risk of failure.

Log-based CDC has the highest upfront implementation complexity. It requires specialized tools (e.g., Debezium), specific database configurations, and elevated permissions. However, once set up, it is far more robust. Modern tools can even handle source schema changes automatically, significantly reducing long-term maintenance compared to a trigger-based approach.

How to Choose the Right CDC Method

Choose Log-Based CDC as your default for all modern, performance-critical systems. It is the gold standard for microservices, data replication, and real-time analytics. Its combination of low impact, high fidelity, and low latency is unmatched.

Choose Trigger-Based CDC only as a fallback when log-based CDC is not an option. This might be due to organizational policies restricting log access or the use of a database platform that lacks a mature log-reading tool. Be prepared to accept the performance cost. Its most robust application is the Transactional Outbox pattern, which guarantees atomicity between a state change and the creation of an outbound event.

Choose Query-Based CDC as a last resort for non-critical, low-volume use cases where some data loss and latency are acceptable. It can be useful for synchronizing data with a system where near real-time updates are not necessary and hard deletes do not occur or do not need to be tracked.

Best Practices for Implementing CDC with Kafka

Design for Idempotency: Systems will inevitably have failures. With the common "at-least-once" delivery guarantee, consumers must be designed to handle duplicate messages without causing data corruption.

Use a Schema Registry: A Schema Registry is critical for managing the evolution of your data's schema over time. It helps ensure that data produced by the CDC connector can always be read by downstream consumers, even after schema changes.

Monitor Everything: A CDC pipeline has many moving parts. Actively monitor the health of the Kafka cluster, the status of the CDC connectors, and—most importantly—the replication lag to ensure data is flowing in a timely manner [7].

Secure the Pipeline: Implement security at every layer. Use TLS to encrypt data in transit, configure authentication and authorization for Kafka and the source database, and always follow the principle of least privilege for database credentials [8].

Conclusion

Change Data Capture is a powerful technique that unlocks the value of data by making it available in real time. While all three methods can feed a Kafka pipeline, they offer vastly different trade-offs.

Log-based CDC stands out as the clear winner for modern, performance-critical applications. Its ability to deliver a complete, low-latency stream of changes with minimal impact on the source system is unmatched. Trigger-based CDC remains a viable, albeit costly, alternative for specific situations where logs are off-limits, while Query-based CDC should be reserved for the simplest, least critical tasks. By understanding the deep trade-offs between performance, reliability, and complexity, you can design robust and efficient data systems that are ready for the demands of a real-time world.

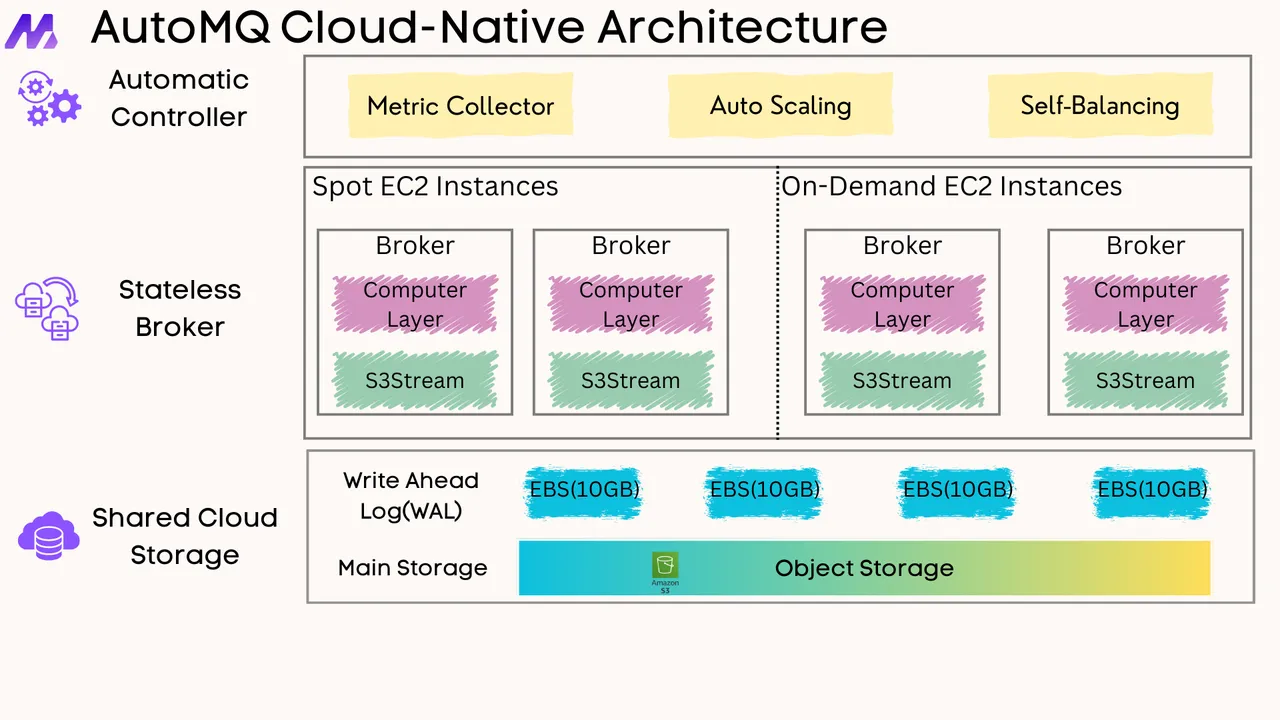

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)