Overview

Integrating Kafka with OCI Object Storage addresses several key needs in data pipeline architectures:

Cost Optimization: Kafka brokers traditionally store data on local disks, which can be expensive for long-term retention or large data volumes. OCI Object Storage provides a much more cost-effective alternative for storing older, less frequently accessed data.

Long-Term Data Retention & Archival: Many regulatory and business requirements mandate long-term data retention. Object storage is ideal for this, offering durability and accessibility for historical data analysis or compliance.

Decoupling Compute and Storage: Separating Kafka's processing (brokers) from its long-term storage allows for independent scaling. You can scale your broker resources based on throughput needs and your object storage based on retention needs.

Data Lake Integration: Data stored in OCI Object Storage can be easily accessed by other big data processing and analytics services within OCI, such as OCI Data Flow (based on Apache Spark), or queried using services like Oracle Database with `DBMS_CLOUD`.

Improved Disaster Recovery: Storing critical Kafka data in a highly durable and replicated object storage service like OCI Object Storage can simplify disaster recovery strategies.

Core Concepts: Kafka and OCI Object Storage

Apache Kafka Fundamentals

Kafka is a distributed streaming platform that allows you to publish and subscribe to streams of records, similar to a message queue or enterprise messaging system. Key Kafka concepts include:

Topics: Categories or feed names to which records are published.

Partitions: Topics are split into partitions, which are ordered, immutable sequences of records. Partitions allow for parallelism.

Brokers: Kafka servers that store data and serve client requests.

Producers: Client applications that publish (write) streams of records to Kafka topics.

Consumers: Client applications that subscribe to (read and process) streams of records from Kafka topics.

Log Segments: Data within a partition is stored in log segments, which are individual files on the broker's disk.

![Apache Kafka Architecture [12]](https://cdn.prod.website-files.com/6809c9c3aaa66b13a5498262/68f5ebc2f4aa6742a902e26a_67480fef30f9df5f84f31d36%252F685e62750df4a037e5bcd8c2_hRzi.png)

OCI Object Storage Fundamentals

OCI Object Storage is a high-performance, scalable, and durable storage platform. Key features include:

Buckets: Logical containers for storing objects. Buckets exist within a compartment in an OCI tenancy.

Objects: Any type of data (e.g., log files, images, videos) stored as an object. Each object consists of the data itself and metadata about the object.

Namespace: Each OCI tenancy has a unique, uneditable Object Storage namespace that spans all compartments and regions. Bucket names must be unique within a namespace.

Durability & Availability: OCI Object Storage is designed for high durability and availability, automatically replicating data across availability domains or, in single-availability-domain regions, across fault domains.

S3 Compatibility API: OCI Object Storage provides an Amazon S3-compatible API, allowing many existing S3-integrated tools and SDKs to work with OCI Object Storage with minimal changes. This is a crucial feature for Kafka integration.

Storage Tiers: Offers different storage tiers (e.g., Standard, Infrequent Access, Archive) to optimize costs based on data access patterns.

Mechanisms for Kafka Integration with OCI Object Storage

There are two primary mechanisms for integrating Kafka with OCI Object Storage: a Kafka Connect S3 sink and tiered storage.

Kafka Connect with an S3-Compatible Sink

Kafka Connect is a framework for reliably streaming data between Apache Kafka and other systems. You can use a "sink" connector to export data from Kafka topics to an external store. Since OCI Object Storage offers an S3-compatible API, S3 sink connectors can be used to move Kafka data into OCI Object Storage buckets.

How it Works:

The S3 sink connector subscribes to specified Kafka topics.

It reads data from these topics.

It batches the data and writes it as objects to a designated OCI Object Storage bucket.

The connector handles data formatting (e.g., Avro, Parquet, JSON), partitioning of data within the bucket (often by Kafka topic, partition, and date), and ensures exactly-once or at-least-once delivery semantics to object storage.
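The batching step above can be illustrated with a toy model. This is only a sketch, not the connector's code: the `SinkSketch` class is hypothetical, and the object key layout (`topics/<topic>/partition=<n>/<topic>+<partition>+<start offset>.json`) is an assumption modelled on the default layout commonly produced by the Confluent S3 sink.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SinkSketch:
    """Toy model of a sink task: buffer per partition, flush every N records."""
    topic: str
    flush_size: int
    topics_dir: str = "topics"
    # partition -> buffered (offset, value) pairs not yet written
    buffers: Dict[int, List[Tuple[int, str]]] = field(default_factory=dict)
    # object key -> record values written under that key
    written: Dict[str, List[str]] = field(default_factory=dict)

    def put(self, partition: int, offset: int, value: str) -> None:
        """Buffer one record and flush when the partition buffer is full."""
        buf = self.buffers.setdefault(partition, [])
        buf.append((offset, value))
        if len(buf) >= self.flush_size:
            self._flush(partition)

    def _flush(self, partition: int) -> None:
        """Write the buffered records as one object named by its first offset."""
        buf = self.buffers.pop(partition)
        start_offset = buf[0][0]
        key = (
            f"{self.topics_dir}/{self.topic}/partition={partition}/"
            f"{self.topic}+{partition}+{start_offset:010d}.json"
        )
        self.written[key] = [value for _, value in buf]


sink = SinkSketch(topic="my_topic_to_archive", flush_size=3)
for offset in range(7):
    sink.put(partition=0, offset=offset, value=f'{{"n": {offset}}}')
```

Naming each object after its first offset makes a retried write target the same key, which is one reason deterministic batching helps with delivery guarantees.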

OCI Configuration for Kafka Connect S3 Sink:

OCI Customer Secret Keys: Generate S3 Compatibility API keys (Access Key ID and Secret Access Key) for an OCI IAM user. The S3 sink connector uses these keys to authenticate with OCI Object Storage.

Environment Variables: Set the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables on the Kafka Connect worker nodes using the generated OCI keys.

Connector Properties:

`s3.region`: Your OCI region (e.g., `us-ashburn-1`).

`store.url` or `s3.endpoint`: The OCI S3 compatibility endpoint: `https://<namespace>.compat.objectstorage.<region>.oraclecloud.com`.

`s3.pathstyle.access` (or a similar property such as `s3.path.style.access.enabled`): Should be set to `true`, as OCI Object Storage typically requires path-style access for its S3-compatible API. Many modern S3 connectors default to path-style access.

`format.class`: Specifies the data format for objects stored in OCI (e.g., `io.confluent.connect.s3.format.avro.AvroFormat`).

`storage.class`: `io.confluent.connect.s3.storage.S3Storage`.

Other properties control batching, flushing, partitioning, error handling, etc.
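To make the endpoint and path-style settings concrete, here is a small sketch that builds the S3 compatibility endpoint from a namespace and region and shows what a path-style object URL looks like. The helper names and the sample namespace, bucket, and key are hypothetical.

```python
def oci_s3_endpoint(namespace: str, region: str) -> str:
    """Build the OCI S3 compatibility endpoint for a namespace and region."""
    if not namespace or not region:
        raise ValueError("namespace and region are both required")
    return f"https://{namespace}.compat.objectstorage.{region}.oraclecloud.com"


def path_style_url(namespace: str, region: str, bucket: str, key: str) -> str:
    """Path-style addressing keeps the bucket in the URL path, not the hostname."""
    return f"{oci_s3_endpoint(namespace, region)}/{bucket}/{key}"


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(path_style_url("mynamespace", "us-ashburn-1", "my-bucket", "topics/a.json"))
```

With virtual-hosted-style addressing, the bucket name would instead be prepended to the hostname, which is why the path-style flag matters for an endpoint that already carries the namespace in its hostname.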

Example S3 Sink Connector Configuration for OCI Object Storage

The configuration below uses the Confluent S3 sink connector class; another S3 sink connector can be substituted. Replace `<your_namespace>` and the region with your own values. A `partition.duration.ms` of 3600000 yields hourly partitions, and `s3.path.style.access.enabled` is set explicitly even though it is often the default. JSON does not allow comments, so these notes are kept outside the block.

```json
{
  "name": "s3-sink-oci-example",
  "config": {
    "connector.class": "io.confluent.connect.s3.S3SinkConnector",
    "tasks.max": "1",
    "topics": "my_topic_to_archive",
    "s3.bucket.name": "my-oci-kafka-archive-bucket",
    "s3.region": "us-ashburn-1",
    "store.url": "https://<your_namespace>.compat.objectstorage.us-ashburn-1.oraclecloud.com",
    "flush.size": "10000",
    "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
    "storage.class": "io.confluent.connect.s3.storage.S3Storage",
    "partitioner.class": "io.confluent.connect.storage.partitioner.TimeBasedPartitioner",
    "path.format": "'year'=YYYY/'month'=MM/'day'=dd/'hour'=HH",
    "partition.duration.ms": "3600000",
    "locale": "en-US",
    "timezone": "UTC",
    "s3.path.style.access.enabled": "true"
  }
}
```
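As a rough illustration of how a time-based partitioner maps a record timestamp to an object prefix, the sketch below floors a timestamp to its hourly bucket and renders the `year=/month=/day=/hour=` layout in UTC. The function name is hypothetical, and this only approximates the idea; it does not reproduce the connector's actual implementation.

```python
from datetime import datetime, timezone


def time_partition_path(timestamp_ms: int, partition_duration_ms: int = 3_600_000) -> str:
    """Render the year=/month=/day=/hour= prefix for the bucket holding timestamp_ms (UTC)."""
    # Floor the timestamp to the start of its partition window.
    bucket_start_ms = timestamp_ms - (timestamp_ms % partition_duration_ms)
    dt = datetime.fromtimestamp(bucket_start_ms / 1000, tz=timezone.utc)
    return f"year={dt:%Y}/month={dt:%m}/day={dt:%d}/hour={dt:%H}"


if __name__ == "__main__":
    ts = int(datetime(2024, 3, 5, 14, 37, 12, tzinfo=timezone.utc).timestamp() * 1000)
    print(time_partition_path(ts))
```

Prefixes of this shape are convenient for downstream engines that can prune partitions by directory name.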

Tiered Storage

Tiered storage is available in some Kafka distributions and in open-source Apache Kafka via KIP-405 (early access since Kafka 3.6, declared production-ready in 3.9). It allows Kafka brokers themselves to offload older log segments from local broker storage to a remote, cheaper storage system like OCI Object Storage.

How it Works (General Concept):

Local Tier: Kafka brokers continue to write new data to their local disks (the "hot" tier) for low-latency reads and writes.

Remote Tier: As data segments age and become less frequently accessed, the broker asynchronously copies these segments to the remote storage tier (e.g., OCI Object Storage).

Metadata Management: The broker maintains metadata about which segments are local and which are remote.

Data Access:

Consumers requesting recent data are served from the local tier.

Consumers requesting older data (that has been tiered) will have that data transparently fetched from the remote tier (OCI Object Storage) by the broker. This might incur higher latency compared to local reads.

Retention: Local retention policies can be set for a shorter duration, while data in the remote tier can be retained for much longer, aligning with cost and compliance needs.
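The interplay of local and remote retention can be sketched with a simplified model. The `Segment` class and both functions are hypothetical, and the model is deliberately coarse: in practice a rolled segment may exist in both tiers until its local copy expires, whereas here each segment is assigned to exactly one tier by age.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Segment:
    base_offset: int
    end_offset: int  # inclusive
    age_ms: int      # time since the segment was rolled


def classify(segments: List[Segment], local_retention_ms: int, retention_ms: int) -> Dict[int, str]:
    """Map each segment's base offset to 'local', 'remote', or 'expired'."""
    tiers = {}
    for seg in segments:
        if seg.age_ms > retention_ms:
            tiers[seg.base_offset] = "expired"   # past total retention
        elif seg.age_ms > local_retention_ms:
            tiers[seg.base_offset] = "remote"    # only the remote copy remains
        else:
            tiers[seg.base_offset] = "local"     # still on broker disk
    return tiers


def tier_for_fetch(offset: int, segments: List[Segment], tiers: Dict[int, str]) -> str:
    """Return the tier that would serve a consumer fetch at the given offset."""
    for seg in segments:
        if seg.base_offset <= offset <= seg.end_offset:
            return tiers[seg.base_offset]
    raise KeyError(f"offset {offset} is not covered by any segment")


HOUR_MS = 3_600_000
segments = [
    Segment(0, 999, age_ms=40 * 24 * HOUR_MS),    # 40 days old
    Segment(1000, 1999, age_ms=3 * 24 * HOUR_MS), # 3 days old
    Segment(2000, 2999, age_ms=2 * HOUR_MS),      # 2 hours old
]
tiers = classify(segments, local_retention_ms=24 * HOUR_MS, retention_ms=30 * 24 * HOUR_MS)
```

In this example a consumer fetching offset 1500 would be served from the remote tier, while offset 2500 would still come from local disk.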

OCI Configuration for Tiered Storage:

The specific configuration depends on the Kafka distribution or the KIP-405 plugin being used. However, the principles for S3-compatible storage are similar:

S3 Endpoint Override: Configure the Kafka broker or plugin with the OCI S3 compatibility endpoint: `https://<namespace>.compat.objectstorage.<region>.oraclecloud.com`.

OCI Region: Specify your OCI region.

Authentication: Provide OCI Customer Secret Keys (Access Key ID and Secret Key) through configuration files or environment variables. The S3 Compatibility API authenticates with Customer Secret Keys, so instance principals are an option only when brokers run on OCI Compute and the plugin talks to the native OCI Object Storage API instead.

Path-Style Access: Ensure path-style access is enabled.

Bucket Information: Specify the OCI bucket to be used for tiered data.

Example properties for a KIP-405 RemoteStorageManager plugin for OCI

```properties
# Actual property names may vary based on the specific plugin implementation.
rsm.config.storage.backend.class=com.example.S3RemoteStorageManager
rsm.config.s3.endpoint=https://<your_namespace>.compat.objectstorage.<oci_region>.oraclecloud.com
rsm.config.s3.region=<oci_region>
rsm.config.s3.bucket.name=my-kafka-tiered-storage-bucket
rsm.config.s3.access.key.id=<OCI_customer_access_key>
rsm.config.s3.secret.access.key=<OCI_customer_secret_key>
rsm.config.s3.path.style.access.enabled=true
```

Some Kafka distributions provide this capability as a built-in feature, and their documentation will specify the exact properties for S3-compatible tiered storage configuration targeting OCI.
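For open-source Apache Kafka, the plugin-specific `rsm.config.*` settings sit alongside a few broker-level properties that switch tiered storage on and point at the plugin. The sketch below renders such a fragment. The `remote.log.storage.*` names are the Apache Kafka broker properties as I understand them; the function name, plugin class, class path, and plugin settings are placeholders, so verify everything against your Kafka version and plugin documentation.

```python
from typing import Dict


def tiered_storage_broker_properties(
    plugin_class: str,
    plugin_class_path: str,
    rsm_settings: Dict[str, str],
    rsm_prefix: str = "rsm.config.",
) -> str:
    """Render a server.properties fragment that enables a RemoteStorageManager plugin."""
    props = {
        "remote.log.storage.system.enable": "true",
        "remote.log.storage.manager.class.name": plugin_class,
        "remote.log.storage.manager.class.path": plugin_class_path,
        # Settings under this prefix are handed to the plugin.
        "remote.log.storage.manager.impl.prefix": rsm_prefix,
    }
    for key, value in rsm_settings.items():
        props[f"{rsm_prefix}{key}"] = value
    return "\n".join(f"{k}={v}" for k, v in props.items())


if __name__ == "__main__":
    # Placeholder plugin class, path, and settings, for illustration only.
    print(tiered_storage_broker_properties(
        plugin_class="com.example.S3RemoteStorageManager",
        plugin_class_path="/opt/kafka/plugins/tiered-storage/*",
        rsm_settings={
            "s3.bucket.name": "my-kafka-tiered-storage-bucket",
            "s3.path.style.access.enabled": "true",
        },
    ))
```

Beyond this fragment, the default metadata manager typically also needs `remote.log.metadata.manager.listener.name`, and tiering is enabled per topic with `remote.storage.enable=true` plus a `local.retention.ms` or `local.retention.bytes` limit.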

Comparison of Approaches

| Feature | Kafka Connect S3 Sink | Tiered Storage (Broker-Integrated) |
|---|---|---|
| Mechanism | Separate Kafka Connect cluster/workers | Integrated into Kafka brokers |
| Data Offload | Data copied from topics to Object Storage | Log segments moved from local to remote store |
| Consumer Access | Consumers read from Kafka; data in OCI is for archival/analytics | Consumers can read older data transparently via brokers (may incur latency) |
| Complexity | Requires managing Kafka Connect | Managed within the Kafka cluster |
| Broker Impact | Minimal impact on broker storage (data consumed by Connect) | Reduces broker disk usage for older data |
| Latency (for old data) | N/A (data is offline from Kafka perspective) | Higher for reads from remote tier |
| Use Case | Archival, data lake ingestion, batch analytics | Infinite retention within Kafka abstraction, cost saving on broker storage |
| Compacted Topics | Supported for archival | Support varies by implementation (KIP-405 typically doesn't, some distributions do) |
| OCI S3 Endpoint | Configured in Connect worker/connector | Configured in broker settings/plugin |
| OCI Auth | OCI IAM user keys for Connect workers | OCI IAM user keys or instance principals for brokers |

Best Practices for Kafka with OCI Object Storage

IAM Policies

Follow the principle of least privilege. Create dedicated OCI IAM users or dynamic groups (for OCI Compute instances) for Kafka brokers (if using tiered storage) or Kafka Connect workers.

Grant these principals only the necessary permissions (e.g., `manage object-family`, or more granular permissions such as `OBJECT_CREATE`, `OBJECT_READ`, `OBJECT_DELETE`, and `BUCKET_INSPECT`) on the specific OCI bucket(s) they need to access.

Example Policy for a dynamic group:

Allow dynamic-group <kafka_component_dynamic_group_name> to manage object-family in compartment <your_compartment_name> where target.bucket.name = '<your_kafka_bucket_name>'

Allow dynamic-group <kafka_component_dynamic_group_name> to use buckets in compartment <your_compartment_name> where target.bucket.name = '<your_kafka_bucket_name>'

OCI Bucket Configuration

Use separate buckets for different Kafka clusters or purposes.

Keep data encrypted at rest. OCI Object Storage applies server-side encryption to all data by default, so no extra step is needed for baseline encryption. Consider using Customer-Managed Keys (CMK) via OCI Vault for enhanced control.

Enable versioning on buckets if you need to protect against accidental overwrites or deletions, though this increases storage costs.

Implement Object Lifecycle Management policies to transition data to cheaper storage tiers (e.g., Infrequent Access, Archive) or delete it after a certain period, based on your retention requirements.

Data Formats

For Kafka Connect S3 sink, choose a format suitable for your downstream use cases.

Apache Avro: Good for schema evolution and compact binary storage; often used with a schema registry.

Apache Parquet: Columnar format, excellent for analytical queries on data lakes. Data is often converted from Avro (streaming format) to Parquet (analytics format) in object storage.

Security

Use HTTPS for all connections to OCI Object Storage. The OCI S3-compatible endpoint uses HTTPS.

Securely manage OCI Customer Secret Keys. Use OCI Vault for storing secrets, or environment variables in secure environments. Kafka Connect also supports `ConfigProvider` plugins that fetch secrets from secure stores at runtime.

Use OCI Network Security Groups (NSGs) or Security Lists to restrict network access to Kafka brokers and Connect workers.

If Kafka components run within an OCI VCN, use a Service Gateway to access OCI Object Storage without traffic traversing the public internet.

Performance & Cost

Tune Kafka Connect S3 sink properties (e.g., `flush.size`, `rotate.interval.ms`) to balance write frequency to OCI against data freshness/latency.

For tiered storage, be mindful of the potential latency impact for consumers reading older, tiered data. Segment size can also impact remote read performance.

Monitor OCI Object Storage costs, paying attention to storage volume, request rates (PUT, GET, LIST), and data retrieval costs (especially from archive tiers).

Use appropriate OCI Object Storage tiers: Standard for frequently accessed archival data, Infrequent Access for data that is read less often, and Archive for long-term cold storage.
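To get a feel for how `flush.size` drives request volume, a back-of-the-envelope estimate can help. The sketch below counts objects written per day, assuming one object per `flush.size` records per partition and no time-based rotation. The function name and the sample throughput are hypothetical, and because connectors may upload an object in several parts, the result is better read as a lower bound on PUT requests.

```python
import math


def estimated_objects_per_day(records_per_sec_per_partition: float, partitions: int, flush_size: int) -> int:
    """Estimate objects written per day when each partition flushes every flush_size records."""
    if flush_size <= 0 or partitions <= 0:
        raise ValueError("flush_size and partitions must be positive")
    records_per_day = records_per_sec_per_partition * 86_400
    objects_per_partition = math.ceil(records_per_day / flush_size)
    return objects_per_partition * partitions


if __name__ == "__main__":
    # Hypothetical workload: 500 records/s per partition across 12 partitions.
    print(estimated_objects_per_day(500, partitions=12, flush_size=10_000))
```

Raising `flush.size` lowers the object count proportionally, at the cost of data reaching OCI later.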

Monitoring

Monitor Kafka Connect worker JMX metrics (e.g., task status, records sent, errors).

For tiered storage, monitor broker metrics related to remote storage operations (if exposed by the implementation), such as upload/download rates, errors, and latency.

Monitor OCI Object Storage metrics via the OCI Monitoring service: `PutRequests`, `GetRequests`, `ListRequests`, `ClientErrors` (4xx), `ServerErrors` (5xx), `StoredBytes`, and `FirstByteLatency` for the target bucket. Correlate these with Kafka-side metrics.
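Alongside JMX, task health can also be checked through the Kafka Connect REST API, whose `GET /connectors/<name>/status` endpoint returns the connector and task states as JSON. The sketch below parses such a response and lists failed tasks. The sample payload, worker IDs, and function name are made up for illustration, and fetching the JSON from a live worker is left out.

```python
import json
from typing import List


def failed_tasks(status_json: str) -> List[int]:
    """Return the IDs of tasks reported as FAILED in a connector status response."""
    status = json.loads(status_json)
    return [t["id"] for t in status.get("tasks", []) if t.get("state") == "FAILED"]


# Illustrative payload shaped like a connector status response.
SAMPLE_STATUS = json.dumps({
    "name": "s3-sink-oci-example",
    "connector": {"state": "RUNNING", "worker_id": "connect-1:8083"},
    "tasks": [
        {"id": 0, "state": "RUNNING", "worker_id": "connect-1:8083"},
        {"id": 1, "state": "FAILED", "worker_id": "connect-2:8083", "trace": "..."},
    ],
})

if __name__ == "__main__":
    print(failed_tasks(SAMPLE_STATUS))
```

A check like this can feed an alert that is then correlated with `ClientErrors` or `ServerErrors` on the bucket.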

Conclusion

Integrating Apache Kafka with OCI Object Storage offers a powerful solution for managing streaming data archives efficiently and cost-effectively. Whether using Kafka Connect S3 sinks for offloading data to a data lake or leveraging tiered storage for extending Kafka's own storage capacity, OCI provides a robust and scalable backend. By understanding the mechanisms, correctly configuring the integration, and following best practices for security and IAM, organizations can unlock significant benefits for their real-time data pipelines and long-term data retention strategies.

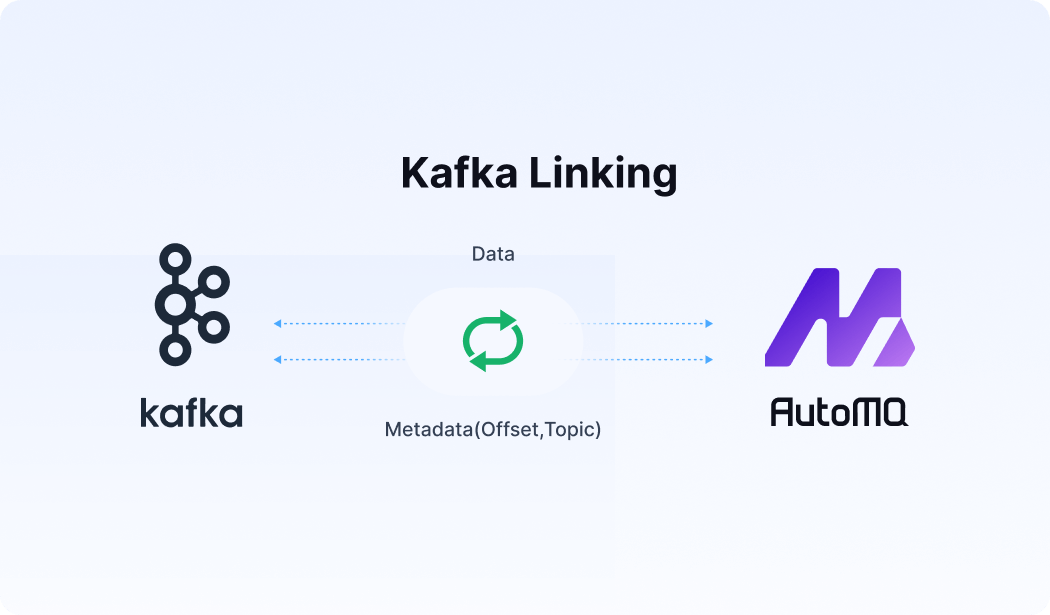

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)