Overview

Data storage and management solutions have evolved significantly to handle the exponential growth of data in modern organizations. Data lakes and data warehouses represent two distinct approaches to storing, processing, and analyzing data. This blog provides a detailed comparison of these technologies, their architectures, use cases, and best practices.

Introduction to Data Lakes and Data Warehouses

Data Lakes

A data lake is a centralized repository designed to store vast amounts of structured, semi-structured, and unstructured data in its native format[1]. The concept emerged as organizations needed a solution to store and process the growing volumes of diverse data types that traditional systems couldn't efficiently handle. Data lakes allow organizations to ingest raw data without having to structure it first, following the principle of "store now, analyze later."

![Data Lake Overview[81]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099c4f4d1460920c1738cd_67480fef30f9df5f84f31d36%252F685e663aa786775a233fe66b_8HBD.png)

Data Warehouses

A data warehouse is an organized collection of structured data that has been processed and transformed for specific analytical purposes[5]. Data warehouses are optimized for fast query performance and business intelligence applications, containing data that has already been cleansed, formatted, and validated. They serve as a central repository for integrated data from multiple disparate sources[3].

![Data Warehouse Overview[80]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099c4f4d1460920c1738d0_67480fef30f9df5f84f31d36%252F685e663ae2bfb92fe1a544d6_Qydl.png)

Core Differences Between Data Lakes and Data Warehouses

Data Structure and Schema Approach

The most fundamental difference between data lakes and data warehouses lies in their approach to data structure and schema:

Data Lakes use a schema-on-read approach where data is stored in its raw format, and structure is applied only when the data is read or analyzed[4]. This provides flexibility but requires more processing at query time.

Data Warehouses employ a schema-on-write approach where data is structured, cleansed, and transformed before it's loaded into the system[4]. This front-loading of work ensures faster query performance but less flexibility[4].

Data Processing and Storage

Data Lakes:

Store raw data in its native format

Support all data types (structured, semi-structured, unstructured)

Focus on low-cost storage for large volumes of data

Use Extract, Load, Transform (ELT) processes[19]

Data Warehouses:

Store processed and transformed data

Primarily support structured data

Optimize storage for query performance

Use Extract, Transform, Load (ETL) processes[19]

Comprehensive Comparison Table

| Characteristic | Data Lake | Data Warehouse |

|---|---|---|

| Data Types | Structured, semi-structured, and unstructured | Primarily structured data |

| Schema | Schema-on-read | Schema-on-write |

| Data Quality | Raw, unprocessed (may include duplicates or errors) | Curated, processed, verified |

| Users | Data scientists, data engineers, architects, analysts | Business analysts, data developers |

| Use Cases | Machine learning, exploratory analytics, big data, streaming | Business intelligence, reporting, historical analysis |

| Cost | Lower storage cost, higher processing cost | Higher storage cost, lower processing cost |

| Performance | Prioritizes storage volume and cost over query speed | Optimized for fast query execution |

| Flexibility | Highly flexible for various data types and analyses | Less flexible, designed for specific analytical needs |

| Complexity | Higher complexity to manage and access data | Lower complexity with predefined structures |

| Processing Pattern | ELT (Extract, Load, Transform) | ETL (Extract, Transform, Load) |

Architectural Considerations

Data Lake Architecture

Traditional data lake architectures were on-premise deployments built on platforms like Hadoop[2]. These were created before cloud computing became mainstream and required significant management overhead. As cloud computing evolved, organizations began creating data lakes in cloud-based object stores, accessible via SQL abstraction layers[2].

Modern data lake architectures are often cloud-based analytics layers that maximize query performance against data stored in a data warehouse or an external object store[2]. This enables more efficient analytics across diverse data sets and formats.

Data Warehouse Architecture

Data warehouse architectures typically follow one of two models:

Two-Tier Architecture : Uses staging to extract, transform, and load data into a centralized repository paired with analytical tools[3].

Three-Tier Architecture : Adds an Online Analytical Processing (OLAP) Server between the data warehouse and end users, providing an abstracted view of the database for better scalability and performance[3].

More complex implementations might include bus, hub-and-spoke, or federated models to address specific organizational needs[3].

Use Cases and Applications

Data Lake Applications

Real-time data aggregation from diverse sources : Organizations with numerous data sources like IoT devices, customer data, social media, and corporate systems benefit from data lakes' ability to ingest diverse data types[10].

Big data processing and analytics : Data lakes integrate easily with advanced analytics and machine learning tools, enabling data scientists to perform deep data analysis and implement machine learning models[10].

Business continuity : Data lakes can serve as a single storage system to speed up service delivery and maintain business continuity, as demonstrated by Grand River Hospital, which migrated nearly three terabytes of patient data to eliminate the need for 27 diverse healthcare applications[10].

Always-on business services : Real-time data ingestion allows data lakes to make business data available at any time, supporting mission-critical applications like banking systems and clinical decision-making software[10].

Data Warehouse Applications

Business intelligence and reporting : Data warehouses excel at providing structured data for consistent reporting and dashboarding.

Historical data analysis : The structured nature of data warehouses makes them ideal for analyzing trends over time and comparing historical performance.

Regulatory compliance reporting : The highly curated nature of data warehouses ensures data consistency for compliance reporting requirements.

Best Practices

Data Lake Best Practices

Use the data lake as a foundation for raw data : Store data in its native format without transformation (except for PII removal) to avoid losing potentially valuable information[13].

Implement proper data governance : Establish a robust framework including policies for data classification, lineage, access controls, and audit trails to maintain data quality, security, and compliance[16].

Regular audits and maintenance : Conduct regular reviews of data quality, governance policies, access controls, and performance metrics to prevent the data lake from becoming a "data swamp"[16].

Data lifecycle management : Implement policies defining retention periods for different data types based on regulatory requirements and business needs to optimize storage costs and performance[16].

User access control : Use role-based access control (RBAC) to ensure users only access the data they need, and regularly review access logs to detect unauthorized access attempts[16].

Data Warehouse Best Practices

Understand your data warehousing needs : Identify whether a data warehouse is appropriate for your specific use case or if a data lake or RDBMS might be more suitable[9].

Choose the right data warehouse architecture : Select between cloud-based and on-premises solutions based on organizational size, business scope, and specific requirements[9].

Establish an operational data plan : Develop a strategy covering development, testing, and production to anticipate current and future warehousing needs and determine capacity requirements[9].

Define access controls : Implement governance rules specifying who can access the system, when, and for what purposes, complemented by appropriate cybersecurity measures[9].

Modern Approach: Data Lakehouse

The data lakehouse architecture combines elements of both data lakes and warehouses, using similar data structures and management features to those in a data warehouse but running them directly on the low-cost, flexible storage used for cloud data lakes[13]. This architecture allows traditional analytics, data science, and machine learning to coexist in the same system.

The lakehouse architecture bridges this divide by implementing warehouse-like management features directly on cost-efficient cloud storage – using metadata layers like Delta Lake to enable ACID transactions, schema enforcement, and version control while maintaining the flexibility to handle diverse data types. This evolution specifically targets three core problems: 1) eliminating data silos between analytical and machine learning systems, 2) reducing ETL complexity and data duplication across separate lake/warehouse infrastructures, and 3) enabling concurrent access to fresh data for BI, SQL analytics, and advanced AI use cases within a single platform.

By combining the structured data management of warehouses with the scalability of lakes, lakehouses provide a unified architecture that supports both batch and real-time processing while maintaining data integrity through transactional guarantees.

![Data Lakehouse Architecture Compares to Data Warehouse and Data Lake[80]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099c4f4d1460920c1738d3_67480fef30f9df5f84f31d36%252F685e663bb6f732e1179cbbc9_NH4c.png)

Conclusion

The choice between data lakes and data warehouses depends on an organization's specific needs, data types, and analytical requirements. Many organizations implement both solutions as complementary technologies in their data ecosystem.

Data lakes provide flexibility, cost-effective storage for diverse data types, and support for advanced analytics and machine learning. They are ideal for organizations with large volumes of varied data requiring exploratory analysis and deep insights.

Data warehouses offer optimized performance for structured data queries, reliable reporting, and business intelligence applications. They remain the preferred solution for organizations needing consistent, high-quality data for decision-making.

Modern approaches like data lakehouse are blurring the lines between these technologies, allowing organizations to leverage the strengths of both. As data volumes continue to grow and real-time processing becomes increasingly important, integration with streaming data platforms will be critical for maintaining data consistency across all storage systems.

[1][5][10][13][16][17][19]

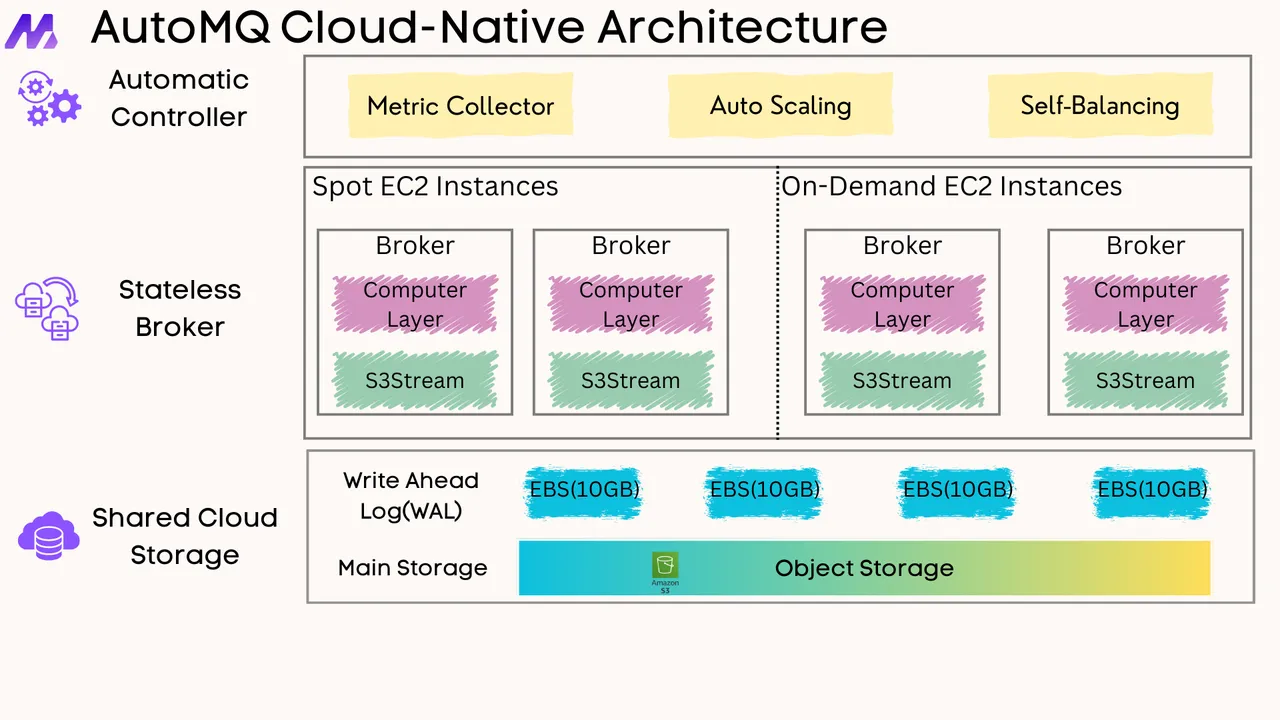

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

References

Best Practices for Building a Modern Data Lake with Amazon S3

SQL Over Kafka: Transforming Real-Time Data Into Instant Insights

Unlocking AI's Full Potential: Gartner D&A Summit 2025 Insights

Building Data Pipelines for Supply Chain Management with Amazon Redshift

Difference Between Data Warehouse And Data Mart | Talent500 blog

.png)