Introduction

Apache Kafka is a distributed streaming platform widely used for building real-time data pipelines and streaming applications. Deploying Kafka on Kubernetes can provide significant advantages in scalability, flexibility, and resource management. This article explores the top three methods for deploying Kafka on Kubernetes, describes the challenges associated with them, and introduces AutoMQ as a Kubernetes-native, stateless alternative that simplifies deployment and scaling. It closes with a recommendation to try AutoMQ on AWS EKS.

Top 3 Methods to Deploy Kafka on Kubernetes

Strimzi

Strimzi is an open-source project that provides a way to run Apache Kafka on Kubernetes in various deployment configurations. It includes a Kafka Operator that simplifies the deployment, management, and scaling of Kafka clusters on Kubernetes.

Features:

Helm Charts and Operators: Strimzi provides Helm charts and Kubernetes Operators for easy deployment and management of Kafka clusters.

Automated Operations: Strimzi automates many Kafka operations, including rolling updates, upgrades, and scaling.

Monitoring and Alerting: Strimzi integrates with Prometheus and Grafana for monitoring Kafka clusters and setting up alerts.

Security: Strimzi supports TLS encryption, authentication using SASL, and authorization using ACLs.

Pros:

Comprehensive automation of Kafka operations.

Strong community support and regular updates.

Built-in monitoring and security features.

Cons:

Operational Complexity: Managing and operating a Kubernetes Operator like Strimzi can be complex. It requires a deep understanding of both Kubernetes and Kafka, as well as the Operator's specific configurations and behaviors.

Learning Curve: There is a significant learning curve associated with setting up and managing the Strimzi Operator, which may require additional training and experience.

Resource Management: Ensuring that the Operator has the necessary resources and permissions to function correctly can add to the operational overhead.
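
To make the operator model concrete, here is a minimal sketch of the kind of declarative resource Strimzi's operator reconciles. It builds a `Kafka` custom resource as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts. The cluster name, storage sizes, and listener layout are illustrative assumptions, and the exact schema depends on the Strimzi version. This sketch follows the ZooKeeper-based `v1beta2` layout, while newer releases move broker definitions into separate `KafkaNodePool` resources, so check the documentation for the version you run.

```python
import json


def strimzi_kafka_resource(name: str, brokers: int = 3, storage_size: str = "100Gi") -> dict:
    """Build a Strimzi `Kafka` custom resource as a plain dict (illustrative values)."""
    replication = min(brokers, 3)
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "Kafka",
        "metadata": {"name": name},
        "spec": {
            "kafka": {
                "replicas": brokers,
                "listeners": [
                    # One internal, TLS-encrypted listener for in-cluster clients.
                    {"name": "tls", "port": 9093, "type": "internal", "tls": True},
                ],
                "config": {
                    "default.replication.factor": replication,
                    "offsets.topic.replication.factor": replication,
                    "min.insync.replicas": max(1, replication - 1),
                },
                # Each broker gets its own PersistentVolumeClaim.
                "storage": {"type": "persistent-claim", "size": storage_size, "deleteClaim": False},
            },
            "zookeeper": {
                "replicas": 3,
                "storage": {"type": "persistent-claim", "size": "10Gi", "deleteClaim": False},
            },
            # Optional operators that manage topics and users declaratively.
            "entityOperator": {"topicOperator": {}, "userOperator": {}},
        },
    }


if __name__ == "__main__":
    # "my-cluster" is a hypothetical cluster name.
    print(json.dumps(strimzi_kafka_resource("my-cluster"), indent=2))
```

Even in this small form, the resource shows where the operational complexity comes from: broker count, storage, listeners, and Kafka settings all live in one specification that the operator must keep in sync with the running cluster.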

Bitnami Kafka

Bitnami offers a set of Helm charts for deploying Kafka on Kubernetes. These charts simplify the deployment process and provide a robust and scalable Kafka cluster setup.

Features:

Ease of Deployment: Bitnami's Helm charts make it easy to deploy Kafka clusters with minimal configuration.

Customizability: The Helm charts are highly configurable, allowing users to customize various aspects of the Kafka deployment.

Integration: Bitnami's Kafka charts integrate well with other Bitnami components, such as ZooKeeper and Prometheus.

Security: Bitnami provides options for enabling TLS encryption and configuring authentication mechanisms.

Pros:

Simple and quick deployment process.

Highly customizable Helm charts.

Good integration with other Bitnami components.

Cons:

Less automation for operational tasks compared to Strimzi.

Requires manual intervention for some scaling and maintenance tasks.
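
As a rough illustration of the Helm-based workflow, the sketch below turns a nested dictionary of chart overrides into a `helm install` command line using `--set` flags. The release name, the OCI chart reference, and the value keys (`replicaCount`, `persistence.size`) are assumptions for illustration only. Value names differ between chart versions, so confirm them against the chart's own `values.yaml` before use.

```python
import shlex


def flatten_values(values: dict, prefix: str = "") -> list[str]:
    """Flatten nested overrides into Helm's dotted `key.path=value` form."""
    flags = []
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flags.extend(flatten_values(value, path))
        else:
            if isinstance(value, bool):
                value = str(value).lower()  # Helm expects true/false
            flags.append(f"{path}={value}")
    return flags


def helm_install_command(release: str, chart: str, values: dict, namespace: str = "kafka") -> list[str]:
    """Assemble a `helm install` argument list with one --set flag per override."""
    cmd = ["helm", "install", release, chart, "--namespace", namespace, "--create-namespace"]
    for flag in flatten_values(values):
        cmd += ["--set", flag]
    return cmd


if __name__ == "__main__":
    # Hypothetical overrides; key names must match the chart version in use.
    overrides = {"replicaCount": 3, "persistence": {"size": "20Gi"}}
    # Assumed chart reference; verify it against the chart's documentation.
    chart_ref = "oci://registry-1.docker.io/bitnamicharts/kafka"
    print(shlex.join(helm_install_command("my-kafka", chart_ref, overrides)))
```

For more than a handful of overrides, a values file passed with `-f` is usually easier to review than a long chain of `--set` flags.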

KUDO Kafka

KUDO (Kubernetes Universal Declarative Operator) Kafka is an operator that simplifies the deployment and management of Kafka on Kubernetes. It leverages the KUDO framework to provide a declarative and extensible approach to managing Kafka clusters.

Features:

Declarative Management: KUDO Kafka allows users to manage Kafka clusters declaratively, making it easier to define and maintain cluster configurations.

Simplified Upgrades: KUDO Kafka simplifies the process of upgrading Kafka clusters by handling the necessary steps automatically.

Extensibility: The KUDO framework allows users to extend and customize the operator with custom plans and tasks.

Pros:

Declarative approach simplifies management.

Easy upgrades and scaling operations.

Extensible with custom plans and tasks.

Cons:

Adds a learning curve for the KUDO framework itself.

Less mature than the Strimzi and Bitnami solutions.

Pain Points of Existing Solutions

While the above methods provide robust solutions for deploying Kafka on Kubernetes, they come with several challenges:

Complexity in Scaling

Manual Intervention: Scaling Kafka clusters often requires manual intervention. Newly added brokers do not take over existing partitions automatically, so operators must reassign partitions and confirm that replicas are back in sync.

Resource Management: Efficiently managing resources and ensuring optimal performance during scaling operations can be challenging.
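
The cost of rebalancing can be estimated with a small model. The sketch below assumes a naive round-robin replica placement, which is a simplification and not Kafka's actual assignment algorithm, and counts how many partition replicas would land on a different broker after the cluster grows. Each such replica implies copying that partition's data across the network.

```python
def assign(partitions: int, brokers: int, replication_factor: int) -> dict[int, set[int]]:
    """Naive round-robin placement: partition p goes to brokers p, p+1, ... (mod brokers)."""
    return {
        p: {(p + i) % brokers for i in range(replication_factor)}
        for p in range(partitions)
    }


def replicas_to_move(partitions: int, old_brokers: int, new_brokers: int, replication_factor: int) -> int:
    """Count replicas that must be copied to a broker that did not hold them before."""
    before = assign(partitions, old_brokers, replication_factor)
    after = assign(partitions, new_brokers, replication_factor)
    return sum(len(after[p] - before[p]) for p in range(partitions))


if __name__ == "__main__":
    # Illustrative numbers: 12 partitions, replication factor 2, growing from 3 to 4 brokers.
    moved = replicas_to_move(partitions=12, old_brokers=3, new_brokers=4, replication_factor=2)
    print(f"{moved} of {12 * 2} replicas would need to be copied")
```

Real reassignment tools try to minimize movement, but the underlying point holds: when data is tied to brokers, changing the broker count means moving data.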

Operational Overhead

Configuration Management: Managing configurations for Kafka brokers, ZooKeeper nodes, and other components can be complex.

Maintenance: Regular maintenance tasks, such as rolling updates and upgrades, require careful planning and execution to avoid downtime.

Performance and Latency

Network Latency: Ensuring low-latency communication between Kafka brokers and clients can be difficult, especially in multi-zone or multi-region deployments.

Resource Contention: Kafka's performance can be impacted by resource contention on shared Kubernetes nodes.
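
One common way to limit contention is to give each broker pod guaranteed resources and keep brokers off the same node. The sketch below builds the two relevant fragments of a pod specification as Python dictionaries: container `resources` with requests equal to limits (which places the pod in the Guaranteed QoS class when it holds for every container), and a pod anti-affinity rule keyed on the node hostname. The `app` label value and the CPU and memory figures are assumptions for illustration.

```python
import json


def broker_pod_overrides(app_label: str, cpu: str = "4", memory: str = "16Gi") -> dict:
    """Return container resources and pod affinity fragments for a broker pod."""
    # Requests equal to limits for both CPU and memory.
    resources = {
        "requests": {"cpu": cpu, "memory": memory},
        "limits": {"cpu": cpu, "memory": memory},
    }
    # Refuse to schedule two pods with the same app label on one node.
    affinity = {
        "podAntiAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {"app": app_label}},
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }
    # `resources` belongs on the container; `affinity` belongs on the pod spec.
    return {"resources": resources, "affinity": affinity}


if __name__ == "__main__":
    # "kafka-broker" is a hypothetical label value.
    print(json.dumps(broker_pod_overrides("kafka-broker"), indent=2))
```

These settings reduce noisy-neighbor effects but also reduce scheduling flexibility, since each broker then needs a node of its own with enough free capacity.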

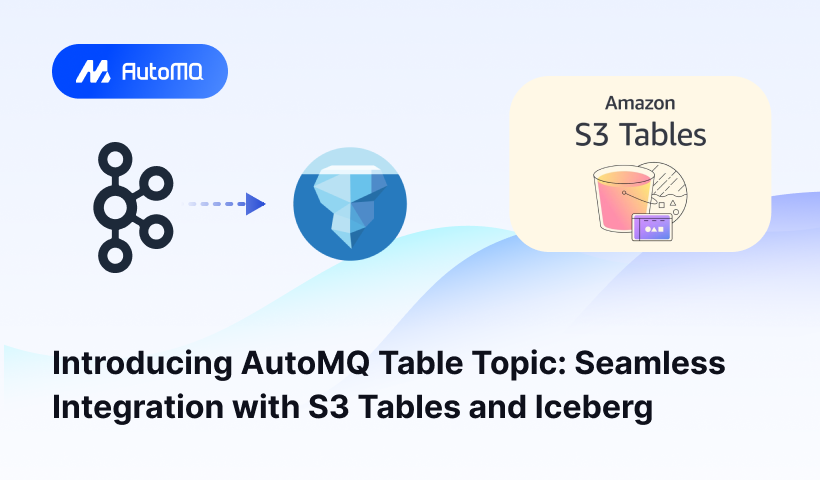

Introducing AutoMQ: A Stateless, Kubernetes-Native Architecture

AutoMQ is designed to address the pain points of traditional Kafka deployments on Kubernetes by leveraging a completely stateless architecture. This section explains why AutoMQ is a Kubernetes-native solution and how it simplifies deployment and scaling.

Stateless Architecture

AutoMQ eliminates the need for persistent state on individual nodes, making the cluster easier to manage and scale. Each AutoMQ node can be scaled independently without worrying about data consistency or partition rebalancing.

Kubernetes-Native Design

AutoMQ is built with Kubernetes in mind, ensuring seamless integration with Kubernetes features and workflows:

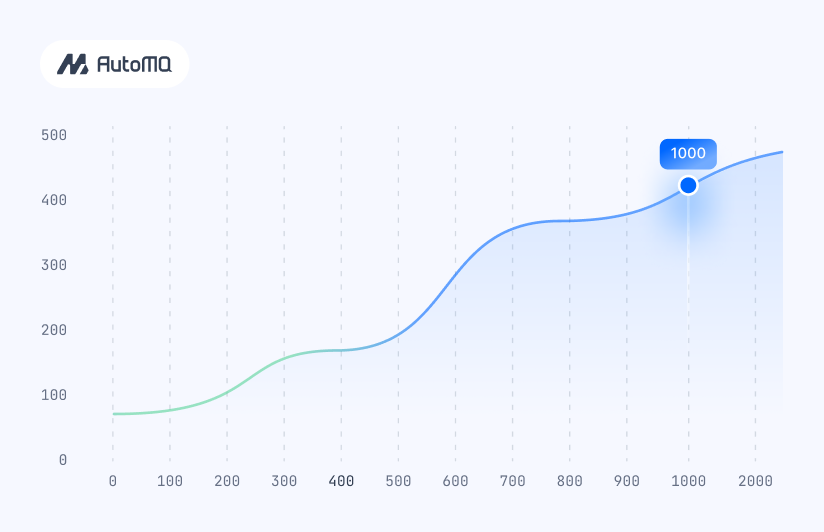

Auto-Scaling: AutoMQ can leverage Kubernetes' native auto-scaling capabilities to dynamically adjust the number of nodes based on load.

Simplified Configuration: Configuration management is simplified with Kubernetes ConfigMaps and Secrets.

Rapid Scaling: Nodes can be scaled in or out within seconds, so the cluster can respond quickly to changing workloads.
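
To show what "adjusting the number of nodes based on load" means in practice, the sketch below reproduces the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up, and left unchanged when the ratio is within a tolerance (0.1 by default). The minimum and maximum bounds and the sample numbers are assumptions, and this is a simplified model that ignores details such as pod readiness and stabilization windows.

```python
import math


def desired_replicas(
    current: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: no scaling
    return max(min_replicas, min(max_replicas, math.ceil(current * ratio)))


if __name__ == "__main__":
    # 3 pods at 90% average CPU against a 60% target.
    print(desired_replicas(current=3, current_metric=90, target_metric=60))
    # 4 pods at 30% average CPU against a 60% target.
    print(desired_replicas(current=4, current_metric=30, target_metric=60))
```

With stateful brokers, acting on this number also triggers data movement. With stateless nodes, the computed replica count can be applied directly.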

Trying AutoMQ on AWS EKS

AutoMQ on AWS EKS (Elastic Kubernetes Service) provides a powerful and flexible platform for running your streaming applications. AWS EKS offers a fully managed Kubernetes service, making it easy to deploy and manage AutoMQ clusters.

Steps to Deploy AutoMQ on AWS EKS

Create an EKS Cluster: Use the AWS Management Console or AWS CLI to create an EKS cluster.

Install AutoMQ: Deploy AutoMQ using Helm charts or Kubernetes manifests provided by AutoMQ.

Configure Auto-Scaling: Set up a Kubernetes Horizontal Pod Autoscaler (HPA) to automatically scale AutoMQ nodes based on load.

Monitor and Manage: Use Amazon CloudWatch and Kubernetes-native tools to monitor and manage your AutoMQ deployment.
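
The auto-scaling step above can be sketched as a standard `autoscaling/v2` HorizontalPodAutoscaler manifest. The example below builds it as a Python dictionary and prints JSON suitable for `kubectl apply -f -`. The workload name `automq-broker`, its kind, the replica bounds, and the 70% CPU target are all hypothetical. The actual resource names and recommended scaling metrics come from the AutoMQ deployment you install, so treat this only as the general shape of the configuration.

```python
import json


def hpa_manifest(
    target_name: str,
    target_kind: str = "Deployment",
    min_replicas: int = 3,
    max_replicas: int = 12,
    cpu_target: int = 70,
) -> dict:
    """Build an autoscaling/v2 HPA that scales a workload on average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{target_name}-hpa"},
        "spec": {
            # The workload whose replica count the HPA controls.
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": target_kind, "name": target_name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {"type": "Utilization", "averageUtilization": cpu_target},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # "automq-broker" is a hypothetical workload name.
    print(json.dumps(hpa_manifest("automq-broker"), indent=2))
```

CPU utilization is used here only because it needs no extra metrics pipeline. Throughput-based scaling would require custom or external metrics.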

For more details, see: https://docs.automq.com/automq-cloud/deploy-automq-on-kubernetes/deploy-to-aws-eks

Conclusion

Deploying Kafka on Kubernetes can be challenging, but solutions like AutoMQ offer a simpler, more efficient approach. With its stateless architecture and Kubernetes-native design, AutoMQ provides an easy-to-deploy, scalable, and high-performance alternative to traditional Kafka deployments. We recommend trying AutoMQ on AWS EKS to experience the benefits firsthand.
