Overview

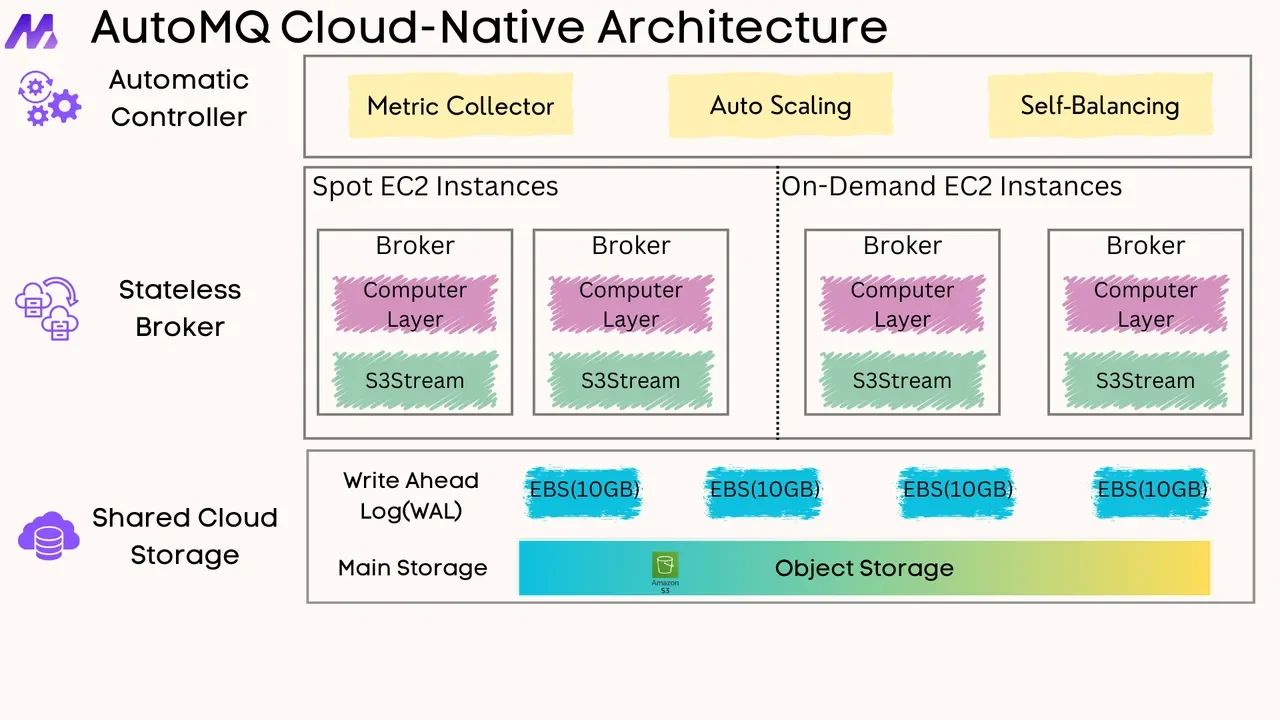

The world runs on real-time data. From instant fraud detection and personalized recommendations to live dashboards monitoring IoT devices, the ability to process continuous streams of information as they arrive is no longer a luxury but a necessity. This is the realm of stream processing. However, when designing a stream processing architecture, a fundamental decision revolves around where the data and application state primarily reside during computation: in-memory or on disk. Each approach presents distinct advantages, trade-offs, and ideal use cases. This blog post delves into a comprehensive comparison of in-memory and disk-based stream processing, exploring their concepts, architectures, performance characteristics, and best practices to help you choose the right engine for your needs.

Understanding Stream Processing Fundamentals

Before diving into the specifics of in-memory and disk-based approaches, let's briefly touch upon what stream processing entails. Stream processing is a paradigm that deals with unbounded data: data that is continuous and has no defined end. Unlike batch processing, which collects and processes data in discrete chunks, stream processing systems ingest, analyze, and act on data in real time or near real time, typically within milliseconds or seconds.

A typical stream processing architecture involves several key components: a data ingestion layer to collect data from various sources, a stream processing engine to perform computations, a state management mechanism to store and retrieve data needed across events, and an output sink to deliver processed results or trigger actions. The choice between in-memory and disk-based processing primarily impacts how the processing engine and state management components operate.
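To make the four components concrete, here is a minimal single-process sketch in Python. It is not modeled on any particular engine; the `(key, value)` event shape, the function names, and the sensor readings are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Event = Tuple[str, int]  # (key, value); hypothetical event shape


def ingest(source: Iterable[Event]) -> Iterator[Event]:
    """Ingestion layer: hands events over one at a time, as they 'arrive'."""
    for event in source:
        yield event


def process(events: Iterable[Event], state: Dict[str, int]) -> Iterator[Event]:
    """Processing engine: keeps a running sum per key in `state`."""
    for key, value in events:
        state[key] += value       # state persists across events
        yield key, state[key]     # emit the updated result immediately


def sink(results: Iterable[Event]) -> List[Event]:
    """Output sink: here it simply collects the results into a list."""
    return list(results)


state: Dict[str, int] = defaultdict(int)   # state management (in RAM here)
readings = [("sensor-a", 3), ("sensor-b", 5), ("sensor-a", 4)]
output = sink(process(ingest(readings), state))
print(output)  # [('sensor-a', 3), ('sensor-b', 5), ('sensor-a', 7)]
```

In this sketch the `state` dictionary lives in RAM. Swapping it for a store backed by disk is exactly the design decision the rest of this post compares.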

![Data Stream Processing [50]](/blog/in-memory-stream-processing-vs-disk-based-stream-processing-/1.png)

In-Memory Stream Processing: The Need for Speed

In-memory stream processing, as the name suggests, performs computations and manages application state predominantly within the Random Access Memory (RAM) of the processing nodes. The core idea is to eliminate the latency associated with reading and writing data to slower disk drives, thereby achieving very high throughput and low-latency processing.

How It Works and Architecture

In-memory processing systems often use distributed architectures in which multiple machines pool their RAM to form a large, fast storage and computation layer. In-Memory Data Grids (IMDGs) such as Hazelcast play a crucial role in managing this distributed memory, distributing data, and parallelizing processing tasks across the cluster. Data is loaded into RAM, and computations are performed directly on this in-memory data. Stream processing engines like Hazelcast Jet are built on this principle and designed for high-performance, low-latency stateful computations. Apache Flink and Apache Spark Streaming can also be configured to heavily prioritize in-memory operations for both data and state.
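One way to picture how data is spread across a cluster's pooled RAM is key-based partitioning. The sketch below is a generic illustration, not Hazelcast's actual partitioning scheme; the CRC32 hash, the partition count, and the `account-N` keys are assumptions chosen for the example.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, partitions: int) -> int:
    """Stable partition id for a key (CRC32 is used only for illustration)."""
    return zlib.crc32(key.encode("utf-8")) % partitions


def assign(keys: List[str], partitions: int) -> Dict[int, List[str]]:
    """Group keys by the partition (and therefore the node) that owns them."""
    placement: Dict[int, List[str]] = defaultdict(list)
    for key in keys:
        placement[partition_for(key, partitions)].append(key)
    return dict(placement)


keys = [f"account-{i}" for i in range(8)]
placement = assign(keys, partitions=3)
for partition, owned in sorted(placement.items()):
    print(partition, owned)
```

A stable hash matters here: Python's built-in `hash()` for strings is randomized per process, so two nodes would disagree about which partition owns a key.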

![Server RAM [51]](/blog/in-memory-stream-processing-vs-disk-based-stream-processing-/2.png)

Advantages

The primary advantage of in-memory stream processing is its exceptional performance. Accessing data from RAM is orders of magnitude faster than from disk, leading to significantly lower latency and higher throughput, which is crucial for use cases like algorithmic trading or real-time bidding. This approach can also simplify certain aspects of I/O management by reducing reliance on disk operations for active data sets.

Disadvantages and Challenges

However, speed comes at a price. RAM is considerably more expensive than disk storage, which can make in-memory solutions costly for applications that deal with very large datasets or must maintain vast amounts of state. Furthermore, data stored in RAM is volatile; a system crash or power outage can lead to data loss unless robust fault tolerance mechanisms are in place. These mechanisms often involve replicating data across multiple in-memory nodes or periodically checkpointing state to a durable persistent store, which adds complexity. Managing memory effectively is also a significant challenge. On JVM-based engines, in-memory systems are susceptible to OutOfMemoryErrors (OOMEs) and to performance degradation caused by garbage collection (GC) pauses. This necessitates careful memory tuning, potentially using off-heap memory, and efficient data structure management.
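The checkpointing idea can be sketched in a few lines of Python. This is a simplified, single-node illustration under stated assumptions, not how any specific engine implements it: the `CheckpointedCounter` class, the JSON snapshot format, and the `every` interval are all hypothetical. It also assumes the input can be replayed from a known offset after a crash.

```python
import json
import os
import tempfile
from typing import Dict


class CheckpointedCounter:
    """In-memory per-key counts, periodically snapshotted to a local file."""

    def __init__(self, path: str, every: int) -> None:
        self.path = path
        self.every = every              # checkpoint after this many events
        self.counts: Dict[str, int] = {}
        self.offset = 0                 # number of events applied so far
        if os.path.exists(path):        # recover from the last snapshot
            with open(path) as f:
                snap = json.load(f)
            self.counts, self.offset = snap["counts"], snap["offset"]

    def apply(self, key: str) -> None:
        self.counts[key] = self.counts.get(key, 0) + 1
        self.offset += 1
        if self.offset % self.every == 0:
            self.checkpoint()

    def checkpoint(self) -> None:
        # Write to a temp file, then rename, so a crash mid-write
        # never leaves a half-written snapshot behind.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"counts": self.counts, "offset": self.offset}, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp, self.path)


events = ["a", "b", "a", "c", "a"]
path = os.path.join(tempfile.mkdtemp(), "state.json")

first = CheckpointedCounter(path, every=2)
for key in events:
    first.apply(key)                    # snapshots are taken at offsets 2 and 4

# Simulate a crash: ignore `first` and rebuild from the last snapshot.
recovered = CheckpointedCounter(path, every=2)
for key in events[recovered.offset:]:   # replay only what the snapshot missed
    recovered.apply(key)

print(recovered.counts)  # {'a': 3, 'b': 1, 'c': 1}
```

The checkpoint interval is the trade-off knob: frequent snapshots shorten replay after a failure but add I/O to the hot path.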

Disk-Based Stream Processing: Durability and Scale

Disk-based stream processing does not imply that all operations happen exclusively on disk. Instead, it refers to systems where disk storage plays a more central and enduring role, particularly for managing large application states, persisting message logs, or handling intermediate data when memory is constrained. This often involves a hybrid approach, where memory (such as the OS page cache) is still heavily used for performance, but the primary persistence or overflow mechanism is disk.

![Storage Server [52]](/blog/in-memory-stream-processing-vs-disk-based-stream-processing-/3.png)

How It Works and Architecture

Disk-based strategies manifest in several ways:

- Persistent State Management: For applications with large states that exceed available RAM or require strong durability guarantees, state is stored on disk. Stream processing engines like Apache Flink and Kafka Streams can use embedded databases like RocksDB, which persist data to local disk. Cloud-native solutions like RisingWave might use object stores like Amazon S3 for durable and scalable state management.
- Message Logging: Systems like Apache Kafka and some Kafka-compatible alternatives are inherently disk-based for their primary data storage, the distributed commit log. They write message streams to disk, providing high durability and the ability to replay messages. Performance is maintained by leveraging sequential I/O and the operating system's page cache, which keeps frequently accessed data in RAM.
- Intermediate Data Spilling: Some data processing systems (and stream processors under memory pressure) can "spill" intermediate results of complex operations (like joins or large aggregations) to disk if they don't fit in memory. This allows the system to process datasets larger than available RAM, preventing OOMEs but at the cost of performance.

The architecture typically involves the stream processing engine interacting with disk for these purposes, often with the OS page cache playing a vital role in optimizing read/write performance from disk.
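The message-logging strategy can be illustrated with a toy append-only log in Python. This is a deliberately simplified sketch, not Kafka's on-disk format (which uses segment files, indexes, and batching); the `CommitLog` class, the 4-byte length prefix, and the use of byte positions as offsets are assumptions for the example.

```python
import os
import struct
import tempfile
from typing import Iterator, Tuple


class CommitLog:
    """Append-only log of length-prefixed records in a single file."""

    def __init__(self, path: str) -> None:
        self.path = path
        open(path, "ab").close()            # create the file if it is missing

    def append(self, payload: bytes) -> int:
        """Append one record and return the byte position where it starts."""
        position = os.path.getsize(self.path)
        with open(self.path, "ab") as f:    # sequential write at the tail
            f.write(struct.pack(">I", len(payload)) + payload)
        return position

    def read_from(self, position: int = 0) -> Iterator[Tuple[int, bytes]]:
        """Yield (position, payload) for every record from `position` onward."""
        with open(self.path, "rb") as f:
            f.seek(position)
            while True:
                start = f.tell()
                header = f.read(4)
                if len(header) < 4:         # reached the end of the log
                    return
                (length,) = struct.unpack(">I", header)
                yield start, f.read(length)


log = CommitLog(os.path.join(tempfile.mkdtemp(), "events.log"))
positions = [log.append(m) for m in (b"order-1", b"order-2", b"order-3")]

all_records = [payload for _, payload in log.read_from(0)]
replayed = [payload for _, payload in log.read_from(positions[1])]
print(replayed)  # [b'order-2', b'order-3']
```

Because records are only ever appended and read in order, the access pattern is sequential, which is the property that lets the OS page cache and read-ahead do most of the work.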

Advantages

The most significant advantage is the ability to handle very large data volumes and application states cost-effectively, as disk storage (especially HDDs or cloud object storage) is much cheaper than RAM. This approach offers inherent durability for data and state stored on persistent disks. For operations that might otherwise cause memory exhaustion, spilling intermediate data to disk provides stability and allows processing to complete, albeit more slowly.
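As a rough illustration of spilling, the Python sketch below counts keys while holding only a bounded number of them in memory. When the budget is reached, it writes a sorted run to disk and later merges the runs. The function names, the tab-separated run format, and the `max_keys_in_memory` budget are hypothetical, and keys are assumed not to contain tabs or newlines.

```python
import heapq
import os
import tempfile
from itertools import groupby
from typing import Dict, Iterable, Iterator, List, Tuple


def spill(partial: Dict[str, int], directory: str, run_id: int) -> str:
    """Write one sorted run of 'key<TAB>count' lines and return its path."""
    path = os.path.join(directory, f"run-{run_id}.tsv")
    with open(path, "w") as f:
        for key in sorted(partial):
            f.write(f"{key}\t{partial[key]}\n")
    return path


def read_run(path: str) -> Iterator[Tuple[str, int]]:
    """Stream a run back from disk one line at a time."""
    with open(path) as f:
        for line in f:
            key, count = line.rstrip("\n").split("\t")
            yield key, int(count)


def count_with_spill(
    keys: Iterable[str], max_keys_in_memory: int
) -> Iterator[Tuple[str, int]]:
    """Yield (key, total) in key order, holding at most the budget in RAM."""
    directory = tempfile.mkdtemp()
    runs: List[str] = []
    partial: Dict[str, int] = {}
    for key in keys:
        partial[key] = partial.get(key, 0) + 1
        if len(partial) >= max_keys_in_memory:   # memory budget reached
            runs.append(spill(partial, directory, len(runs)))
            partial = {}
    if partial:
        runs.append(spill(partial, directory, len(runs)))
    # Merge the sorted runs lazily and sum the counts of equal keys.
    merged = heapq.merge(*(read_run(p) for p in runs))
    for key, group in groupby(merged, key=lambda kv: kv[0]):
        yield key, sum(count for _, count in group)


stream = ["b", "a", "b", "c", "a", "c", "b"]
totals = list(count_with_spill(stream, max_keys_in_memory=2))
print(totals)  # [('a', 2), ('b', 3), ('c', 2)]
```

The result is the same as an in-memory count, but every spilled key costs a disk write and a later read, which is where the extra latency comes from.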

Disadvantages and Challenges

The primary drawback is higher latency and lower throughput compared to purely in-memory processing, due to the slower nature of disk I/O. I/O bottlenecks can become a significant performance impediment if not managed properly, requiring careful disk configuration, fast SSDs, and I/O tuning. Although disk-based state is durable, recovering a large state from disk can take longer than rebuilding a small in-memory state.

In-Memory vs. Disk-Based: A Side-by-Side Comparison

| Feature | In-Memory Stream Processing | Disk-Based Stream Processing (Primarily Disk for State/Logs/Spill) |
|---|---|---|
| Performance (Latency) | Very Low | Higher (due to disk I/O, page cache helps) |
| Performance (Throughput) | Very High | Moderate to High (I/O bound, page cache helps) |
| Scalability (Data Volume) | Limited by RAM cost & capacity | High (disk is cheaper for large volumes) |
| Scalability (State Size) | Limited by RAM cost & capacity | High (can store very large states on disk/cloud storage) |
| Fault Tolerance & Durability | Requires replication/checkpointing to disk for durability; state volatile if not persisted. Recovery can be fast if state is small or rebuilt quickly. | State on disk is inherently durable. Logs on disk are durable. Recovery may involve loading from disk. |
| Cost (Hardware) | High (RAM is expensive) | Lower (Disk/SSD/Cloud Storage is cheaper per GB) |
| Cost (Operational) | Can be high due to memory tuning complexity. | Can be high due to I/O tuning, disk management. Cloud storage may reduce operational cost for state. |
| Complexity (Development) | Memory management (GC, OOMs), state persistence logic. | I/O optimization, managing disk resources, large state handling. |
| Complexity (Maintenance) | Tuning JVM/GC, monitoring memory. | Monitoring disk I/O, managing disk space, backup/recovery from disk. |
| Primary Data Location | RAM | RAM (for active processing, page cache) + Disk (for state, logs, spill) |

Common Issues and Mitigation Strategies

Both approaches come with their own set of common issues:

In-Memory Stream Processing

- Issue: OutOfMemoryErrors (OOMEs) and long Garbage Collection (GC) pauses are common, impacting stability and performance.
- Mitigation:
  - JVM Tuning: Optimizing heap size, young/old generation ratios, and GC algorithms (e.g., G1GC, ZGC).
  - Off-Heap Memory: Storing state or large data structures off the JVM heap to reduce GC pressure (e.g., Flink's managed memory, RocksDB's off-heap cache).
  - Efficient Data Structures & Serialization: Using memory-efficient data structures and optimized serialization formats (like Apache Avro or Kryo).
  - State TTL & Pruning: Implementing Time-To-Live policies for state entries to evict old data and keep state size manageable.
  - Capacity Planning: Accurately estimating memory requirements and provisioning resources accordingly.
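Of the mitigations above, state TTL is the easiest to show in code. The following Python sketch is a generic illustration rather than any framework's API; the `TtlState` class and its method names are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
from typing import Dict, Optional, Tuple


class TtlState:
    """Keyed state whose entries expire `ttl_seconds` after their last write."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        # key -> (written_at, value)
        self._entries: Dict[str, Tuple[float, int]] = {}

    def put(self, key: str, value: int, now: float) -> None:
        self._entries[key] = (now, value)

    def get(self, key: str, now: float) -> Optional[int]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        written_at, value = entry
        if now - written_at >= self.ttl:
            del self._entries[key]          # lazy cleanup on read
            return None
        return value

    def prune(self, now: float) -> int:
        """Drop all expired entries and return how many were removed."""
        expired = [k for k, (t, _) in self._entries.items() if now - t >= self.ttl]
        for k in expired:
            del self._entries[k]
        return len(expired)

    def __len__(self) -> int:
        return len(self._entries)


sessions = TtlState(ttl_seconds=60)
sessions.put("user-1", 10, now=0)
sessions.put("user-2", 20, now=45)

fresh = sessions.get("user-2", now=70)   # 25 s old, still live
removed = sessions.prune(now=70)         # user-1 is 70 s old and is dropped
print(fresh, removed, len(sessions))     # 20 1 1
```

Lazy cleanup on read keeps lookups cheap, while a periodic `prune` bounds the memory held by keys that are never read again.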

Disk-Based Stream Processing

- Issue: I/O bottlenecks leading to high latency and reduced throughput.
- Mitigation:
  - Fast Storage: Using SSDs or NVMe drives instead of HDDs for state stores or spill directories.
  - OS Page Cache Optimization: Ensuring sufficient free memory for the OS page cache, especially for systems like Kafka.
  - Data Partitioning & Layout: Optimizing how data is partitioned and laid out on disk to improve access patterns.
  - Asynchronous I/O: Utilizing asynchronous disk operations where possible to avoid blocking processing threads.
  - Compression: Compressing data written to disk can reduce I/O volume at the cost of CPU cycles.
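The compression trade-off is easy to observe with Python's standard `zlib` module. The payload below is a made-up, highly repetitive record, so the ratio it produces is far better than what mixed production data would typically achieve.

```python
import zlib

# Hypothetical payload: repetitive JSON-like events compress very well.
record = b'{"sensor":"sensor-a","status":"ok","reading":21.5}\n'
batch = record * 1000

compressed = zlib.compress(batch, level=6)   # fewer bytes to write, more CPU
restored = zlib.decompress(compressed)

ratio = len(compressed) / len(batch)
print(f"raw={len(batch)} bytes, compressed={len(compressed)} bytes, ratio={ratio:.3f}")
```

Compressing a batch rather than individual records generally gives a better ratio, because the compressor can exploit repetition across records.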

Stateful Stream Processing (General Challenges)

- Issue: Managing large and evolving state, ensuring exactly-once processing semantics, and handling fault tolerance efficiently are persistent challenges.
- Mitigation:
  - Robust Checkpointing: Implementing frequent and efficient checkpointing to durable storage.
  - Choice of State Backend: Selecting a state backend appropriate for the state size and access patterns (e.g., in-memory for small, fast state; RocksDB for large, durable state).
  - Watermarking & Event-Time Processing: Accurately handling out-of-order events to ensure correctness in stateful computations.
  - Scalable State Migration: For dynamic scaling, ensuring state can be repartitioned and migrated efficiently.
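Watermarking is the least intuitive item in this list, so here is a small Python sketch of event-time tumbling windows with a bounded out-of-orderness watermark. It is a simplified model, not a framework API: the `windowed_counts` function, the `(event_time, key)` event shape, and the choice to drop late events are assumptions for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def windowed_counts(
    events: Iterable[Tuple[int, str]],   # (event_time_seconds, key)
    window_size: int,
    max_out_of_orderness: int,
) -> Tuple[List[Tuple[int, Dict[str, int]]], List[Tuple[int, str]]]:
    """Count keys per tumbling event-time window.

    Returns (closed windows in emission order, events dropped as too late).
    """
    open_windows: Dict[int, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    emitted: List[Tuple[int, Dict[str, int]]] = []
    late: List[Tuple[int, str]] = []
    max_seen = None
    watermark = float("-inf")

    for event_time, key in events:
        start = event_time - event_time % window_size
        if start + window_size <= watermark:
            late.append((event_time, key))    # its window has already closed
            continue
        open_windows[start][key] += 1
        max_seen = event_time if max_seen is None else max(max_seen, event_time)
        # Watermark: no event older than this is expected any more.
        watermark = max_seen - max_out_of_orderness
        for s in sorted(w for w in open_windows if w + window_size <= watermark):
            emitted.append((s, dict(open_windows.pop(s))))

    for s in sorted(open_windows):            # end of input: flush the rest
        emitted.append((s, dict(open_windows.pop(s))))
    return emitted, late


events = [(1, "a"), (4, "b"), (12, "a"), (8, "a"), (17, "b"), (3, "a"), (25, "a")]
emitted, late = windowed_counts(events, window_size=10, max_out_of_orderness=5)
print(emitted)  # [(0, {'a': 2, 'b': 1}), (10, {'a': 1, 'b': 1}), (20, {'a': 1})]
print(late)     # [(3, 'a')]
```

The event at time 8 arrives after the event at time 12 but is still counted, because the watermark (7 at that point) has not yet passed the end of its window. The event at time 3 arrives after the watermark reached 12, so its window is already closed.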

Best Practices for Designing Your Stream Processing Solution

Regardless of whether you lean towards in-memory or disk-based approaches, several best practices apply:

- Adopt a Streaming-First Mindset: Design systems with continuous data flow in mind from the outset.
- Handle Time Correctly: Understand the difference between event time and processing time and use watermarks to handle late or out-of-order data accurately.
- Ensure Fault Tolerance and Data Guarantees: Implement mechanisms for checkpointing, replication, and exactly-once semantics where data integrity is critical.
- Design for Scalability: Anticipate data growth and design your pipelines to scale horizontally.
- Monitor Continuously: Implement robust monitoring and alerting to track pipeline health, performance bottlenecks, and resource utilization.
- Choose Appropriate State Management: Select an in-memory, disk-based, or hybrid state solution based on latency, volume, cost, and durability requirements.
- Optimize Data Serialization: Use efficient serialization formats to reduce data size and processing overhead.
- Implement Backpressure Mechanisms: Prevent upstream components from overwhelming downstream systems.
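Backpressure in its simplest form is a bounded buffer between a fast producer and a slow consumer. The Python sketch below uses the standard library's `queue.Queue`; the buffer size, the item count, and the artificial delay are arbitrary values chosen for the example.

```python
import queue
import threading
import time

buffer = queue.Queue(maxsize=2)   # bounded on purpose
DONE = object()                   # end-of-stream marker
consumed = []


def producer(n: int) -> None:
    for i in range(n):
        buffer.put(i)             # blocks while the buffer is full
    buffer.put(DONE)


def consumer() -> None:
    while True:
        item = buffer.get()
        if item is DONE:
            return
        time.sleep(0.01)          # simulate a slow downstream system
        consumed.append(item)


threads = [
    threading.Thread(target=producer, args=(10,)),
    threading.Thread(target=consumer),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(consumed)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The blocking `put` is the backpressure signal: the producer is slowed to the consumer's pace instead of growing an unbounded in-memory backlog.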

Conclusion: Finding the Right Balance

The choice between in-memory and disk-based stream processing is not a simple dichotomy but a spectrum. In-memory processing offers unparalleled speed and low latency, making it ideal for applications where every millisecond counts and state sizes are manageable within RAM budgets. Disk-based approaches, including those leveraging persistent state stores on disk or intelligent log management with OS page caching, provide scalability for massive data volumes and state, enhanced durability, and often better cost-efficiency for large-scale deployments, albeit with generally higher latency.

Modern stream processing frameworks like Apache Flink, Spark Streaming, and Kafka Streams increasingly offer hybrid models, allowing developers to make fine-grained decisions about where data and state reside. The trend is towards systems that can intelligently use memory for performance-critical operations while leveraging disk (or cloud storage) for durability, large-scale state, and cost optimization. As technologies like AI/ML become more integrated with stream processing and use cases at the edge expand, the demand for flexible, scalable, and efficient stream processing architectures that can smartly balance memory and disk usage will only continue to grow. Ultimately, understanding the specific requirements of your use case (latency, throughput, data volume, state size, fault tolerance, and budget) will guide you to the optimal stream processing strategy.
