Overview

Apache Kafka has become a cornerstone for handling real-time data streams, powering everything from event-driven architectures to large-scale data pipelines. As organizations increasingly rely on cloud storage for its scalability and cost-effectiveness, a common requirement is to move data from Kafka topics into Azure Blob Storage. Microsoft Azure's durable object store is well suited to archiving, data lake implementations, and feeding downstream analytics.

This blog post explores the primary methods for integrating Kafka with Azure Blob Storage, discussing their architectures, common use cases, and key considerations.

Core Concepts: Kafka and Azure Blob Storage

Before diving into integration methods, let's briefly revisit the core components.

Apache Kafka

Apache Kafka is a distributed streaming platform that enables applications to publish, subscribe to, store, and process streams of records in a fault-tolerant way. Key Kafka concepts include:

- Topics: Categories or feed names to which records are published.
- Brokers: Kafka servers that store data and serve client requests.
- Producers: Applications that publish streams of records to Kafka topics.
- Consumers: Applications that subscribe to topics and process the streams of records.
- Partitions: Topics are divided into partitions, allowing for parallel processing and scalability.
- Kafka Connect: A framework for reliably streaming data between Apache Kafka and other systems.

![Apache Kafka Architecture [15]](/blog/kafka-azure-blob-storage-streaming-integration-guide/1.png)

Azure Blob Storage

Azure Blob Storage is Microsoft's object storage solution for the cloud. It's optimized for storing massive amounts of unstructured data, such as text or binary data. Key features include:

- Storage Accounts and Containers: Blobs are stored in containers, which are organized within a storage account.
- Blob Types: Block blobs (for text/binary data), Append blobs (for append operations), and Page blobs (for random read/write operations, often used for IaaS disks). Block blobs are typically used for Kafka data.
- Storage Tiers: Hot, Cool, and Archive tiers allow for cost optimization based on data access frequency.
- Durability and Availability: Offers high durability and availability options.
- Data Lake Storage: Azure Data Lake Storage Gen2 is built on Azure Blob Storage, providing a scalable and cost-effective data lake solution with hierarchical namespace capabilities.

![Azure Blob Storage Structure [16]](/blog/kafka-azure-blob-storage-streaming-integration-guide/2.png)

Primary Integration Methods

There are several ways to get your Kafka topic data into Azure Blob Storage. The most common and robust methods involve using Kafka Connect sink connectors, leveraging Azure-native streaming services, or employing Kafka's built-in tiered storage capabilities.

Kafka Connect with Dedicated Azure Blob Storage Sink Connectors

Kafka Connect is a powerful framework for building and running reusable connectors that stream data in and out of Kafka. Several sink connectors are specifically designed to write data from Kafka topics to Azure Blob Storage. These connectors typically run as part of a Kafka Connect cluster and handle the complexities of data formatting, partitioning in Blob Storage, and ensuring delivery guarantees.

How it Works:

- The sink connector subscribes to specified Kafka topics.
- It consumes messages from these topics in batches.
- It converts messages into a desired output format (e.g., Avro, Parquet, JSON).
- It writes these messages as objects (blobs) into a designated Azure Blob Storage container.
- Connectors manage file rotation based on criteria like message count, time duration, or file size.
- They also handle partitioning of data within Blob Storage, often based on Kafka topic, partition, and message timestamps, allowing for efficient querying by downstream systems.
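
The steps above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the implementation of any real connector: the class `BlobSinkSketch`, its `flush_size` parameter, and the `topic+partition+startOffset` naming scheme are all illustrative, and an in-memory dict stands in for the Blob Storage container. It shows count-based rotation and how a deterministic blob name lets a replayed batch overwrite its earlier copy instead of duplicating it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    topic: str
    partition: int
    offset: int
    timestamp_ms: int
    value: str


@dataclass
class BlobSinkSketch:
    """Toy sink task: buffer records, rotate by count, name blobs deterministically."""
    flush_size: int                                # hypothetical: records per blob
    written: dict = field(default_factory=dict)    # blob name -> payload (fake container)
    _buffers: dict = field(default_factory=dict)   # (topic, partition) -> pending records

    def put(self, record: Record) -> None:
        key = (record.topic, record.partition)
        buf = self._buffers.setdefault(key, [])
        buf.append(record)
        if len(buf) >= self.flush_size:
            self._flush(key)

    def _flush(self, key) -> None:
        buf = self._buffers.pop(key)
        first = buf[0]
        # Time-based path from the first record's timestamp (UTC).
        ts = datetime.fromtimestamp(first.timestamp_ms / 1000, tz=timezone.utc)
        name = (
            f"{first.topic}/{ts:%Y/%m/%d/%H}/"
            f"{first.topic}+{first.partition}+{first.offset:010d}.json"
        )
        # The same input always maps to the same name, so a replay overwrites.
        self.written[name] = "\n".join(r.value for r in buf)


# Usage: four records with flush_size=2 produce two blobs.
ts_ms = int(datetime(2024, 1, 2, 3, 0, tzinfo=timezone.utc).timestamp() * 1000)
sink = BlobSinkSketch(flush_size=2)
for off in range(4):
    sink.put(Record("orders", 0, off, ts_ms, f'{{"id": {off}}}'))
print(sorted(sink.written))
```

Real connectors also rotate on elapsed time or file size and commit offsets only after a successful write; those parts are left out here to keep the sketch short.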

Key Features and Considerations:

- Data Formats: Support for various formats like Avro, Parquet, JSON, and raw bytes is common. Avro and Parquet are popular for data lake scenarios due to their schema support and compression efficiency.
- Partitioning: Connectors offer strategies to partition data in Blob Storage (e.g., `/{topic}/{date}/{time}/`, `/{topic}/{kafka_partition}/`). This is crucial for organizing data and optimizing query performance.
- Exactly-Once Semantics (EOS): Many dedicated connectors offer EOS, ensuring that each Kafka message is written to Blob Storage exactly once, even in the event of failures, provided configurations are set correctly (e.g., using deterministic partitioners).
- Schema Management: Integration with a Schema Registry is often supported, especially for formats like Avro and Parquet. This allows for schema evolution and validation.
- Error Handling & Dead Letter Queues (DLQ): Robust error handling, including retries and the ability to route problematic messages to a DLQ, is a standard feature.
- Configuration: Connectors come with a rich set of configuration options to control flush size, rotation intervals, authentication with Azure (e.g., connection strings, Managed Identities), compression (e.g., Gzip, Snappy), and more.
- Management: Kafka Connect clusters require management, including deployment, monitoring, and scaling of connector tasks.

Open-source and commercially supported versions of Azure Blob Storage sink connectors are available. For instance, Coffeebeans Labs offers an open-source Kafka Connect Azure Blob Storage connector.
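
To make the configuration surface more concrete, here is a hedged sketch of the JSON body you might submit to the Kafka Connect REST API (`POST /connectors`). The framework-level keys (`connector.class`, `tasks.max`, `topics`, and the `errors.*` dead letter queue settings) are standard Kafka Connect options. Everything prefixed with `example.` and the class name `com.example.AzureBlobSinkConnector` are placeholders invented for illustration; each real connector defines its own property names, so check its documentation before use.

```python
import json


def build_sink_connector_payload(name: str, topics: list[str], dlq_topic: str) -> dict:
    """Build the JSON body for Kafka Connect's POST /connectors endpoint."""
    config = {
        # Framework-level Kafka Connect settings.
        "connector.class": "com.example.AzureBlobSinkConnector",  # hypothetical class
        "tasks.max": "2",
        "topics": ",".join(topics),
        # Route records that fail conversion or transformation to a DLQ topic.
        "errors.tolerance": "all",
        "errors.deadletterqueue.topic.name": dlq_topic,
        "errors.deadletterqueue.context.headers.enable": "true",
        # Connector-specific settings: these key names are placeholders only.
        "example.container.name": "kafka-archive",
        "example.format": "parquet",
        "example.compression": "snappy",
        "example.flush.size": "10000",
        "example.rotate.interval.ms": "600000",
    }
    return {"name": name, "config": config}


payload = build_sink_connector_payload(
    "blob-sink", ["orders", "payments"], "blob-sink-dlq"
)
print(json.dumps(payload, indent=2))
```

The printed JSON could then be sent to a Connect worker, for example at `http://<connect-host>:8083/connectors` if the worker uses the default REST port. Credentials are deliberately absent from the sketch; they belong in a secret store or a Managed Identity rather than in the payload.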

Azure Stream Analytics (ASA)

Azure Stream Analytics is a real-time analytics and complex event-processing service that allows you to analyze and process high volumes of fast streaming data from multiple sources simultaneously. ASA can ingest data from Kafka via Azure Event Hubs and output it to Azure Blob Storage.

How it Works:

- Kafka to Event Hubs: Azure Event Hubs can expose a Kafka-compatible endpoint, allowing Kafka producers to send messages to an Event Hub as if it were a Kafka cluster. Alternatively, Kafka Connect or other tools can stream Kafka topic data into Event Hubs.
- ASA Job: An Azure Stream Analytics job is configured with Event Hubs as an input source and Azure Blob Storage as an output sink.
- Querying and Transformation: ASA uses a SQL-like query language to process incoming events. This allows for filtering, transformation, and aggregation of data before it's written to Blob Storage.
- Output to Blob: ASA writes the processed data to Azure Blob Storage, supporting formats like JSON, CSV, Avro, Parquet, and Delta Lake.
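
For the first step, pointing an existing Kafka producer at the Event Hubs Kafka endpoint is mostly a matter of client configuration. The sketch below builds a librdkafka-style configuration dictionary (the key format used by clients such as `confluent-kafka`), assuming SASL/PLAIN authentication with a namespace connection string. The helper name `event_hubs_kafka_config` and the namespace `my-namespace` are placeholders, and the connection string shown is a dummy value.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Return Kafka client settings for an Event Hubs Kafka-compatible endpoint."""
    return {
        # Event Hubs serves the Kafka protocol on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal string "$ConnectionString".
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Dummy values for illustration; load the real secret from a vault or env var.
config = event_hubs_kafka_config(
    "my-namespace",
    "Endpoint=sb://my-namespace.servicebus.windows.net/;SharedAccessKeyName=...",
)
print(config["bootstrap.servers"])
```

With this in place, the topic name used by the producer corresponds to the Event Hub name, and the ASA job reads from that Event Hub as its input.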

Key Features and Considerations:

- Serverless Nature: ASA is a fully managed PaaS offering, reducing operational overhead associated with managing a Kafka Connect cluster.
- Real-time Processing & Transformation: ASA excels at performing transformations, aggregations, and windowing operations on streaming data before it lands in Blob Storage.
- Integration with Azure Ecosystem: Seamless integration with other Azure services, including Azure Event Hubs for ingestion and Azure Schema Registry for handling schemas (primarily for Avro, JSON Schema, and Protobuf on input from Event Hubs).
- Data Formats & Partitioning: Supports multiple output formats and allows for dynamic path partitioning in Blob Storage using date/time tokens or custom fields from the event data (e.g., `/{field_name}/{date}/{time}/`).
- Exactly-Once Semantics: ASA provides exactly-once processing guarantees under specific conditions for its Blob Storage output, particularly when using path patterns with date and time and specific write modes.
- Scalability: ASA jobs can be scaled by adjusting Streaming Units (SUs).
- Cost Model: Pricing is driven primarily by the number of Streaming Units (SUs) provisioned and how long the job runs.
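
To illustrate the kind of windowed aggregation mentioned above, the following Python sketch counts events per key in fixed, non-overlapping (tumbling) windows. In ASA you would express this in its SQL-like query language rather than in Python; the function `tumbling_window_counts` is purely illustrative, and its boundary convention (window start inclusive, end exclusive) is an assumption of this sketch that may differ from how ASA assigns events on a window edge.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (key, window start) using fixed, non-overlapping windows.

    `events` is an iterable of (key, timestamp_in_seconds) pairs.
    """
    counts = Counter()
    for key, ts in events:
        # Align the timestamp down to the start of its window.
        window_start = ts - (ts % window_seconds)
        counts[(key, window_start)] += 1
    return dict(counts)


events = [("sensor-a", 0), ("sensor-a", 59), ("sensor-a", 60), ("sensor-b", 61)]
result = tumbling_window_counts(events, window_seconds=60)
print(result)
```

Each resulting `(key, window_start)` pair corresponds to one aggregated row that a streaming job would write to Blob Storage, which is typically far less data than the raw event stream.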

Tiered Storage for Kafka

Tiered storage is a feature in some Kafka distributions that allows Kafka brokers to offload older log segments from local broker storage to a more cost-effective remote object store, such as Azure Blob Storage. This isn't strictly an "export" pipeline in the same way as Kafka Connect or ASA; rather, it extends Kafka's own storage capacity.

How it Works:

- Kafka brokers are configured to use Azure Blob Storage as a remote tier.
- As data in Kafka topics ages (based on time or size retention policies on the broker), segments that are no longer actively written to are moved from the local broker disks to Azure Blob Storage.
- These segments remain part of the Kafka topic's log and can still be consumed by Kafka clients, though with potentially higher latency for data fetched from the remote tier.
- The Kafka brokers manage the metadata and access to these tiered segments.
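
The lifecycle described above can be modeled with a small Python sketch. It is a simplification under stated assumptions, not broker code: the parameters `local_retention_ms` and `retention_ms` are named after the topic settings `local.retention.ms` and `retention.ms` used by Apache Kafka's tiered storage, only time-based retention is modeled, and the exact moment a segment is uploaded varies by implementation.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    base_offset: int
    age_ms: int           # time since the segment was closed
    active: bool = False  # True for the segment currently being written


def segment_location(seg: Segment, local_retention_ms: int, retention_ms: int) -> str:
    """Classify where a log segment lives in this simplified tiering model."""
    if seg.active:
        return "local"    # the active segment always stays on the broker
    if seg.age_ms >= retention_ms:
        return "deleted"  # past total retention: gone from both tiers
    if seg.age_ms >= local_retention_ms:
        return "remote"   # only the object store still holds it
    # Young closed segment (a remote copy may already exist, depending on
    # the implementation; the model ignores that detail).
    return "local"


HOUR = 3_600_000
segments = [
    Segment(0, age_ms=800 * HOUR),
    Segment(1000, age_ms=48 * HOUR),
    Segment(2000, age_ms=1 * HOUR),
    Segment(3000, age_ms=0, active=True),
]
locations = [
    segment_location(s, local_retention_ms=24 * HOUR, retention_ms=720 * HOUR)
    for s in segments
]
print(locations)
```

A consumer reading from offset 1000 in this example would be served from Blob Storage, which is where the higher fetch latency mentioned above comes from.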

Key Features and Considerations:

- Infinite Retention (Effectively): Allows for virtually limitless storage of Kafka topic data, constrained only by Blob Storage capacity and cost.
- Cost Reduction: Moves older, less frequently accessed data to cheaper object storage.
- Transparent to Consumers (Mostly): Consumers can read historical data without knowing it's served from Blob Storage, though performance characteristics might differ.
- Broker-Level Feature: This is a capability built into the Kafka broker software itself (or its distribution) and is not a separate ETL process.
- Use Cases: Primarily for long-term retention and replayability of Kafka data, rather than for feeding data lakes where data needs to be in specific queryable formats (like Parquet) immediately. The data in Blob Storage remains in Kafka's internal log segment format.
- Specific Implementations: The exact setup and capabilities depend on the Kafka distribution offering this feature.

Comparing the Approaches

| Feature | Kafka Connect Sink | Azure Stream Analytics (via Event Hubs) | Tiered Storage |
|---|---|---|---|
| Primary Use Case | Exporting/archiving to Blob, data lake feeding | Real-time processing & Blob archiving | Extending Kafka broker storage, long retention |
| Data Transformation | Limited (SMTs); primarily format conversion | Rich (SQL-like queries) | None (data remains in Kafka log format) |
| Output Formats | Avro, Parquet, JSON, Bytes, etc. | JSON, CSV, Avro, Parquet, Delta Lake | Kafka log segment format |
| Schema Management | Good (Schema Registry integration) | Good for input (Azure Schema Registry) | N/A (internal Kafka format) |
| Exactly-Once | Yes (with proper configuration) | Yes (under specific conditions) | N/A (within Kafka's own replication) |
| Management Overhead | Moderate (Kafka Connect cluster) | Low (PaaS service) | Low to Moderate (Broker configuration) |
| Latency to Blob | Near real-time (batch-based) | Near real-time (streaming) | N/A (data ages into tier) |
| Cost Model | Connector infra, Blob storage | ASA SUs, Event Hubs, Blob storage | Broker infra, Blob storage (for tiered data) |
| Azure Native | No (runs on VMs/containers) | Yes | No (Kafka feature, uses Azure as backend) |

Key Considerations and Best Practices

When integrating Kafka with Azure Blob Storage, consider the following:

- Data Format Selection:
  - Avro/Parquet: Recommended for data lake scenarios. They are compact, support schema evolution, and are well-suited for analytics. Parquet is columnar and excellent for query performance with tools like Azure Synapse Analytics or Azure Databricks.
  - JSON: Useful for human readability or when schemas are highly dynamic, but less efficient for storage and querying large datasets.
  - Compression: Always use compression (e.g., Snappy, Gzip) to reduce storage costs and improve I/O. Snappy offers a good balance between compression ratio and CPU overhead.
- Partitioning Strategy in Blob Storage:
  - Plan your directory structure in Blob Storage carefully. Partitioning by date (e.g., `yyyy/MM/dd/HH/`) is common and helps with time-based queries and data lifecycle management.
  - Partitioning by a key field from your data can also optimize queries if that field is frequently used in filters.
  - Avoid too many small files, as this can degrade query performance. Aim for reasonably sized files (e.g., 128MB - 1GB). Kafka Connect sink connectors often have settings to control file size/rotation.
- Schema Management:
  - Use a Schema Registry (like Azure Schema Registry with Event Hubs/ASA, or a separate one with Kafka Connect) to manage Avro or Parquet schemas. This ensures data consistency and facilitates schema evolution.
- Security:
  - Authentication: Use Managed Identities for Azure resources (Kafka Connect running on Azure VMs/AKS, ASA) to securely access Azure Blob Storage and Event Hubs. Alternatively, use SAS tokens or account keys, but manage them securely using Azure Key Vault.
  - Network Security: Use Private Endpoints for Azure Blob Storage and Event Hubs to ensure traffic stays within your virtual network.
  - Encryption: Data is encrypted at rest by default in Azure Blob Storage. Ensure encryption in transit (TLS/SSL) for Kafka communication and access to Azure services.
- Exactly-Once Semantics (EOS):
  - Achieving EOS is critical for data integrity. Understand the EOS capabilities and configuration requirements of your chosen method (Kafka Connect connector or ASA). This often involves idempotent producers, transactional commits, or deterministic partitioning.
- Error Handling and Monitoring:
  - Implement robust error handling, including retries and Dead Letter Queues (DLQs) for messages that cannot be processed or written.
  - Monitor your pipeline for throughput, latency, errors, and resource utilization. Kafka Connect metrics, Azure Monitor for ASA, and Event Hubs metrics are essential.
- Cost Optimization:
  - Choose appropriate Azure Blob Storage tiers (Hot, Cool, Archive) based on access patterns. Use lifecycle management policies to automatically move data to cooler tiers or delete it.
  - Optimize Kafka Connect resource allocation or ASA Streaming Units to match your workload.
  - Consider data compression and efficient data formats to reduce storage and egress costs.
- Azure Data Factory (ADF): While ADF doesn't offer a direct built-in Kafka source connector to Azure Blob Storage sink, it can be used to orchestrate Kafka Connect jobs (via its REST API) or to process data once it has landed in Blob Storage.
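
As an example of the lifecycle management policies mentioned under cost optimization, the sketch below builds a policy document in the JSON shape used by Azure Storage lifecycle management rules. The helper `lifecycle_policy`, the rule name `tier-kafka-archive`, the prefix `kafka-archive/orders/`, and the day thresholds are all illustrative assumptions; pick values that match your own access patterns and retention requirements.

```python
import json


def lifecycle_policy(prefix: str, cool_days: int, archive_days: int, delete_days: int) -> dict:
    """Build a lifecycle policy that tiers, then deletes, block blobs under a prefix."""
    if not cool_days < archive_days < delete_days:
        raise ValueError("expected cool_days < archive_days < delete_days")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-kafka-archive",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    # Apply only to block blobs under "<container>/<path prefix>".
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_days},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_days},
                            "delete": {"daysAfterModificationGreaterThan": delete_days},
                        }
                    },
                },
            }
        ]
    }


policy = lifecycle_policy("kafka-archive/orders/", cool_days=30, archive_days=90, delete_days=365)
print(json.dumps(policy, indent=2))
```

The resulting JSON can be applied to the storage account through the portal, CLI, or an infrastructure-as-code template. Because the rule keys off the blob prefix, a date-based directory layout makes it straightforward to scope different retention rules to different topics.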

Conclusion

Moving data from Apache Kafka to Azure Blob Storage is a common requirement for building scalable data pipelines and data lakes in the cloud. Kafka Connect sink connectors provide a flexible and robust way to export data with rich formatting and partitioning options. Azure Stream Analytics offers a serverless, Azure-native approach with powerful real-time processing capabilities. Tiered Storage within Kafka itself presents a solution for extending Kafka's storage capacity with Azure Blob for long-term retention.

The best choice depends on your specific requirements regarding data transformation, operational overhead, schema management, existing infrastructure, and cost considerations. By understanding these methods and applying best practices, you can effectively and reliably stream your Kafka data into Azure Blob Storage.

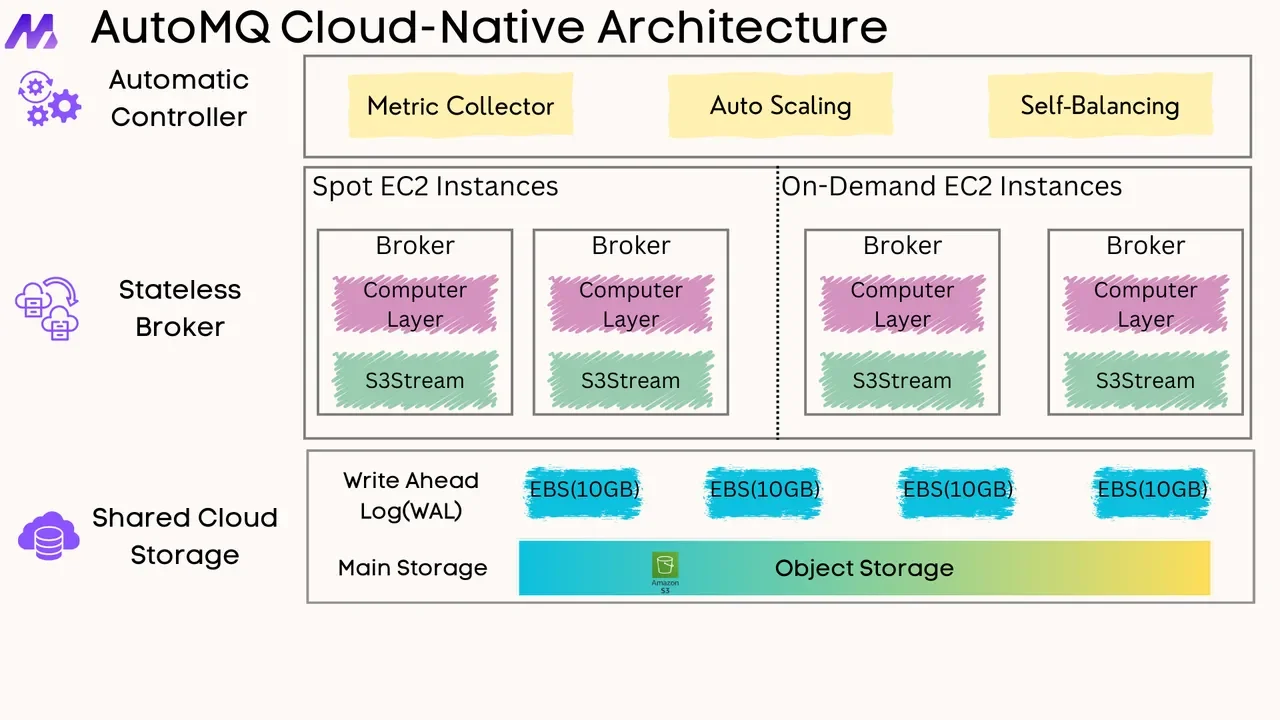

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging