Introduction

As data becomes the lifeblood of modern applications, moving it efficiently and reliably between different systems is a critical challenge. In event-driven architectures, Apache Kafka has emerged as the de facto standard for a central streaming platform. However, Kafka itself doesn't move data to and from other systems like databases, object stores, or SaaS applications. That crucial role is filled by Kafka Connect, a powerful framework within the Kafka ecosystem designed for exactly this purpose.

At the heart of Kafka Connect are two fundamental concepts: Source Connectors and Sink Connectors. Understanding the distinction between them is the first step toward mastering data integration with Kafka. This post covers what these connectors are, how they work, their key differences, and the best practices for using them to build robust data pipelines.

What is Kafka Connect?

Before we compare Source and Sink connectors, it's essential to understand the framework they belong to. Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other data systems. It provides a simple, configuration-driven way to build data pipelines without writing custom integration code.

Instead of every developer building their own application to pull data from a database and produce it to Kafka, or consume from Kafka and write to a search index, Kafka Connect offers a standardized solution. It operates with a few key components:

- Workers: These are the running processes that execute the connectors and their tasks. They can run in a scalable and fault-tolerant distributed mode or as a single standalone process for development and testing.
- Connectors: A connector is a high-level configuration that defines how to connect to an external system. It's a blueprint for data movement.
- Tasks: A connector's job is split into smaller pieces of work called tasks (up to the configured tasks.max), which the workers run in parallel to move the actual data.
- Converters: These components handle the format of the data, converting it between Kafka Connect's internal representation and the format stored in Kafka, such as JSON, Avro, or Protobuf.
- Transformations (SMTs): Single Message Transformations (SMTs) allow for simple, in-flight modifications to data as it passes through the pipeline, such as renaming fields, masking data, or adding metadata.
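To make these components concrete, here is a minimal sketch of a connector definition written as a Python dictionary (the same key/value pairs would normally live in a JSON or properties file). The connector, converter, and transformation class names ship with Apache Kafka; the connector's file path, topic name, and static field values are made-up placeholders, and the `transform_names` helper is purely illustrative.

```python
import json

# Illustrative connector definition. The file path, topic name, and the
# static field values are placeholders, not taken from a real deployment.
connector_config = {
    # Connector: which plugin to run (a simple file source bundled with Kafka).
    "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
    # Tasks: upper bound on the number of parallel tasks.
    "tasks.max": "1",
    "file": "/tmp/app.log",
    "topic": "app-logs",
    # Converters: how keys and values are serialized into Kafka.
    "key.converter": "org.apache.kafka.connect.storage.StringConverter",
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "false",
    # SMTs: wrap each line in a map, then add a static metadata field.
    "transforms": "MakeMap,InsertSource",
    "transforms.MakeMap.type": "org.apache.kafka.connect.transforms.HoistField$Value",
    "transforms.MakeMap.field": "line",
    "transforms.InsertSource.type": "org.apache.kafka.connect.transforms.InsertField$Value",
    "transforms.InsertSource.static.field": "data_source",
    "transforms.InsertSource.static.value": "app-server-1",
}


def transform_names(config):
    """Return the SMT aliases in the order they will be applied."""
    raw = config.get("transforms", "")
    return [name.strip() for name in raw.split(",") if name.strip()]


if __name__ == "__main__":
    print(json.dumps(connector_config, indent=2))
    print(transform_names(connector_config))
```

Transformations run in the order listed under `transforms`, which is why the line is wrapped into a map before the extra field is inserted.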

Now, let's explore the two types of connectors that bring this framework to life.

![Kafka Connect in Kafka System [5]](/blog/kafka-connect-source-vs-sink-connectors/1.png)

Source Connectors: The Ingress Gateway to Kafka

A Source Connector is responsible for ingesting data from an external system and publishing it into Kafka topics. Think of it as the on-ramp to the Kafka streaming highway. It continuously monitors a source system for new data and converts it into records that can be streamed through Kafka.

How Source Connectors Work

The data flow for a source connector is straightforward: External Source System → Source Connector → Kafka Topic.

The core responsibility of a source connector is to read data from a source, be it a relational database, a set of log files, or a message queue. A critical part of this process is offset management. The connector must keep track of what data it has already successfully read and published to Kafka, so that if it is stopped and restarted, it can resume from where it left off without missing data. By default this gives at-least-once delivery: records published after the last stored offset may be sent again after a failure. In distributed mode, this progress, or "offset," is stored in a dedicated, compacted Kafka topic whose name is set by the worker's offset.storage.topic property; in standalone mode it is kept in a local file.
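The resume-from-offset idea can be sketched with a toy simulation. This is not the Kafka Connect API: `poll`, `offset_store`, and the `"app.log"` partition key are hypothetical names, and a plain dictionary stands in for the offsets topic.

```python
def poll(source_lines, offsets, partition, batch_size=2):
    """Read the next batch after the stored offset.

    Returns the batch and the offset to store once the batch is published.
    """
    start = offsets.get(partition, 0)
    batch = source_lines[start:start + batch_size]
    return batch, start + len(batch)


source_lines = ["a", "b", "c", "d", "e"]
offset_store = {}   # stand-in for the offsets topic (or the standalone offsets file)
published = []      # stand-in for the Kafka topic

# First run: two polls, then the process stops.
for _ in range(2):
    batch, next_offset = poll(source_lines, offset_store, "app.log")
    published.extend(batch)
    offset_store["app.log"] = next_offset   # stored only after publishing

# Restart: a fresh task reads the stored offset and continues from there.
batch, next_offset = poll(source_lines, offset_store, "app.log", batch_size=10)
published.extend(batch)
offset_store["app.log"] = next_offset

print(published)
print(offset_store)
```

Because the offset is stored only after a batch is published, a crash between those two steps would cause the batch to be read and published again on restart, which is the at-least-once behavior in miniature.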

Source connectors employ various ingestion patterns depending on the source system:

- Query-based Polling: A common pattern for databases, where the connector periodically runs a SQL query to find new or updated rows. For example, it might query for records with a timestamp greater than the last one it processed.
- Change Data Capture (CDC): A more advanced and real-time pattern for databases. Instead of polling the tables, a CDC connector reads the database's transaction log (e.g., PostgreSQL's WAL or MySQL's binlog). This allows it to capture every single INSERT, UPDATE, and DELETE operation as a distinct event, providing a granular, low-latency stream of changes.
- File Tailing/Spooling: Used for ingesting data from files. A connector can "tail" a file, reading new lines as they are appended (common for application logs), or it can monitor a "spool directory" and ingest each new file that is placed there.
- API Polling: Some source connectors are designed to periodically call an external HTTP API to fetch data and publish it to Kafka.

Common examples include JDBC connectors for databases, SpoolDir connectors for files, and connectors for various message queueing systems.
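The query-based polling pattern described above can be sketched with SQLite from the Python standard library. The `customers` table, its `updated_at` column, and the `poll_new_rows` helper are assumptions made for illustration; a real connector would run a similar query against the production database on a timer.

```python
import sqlite3


def poll_new_rows(conn, last_ts):
    """Fetch rows changed since last_ts and return them with the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_ts,),
    ).fetchall()
    new_last_ts = rows[-1][2] if rows else last_ts
    return rows, new_last_ts


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", 100), (2, "Grace", 200)],
)

# First poll picks up both rows.
first_batch, last_ts = poll_new_rows(conn, 0)

# Between polls: one row is updated, another is deleted.
conn.execute("UPDATE customers SET name = 'Ada L.', updated_at = 300 WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 2")

# Second poll sees the update, but the delete leaves no row to find.
second_batch, last_ts = poll_new_rows(conn, last_ts)

print(first_batch)
print(second_batch)
```

The second poll returns the updated row but nothing for the deleted one. This is one reason log-based CDC is often preferred when deletes matter.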

Sink Connectors: The Egress Gateway from Kafka

A Sink Connector does the opposite of a source connector. It is responsible for reading data from Kafka topics and writing it to an external destination system. It's the off-ramp from the Kafka highway, delivering processed events to their final destination for storage, analysis, or indexing.

How Sink Connectors Work

The data flow for a sink connector is the mirror image of a source: Kafka Topic → Sink Connector → External Destination System.

Sink connectors operate as Kafka consumers. They subscribe to one or more Kafka topics, read records in batches, and then write those records to a target system, such as a data lake, a search index, or a data warehouse.

For offset management, sink connectors leverage Kafka's built-in consumer group mechanism. As the connector successfully writes batches of records to the destination, it commits the corresponding offsets back to Kafka. This is the standard way Kafka consumers track their progress, ensuring data is processed reliably. If a sink connector task fails and restarts, it will resume reading from the last committed offset in the Kafka topic.
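As a rough illustration of the write-then-commit loop, the following toy simulation uses a list as the Kafka topic partition and a dictionary as the committed offset. `FlakySink` and `run_task` are hypothetical names, not part of Kafka Connect.

```python
class FlakySink:
    """In-memory destination that fails once, on a chosen write call."""

    def __init__(self, fail_on_call):
        self.rows = []
        self.calls = 0
        self.fail_on_call = fail_on_call

    def write(self, batch):
        self.calls += 1
        if self.calls == self.fail_on_call:
            raise ConnectionError("destination unavailable")
        self.rows.extend(batch)


def run_task(topic, sink, offsets, batch_size=2):
    """Consume from the committed offset; commit only after a successful write."""
    position = offsets["committed"]
    while position < len(topic):
        batch = topic[position:position + batch_size]
        sink.write(batch)                  # may raise; the offset is not advanced
        position += len(batch)
        offsets["committed"] = position    # commit after the write succeeds


topic = ["e0", "e1", "e2", "e3", "e4"]
offsets = {"committed": 0}
sink = FlakySink(fail_on_call=2)

try:
    run_task(topic, sink, offsets)
except ConnectionError:
    pass  # the task failed; the framework would restart it

committed_after_failure = offsets["committed"]

# Restarted task resumes from the last committed offset.
run_task(topic, sink, offsets)

print(committed_after_failure, offsets["committed"], sink.rows)
```

In this sketch the failure happens before the write lands, so nothing is duplicated. If a task instead crashed after writing but before committing, the same batch would be delivered again on restart.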

Common egress patterns for sink connectors include:

- Data Lake/Warehouse Loading: A very popular use case where connectors consume data from Kafka and write it to object storage (like Amazon S3 or Google Cloud Storage) in formats like Parquet or Avro. This is a foundational pattern for building data lakes and feeding analytics platforms.
- Search Indexing: Streaming events from Kafka directly into a search platform like Elasticsearch or OpenSearch. This enables real-time searchability of data as it's generated.
- Database Upserting: Writing records to a relational or NoSQL database. Many sink connectors can perform "upsert" operations, where a new record is inserted, but if a record with the same key already exists, it is updated instead.
- API Calls: Using an HTTP sink connector to forward Kafka messages to an external REST API, integrating Kafka with a wide range of web services and SaaS platforms.

Core Differences: A Side-by-Side Comparison

While both are part of the Kafka Connect framework, their roles and operational mechanics are fundamentally different. The following table summarizes their key distinctions:

| Feature | Source Connector | Sink Connector |
|---|---|---|
| Primary Function | Data Ingestion | Data Egress |
| Data Flow Direction | External System → Kafka | Kafka → External System |
| Kafka Role | Acts like a Kafka Producer | Acts like a Kafka Consumer |
| Offset Management | Manages its own source progress (e.g., last timestamp, log position). In distributed mode, stores this in the topic named by offset.storage.topic. | Uses standard Kafka consumer group offsets, stored in Kafka's __consumer_offsets topic. |
| Parallelism (tasks.max) | Parallelism is determined by the source system's ability to be partitioned (e.g., multiple files, DB table partitions). | Parallelism is limited by the number of partitions in the consumed Kafka topic(s). |
| Key Configuration Focus | Connection details for the source, polling intervals, topic creation rules, CDC-specific settings. | Connection details for the target, input topics, batch sizes, delivery guarantees, error handling (DLQs). |
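The sink-side parallelism limit in the table can be illustrated with a small calculation. The helper names and the round-robin spread are assumptions for the sketch; in a real cluster the consumer group's partition assignor decides which task gets which partition.

```python
def active_sink_tasks(tasks_max, total_partitions):
    """Number of sink tasks that can actually receive data."""
    return min(tasks_max, total_partitions)


def assign_partitions(partitions, tasks_max):
    """Spread partitions across tasks round-robin; surplus tasks stay empty."""
    assignment = {task_id: [] for task_id in range(tasks_max)}
    active = min(tasks_max, len(partitions))
    for index, partition in enumerate(partitions):
        assignment[index % active].append(partition)
    return assignment


partitions = ["orders-0", "orders-1", "orders-2"]
assignment = assign_partitions(partitions, tasks_max=5)
idle_tasks = [task_id for task_id, owned in assignment.items() if not owned]

print(active_sink_tasks(5, len(partitions)))
print(assignment)
print(idle_tasks)
```

With three partitions and tasks.max set to 5, two tasks end up with nothing to read, so raising tasks.max beyond the partition count adds no throughput for a sink.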

Best Practices for Using Kafka Connectors

To move from basic usage to building production-grade data pipelines, it's crucial to follow established best practices.

Use a Schema Registry

For any serious use case, avoid plain JSON. Use a structured data format like Avro or Protobuf in conjunction with a Schema Registry. This provides several benefits:

- Data Contracts: Enforces a schema on the data written to Kafka, preventing data quality issues from "garbage" data produced by upstream systems.
- Efficient Serialization: Binary formats like Avro are more compact and efficient than JSON.
- Safe Schema Evolution: The registry manages schema versions, ensuring that downstream consumers (including sink connectors) can handle schema changes without breaking.
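To give a feel for what "safe schema evolution" means, here is a deliberately simplified sketch using Avro-style schema dictionaries. It checks only one rule (a field added in a newer schema needs a default so that old records can still be read); real schema registries apply a fuller set of compatibility rules. The `Order` schemas and both helper functions are invented for illustration.

```python
def added_fields_without_default(old_schema, new_schema):
    """List fields that are new in new_schema and have no default value."""
    old_names = {field["name"] for field in old_schema["fields"]}
    return [
        field["name"]
        for field in new_schema["fields"]
        if field["name"] not in old_names and "default" not in field
    ]


def read_with_schema(record, reader_schema):
    """Project a decoded record onto the reader schema, filling in defaults."""
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in record:
            result[name] = record[name]
        elif "default" in field:
            result[name] = field["default"]
        else:
            raise ValueError(f"missing field with no default: {name}")
    return result


order_v1 = {"type": "record", "name": "Order", "fields": [
    {"name": "id", "type": "long"},
    {"name": "amount", "type": "double"},
]}
# v2 adds a field WITH a default: old records remain readable.
order_v2 = {"type": "record", "name": "Order", "fields": order_v1["fields"] + [
    {"name": "currency", "type": "string", "default": "USD"},
]}
# This variant adds a field WITHOUT a default: old records cannot be read.
order_v2_bad = {"type": "record", "name": "Order", "fields": order_v1["fields"] + [
    {"name": "channel", "type": "string"},
]}

old_record = {"id": 1, "amount": 9.5}
print(added_fields_without_default(order_v1, order_v2))
print(added_fields_without_default(order_v1, order_v2_bad))
print(read_with_schema(old_record, order_v2))
```

A registry configured to enforce compatibility would reject a change like `order_v2_bad` at registration time, before any consumer sees data it cannot decode.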

Run in Distributed Mode

While standalone mode is useful for development, production workloads should always use distributed mode. This provides scalability (by adding more workers to the cluster), high availability, and automatic fault tolerance. If a worker node goes down, Kafka Connect will automatically rebalance the running connectors and tasks among the remaining workers.

Prioritize Idempotency

Whenever possible, use sink connectors that support idempotent writes. Idempotency means that writing the same data multiple times has the same effect as writing it once. Because sink connectors generally deliver records at least once, idempotent writes are a key ingredient for achieving effectively exactly-once results in the destination. If a sink connector retries a batch of messages due to a temporary network failure, an idempotent sink ensures that duplicate records are not created in the destination system.
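One common way to get idempotent writes is a keyed upsert. The sketch below uses SQLite from the Python standard library to show the effect; the `orders` table and `write_batch` helper are assumptions for the example, and real sink connectors implement this in their own way against their own targets.

```python
import sqlite3


def write_batch(conn, batch):
    """Upsert each record by primary key (needs SQLite 3.24+ for ON CONFLICT)."""
    conn.executemany(
        "INSERT INTO orders (order_id, status) VALUES (?, ?) "
        "ON CONFLICT(order_id) DO UPDATE SET status = excluded.status",
        batch,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, status TEXT)")

batch = [("o-1", "created"), ("o-2", "created"), ("o-1", "paid")]

write_batch(conn, batch)
write_batch(conn, batch)  # simulated retry of the same batch

rows = conn.execute("SELECT order_id, status FROM orders ORDER BY order_id").fetchall()
print(rows)
```

Replaying the batch leaves the table in the same state as applying it once. A plain INSERT would instead either fail on the duplicate key or, without a key, create duplicate rows.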

Master Error Handling

Data pipelines will inevitably encounter bad data or temporary system unavailability. Configure a robust error handling strategy. Kafka Connect provides mechanisms for retrying failed operations with exponential backoff. For non-retriable errors (like a message that can never be parsed), configure a Dead Letter Queue (DLQ), which is available for sink connectors. This sends the problematic message and its metadata to a separate Kafka topic for later inspection, allowing the main pipeline to continue processing valid data.
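In Kafka Connect itself this behavior is driven by configuration properties such as errors.tolerance, errors.retry.timeout, errors.retry.delay.max.ms, and errors.deadletterqueue.topic.name. The following toy simulation only illustrates the underlying idea of retrying transient failures while routing unparseable records to a dead letter list; `process`, `backoff_delays`, and `flaky_write` are hypothetical names.

```python
import json


def backoff_delays(max_retries, base_ms=100, cap_ms=1000):
    """Exponential backoff schedule in milliseconds, capped at cap_ms."""
    return [min(base_ms * 2 ** attempt, cap_ms) for attempt in range(max_retries)]


def process(records, write, max_retries=3):
    """Retry transient write failures; send unparseable records to the DLQ."""
    delivered, dead_letters = [], []
    for raw in records:
        try:
            event = json.loads(raw)
        except json.JSONDecodeError as exc:
            # Non-retriable: retrying will never make this parse.
            dead_letters.append({"value": raw, "error": type(exc).__name__})
            continue
        for attempt in range(max_retries + 1):
            try:
                write(event)
                delivered.append(event)
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise
                # A real pipeline would sleep backoff_delays(max_retries)[attempt] ms here.
    return delivered, dead_letters


attempts = {"count": 0}


def flaky_write(event):
    """Fails on the very first call only, to simulate a temporary outage."""
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise ConnectionError("temporary outage")


delivered, dead_letters = process(['{"id": 1}', "not json", '{"id": 2}'], flaky_write)
print(delivered)
print(dead_letters)
print(backoff_delays(5))
```

The valid records are delivered despite the transient failure, and the malformed record is set aside with its error type instead of blocking the pipeline.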

Manage Configurations as Code

Treat your connector configurations like you treat your application code. Store the JSON configuration files in a version control system like Git and automate their deployment using the Kafka Connect REST API. This makes your data pipelines repeatable, auditable, and easier to manage across different environments (dev, staging, prod).
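As a sketch of what automated deployment might look like, the snippet below builds a request for the Kafka Connect REST API's `PUT /connectors/{name}/config` endpoint, which creates the connector if it does not exist and updates it otherwise. The worker URL, connector name, and the `com.example.OrdersSinkConnector` class are placeholders; the request is constructed but not sent.

```python
import json
import urllib.request


def build_deploy_request(worker_url, name, config):
    """Build a PUT request that creates or updates a connector's configuration."""
    body = json.dumps(config).encode("utf-8")
    return urllib.request.Request(
        url=f"{worker_url.rstrip('/')}/connectors/{name}/config",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


# In practice this dictionary would be loaded from a JSON file tracked in Git.
orders_sink_config = {
    "connector.class": "com.example.OrdersSinkConnector",  # placeholder class
    "topics": "orders",
    "tasks.max": "2",
}

request = build_deploy_request("http://localhost:8083/", "orders-sink", orders_sink_config)
print(request.get_method(), request.full_url)

# To actually deploy against a running worker:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Because the PUT call is safe to repeat with the same body, a CI job can apply every configuration in the repository on each run without first checking which connectors already exist.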

How to Choose the Right Connector

Choosing a connector is a straightforward process:

- Determine the Data Direction: Is your goal to get data into Kafka or out of Kafka? This tells you whether you need a Source or a Sink connector.
- Identify the External System: What is the other system involved? A PostgreSQL database, an S3 bucket, a Salesforce instance?
- Find a Pre-Built Connector: The vast majority of common data systems have pre-built connectors available, many as open-source projects. Search community hubs and documentation for a connector that matches your system.
- Consider Custom Development (as a Last Resort): If a suitable connector doesn't exist, you can develop your own using Kafka Connect's APIs. However, this is a significant undertaking that requires deep knowledge of both the external system and the Kafka Connect framework. Always favor a well-maintained, pre-built connector if one is available.

Conclusion

Source and Sink connectors are the two pillars of Kafka Connect, providing the essential ingress and egress gateways for your Kafka cluster. Source connectors act as producers, bringing data in from external systems, while Sink connectors act as consumers, delivering data out to its final destination.

By leveraging this powerful, configuration-driven framework and its vast ecosystem of pre-built connectors, you can build and manage complex, real-time data pipelines with remarkable efficiency. Moving beyond custom code to a standardized, scalable, and fault-tolerant solution like Kafka Connect is a defining step in maturing a modern data architecture.

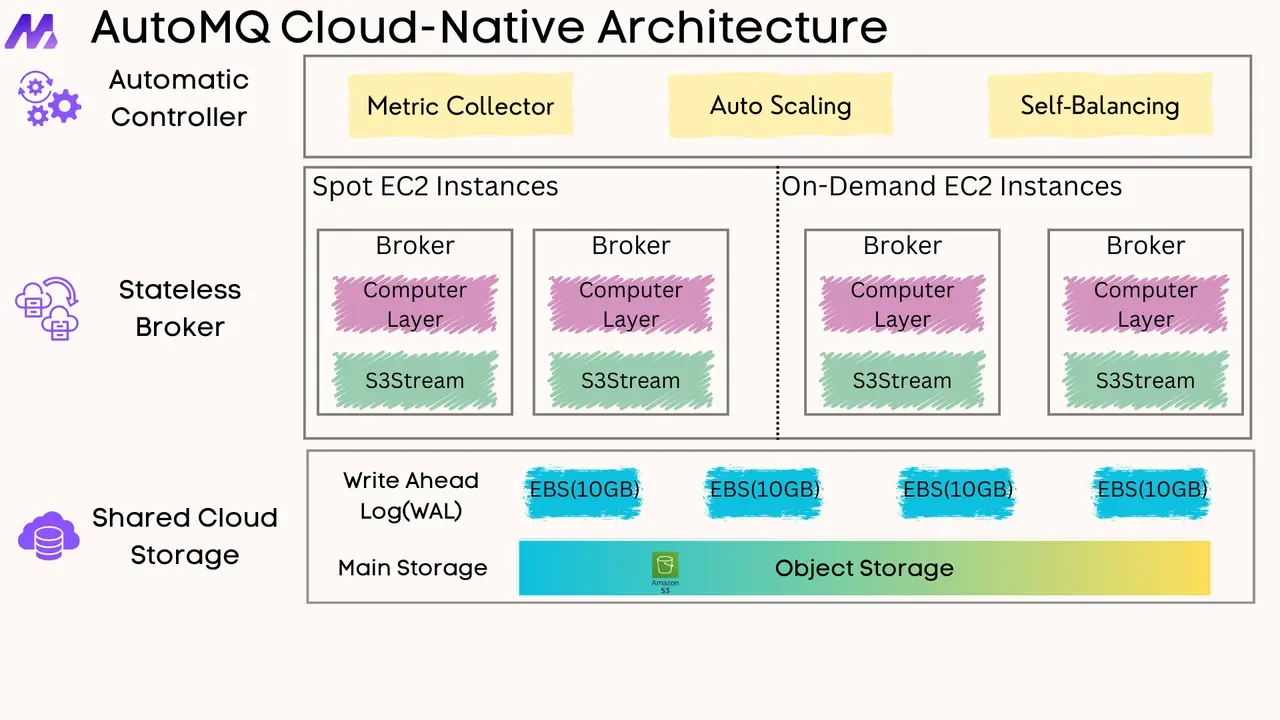

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging