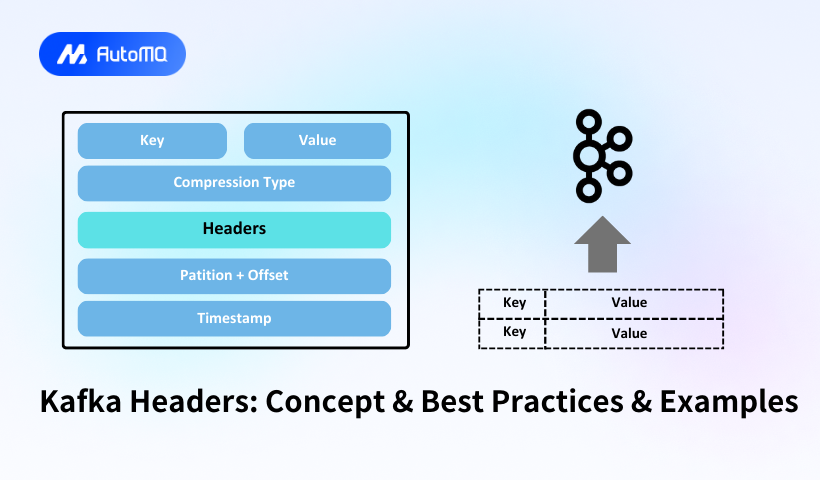

Kafka headers provide a powerful mechanism for attaching metadata to messages, enabling sophisticated message routing, tracing, and processing capabilities. Introduced in Apache Kafka version 0.11.0.0, headers have become an essential feature for building advanced event-driven architectures. This comprehensive guide explores Kafka headers from their fundamental concepts to implementation details and best practices.

Understanding Kafka Headers

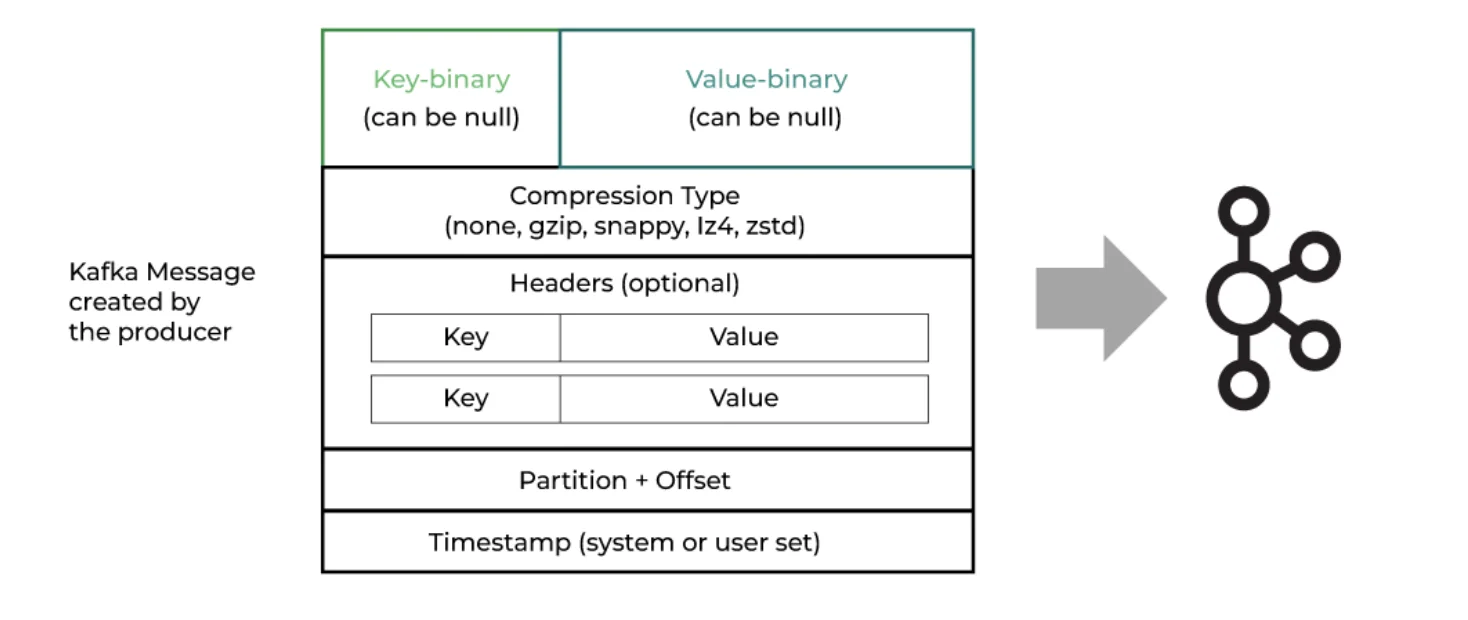

Kafka headers are key-value pairs that accompany the main message payload, functioning similarly to HTTP headers by providing additional context and metadata about the message. Unlike the message key and value, which typically contain the primary content, headers offer a structured way to include supplementary information that enhances message processing capabilities.

Definition and Structure

At their core, Kafka headers consist of a key (String) and a value (byte array). The key must be unique within a single message to avoid ambiguity, while the value can store various types of data in serialized form. Headers are appended to the end of the Kafka message format, providing a flexible extension mechanism without disrupting existing message structures.

Purpose and Significance

Headers fulfill several critical needs in Kafka-based systems:

-

Metadata Storage : Headers provide a dedicated space for metadata, keeping it separate from the business payload.

-

Message Context : Headers enhance the context of messages by including information about their origin, purpose, and processing requirements.

-

Processing Instructions : Headers can contain directives for consumers on how to handle the message.

-

System Integration : Headers enable seamless integration with other systems by carrying protocol-specific information.

By separating metadata from the actual message content, headers allow for more flexible and maintainable message processing pipelines, especially in complex distributed systems.

Implementing Kafka Headers

Implementing Kafka headers involves both producer-side creation and consumer-side interpretation. Different client libraries provide specific mechanisms for working with headers, but the underlying concepts remain consistent.

Producer-Side Implementation

Java Implementation

In Java, headers are added using the ProducerRecord class:

ProducerRecord<String, String> record = new ProducerRecord<>(topic, key, value);

record.headers().add("content-type", "application/json".getBytes());

record.headers().add("created-at", Long.toString(System.currentTimeMillis()).getBytes());

record.headers().add("trace-id", "12345".getBytes());

producer.send(record);This approach allows for multiple headers to be attached to a single message.

Python Implementation

In Python, using the confluent_kafka library:

from confluent_kafka import Producer

producer = Producer({'bootstrap.servers': "localhost:9092"})

headers = [('headerKey', b'headerValue')]

producer.produce('custom-headers-topic', key='key', value='value',

headers=headers, callback=delivery_report)

producer.flush()The Python implementation requires headers to be provided as a list of tuples, with values as byte arrays.

.NET Implementation

Using Confluent's .NET client:

var headers = new Headers();

headers.Add("content-type", Encoding.UTF8.GetBytes("application/json"));

var record = new Message<string, string>

{

Key = key,

Value = value,

Headers = headers

};

producer.Produce(topic, record);The .NET implementation provides a dedicated Headers class with methods for adding and manipulating headers.

Consumer-Side Implementation

On the consumer side, headers can be accessed and processed as follows:

Java Implementation

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : records) {

Headers headers = record.headers();

for (Header header : headers) {

System.out.println("Key: " + header.key() + ", Value: " +

new String(header.value()));

}

}This allows consumers to inspect and utilize the header information for processing decisions.

Python Implementation

from confluent_kafka import Consumer

consumer = Consumer({

'bootstrap.servers': "localhost:9092",

'group.id': "test-group",

'auto.offset.reset': 'earliest'

})

consumer.subscribe(['custom-headers-topic'])

msg = consumer.poll(timeout=1.0)

if msg is not None:

print('Headers: {}'.format(msg.headers()))Python consumers can access the headers as a list of key-value tuples.

Use Cases for Kafka Headers

Kafka headers enable numerous sophisticated use cases in event-driven architectures:

Enhanced Message Routing

Headers facilitate advanced routing mechanisms, allowing systems to direct messages based on metadata rather than content. For example, a service identifier in the header can route messages to specific processing pipelines without deserializing the payload, improving performance and reducing coupling between systems.

Distributed Tracing and Observability

Headers are ideal for implementing distributed tracing across microservices. By including trace IDs in headers, organizations can track transactions as they traverse different applications and APIs connected through Kafka. APM solutions like NewRelic, Dynatrace, and OpenTracing take advantage of this capability for end-to-end transaction monitoring.

Metadata for Governance and Compliance

Headers can store audit information such as message origins, timestamps, and user identities. This metadata supports governance requirements, enables data lineage tracking, and facilitates compliance with regulatory standards without modifying the actual business payload.

Content Type and Format Indication

Headers can specify the format and encoding of the message payload (e.g., "content-type": "application/json"), allowing consumers to properly deserialize and process the content without prior knowledge of its structure.

Message Filtering

Consumers can efficiently filter messages based on header values without deserializing the entire payload, significantly improving performance for selective processing scenarios.

Best Practices for Kafka Headers

Implementing Kafka headers effectively requires careful consideration of several best practices:

Header Naming and Structure

-

Standardize Header Fields : Establish consistent naming conventions for headers across all producers to ensure uniformity and predictability in your Kafka ecosystem.

-

Use Descriptive, Concise Keys : Select meaningful, yet concise header keys that clearly indicate their purpose, such as "content-type" instead of "ct" or "message-format".

-

Ensure Key Uniqueness : Within a single message, each header key should be unique to prevent ambiguity and data loss. Adding a header with an existing key will overwrite the previous value.

Performance Considerations

-

Minimize Header Size : Keep headers light to reduce overhead in message transmission and storage. Large headers can impact Kafka's performance, especially in high-throughput scenarios.

-

Limit the Number of Headers : Use only necessary headers rather than including every possible piece of metadata, focusing on information needed for routing, processing, or compliance.

-

Consider Serialization Efficiency : When serializing complex objects for headers, use efficient formats to minimize size and processing overhead.

Header Content Recommendations

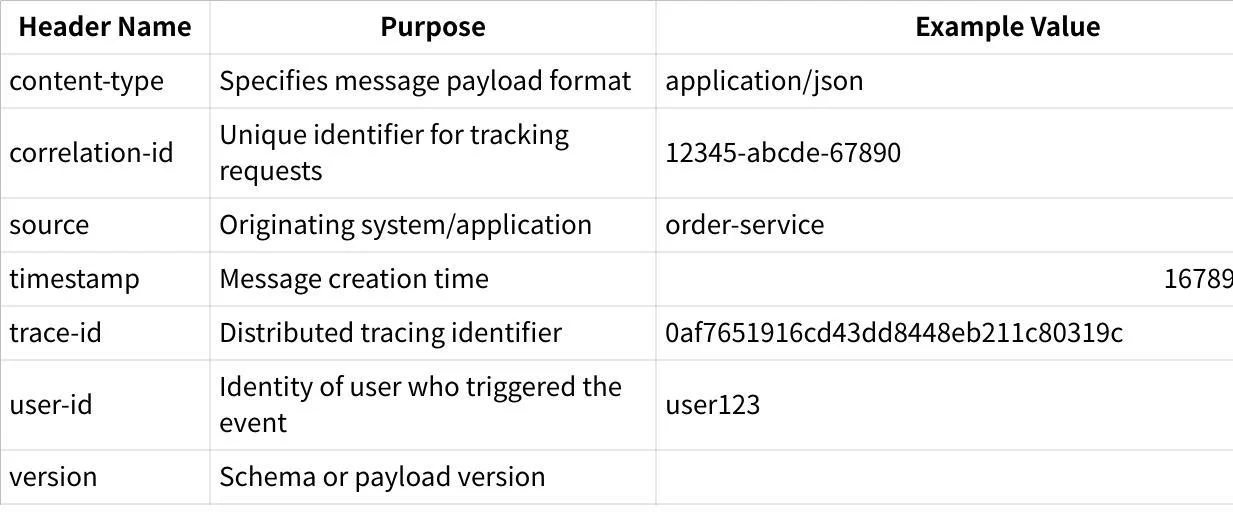

The following table outlines commonly used headers and their purposes:

Integration Patterns

-

Consider CloudEvents Standard : The CloudEvents specification provides a standardized format for event metadata that can be mapped to Kafka headers for interoperability with other event-driven systems.

-

Consistent Header Processing : Implement consistent header processing logic across all consumers to ensure uniform handling of metadata.

-

Header-Based Routing : Design systems that can route messages based on header values rather than requiring payload deserialization for basic routing decisions.

Common Issues and Solutions

Working with Kafka headers may present several challenges that require careful handling:

Performance Impact

Headers increase the size of Kafka messages, which can impact storage requirements and network overhead, especially in high-volume systems. To mitigate this:

-

Keep headers small and focused on essential metadata.

-

Consider using abbreviated keys for frequently used headers.

-

Monitor the impact of headers on message size and adjust accordingly.

Serialization and Compatibility

Since header values are stored as byte arrays, serialization and deserialization require careful handling:

-

Implement consistent serialization/deserialization mechanisms across all producers and consumers.

-

Consider using standardized formats like Protocol Buffers or JSON for complex header values.

-

Ensure backward compatibility when evolving header structures over time.

Older Client Compatibility

Not all Kafka clients support headers, particularly those designed for versions earlier than 0.11.0.0:

-

Check client library compatibility before implementing headers.

-

Consider fallback mechanisms for systems using older clients.

-

Plan for a gradual transition to header-based architectures in heterogeneous environments.

Conclusion

Kafka headers provide a powerful mechanism for enhancing message processing capabilities in event-driven architectures. By separating metadata from the actual payload, headers enable sophisticated routing, tracing, and filtering capabilities without sacrificing performance or flexibility.

Effective implementation of Kafka headers requires careful consideration of naming conventions, performance implications, and serialization strategies. When properly implemented, headers can significantly improve the robustness, observability, and maintainability of Kafka-based systems.

Organizations building complex event-driven architectures should consider incorporating Kafka headers into their messaging patterns, establishing clear standards and guidelines to ensure consistent usage across their ecosystem. With the right approach, Kafka headers can transform simple messages passing into sophisticated, context-aware event processing.

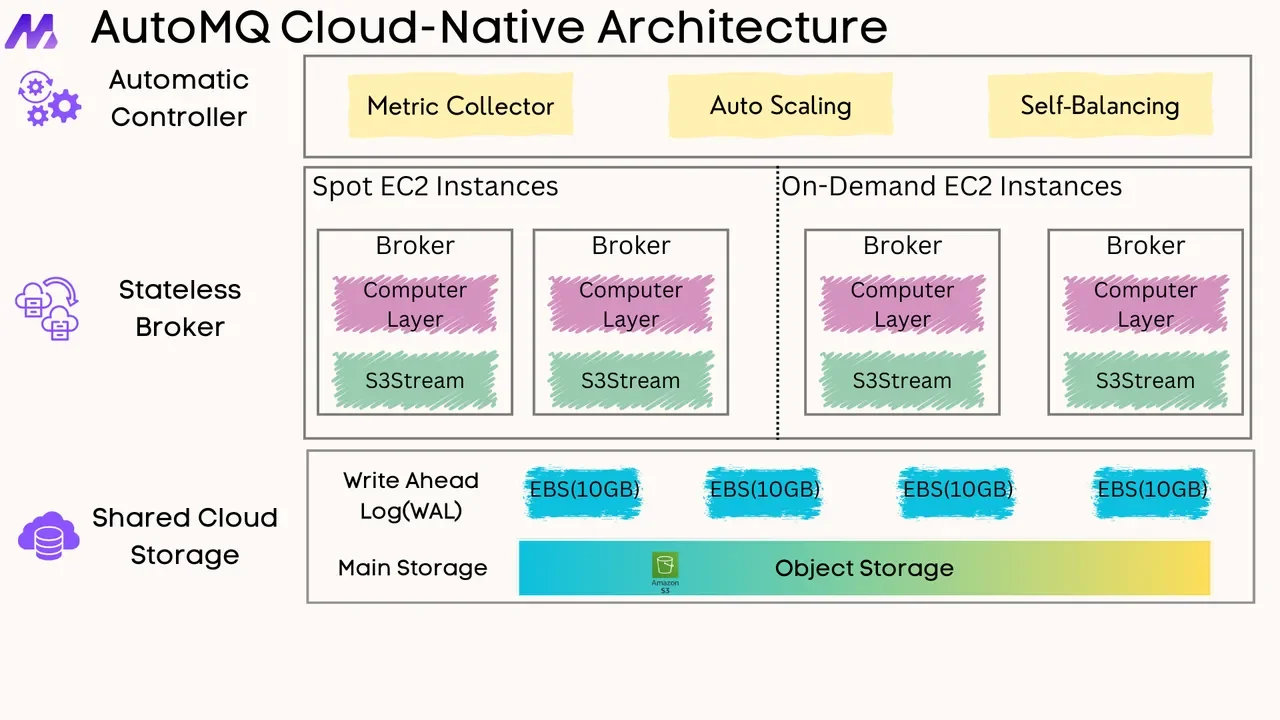

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging