Overview

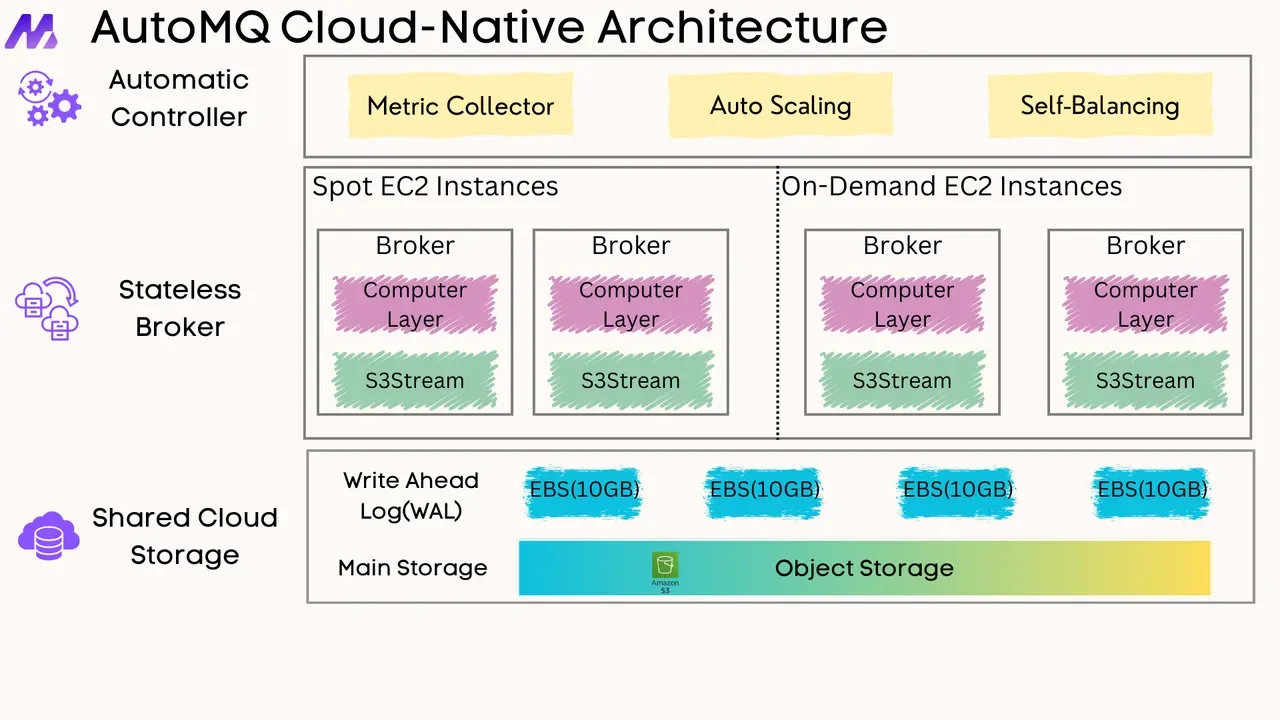

Apache Kafka has become the backbone of real-time data streaming for countless organizations. Choosing how to deploy and manage Kafka is a critical decision that impacts scalability, operational overhead, and cost. Two primary approaches dominate the landscape: traditional Virtual Machines (VMs) and modern container orchestration with Kubernetes. This blog explores both, helping you decide which path best suits your needs.

Understanding Apache Kafka: The Core Concepts

Before diving into deployment models, let's briefly touch upon what makes Kafka tick. Kafka is a distributed event streaming platform designed to handle high volumes of data with low latency.

- Brokers: These are the Kafka servers that store data. A Kafka cluster consists of multiple brokers.
- Topics: Think of topics as categories or feeds to which records (messages) are published. For example, you might have a topic for `user_clicks` and another for `order_updates`.
- Partitions: Topics are split into partitions. Each partition is an ordered, immutable sequence of records. Partitions allow Kafka to scale horizontally by distributing data and load across multiple brokers.
- Producers: Applications that publish streams of records to one or more Kafka topics.
- Consumers: Applications that subscribe to topics and process the streams of records.
- Offsets: Each record in a partition is assigned a unique sequential ID called an offset. Consumers keep track of this offset to know which records they've processed.
- ZooKeeper/KRaft: Historically, Kafka used ZooKeeper for metadata management and leader election. KRaft (Kafka Raft metadata mode) moves metadata management into Kafka itself, simplifying the architecture. KRaft has been production-ready since Kafka 3.3, and Kafka 4.0 removes ZooKeeper support entirely.

Kafka's power lies in its ability to decouple data producers from data consumers, providing a durable and scalable log for event-driven architectures.
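To make partitions and offsets concrete, here is a toy in-memory model in Python. It is only a sketch: `TinyTopic` and `TinyConsumer` are hypothetical names, not Kafka client classes, and `zlib.crc32` stands in for whatever hash a real Kafka client uses to map keys to partitions.

```python
from __future__ import annotations

import zlib
from collections import defaultdict


class TinyTopic:
    """Toy model of a topic: a fixed number of append-only partitions."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions: list[list[tuple[str, str]]] = [
            [] for _ in range(num_partitions)
        ]

    def publish(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return its (partition, offset)."""
        # Assumption: crc32 is a stand-in hash, not Kafka's own partitioner.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        log = self.partitions[partition]
        log.append((key, value))
        # The offset is simply the record's position within its partition.
        return partition, len(log) - 1

    def read(self, partition: int, offset: int) -> list[tuple[str, str]]:
        """Return all records in `partition` from `offset` onwards."""
        return self.partitions[partition][offset:]


class TinyConsumer:
    """Toy consumer that remembers the next offset to read per partition."""

    def __init__(self, topic: TinyTopic) -> None:
        self.topic = topic
        self.next_offset: dict[int, int] = defaultdict(int)

    def poll(self, partition: int) -> list[tuple[str, str]]:
        records = self.topic.read(partition, self.next_offset[partition])
        # Advancing the offset is what marks these records as processed.
        self.next_offset[partition] += len(records)
        return records
```

Publishing two records with the same key lands them in the same partition with consecutive offsets, and a second `poll` on that partition returns nothing new. That is the ordering and progress-tracking behavior the bullets above describe, minus replication, durability, and networking.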

Kafka on Virtual Machines: The Traditional Approach

Deploying Kafka on VMs means you're setting up Kafka brokers on individual virtual servers, whether in your on-premises data center or in the cloud (e.g., on EC2 instances or Azure VMs).

![Virtual Machine Structure [15]](/blog/kafka-in-virtual-machines-vs-kafka-in-containerskubernetes/1.png)

How it Works & Setup

- Provision VMs: You start by creating several VMs. The number and size depend on your expected load and fault tolerance requirements.
- Install Dependencies: Each VM needs Java installed, as Kafka runs on the JVM.
- Download & Configure Kafka: You download the Kafka binaries and configure each broker. Key configuration files like `server.properties` need to be tailored for each broker (e.g., `broker.id`, `listeners`, `advertised.listeners`, `log.dirs`). If using ZooKeeper, `zookeeper.connect` points to your ZooKeeper ensemble.
- Start Services: You start ZooKeeper (if used) and then the Kafka brokers on each VM.
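The per-broker tailoring of `server.properties` can be sketched as a small Python helper. This is an illustration for a ZooKeeper-based cluster only: the hostnames and paths are made up, `render_server_properties` is a hypothetical helper, and the `PLAINTEXT` listener is used purely for brevity. A KRaft cluster would use settings such as `node.id`, `process.roles`, and `controller.quorum.voters` instead of `broker.id` and `zookeeper.connect`.

```python
def render_server_properties(
    broker_id: int,
    host: str,
    zookeeper_connect: str,
    log_dirs: list,
    port: int = 9092,
) -> str:
    """Render the broker-specific part of server.properties (ZooKeeper mode)."""
    props = {
        # Must be unique for every broker in the cluster.
        "broker.id": str(broker_id),
        # Address the broker binds to on the VM.
        "listeners": f"PLAINTEXT://0.0.0.0:{port}",
        # Address clients and other brokers are told to connect to.
        "advertised.listeners": f"PLAINTEXT://{host}:{port}",
        # One or more data directories, comma-separated.
        "log.dirs": ",".join(log_dirs),
        "zookeeper.connect": zookeeper_connect,
    }
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"


if __name__ == "__main__":
    # Hypothetical hosts and paths, for illustration only.
    zk = "zk-1.example.internal:2181,zk-2.example.internal:2181,zk-3.example.internal:2181"
    for broker_id in (1, 2, 3):
        text = render_server_properties(
            broker_id,
            host=f"kafka-{broker_id}.example.internal",
            zookeeper_connect=zk,
            log_dirs=["/data/kafka-1", "/data/kafka-2"],
        )
        print(f"# broker {broker_id}\n{text}")
```

Generating the file from a single template is one simple way to keep the settings that must differ (`broker.id`, `advertised.listeners`) separate from the settings that must match across brokers.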

Resource Management & Scaling

- CPU & Memory: Kafka brokers benefit from multi-core CPUs and sufficient RAM, especially for page cache, which is crucial for performance. A common recommendation is at least 32GB of RAM, but 64GB or more isn't uncommon for heavy workloads.
- Disk: Fast, reliable disks are essential. SSDs are preferred. You can use RAID 10 for a balance of performance and redundancy, or a "Just a Bunch Of Disks" (JBOD) setup where Kafka manages data distribution across multiple directories.
- Network: A high-speed, low-latency network is critical.
- Scaling: Scaling on VMs is often a manual process. To add capacity, you'd provision new VMs, configure Kafka, and then potentially rebalance partitions across the expanded cluster.
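The rebalancing step after adding brokers can be illustrated with a short Python sketch that builds a reassignment plan in the JSON shape accepted by `kafka-reassign-partitions.sh`. The round-robin placement here is a deliberately naive assumption: it ignores where replicas currently live and so may move more data than necessary. `plan_reassignment` is a hypothetical helper, not part of Kafka.

```python
import json


def plan_reassignment(
    topic: str,
    num_partitions: int,
    broker_ids: list,
    replication_factor: int,
) -> dict:
    """Spread partition replicas round-robin over the given brokers."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor exceeds number of brokers")
    partitions = []
    for partition in range(num_partitions):
        # Consecutive brokers (wrapping around) hold this partition's replicas;
        # the first broker in the list is the preferred leader.
        replicas = [
            broker_ids[(partition + r) % len(broker_ids)]
            for r in range(replication_factor)
        ]
        partitions.append(
            {"topic": topic, "partition": partition, "replicas": replicas}
        )
    return {"version": 1, "partitions": partitions}


if __name__ == "__main__":
    # Cluster grown from brokers 1-3 to brokers 1-4 (illustrative IDs).
    plan = plan_reassignment("order_updates", 6, [1, 2, 3, 4], 3)
    print(json.dumps(plan, indent=2))
```

The printed JSON could be saved to a file and passed to `kafka-reassign-partitions.sh` with `--reassignment-json-file` and `--execute`. In practice, the tool's own `--generate` mode or a dedicated balancer is usually a better starting point than a hand-rolled plan.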

Operational Management

- Monitoring: You'll need to set up monitoring for Kafka metrics (broker health, topic/partition status, throughput, latency) and system metrics (CPU, memory, disk, network) using tools like JMX exporters feeding into systems like Prometheus and Grafana.
- Maintenance: Tasks like rolling restarts for configuration changes or upgrades, log compaction management, and partition rebalancing are typically manual or scripted operations.
- Troubleshooting: This involves checking Kafka logs, ZooKeeper logs (if applicable), and system-level diagnostics on individual VMs. Common issues include network connectivity problems, disk space exhaustion, and broker failures.
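A scripted rolling restart, as mentioned under Maintenance, usually follows one rule: restart a single broker, then wait for the cluster to be fully replicated again before touching the next one. The Python sketch below captures only that control flow. `restart_broker` and `under_replicated_count` are hypothetical callables you would supply yourself, for example an SSH command and a reader for Kafka's `UnderReplicatedPartitions` metric.

```python
import time
from typing import Callable, Iterable, List


def rolling_restart(
    broker_ids: Iterable[int],
    restart_broker: Callable[[int], None],
    under_replicated_count: Callable[[], int],
    poll_seconds: float = 5.0,
    max_polls: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> List[int]:
    """Restart brokers one at a time, waiting for full replication in between."""
    restarted = []
    for broker_id in broker_ids:
        restart_broker(broker_id)
        # Wait until no partition is under-replicated before moving on,
        # so that at most one broker's replicas are missing at any time.
        for _ in range(max_polls):
            if under_replicated_count() == 0:
                break
            sleep(poll_seconds)
        else:
            raise TimeoutError(
                f"cluster still under-replicated after restarting broker {broker_id}"
            )
        restarted.append(broker_id)
    return restarted
```

Passing the side effects in as callables keeps the sketch independent of any particular tooling. It also makes the loop easy to dry-run before pointing it at a real cluster.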

Pros

- Full Control: You have complete control over the operating system, Kafka configuration, and hardware resources.
- Mature Practices: Many organizations have well-established processes for managing VMs.
- Performance Potential: Direct access to hardware can sometimes offer raw performance advantages if tuned correctly.

Cons

- Manual Effort: Deployment, scaling, and maintenance are often manual and time-consuming.
- Higher Operational Overhead: Managing individual VMs and the Kafka software on them can be complex and resource-intensive.
- Slower Agility: Provisioning new VMs and scaling the cluster can be slow compared to containerized environments.
- Configuration Drift: Ensuring consistent configurations across all VMs can be challenging without robust automation.

Kafka on Containers/Kubernetes: The Modern Approach

Running Kafka on Kubernetes involves packaging Kafka brokers as Docker containers and managing them using Kubernetes. This approach leverages Kubernetes' orchestration capabilities for deployment, scaling, and self-healing.

![Kubernetes Structure [16]](/blog/kafka-in-virtual-machines-vs-kafka-in-containerskubernetes/2.png)

How it Works & Setup

- Containerize Kafka: Kafka brokers are run inside Docker containers. Official or well-maintained Kafka Docker images are typically used. Configuration is often managed via environment variables passed to the containers.
- Kubernetes Resources:
  - StatefulSets: Kafka is a stateful application. Kubernetes StatefulSets are used to manage Kafka brokers, providing stable network identifiers, persistent storage, and ordered, graceful deployment and scaling.
  - Persistent Volumes (PVs): Each Kafka broker pod needs persistent storage for its logs. PVs and PersistentVolumeClaims (PVCs) ensure that data survives pod restarts. Local Persistent Volumes can offer better performance by using local disks on Kubernetes nodes.
  - Services: Kubernetes Services are used to expose Kafka brokers. `ClusterIP` services are used for internal communication within the Kubernetes cluster, while `NodePort`, `LoadBalancer`, or Ingress controllers can expose Kafka to clients outside the cluster. Because Kafka speaks its own TCP protocol rather than HTTP, an Ingress-based setup needs a controller that supports TCP or TLS passthrough.
  - Configuration: ConfigMaps or custom resources managed by operators are used to manage Kafka configurations.
- Operators: Kubernetes Operators significantly simplify running Kafka on Kubernetes. Operators are custom controllers that extend the Kubernetes API to create, configure, and manage instances of complex stateful applications like Kafka. Popular community operators like Strimzi automate tasks such as deployment, configuration management, upgrades, scaling, and even managing topics and users.
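The "stable network identifiers" that StatefulSets provide follow a predictable pattern: pods are named `<statefulset>-<ordinal>`, and each pod gets a DNS record under the governing headless Service. The Python sketch below derives those names. The StatefulSet, Service, and namespace names are made up for illustration, and `cluster.local` is assumed as the cluster domain (the usual default, though it is configurable).

```python
def broker_dns_names(
    statefulset: str,
    headless_service: str,
    namespace: str,
    replicas: int,
    cluster_domain: str = "cluster.local",
) -> list:
    """Return the stable per-pod DNS names of a StatefulSet's pods."""
    return [
        # Pattern: <pod-name>.<headless-service>.<namespace>.svc.<cluster-domain>
        f"{statefulset}-{ordinal}.{headless_service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


def bootstrap_servers(names: list, port: int = 9092) -> str:
    """Join broker addresses into a bootstrap.servers-style string."""
    return ",".join(f"{name}:{port}" for name in names)


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    names = broker_dns_names("kafka", "kafka-headless", "streaming", replicas=3)
    print(bootstrap_servers(names))
```

Because these names survive pod restarts, each broker can advertise its own DNS name to clients inside the cluster, which is what lets a rescheduled broker keep its identity.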

Resource Management & Scaling

- Declarative Configuration: You define your Kafka cluster (number of brokers, resources, configuration) in YAML manifests.
- Automated Scaling: Kubernetes and operators can automate scaling. Horizontal Pod Autoscalers (HPAs) can scale consumer applications, and operators can help scale the Kafka cluster itself, though scaling Kafka brokers often requires careful partition rebalancing.
- Resource Efficiency: Containers are more lightweight than VMs as they share the host OS kernel, leading to better resource utilization.
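To show how an HPA might size a consumer deployment, the sketch below applies the scaling rule documented for the Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`, to consumer lag. Two things here are assumptions: that per-replica lag is available to the autoscaler as a custom or external metric (that pipeline is not shown), and that capping at the partition count is done by you, since consumers beyond the number of partitions in a group would sit idle. The real HPA also applies a tolerance and stabilization window that this sketch ignores.

```python
import math


def desired_consumer_replicas(
    current_replicas: int,
    current_lag_per_replica: float,
    target_lag_per_replica: float,
    partition_count: int,
    min_replicas: int = 1,
) -> int:
    """Apply the HPA ratio rule to consumer lag, capped by partition count."""
    if target_lag_per_replica <= 0:
        raise ValueError("target lag per replica must be positive")
    # Core HPA rule: scale proportionally to how far the metric is from target.
    desired = math.ceil(
        current_replicas * current_lag_per_replica / target_lag_per_replica
    )
    # Extra consumers beyond one per partition would receive no work.
    return max(min_replicas, min(desired, partition_count))


if __name__ == "__main__":
    # 3 replicas, each 2000 records behind, aiming for 1000 per replica.
    print(desired_consumer_replicas(3, 2000, 1000, partition_count=12))  # 6
```

The partition cap is the main reason consumer autoscaling and topic design need to be planned together: a topic with few partitions limits how far any autoscaler can usefully scale out.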

Operational Management

- Simplified Operations: Operators automate many routine tasks, such as rolling updates, broker configuration changes, and managing security (TLS certificates, user credentials).
- Monitoring: The Kubernetes ecosystem offers rich monitoring tools like Prometheus and Grafana. Operators often expose Kafka metrics in a Prometheus-compatible format.
- Self-Healing: Kubernetes can automatically restart failed Kafka pods, contributing to higher availability.
- Troubleshooting: Involves inspecting pod logs (`kubectl logs`), Kubernetes events (`kubectl describe pod`), and operator-specific diagnostics. Issues can arise from Kubernetes networking, storage, or the Kafka application itself.

Pros

- Scalability & Elasticity: Easier and faster to scale your Kafka cluster up or down.
- Improved Resource Efficiency: Containers share resources, leading to lower overhead per broker compared to VMs.
- Deployment Velocity & Consistency: Standardized container images and declarative configurations ensure consistent deployments.
- Resilience: Kubernetes' self-healing capabilities and operator logic enhance fault tolerance.
- Operational Automation: Operators significantly reduce the manual effort required for managing Kafka.

Cons

- Kubernetes Complexity: Managing Kubernetes itself has a learning curve and operational overhead.
- Stateful Application Challenges: Kafka's stateful nature can still present challenges in a dynamic container environment (e.g., page cache behavior when pods are rescheduled, storage management complexities).
- Network Overhead: Kubernetes networking adds layers of abstraction that can potentially introduce latency, though this is often manageable.
- Storage Intricacies: Configuring and managing persistent storage for Kafka in Kubernetes requires careful planning.

Side-by-Side Comparison: VMs vs. Kubernetes

| Feature | Kafka on VMs | Kafka on Containers/Kubernetes |
|---|---|---|
| Deployment Speed | Slower, manual provisioning | Faster, automated with containers & orchestration |
| Resource Efficiency | Lower (OS overhead per VM) | Higher (shared OS kernel) |
| Scalability | Manual, slower | Automated/Semi-automated, faster |
| Management Overhead | High for both Kafka specifics and VM management | Lower for Kafka (with Operators), plus K8s management |
| Operational Tasks | Mostly manual or scripted | Largely automated by Kubernetes & Operators |
| Fault Tolerance | Relies on Kafka's replication, manual recovery | Kafka replication + K8s self-healing, Operator recovery |
| Control | Full control over OS and environment | Less direct OS control, abstraction via K8s |
| Complexity | Simpler underlying infrastructure (VMs) | Higher with Kubernetes platform itself |
| Portability | Tied to VM image/environment | Highly portable across K8s clusters/clouds |
| Cost | Can be higher due to less efficient resource use | Potentially lower due to efficiency, but adds K8s platform and network costs |
| Performance | Potentially high with direct hardware access | Good, but network/storage abstraction can add overhead |

Best Practices

For Kafka on VMs

- Sufficient Resources: Allocate ample RAM (for page cache), fast disks (SSDs), and multi-core CPUs.
- OS Tuning: Increase file descriptor limits and `vm.max_map_count`.
- Separate Disks: Use dedicated disks for Kafka data logs, separate from OS and application logs.
- Monitor Extensively: Track broker health, JMX metrics, system resources, and ZooKeeper (if used).
- Automate: Script routine tasks like deployments, restarts, and backups.
- Security: Implement network segmentation, encryption (TLS/SSL), authentication (SASL), and authorization (ACLs).
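The OS tuning item lends itself to a quick preflight check. The Python sketch below compares the open-file limit and `vm.max_map_count` against thresholds. The threshold values are illustrative assumptions rather than official requirements, so adjust them to your partition counts. `read_current_limits` assumes a Linux host, since it reads `/proc` and uses the Unix-only `resource` module.

```python
import sys


def check_os_limits(
    nofile_soft: int,
    max_map_count: int,
    min_nofile: int = 100_000,      # assumed threshold, tune for your cluster
    min_map_count: int = 262_144,   # assumed threshold, tune for your cluster
) -> list:
    """Return human-readable problems; an empty list means the checks pass."""
    problems = []
    if nofile_soft < min_nofile:
        problems.append(
            f"open file limit {nofile_soft} is below {min_nofile}"
        )
    if max_map_count < min_map_count:
        problems.append(
            f"vm.max_map_count {max_map_count} is below {min_map_count}"
        )
    return problems


def read_current_limits() -> tuple:
    """Read the current process's limits (Linux only)."""
    import resource  # Unix-only module, imported lazily

    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft == resource.RLIM_INFINITY:
        soft = sys.maxsize  # treat "unlimited" as a very large number
    with open("/proc/sys/vm/max_map_count") as handle:
        max_map_count = int(handle.read().strip())
    return soft, max_map_count


if __name__ == "__main__":
    for problem in check_os_limits(*read_current_limits()):
        print("WARNING:", problem)
```

Note that the limits reported are those of the process running the script. To be meaningful, the check should run as the same user and under the same service manager settings as the Kafka broker.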

For Kafka on Containers/Kubernetes

- Use an Operator: Strongly consider using a Kubernetes Operator like Strimzi to manage Kafka.
- Understand StatefulSets: Know how StatefulSets manage your Kafka brokers.
- Proper Storage: Use appropriate Persistent Volume types (e.g., high-performance SSDs, Local PVs for low latency) and configure `storageClassName`.
- Network Configuration: Plan your Kafka exposure carefully (internal vs. external access) and secure it using Network Policies.
- Resource Requests/Limits: Set appropriate CPU and memory requests and limits for Kafka pods.
- Monitoring: Leverage Prometheus and Grafana, often integrated by operators.
- Security: Use Kubernetes Secrets for sensitive data, Pod Security Standards and security contexts (PodSecurityPolicy was removed in Kubernetes 1.25), Network Policies, and Kafka's built-in security features.
- KRaft Mode: Prefer KRaft mode for new deployments to simplify architecture by removing the ZooKeeper dependency.

Common Issues and Considerations

VM-based Deployments

- Manual Scaling Bottlenecks: Responding to load changes can be slow.
- Configuration Drift: Maintaining consistency across brokers can be hard.
- Disk Performance: Inadequate disk I/O is a common performance killer.
- ZooKeeper Management: If used, ZooKeeper is another distributed system to manage and troubleshoot.

Kubernetes-based Deployments

- Page Cache Warm-up: When a Kafka pod is rescheduled to a new node, the page cache on that node is cold, potentially impacting performance until it's warmed up.
- Network Complexity: Debugging network issues within Kubernetes can be challenging. Cross-AZ traffic for Kafka replication can also lead to higher costs.
- Storage Reliability & Performance: Misconfigured or slow persistent storage can cripple Kafka.
- Upgrade Complexity: While operators help, upgrading Kafka or the operator itself still requires careful planning and execution.
- Resource Contention: If resource requests/limits are not set correctly, Kafka pods might compete with other workloads.
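The cross-AZ cost concern can be sized with back-of-the-envelope arithmetic. The Python sketch below rests on simplifying assumptions that should be checked against your own topology: every replica of a partition sits in a different zone, partition leaders and clients are spread evenly across zones, and consumers always fetch from the leader. `estimate_cross_az_gb` is a hypothetical helper, and the price per GB is left as an input because it varies by provider.

```python
def estimate_cross_az_gb(
    produced_gb: float,
    replication_factor: int,
    zones: int,
    consumer_groups: int = 1,
) -> dict:
    """Estimate how many GB cross availability-zone boundaries."""
    if not 1 <= replication_factor <= zones:
        raise ValueError("this sketch assumes 1 <= replication factor <= zones")
    # Each follower lives in another zone, so every byte is copied RF - 1 times.
    replication = produced_gb * (replication_factor - 1)
    # With evenly spread leaders, a client is in the leader's zone 1/zones of
    # the time, so the remaining (zones - 1)/zones of its traffic crosses zones.
    producer = produced_gb * (zones - 1) / zones
    consumer = produced_gb * consumer_groups * (zones - 1) / zones
    return {
        "replication": replication,
        "producer": producer,
        "consumer": consumer,
        "total": replication + producer + consumer,
    }


def cross_az_cost(total_gb: float, price_per_gb: float) -> float:
    """Multiply the cross-zone volume by a provider-specific price."""
    return total_gb * price_per_gb


if __name__ == "__main__":
    estimate = estimate_cross_az_gb(900, replication_factor=3, zones=3)
    print(estimate)
```

Under these assumptions, 900 GB produced into a three-zone cluster with replication factor 3 generates 1,800 GB of cross-zone replication traffic alone, twice the volume actually produced. This is why replication usually dominates the cross-AZ bill.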

Conclusion: Which Approach is Right for You?

The choice between running Kafka on VMs or Kubernetes depends on your team's expertise, organizational maturity, and specific requirements.

- Choose VMs if:
  - Your team has strong traditional infrastructure and VM management skills but limited Kubernetes experience.
  - You require absolute control over the OS and hardware environment.
  - Your Kafka deployment is relatively static with predictable scaling needs.
  - The overhead of managing a Kubernetes cluster outweighs the benefits for your use case.
- Choose Containers/Kubernetes if:
  - Your organization has adopted Kubernetes as a standard platform and has the requisite expertise.
  - You need rapid scalability, elasticity, and deployment agility.
  - You want to leverage automation for operational tasks (deployments, upgrades, self-healing) via operators.
  - You aim for better resource utilization and standardized deployments across environments.
  - You are building a cloud-native streaming platform.

Many organizations are migrating Kafka workloads to Kubernetes to gain agility and operational efficiency, especially with the maturity of Kafka operators. However, it's crucial to understand the complexities involved and invest in the necessary Kubernetes skills. Regardless of the path, a well-architected and properly managed Kafka deployment is key to successful real-time data streaming.
