Introduction

Apache Kafka has become the backbone of modern data infrastructure for countless organizations, powering everything from real-time analytics to critical microservices communication. As systems evolve, the need to migrate a Kafka cluster—whether to new hardware, a different data center, a new version, or a managed cloud service—is not a matter of if, but when.

A Kafka migration is a significant undertaking, fraught with risks of downtime, data loss, and performance degradation. Choosing the right strategy is the single most important factor in determining its success. This blog post explores the two primary approaches to Kafka migration: the high-risk, high-reward "Big Bang" Cutover and the complex but safer Incremental Phased Migration. We will delve into their concepts, workflows, pros and cons, and provide a framework to help you decide which path is right for your organization.

The "Big Bang" Cutover Approach

The "Big Bang" or "lift-and-shift" approach is the most straightforward migration strategy. As the name implies, it involves a complete and immediate switch from the old Kafka cluster (the source) to the new one (the target) in a single, planned event.

How It Works

A Big Bang migration is executed within a predefined, and often lengthy, maintenance window. The core idea is to stop all data flow, move the data, and then redirect all producers and consumers to the new cluster before resuming operations.

A typical workflow includes:

Pre-Migration & Planning: The new target cluster is provisioned and configured to mirror the source environment’s topics, partitions, and access controls. This phase involves extensive testing of the new cluster to ensure it can handle the production load. A clear rollback plan must be established.

The Cutover Event (Downtime Begins):

Stop Producers: All client applications that write data to the source cluster are stopped. This is the beginning of the service outage.

Ensure Data Drainage: Consumers are allowed to continue reading from the source cluster until they have processed all buffered and in-flight messages. This is crucial to prevent data loss.

Data Migration: Any remaining data on the source cluster that needs to be preserved is replicated to the target cluster. This can be done using snapshot tools or data replication utilities.

Reconfigure and Redirect: All producers and consumers are reconfigured to point to the new target cluster. This might involve changing bootstrap server URLs in application configs and restarting the services.

Validation: A series of tests are run to verify that producers can write and consumers can read from the new cluster successfully.

Post-Migration (Downtime Ends): Once the new cluster is validated as fully operational, normal service resumes. The old cluster is kept online temporarily as part of the rollback plan but does not receive any new traffic.

Pros

Simplicity: The logic is straightforward—stop, move, and restart. There is no need to manage two active clusters simultaneously.

Shorter Project Duration: The migration event itself is condensed, and the entire project timeline from planning to completion is generally shorter than a phased approach.

Clean Cutover: Avoids the complexity of data synchronization issues or routing logic that can arise when running two systems in parallel.

Cons

High Risk: The all-or-nothing nature means that any failure during the cutover can lead to a catastrophic and lengthy outage.

Significant Downtime: Requires a full service outage, which is unacceptable for most business-critical applications with strict SLAs.

Difficult Rollback: Reverting to the old cluster is often a complex and stressful process, especially if new data has been written to the target cluster.

When to Choose a Big Bang Migration

This approach is best suited for:

Non-critical workloads where significant downtime is acceptable.

Smaller, less complex clusters with a manageable number of topics and clients.

Development or testing environments where service availability is not a primary concern.

Organizations that have wide and flexible maintenance windows.

The Incremental Phased Migration Approach

In stark contrast to the Big Bang, an incremental or phased migration is a gradual, controlled process that moves producers, consumers, and data to the new cluster piece by piece, often with no discernible downtime for end-users. This approach prioritizes safety and service availability over speed.

How It Works

An incremental migration involves running the source and target clusters in parallel for a period. Data is replicated between them, and clients are migrated gradually. Several well-established patterns can be used to achieve this.

Key Patterns for Incremental Migration

Dual Writing (or Shadowing): This is one of the most common patterns. Producer applications are modified to write to both the source and target clusters simultaneously. This ensures the new cluster is receiving real-time data and is kept in sync with the old one. Once you have confidence in the stability and performance of the new cluster, you can begin migrating consumers. After all consumers are on the new cluster, the write to the old cluster can be decommissioned [1].

Data Mirroring & Replication: Instead of modifying producers, a dedicated replication tool is used to mirror data from the source cluster to the target cluster. Apache Kafka’s own MirrorMaker 2 is a popular open-source tool for this purpose. It continuously copies topic data and consumer group offsets between clusters [2]. This pattern is powerful because it is transparent to producer applications, requiring no code changes on their side.

The Strangler Fig Pattern: This pattern, named after the vine that gradually strangles its host tree, is a powerful software architecture concept that applies well to Kafka migrations [3]. In this model:

The new Kafka cluster is set up as the "facade" or entry point.

Initially, it may simply route traffic back to the old cluster or use a mirroring tool to pull data from it.

Over time, new topics and applications are built on the new cluster directly.

Existing applications are gradually migrated over, one by one. Eventually, the old cluster is "strangled" of all its traffic and can be safely decommissioned.

Migrating Consumers

Consumer migration is often the most complex part of a phased rollout. The strategy depends heavily on whether the consuming application can tolerate duplicate messages or missed messages [1].

For applications that can handle duplicates: You can run two instances of the consumer service, one reading from the old cluster and one from the new, and migrate traffic at a load balancer.

For applications that cannot miss data: The safest approach is to stop the consumer, wait for the replication tool to copy its last committed offset to the new cluster, and then restart the consumer pointing to the new cluster.

Pros

Minimal to Zero Downtime: The primary advantage. Migrations can be performed during business hours without impacting service availability.

Reduced Risk: A gradual rollout allows for continuous testing and validation. Issues can be detected and addressed in a small part of the system without a full-scale failure.

Easier Rollback: Since the old cluster is still fully operational during the migration, rolling back a single application or a group of clients is a much simpler process.

Cons

Increased Complexity: Managing two parallel production systems, data replication, and client routing is a significant architectural challenge.

Longer Project Duration: The entire migration process, from start to finish, can span weeks or even months.

Potential for Data Inconsistencies: If data replication is not monitored carefully, it can lead to temporary data discrepancies between the two clusters.

When to Choose an Incremental Migration

This approach is the preferred choice for:

Mission-critical Kafka clusters with stringent uptime requirements (e.g., 99.99% SLA).

Large, complex deployments with hundreds of topics and client applications.

Migrations where the team wants to de-risk the process as much as possible.

Scenarios where application code changes (for dual writing) are feasible.

Side-by-Side Comparison

| Feature | “Big Bang” Cutover | Incremental Phased Migration |

|---|---|---|

| Downtime | High (hours to days) | Minimal to Zero |

| Risk | High (single point of failure) | Low (isolated failures, easier rollback) |

| Complexity | Low (conceptually simple) | High (requires parallel run, data sync) |

| Project Duration | Short | Long |

| Cost | Lower (less operational overhead during migration) | Higher (requires parallel infrastructure and more engineering effort) |

| Rollback Feasibility | Difficult and high-stress | Relatively simple and low-risk |

Choosing the Right Approach: A Decision Framework

The choice between a Big Bang and a phased migration is not purely technical; it’s a business decision that must be guided by your specific context. Ask your team the following questions to build a decision framework:

What is our business tolerance for downtime?

- This is the most critical question. If your service has a strict Service Level Agreement (SLA) and downtime translates directly to revenue loss or customer dissatisfaction, an incremental approach is almost always necessary [4].

How large and complex is our Kafka ecosystem?

- How many topics, partitions, producers, and consumers are there? A small cluster with a handful of clients is a much better candidate for a Big Bang than a massive, multi-tenant cluster that serves the entire organization [5].

What is the expertise and availability of our engineering team?

- A phased migration requires significant expertise in Kafka internals, replication tools, and distributed systems architecture. Does your team have the skills and bandwidth to manage this complexity over a prolonged period?

Can our client applications be easily modified?

- If you are considering a dual-writing strategy, you need to assess the feasibility of changing and redeploying all your producer applications. If producers are legacy systems or owned by other teams, a mirroring approach might be more practical [1].

What is the purpose of the migration?

- A simple version upgrade might be handled differently than a migration from a self-hosted cluster to a fully managed cloud service. The latter often provides tools and support that can simplify a phased migration [6].

Real-World Lessons

Examining past migrations provides invaluable insights. A large technology company migrating its observability platform found that a phased approach using dual writes was essential for maintaining its strict SLAs. Their key takeaway was the importance of building custom verification tools to ensure data fidelity between the old and new clusters in real-time.

In another case, an educational technology firm migrating to a managed service chose a phased migration using a replication tool. This allowed them to avoid any changes to their producer applications, significantly reducing the engineering effort and risk. Their primary lesson was to over-provision the replication infrastructure to ensure it could keep up with production traffic spikes, preventing lag between the source and target clusters [7].

Conclusion

Choosing a Kafka migration strategy is a balancing act between risk, complexity, and business continuity. The Big Bang approach offers speed and simplicity but comes at the cost of significant downtime and high risk, making it suitable only for non-critical systems. The Incremental Phased approach is the industry standard for critical, large-scale Kafka clusters, offering a path to zero-downtime migration by prioritizing safety and control, albeit with higher complexity and a longer timeline.

Ultimately, the right choice depends on a thorough assessment of your business requirements, technical landscape, and team capabilities. By carefully considering the trade-offs and asking the right questions, you can architect a migration plan that is not only successful but also strengthens your data infrastructure for the future.

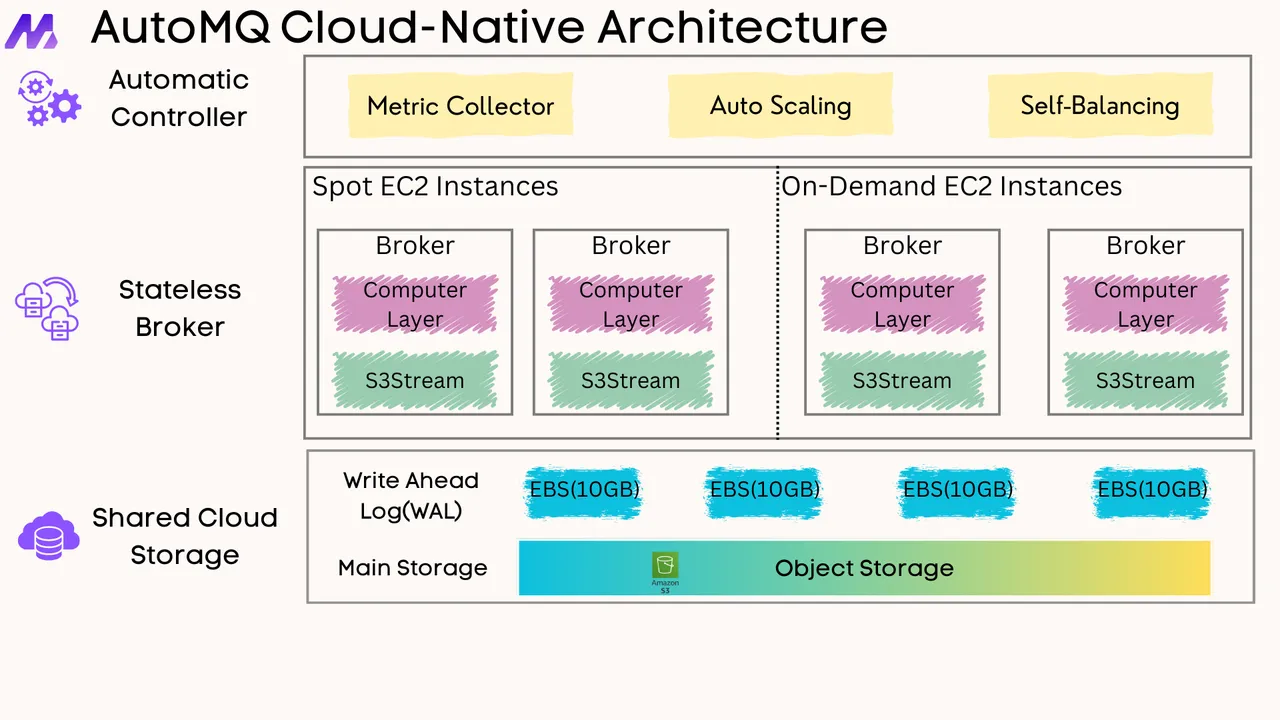

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on GitHub. Big companies worldwide are using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)