Kafka has become the central nervous system for many organizations, handling mission-critical data and serving as a single source of truth. With such importance comes the responsibility to properly secure the platform. This blog explores the essential concepts of Kafka security, configuration approaches, and best practices to help you implement a robust security framework.

Understanding Kafka Security Fundamentals

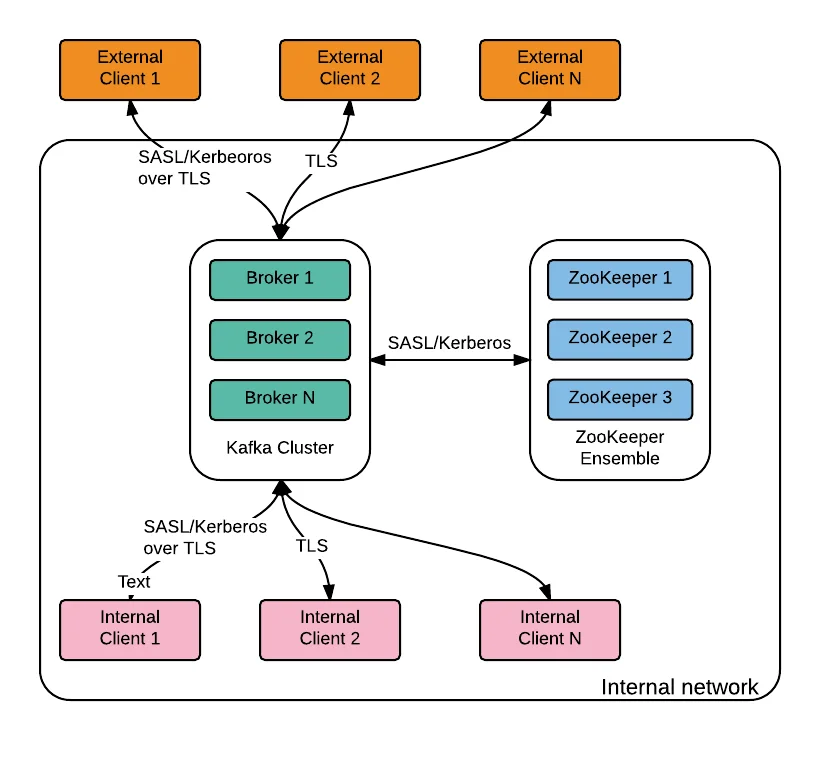

Kafka security encompasses multiple interconnected layers that work together to ensure data protection. The key components include authentication, authorization, encryption, and auditing. Prior to version 0.9, Kafka lacked built-in security features, but modern versions offer comprehensive security capabilities that address the needs of enterprise deployments.

Security Components Overview

Security in Kafka consists of three fundamental pillars, each addressing different aspects of data protection:

Authentication: Verifies the identity of clients (producers and consumers) and brokers to ensure only legitimate entities can connect to the cluster. Kafka uses the concept of KafkaPrincipal to represent the identity of users or services interacting with the cluster. Even when authentication is not enabled, Kafka associates the principal "ANONYMOUS" with requests.

Authorization: Determines what actions authenticated entities can perform on Kafka resources (topics, consumer groups, etc.). This prevents unauthorized access to sensitive data and operations.

Encryption: Protects data confidentiality during transmission between clients and brokers (data-in-transit) and when stored on disk (data-at-rest).
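To make the idea of a principal more concrete, here is a minimal Java sketch of how a string such as User:team1 splits into a type and a name. The `Principal` record and `parse` helper are hypothetical stand-ins written for this post, not Kafka's own `KafkaPrincipal` class. The only detail taken from Kafka is that unauthenticated requests are attributed to User:ANONYMOUS.

```java
final class PrincipalSketch {
    /** Hypothetical stand-in for a Kafka principal: a type such as "User" plus a name. */
    record Principal(String type, String name) {
        @Override
        public String toString() {
            return type + ":" + name;
        }
    }

    /** The identity Kafka attaches to requests when authentication is disabled. */
    static final Principal ANONYMOUS = new Principal("User", "ANONYMOUS");

    /** Parses "Type:name"; a missing or blank value is treated as unauthenticated. */
    static Principal parse(String text) {
        if (text == null || text.isBlank()) {
            return ANONYMOUS;
        }
        int sep = text.indexOf(':');
        if (sep <= 0 || sep == text.length() - 1) {
            throw new IllegalArgumentException("Expected Type:name but got: " + text);
        }
        return new Principal(text.substring(0, sep), text.substring(sep + 1));
    }

    public static void main(String[] args) {
        System.out.println(parse("User:team1")); // prints User:team1
        System.out.println(parse(""));           // prints User:ANONYMOUS
    }
}
```

The same Type:name form is what ACL commands and the super.users setting expect, which is why it is worth recognizing early.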

According to Aklivity, these components are crucial because "Kafka often serves as a central hub for data within organizations, encompassing data from various departments and applications. By default, Kafka operates in a permissive manner, allowing unrestricted access between brokers and external services".

Authentication

Authentication is the first line of defense in Kafka security. It ensures that only known and verified clients can connect to your Kafka cluster.

Supported Authentication Methods

Kafka supports several authentication protocols, each with its own strengths and use cases:

SSL/TLS Client Authentication: Uses certificates to verify client identity. Clients present their certificates to brokers, which validate them against a trusted certificate authority.

SASL (Simple Authentication and Security Layer): A framework supporting various authentication mechanisms:

- SASL/PLAIN: Simple username/password authentication (requires SSL/TLS for secure transmission)
- SASL/SCRAM: More secure password-based mechanism with salted challenge-response
- SASL/GSSAPI: Integrates with Kerberos authentication
- SASL/OAUTHBEARER: Uses OAuth tokens for authentication
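On the client side, each of these mechanisms is selected through `sasl.mechanism` and a matching JAAS login module. The sketch below, which uses only `java.util.Properties`, shows one way to assemble those settings for the two password-based mechanisms. The class and method names are made up for illustration, the credentials are placeholders, and the code assumes the username and password contain no double quotes.

```java
import java.util.Properties;

final class SaslClientSketch {
    /** Maps a SASL mechanism name to the JAAS login module commonly used with it. */
    static String loginModuleFor(String mechanism) {
        switch (mechanism) {
            case "PLAIN":
                return "org.apache.kafka.common.security.plain.PlainLoginModule";
            case "SCRAM-SHA-256":
            case "SCRAM-SHA-512":
                return "org.apache.kafka.common.security.scram.ScramLoginModule";
            case "OAUTHBEARER":
                return "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule";
            case "GSSAPI":
                return "com.sun.security.auth.module.Krb5LoginModule";
            default:
                throw new IllegalArgumentException("Unknown SASL mechanism: " + mechanism);
        }
    }

    /** Builds client properties for a username/password mechanism (PLAIN or SCRAM). */
    static Properties passwordClient(String mechanism, String user, String password) {
        if (!mechanism.equals("PLAIN") && !mechanism.startsWith("SCRAM-")) {
            throw new IllegalArgumentException(mechanism + " does not use a username/password pair");
        }
        Properties props = new Properties();
        props.setProperty("security.protocol", "SASL_SSL"); // run the SASL exchange over TLS
        props.setProperty("sasl.mechanism", mechanism);
        props.setProperty("sasl.jaas.config", String.format(
                "%s required username=\"%s\" password=\"%s\";",
                loginModuleFor(mechanism), user, password));
        return props;
    }

    public static void main(String[] args) {
        // Placeholder credentials for demonstration only.
        passwordClient("SCRAM-SHA-256", "demo-user", "demo-password")
                .forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

Kerberos and OAuth need extra options (keytabs, token endpoints) that depend on your environment, so the sketch only names their login modules.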

As noted in a Reddit discussion, the best practice is to "look at what the business requirements are and configure Kafka to adhere to them. It supports sasl/scram/gssapi/mtls/etc/etc so pick the one that your organization uses/supports. Each has its pros and cons".

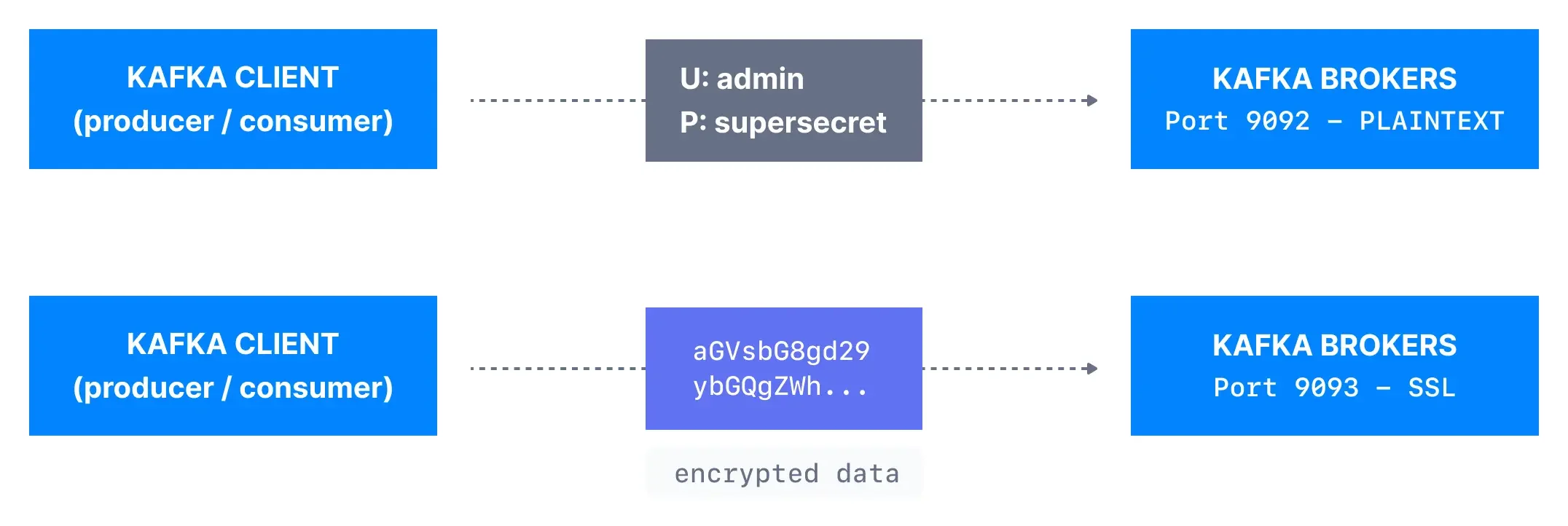

Configuring Listeners and Protocols

Listeners are network endpoints that Kafka brokers use to accept connections. Each listener can be configured with different security protocols.

# Example configuration for multiple listeners with different security protocols

listeners=PLAINTEXT://:9092,SSL://:9093,SASL_SSL://:9094

listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_SSL:SASL_SSL

According to a Reddit explanation, "PLAINTEXT is the name of the socket 'listener' which is using port 9092. It could be any port above 1024. PLAINTEXT is used in all caps for readability and so the listener name easily lines up with the security protocol map, since they will use the same term for crystal clear readability".

The available security protocols are:

- PLAINTEXT: No security (not recommended for production)
- SSL: Encryption using SSL/TLS
- SASL_PLAINTEXT: Authentication without encryption
- SASL_SSL: Authentication with encryption (recommended for production)
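The relationship between listener names and security protocols can be easier to see in code. The following Java sketch parses a `listeners` value and a `listener.security.protocol.map` value and reports which protocol each endpoint ends up with. It is a simplified illustration rather than Kafka's own parsing logic, and the INTERNAL and EXTERNAL listener names are assumptions chosen to show that the name is an arbitrary label.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

final class ListenerMapSketch {
    static final Set<String> PROTOCOLS = Set.of("PLAINTEXT", "SSL", "SASL_PLAINTEXT", "SASL_SSL");

    /** Parses "NAME:PROTOCOL,NAME:PROTOCOL" into an ordered name-to-protocol map. */
    static Map<String, String> parseProtocolMap(String value) {
        Map<String, String> byName = new LinkedHashMap<>();
        for (String entry : value.split(",")) {
            String[] parts = entry.trim().split(":", 2);
            if (parts.length != 2 || !PROTOCOLS.contains(parts[1])) {
                throw new IllegalArgumentException("Bad protocol map entry: " + entry);
            }
            byName.put(parts[0], parts[1]);
        }
        return byName;
    }

    /** Resolves each "NAME://host:port" listener to its protocol, keyed by "host:port". */
    static Map<String, String> resolve(String listeners, String protocolMap) {
        Map<String, String> byName = parseProtocolMap(protocolMap);
        Map<String, String> byEndpoint = new LinkedHashMap<>();
        for (String listener : listeners.split(",")) {
            String trimmed = listener.trim();
            int sep = trimmed.indexOf("://");
            if (sep <= 0) {
                throw new IllegalArgumentException("Bad listener: " + listener);
            }
            String name = trimmed.substring(0, sep);
            String protocol = byName.get(name);
            if (protocol == null) {
                throw new IllegalArgumentException("No protocol mapped for listener " + name);
            }
            byEndpoint.put(trimmed.substring(sep + 3), protocol);
        }
        return byEndpoint;
    }

    public static void main(String[] args) {
        // Listener names are labels; the map decides the actual protocol.
        System.out.println(resolve("INTERNAL://:9092,EXTERNAL://:9094",
                "INTERNAL:SSL,EXTERNAL:SASL_SSL"));
    }
}
```

Using descriptive names like these keeps the purpose of each port obvious, while the map remains the single place that defines how it is secured.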

Authorization

Once clients are authenticated, authorization controls what they can do within the Kafka ecosystem.

Access Control Lists (ACLs)

ACLs define permissions at a granular level, allowing administrators to control read/write access to topics, consumer groups, and other Kafka resources.

For example, to allow a client to read from input topics and write to output topics, you would use commands like:

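# Allow reading from input topics
# (localhost:9092 is a placeholder; point --bootstrap-server at your own broker)

bin/kafka-acls --bootstrap-server localhost:9092 --add --allow-principal User:team1 --operation READ --topic input-topic1

# Allow writing to output topics

bin/kafka-acls --bootstrap-server localhost:9092 --add --allow-principal User:team1 --operation WRITE --topic output-topic1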


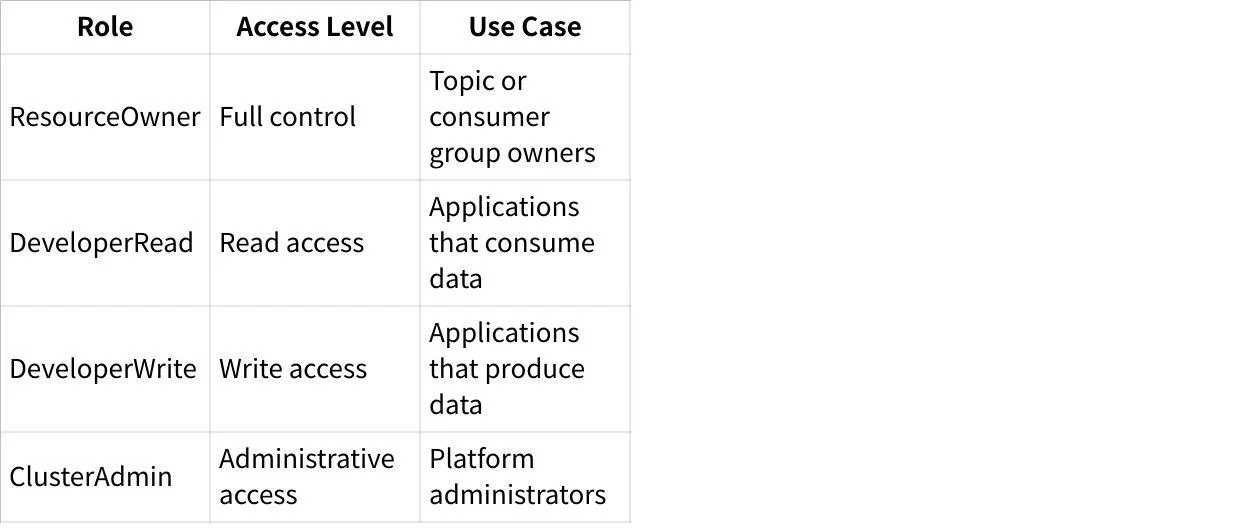

Role-Based Access Control (RBAC)

For more complex environments, especially those using Confluent Platform, Role-Based Access Control provides a more manageable way to handle permissions through predefined roles.

The following table shows common RBAC roles and their purposes:

Super Users

Super users have unlimited access to all resources, regardless of ACLs. This is crucial for administrative operations:

# Setting super users in server.properties

super.users=User:admin,User:operator

As indicated in a Reddit thread discussing secure Kafka setup, "since you deny access if no ACLs are defined, the admin user will have to be whitelisted, you can use wildcards to make life easy".
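The interaction between super users, ACLs, and the deny-by-default setting can be summarized in a few lines of code. The Java sketch below is a deliberately simplified model, not Kafka's authorizer: it only handles allow rules on literal topic names and ignores deny rules, hosts, and other resource types. All class and method names are hypothetical.

```java
import java.util.List;
import java.util.Set;

final class AclCheckSketch {
    /** One allow rule: the principal may perform the operation on the named topic. */
    record Acl(String principal, String operation, String topic) {}

    private final Set<String> superUsers;
    private final List<Acl> acls;
    private final boolean allowEveryoneIfNoAclFound;

    AclCheckSketch(Set<String> superUsers, List<Acl> acls, boolean allowEveryoneIfNoAclFound) {
        this.superUsers = superUsers;
        this.acls = acls;
        this.allowEveryoneIfNoAclFound = allowEveryoneIfNoAclFound;
    }

    boolean isAllowed(String principal, String operation, String topic) {
        if (superUsers.contains(principal)) {
            return true; // super users bypass ACL checks entirely
        }
        boolean topicHasAcls = false;
        for (Acl acl : acls) {
            if (!acl.topic().equals(topic)) {
                continue;
            }
            topicHasAcls = true;
            if (acl.principal().equals(principal) && acl.operation().equals(operation)) {
                return true;
            }
        }
        // The fallback only applies to topics that have no ACLs at all.
        return !topicHasAcls && allowEveryoneIfNoAclFound;
    }

    public static void main(String[] args) {
        AclCheckSketch authz = new AclCheckSketch(
                Set.of("User:admin"),
                List.of(new Acl("User:team1", "READ", "input-topic1"),
                        new Acl("User:team1", "WRITE", "output-topic1")),
                false);
        System.out.println(authz.isAllowed("User:team1", "READ", "input-topic1"));  // true
        System.out.println(authz.isAllowed("User:team1", "WRITE", "input-topic1")); // false
        System.out.println(authz.isAllowed("User:admin", "WRITE", "input-topic1")); // true
    }
}
```

With the fallback disabled, a principal that is neither a super user nor named in an ACL gets nothing, which is the situation the quote above describes.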

Encryption

Encryption protects data from unauthorized viewing, both during transmission and at rest.

Data-in-Transit Encryption

SSL/TLS encryption secures communication between clients and brokers. According to Dattell, "using a communications security layer, like TLS or SSL, will chip away at throughput and performance because encrypting and decrypting data packets requires processing power. However, the performance cost is typically negligible for an optimized Kafka implementation".

A basic SSL client configuration looks like this:

security.protocol=SSL

ssl.truststore.location=/path/to/kafka.client.truststore.jks

ssl.truststore.password=secret

For mutual TLS (mTLS), where clients also authenticate to the server:

security.protocol=SSL

ssl.truststore.location=/path/to/kafka.client.truststore.jks

ssl.truststore.password=secret

ssl.keystore.location=/path/to/kafka.client.keystore.jks

ssl.keystore.password=secret

ssl.key.password=secret

Data-at-Rest Encryption

Kafka itself doesn't provide built-in encryption for data at rest. Instead, you should use disk-level or file system encryption options:

- Transparent Data Encryption (TDE)
- Filesystem-level encryption
- Cloud provider encryption services like AWS KMS or Azure Key Vault

Security Best Practices

Implementing these best practices will help maintain a secure Kafka environment.

Authentication Best Practices

- Use strong authentication methods: Prefer SASL_SSL with SCRAM-SHA-256 or mTLS over simpler methods.
- Avoid PLAINTEXT listeners in production: Always encrypt authentication credentials.
- Rotate credentials regularly: Change passwords and certificates before they expire or if compromised.
- Use secure credential storage: Use secure vaults like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault to securely store and access secrets.
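One practical consequence of the last point is that credentials should reach the client at runtime instead of living in source code or checked-in property files. The Java sketch below reads them from a map of environment variables, which a vault agent or orchestrator could populate. The variable names KAFKA_SASL_USERNAME and KAFKA_SASL_PASSWORD are assumptions made for this example, not a Kafka convention.

```java
import java.util.Map;
import java.util.Properties;

final class EnvCredentialsSketch {
    /** Returns the variable's value or fails fast with a message that names the missing key. */
    static String require(Map<String, String> env, String key) {
        String value = env.get(key);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("Missing required variable " + key);
        }
        return value;
    }

    /** Builds SCRAM client settings from externally supplied credentials. */
    static Properties scramClient(Map<String, String> env) {
        String user = require(env, "KAFKA_SASL_USERNAME");     // hypothetical variable name
        String password = require(env, "KAFKA_SASL_PASSWORD"); // hypothetical variable name
        Properties props = new Properties();
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "SCRAM-SHA-256");
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.scram.ScramLoginModule required"
                        + " username=\"" + user + "\" password=\"" + password + "\";");
        return props;
    }

    public static void main(String[] args) {
        try {
            Properties props = scramClient(System.getenv());
            // Avoid printing the JAAS line: it contains the password.
            System.out.println("Configured mechanism: " + props.getProperty("sasl.mechanism"));
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Passing the environment in as a map keeps the helper easy to test and makes it straightforward to swap in another secret source later.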

Authorization Best Practices

- Follow the principle of least privilege: Grant only the permissions necessary for each client.
- Use prefixed ACLs for applications: For example, use --resource-pattern-type prefixed --topic app-name- to cover all topics with a common prefix.
- Document and review ACLs regularly: Maintain an inventory of who has access to what.
- Set allow.everyone.if.no.acl.found=false: Deny by default and explicitly grant permissions.
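To show what a prefixed ACL actually matches, here is a small Java sketch comparing literal and prefixed resource patterns. It models only the name-matching step, and the `ResourcePattern` record is a hypothetical type written for this post. The wildcard behavior of a literal `*` reflects how Kafka treats that name.

```java
final class PrefixedAclSketch {
    enum PatternType { LITERAL, PREFIXED }

    /** A resource pattern as used in an ACL: a match type plus a name or prefix. */
    record ResourcePattern(PatternType type, String name) {
        boolean matches(String resourceName) {
            if (type == PatternType.PREFIXED) {
                return resourceName.startsWith(name);
            }
            // A literal "*" acts as a wildcard; otherwise the names must be identical.
            return name.equals("*") || name.equals(resourceName);
        }
    }

    public static void main(String[] args) {
        ResourcePattern prefixed = new ResourcePattern(PatternType.PREFIXED, "app-name-");
        ResourcePattern literal = new ResourcePattern(PatternType.LITERAL, "app-name-orders");

        System.out.println(prefixed.matches("app-name-orders"));   // true
        System.out.println(prefixed.matches("app-name-payments")); // true
        System.out.println(prefixed.matches("other-app-orders"));  // false
        System.out.println(literal.matches("app-name-payments"));  // false
    }
}
```

A single prefixed rule therefore covers topics the application creates later, as long as they keep the agreed prefix.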

Encryption Best Practices

- Enable TLS 1.2 or higher: Older protocols have known vulnerabilities.
- Use strong cipher suites: Configure secure ciphers and disable weak ones.
- Implement proper certificate management: Monitor expiration dates and have a renewal process.
- Secure private keys: Restrict access to key material and use proper permissions.
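The first recommendation can be checked against the JVM you actually run. The Java sketch below asks the default `SSLContext` which protocols it supports, keeps only TLS 1.2 and TLS 1.3, and turns the result into an `ssl.enabled.protocols` setting. Treat it as an illustrative starting point: the helper names are made up, and cipher suite selection is left out because the right list depends on your compliance requirements.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;
import javax.net.ssl.SSLContext;

final class TlsSettingsSketch {
    static final List<String> ACCEPTED = List.of("TLSv1.2", "TLSv1.3");

    /** Keeps only the protocol versions considered acceptable, preserving input order. */
    static List<String> acceptedProtocols(String[] supported) {
        List<String> kept = new ArrayList<>();
        for (String protocol : supported) {
            if (ACCEPTED.contains(protocol)) {
                kept.add(protocol);
            }
        }
        return kept;
    }

    /** Builds the Kafka setting that restricts which TLS versions may be negotiated. */
    static Properties tlsProperties(List<String> protocols) {
        if (protocols.isEmpty()) {
            throw new IllegalStateException("No TLS 1.2+ protocol available on this JVM");
        }
        Properties props = new Properties();
        props.setProperty("ssl.enabled.protocols", String.join(",", protocols));
        return props;
    }

    public static void main(String[] args) throws Exception {
        String[] supported = SSLContext.getDefault().getSupportedSSLParameters().getProtocols();
        System.out.println(tlsProperties(acceptedProtocols(supported)));
    }
}
```

The output depends on the JVM version and its security configuration, so it is worth running on the same image you deploy.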

Monitoring and Auditing

- Enable audit logging: Track authentication attempts, configuration changes, and resource access.
- Monitor broker metrics: Watch for unusual patterns in connection attempts.
- Implement alerting: As Dattell suggests, "with either machine learning based alerting or threshold based alerting, you can have the system notify you or your team in real-time if abnormal behavior is detected".
- Retain logs appropriately: According to Confluent, you should "retain audit log data for longer than seven days" to meet "requirements for administrative, legal, audit, compliance, or other operational purposes".
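Threshold-based alerting, as mentioned in the list above, can be as simple as counting failed authentications per client within a sliding time window. The Java sketch below illustrates that idea. It assumes failure events arrive in time order from some source you already have (audit logs or broker metrics), and every name in it is hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

final class AuthFailureAlertSketch {
    private final int threshold;
    private final Duration window;
    private final Map<String, Deque<Instant>> failuresByClient = new HashMap<>();

    AuthFailureAlertSketch(int threshold, Duration window) {
        this.threshold = threshold;
        this.window = window;
    }

    /** Records a failure and returns true once the client exceeds the threshold within the window. */
    boolean recordFailure(String client, Instant when) {
        Deque<Instant> recent = failuresByClient.computeIfAbsent(client, key -> new ArrayDeque<>());
        recent.addLast(when);
        Instant cutoff = when.minus(window);
        // Drop failures that have aged out of the window.
        while (!recent.isEmpty() && recent.peekFirst().isBefore(cutoff)) {
            recent.removeFirst();
        }
        return recent.size() > threshold;
    }

    public static void main(String[] args) {
        AuthFailureAlertSketch alerts = new AuthFailureAlertSketch(3, Duration.ofMinutes(1));
        Instant start = Instant.parse("2024-01-01T00:00:00Z");
        for (int i = 0; i < 5; i++) {
            boolean alert = alerts.recordFailure("10.0.0.7", start.plusSeconds(i));
            System.out.println("failure " + (i + 1) + " -> alert=" + alert);
        }
    }
}
```

With a threshold of three, the first three failures stay quiet and the fourth and fifth raise the alert. In practice you would route that signal to your existing alerting system.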

Common Security Configurations

The following example shows a comprehensive broker security configuration with SASL and SSL:

# Broker security configuration

listeners=SASL_SSL://public:9093,SSL://internal:9094

advertised.listeners=SASL_SSL://public.example.com:9093,SSL://internal.example.com:9094

listener.security.protocol.map=SASL_SSL:SASL_SSL,SSL:SSL

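# Authentication

sasl.enabled.mechanisms=SCRAM-SHA-256

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-256

# Inter-broker traffic defaults to PLAINTEXT, which no listener above offers, so select SASL_SSL explicitly

security.inter.broker.protocol=SASL_SSL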

# Authorization

authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer

allow.everyone.if.no.acl.found=false

super.users=User:admin

# SSL configuration

ssl.keystore.location=/var/private/ssl/kafka.server.keystore.jks

ssl.keystore.password=keystore-secret

ssl.key.password=key-secret

ssl.truststore.location=/var/private/ssl/kafka.server.truststore.jks

ssl.truststore.password=truststore-secret

ssl.client.auth=required
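A configuration like this is easy to weaken by accident, so a small automated check can help catch regressions before a broker restarts. The Java sketch below flags three issues discussed in this post: unencrypted listeners, a missing authorizer, and a permissive ACL fallback. It is an illustrative lint with made-up names rather than an official tool, and it assumes `listener.security.protocol.map` is set explicitly.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

final class BrokerConfigLintSketch {
    /** Returns human-readable findings; an empty list means none of these checks fired. */
    static List<String> lint(Properties broker) {
        List<String> findings = new ArrayList<>();
        String protocolMap = broker.getProperty("listener.security.protocol.map", "");
        for (String entry : protocolMap.split(",")) {
            String[] parts = entry.trim().split(":", 2);
            // PLAINTEXT and SASL_PLAINTEXT both send traffic unencrypted.
            if (parts.length == 2 && parts[1].endsWith("PLAINTEXT")) {
                findings.add("Listener " + parts[0] + " uses " + parts[1] + " (no encryption)");
            }
        }
        if (broker.getProperty("authorizer.class.name", "").isBlank()) {
            findings.add("No authorizer.class.name set, so ACLs are not enforced");
        }
        if (Boolean.parseBoolean(broker.getProperty("allow.everyone.if.no.acl.found", "false"))) {
            findings.add("allow.everyone.if.no.acl.found=true opens resources that have no ACLs");
        }
        return findings;
    }

    public static void main(String[] args) throws Exception {
        Properties broker = new Properties();
        broker.load(new StringReader(String.join("\n",
                "listener.security.protocol.map=SASL_SSL:SASL_SSL,SSL:SSL",
                "authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer",
                "allow.everyone.if.no.acl.found=false")));
        System.out.println(lint(broker)); // prints [] for the settings above
    }
}
```

Running a check like this in CI against the rendered server.properties is one way to keep the deployed configuration aligned with the practices above.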

For Java clients connecting to a secure Kafka cluster:

// Imports needed by the snippet below (from the kafka-clients library)

import java.util.Properties;

import org.apache.kafka.clients.CommonClientConfigs;

import org.apache.kafka.common.config.SaslConfigs;

import org.apache.kafka.common.config.SslConfigs;

Properties props = new Properties();

props.put(CommonClientConfigs.BOOTSTRAP_SERVERS_CONFIG, "public.example.com:9093");

props.put(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SASL_SSL");

props.put(SaslConfigs.SASL_MECHANISM, "SCRAM-SHA-256");

props.put(SaslConfigs.SASL_JAAS_CONFIG, "org.apache.kafka.common.security.scram.ScramLoginModule required username=\"user\" password=\"password\";");

props.put(SslConfigs.SSL_TRUSTSTORE_LOCATION_CONFIG, "/path/to/truststore.jks");

props.put(SslConfigs.SSL_TRUSTSTORE_PASSWORD_CONFIG, "truststore-password");

Kafka Security in Multi-Tenant Environments

Multi-tenancy introduces additional security considerations as multiple users or applications share the same Kafka infrastructure.

Tenant Isolation

- Topic Naming Conventions: Establish clear naming patterns that include tenant identifiers.
- Resource Quotas: Prevent resource hogging by setting quotas on throughput and connections.
- Separate Consumer Groups: Ensure each tenant uses distinct consumer group IDs.
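A naming convention only helps if it is enforced. The Java sketch below validates topic and consumer group names against one possible pattern, `<tenant>.<domain>.<name>`, and extracts the tenant from it. The pattern itself is an assumption made for this example, so substitute whatever convention your organization has agreed on.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TenantNamingSketch {
    // Hypothetical convention: three dot-separated segments of lowercase letters, digits, or hyphens.
    private static final Pattern NAME =
            Pattern.compile("([a-z0-9-]+)\\.([a-z0-9-]+)\\.([a-z0-9-]+)");

    /** Returns the tenant segment, or empty if the name does not follow the convention. */
    static Optional<String> tenantOf(String resourceName) {
        Matcher matcher = NAME.matcher(resourceName);
        return matcher.matches() ? Optional.of(matcher.group(1)) : Optional.empty();
    }

    /** True when the topic or group name follows the convention and belongs to the tenant. */
    static boolean ownedBy(String tenant, String resourceName) {
        return tenantOf(resourceName).map(tenant::equals).orElse(false);
    }

    public static void main(String[] args) {
        System.out.println(tenantOf("acme.billing.invoices"));         // Optional[acme]
        System.out.println(tenantOf("invoices"));                      // Optional.empty
        System.out.println(ownedBy("acme", "acme.billing.consumers")); // true
        System.out.println(ownedBy("acme", "globex.billing.invoices")); // false
    }
}
```

Because the tenant is always the leading segment, the same convention pairs naturally with prefixed ACLs such as a rule on the prefix "acme.".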

As noted in Axual's Kafka Compliance Checklist, it's important to "know your topics" and "know your owners" in a multi-tenant environment: "Identifying a topic by name alone can be hard, especially if you haven't standardized topic naming. Including descriptive data on your topics, topic metadata, is extremely helpful".

Security Compliance and Governance

Meeting regulatory requirements often requires specific security controls.

Compliance Considerations

- Data Protection Regulations: GDPR, CCPA, and other privacy laws may require encryption and access controls.
- Industry-Specific Requirements: Financial (PCI DSS), healthcare (HIPAA), and government sectors have unique requirements.
- Audit Capabilities: Maintain comprehensive logs for compliance auditing.

Security Governance

- Security Policies: Establish clear policies for Kafka security configurations.
- Regular Reviews: Periodically assess security settings against evolving threats.
- Change Management: Document security-related changes and approvals.

Conclusion

Kafka security is multifaceted, requiring attention to authentication, authorization, and encryption. By following the concepts and best practices outlined in this guide, you can establish a robust security framework that protects your data while maintaining the performance and reliability that make Kafka valuable.

Remember that security is not a one-time setup but an ongoing process. Regularly review and update your security measures as new threats emerge and as your Kafka deployment evolves. The extra effort invested in properly securing your Kafka environment will pay dividends in protected data, regulatory compliance, and organizational peace of mind.

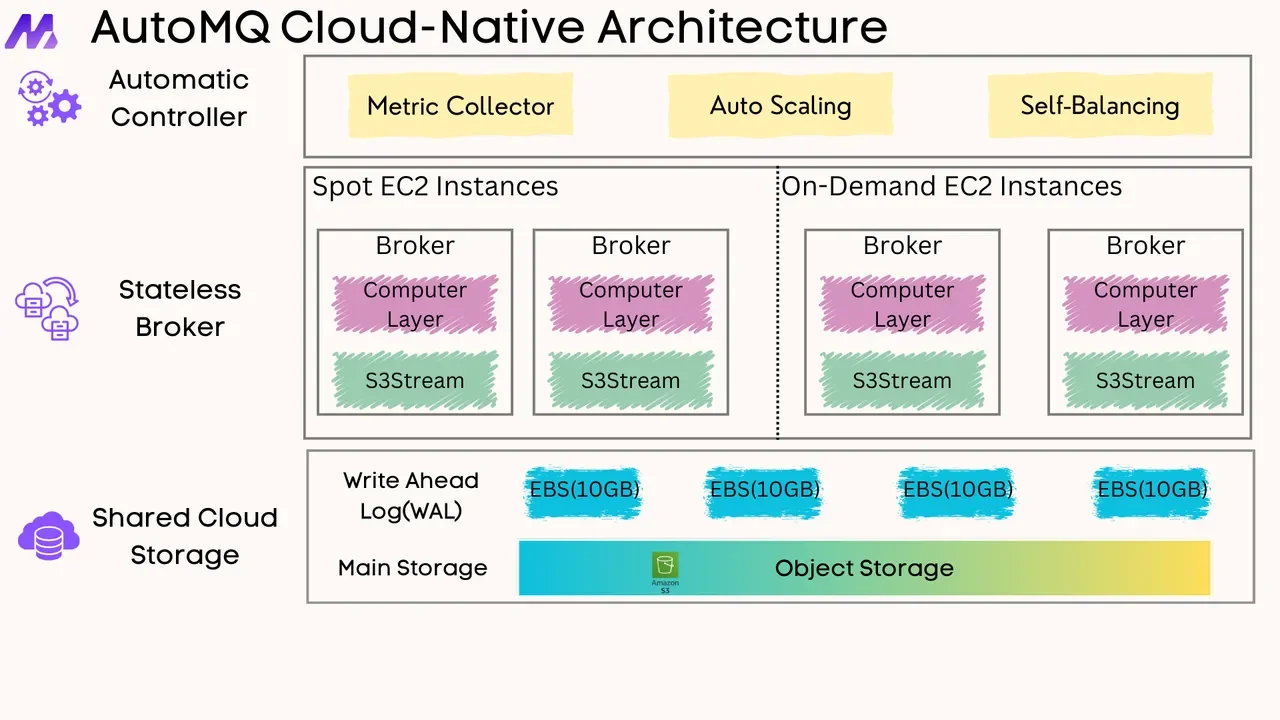
