Introduction

As a senior software engineer, I'm here to guide you through the complexities of building resilient data pipelines. One of the most critical aspects of this is ensuring your Apache Kafka deployment can withstand a catastrophic event like a datacenter failure. Today, we'll delve deep into a powerful, yet demanding, architecture for achieving this: the Kafka Stretch Cluster.

This blog will provide a comprehensive overview of Kafka stretch clusters, exploring their architecture, how they work, their ideal use cases, and the significant trade-offs involved. My goal is to equip you with the knowledge to determine if this advanced architecture is the right fit for your organization's needs.

What is a Kafka Stretch Cluster?

Imagine a single, unified Apache Kafka cluster, but with its brokers and other components physically distributed across multiple data centers. This is the essence of a stretch cluster. It functions as one logical cluster that spans different physical locations, such as multiple availability zones within a cloud region or even geographically distinct data centers.

The primary goal of a stretch cluster is to provide high availability (HA) and disaster recovery (DR) with the promise of a Recovery Point Objective (RPO) of zero and a Recovery Time Objective (RTO) near zero. In simpler terms, this means no data loss and near-instantaneous, automatic failover in the event of a single data center outage. It’s an active-active-active deployment across three locations, providing a robust defense against localized failures.

![Kafka Stretch Cluster Example [4]](/blog/kafka-stretch-clusters-multi-datacenter-architecture/1.png)

How do Stretch Clusters Work?

The magic of a stretch cluster lies in its architecture and in how it uses Kafka's native replication capabilities. It’s not a separate feature you turn on, but rather a specific, disciplined way of deploying and configuring a standard Kafka cluster.

Core Architecture

A typical and highly recommended stretch cluster deployment involves three data centers. This topology is crucial for avoiding "split-brain" scenarios, where a network partition could lead to two parts of the cluster acting independently, causing data inconsistency. With three data centers, the cluster can still form a majority quorum after losing any one of them.

The brokers are distributed across these three data centers. To make this distribution meaningful, you must use Kafka's rack awareness feature. By configuring each broker's broker.rack property with the name of its data center (e.g., dc-a, dc-b, dc-c), you tell Kafka about its physical topology. When a topic is created with a replication factor of three, rack-aware replica assignment places the three replicas of each partition in different data centers, so no single location is a single point of failure for that partition's data.
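To make the effect of rack awareness concrete, here is a minimal sketch of a rack-aware placement. It is a deliberately simplified stand-in, not Kafka's actual assignment algorithm, and the function name `assign_replicas`, the broker IDs, and the partition count are all assumptions made for illustration.

```python
from collections import defaultdict


def assign_replicas(broker_racks, num_partitions, replication_factor):
    """Toy rack-aware placement: every replica of a partition lands in a different rack.

    This is an illustrative simplification, not Kafka's real assignment algorithm.
    """
    by_rack = defaultdict(list)
    for broker_id, rack in sorted(broker_racks.items()):
        by_rack[rack].append(broker_id)
    racks = sorted(by_rack)
    if replication_factor > len(racks):
        raise ValueError("this sketch needs at least one rack per replica")

    assignment = {}
    for p in range(num_partitions):
        replicas = []
        for i in range(replication_factor):
            # Rotate the starting rack per partition so leaders spread across racks.
            rack = racks[(p + i) % len(racks)]
            brokers = by_rack[rack]
            # Rotate through the brokers inside a rack on every full pass over the racks.
            replicas.append(brokers[(p // len(racks)) % len(brokers)])
        assignment[p] = replicas
    return assignment


# Hypothetical six-broker cluster; the values mirror each broker's broker.rack setting.
broker_racks = {1: "dc-a", 2: "dc-a", 3: "dc-b", 4: "dc-b", 5: "dc-c", 6: "dc-c"}
assignment = assign_replicas(broker_racks, num_partitions=6, replication_factor=3)
for partition, replicas in assignment.items():
    print(partition, replicas, [broker_racks[b] for b in replicas])
```

The first replica in each list is the preferred leader, so rotating the starting rack also spreads leadership across the three data centers.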

The consensus layer, which is the brain of the cluster responsible for maintaining state, must also be stretched.

- With Apache ZooKeeper: A ZooKeeper "ensemble," typically consisting of three or five nodes, is deployed with nodes in each of the data centers (e.g., one or two nodes per DC). This ensures the ensemble can maintain a quorum (a majority of nodes) and continue to elect a leader even if one data center is completely lost.

- With KRaft (Kafka Raft): In modern Kafka deployments, the ZooKeeper dependency is removed in favor of the built-in KRaft consensus protocol. The controller nodes, which run the Raft protocol, are distributed across the data centers, similar to the ZooKeeper ensemble. This simplifies the operational overhead by removing a separate distributed system, but the core principle of maintaining a quorum across physical locations remains the same.
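The quorum arithmetic behind both options is the same, and a short sketch shows why three locations matter. The helper names and the node layouts below are assumptions chosen for illustration; the only rule encoded is that a strict majority of the original voters must survive.

```python
def has_quorum(nodes_per_dc, failed_dc):
    """True if the voters outside failed_dc still form a strict majority."""
    total = sum(nodes_per_dc.values())
    surviving = total - nodes_per_dc[failed_dc]
    return surviving > total // 2


def survives_any_single_dc_loss(nodes_per_dc):
    """True if the consensus layer keeps quorum no matter which one DC is lost."""
    return all(has_quorum(nodes_per_dc, dc) for dc in nodes_per_dc)


three_dcs = {"dc-a": 1, "dc-b": 1, "dc-c": 1}   # 3 voters, one per DC
five_nodes = {"dc-a": 2, "dc-b": 2, "dc-c": 1}  # 5 voters across 3 DCs
two_dcs = {"dc-a": 2, "dc-b": 1}                # 3 voters, but only 2 DCs

print(survives_any_single_dc_loss(three_dcs))   # True
print(survives_any_single_dc_loss(five_nodes))  # True
print(survives_any_single_dc_loss(two_dcs))     # False: losing dc-a leaves 1 of 3
```

With only two locations, one of them necessarily holds the majority, so losing that location stops the consensus layer regardless of how the nodes are split.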

The Mechanics of Synchronous Replication

Stretch clusters achieve their zero-data-loss promise through synchronous replication. Let’s walk through a message’s journey:

- A producer sends a message to a topic partition. To ensure durability, the producer must be configured with acks=all (or acks=-1). This tells the producer to wait for confirmation that the message has been successfully replicated to all in-sync replicas.

- The message arrives at the leader broker for that partition in, say, dc-a. The leader writes the message to its local log.

- The follower replicas located in dc-b and dc-c fetch the message from the leader. Kafka replication is pull-based, so followers request new data rather than having it pushed to them.

- The follower replicas write the message to their own logs, and their subsequent fetch requests tell the leader in dc-a how far each of them has replicated.

- Once the leader has confirmed that all brokers in its In-Sync Replica (ISR) list (which, in a healthy cluster, includes all replicas) have the message, it sends a final acknowledgment back to the producer.
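The acknowledgment rule in the last step can be sketched with the notion of a high watermark: the lowest log end offset among the in-sync replicas. The snippet below is a toy model, not broker code; the function names are hypothetical and replicas are keyed by data center name purely for readability.

```python
def high_watermark(log_end_offsets, isr):
    """Lowest log end offset across the in-sync replicas."""
    return min(log_end_offsets[replica] for replica in isr)


def is_acknowledged(record_offset, log_end_offsets, isr):
    """With acks=all, a record is confirmed once every ISR member has it."""
    return high_watermark(log_end_offsets, isr) > record_offset


isr = ["dc-a", "dc-b", "dc-c"]

# The leader (dc-a) and dc-b hold offset 41; dc-c has not fetched it yet.
log_end_offsets = {"dc-a": 42, "dc-b": 42, "dc-c": 41}
pending = is_acknowledged(41, log_end_offsets, isr)
print(pending)  # False: the producer is still waiting

# dc-c catches up, so the record is now on every in-sync replica.
log_end_offsets["dc-c"] = 42
confirmed = is_acknowledged(41, log_end_offsets, isr)
print(confirmed)  # True: the leader can answer the producer
```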

This synchronous process guarantees that by the time the producer receives a confirmation, the data is safely stored in multiple physical locations. However, this guarantee comes at a direct cost: network latency. The round-trip time between the data centers is added to every single write request. This is why stretch clusters have strict network requirements, typically needing a low-latency, high-bandwidth connection (ideally under 50ms round-trip time) to be effective.
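A rough way to reason about this cost is to treat the acknowledgment latency as the leader's local append time plus the round trip to the slowest in-sync follower. This is a simplified model that ignores batching, fetch timing, and disk flush behavior, and every number below is a hypothetical example rather than a measurement.

```python
def estimated_ack_latency_ms(local_append_ms, follower_rtt_ms, isr_followers):
    """Simplified model: the slowest in-sync follower dominates the ack latency."""
    slowest = max((follower_rtt_ms[f] for f in isr_followers), default=0.0)
    return local_append_ms + slowest


# Hypothetical round-trip times from the leader in dc-a to each follower.
follower_rtt_ms = {"dc-b": 8.0, "dc-c": 20.0}

healthy = estimated_ack_latency_ms(2.0, follower_rtt_ms, ["dc-b", "dc-c"])
without_dc_c = estimated_ack_latency_ms(2.0, follower_rtt_ms, ["dc-b"])
print(healthy)       # 22.0
print(without_dc_c)  # 10.0
```

The model makes one point visible: the farthest data center in the ISR sets the floor for every acks=all write, which is why a single slow link affects the whole cluster.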

In-Depth Failure Scenario Analysis

The true value of a stretch cluster is realized when things go wrong. Let’s analyze how the architecture responds to different types of failures.

Scenario 1: A Single Broker Fails

Imagine a broker in dc-b suddenly crashes.

- Detection: The cluster controller, residing in one of the three data centers, quickly detects the broker's failure because it stops receiving heartbeats.

- Leader Re-election: The controller identifies all partitions for which the failed broker was the leader. For each of these partitions, it initiates a leader election.

- New Leader: A new leader is chosen from the remaining in-sync replicas in dc-a or dc-c. Because the data was synchronously replicated, the new leader has an identical copy of the data log.

- Client Failover: Kafka clients automatically discover the new leader for the affected partitions and redirect their produce/consume requests. The result is a brief blip in latency for some partitions, but no data is lost, and the cluster heals itself automatically.

Scenario 2: A Full Data Center Outage

Now consider a more catastrophic event: a power outage takes all of dc-c offline.

- Mass Detection: The controller detects the loss of all brokers in dc-c.

- ISR Shrinking: For every partition in the cluster, the controller shrinks the ISR list, removing the brokers from dc-c.

- Mass Leader Re-election: For all partitions that had their leader in dc-c, the controller initiates leader elections. New leaders are elected from the healthy replicas in dc-a and dc-b.

- Degraded but Operational State: The cluster remains fully operational, serving all read and write traffic from the two surviving data centers. No data is lost for acknowledged writes. The cluster is now in a "degraded" state, as it can no longer tolerate another data center failure without potential data loss, but it has successfully weathered a complete disaster.
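The outage sequence above can be sketched as a small simulation. It is a simplification of what the controller does: the election rule used here (first replica in the assignment order that is alive and in the ISR) reflects a clean, non-unclean election as I understand it, and the topic name, broker IDs, and function names are hypothetical.

```python
def elect_leader(replicas, isr, live_brokers):
    """Pick the first assigned replica that is both alive and in the ISR."""
    for broker in replicas:
        if broker in isr and broker in live_brokers:
            return broker
    return None  # no eligible replica: the partition would go offline


def fail_datacenter(partitions, broker_dc, failed_dc):
    """Return the partition state after every broker in failed_dc disappears."""
    live = {b for b, dc in broker_dc.items() if dc != failed_dc}
    result = {}
    for name, state in partitions.items():
        isr = [b for b in state["isr"] if b in live]  # ISR shrinking
        leader = state["leader"]
        if leader not in live:                        # leader re-election
            leader = elect_leader(state["replicas"], isr, live)
        result[name] = {"replicas": state["replicas"], "isr": isr, "leader": leader}
    return result


broker_dc = {1: "dc-a", 2: "dc-b", 3: "dc-c"}
partitions = {
    "orders-0": {"replicas": [1, 2, 3], "isr": [1, 2, 3], "leader": 1},
    "orders-1": {"replicas": [3, 1, 2], "isr": [3, 1, 2], "leader": 3},
}

after = fail_datacenter(partitions, broker_dc, "dc-c")
for name, state in after.items():
    print(name, "leader:", state["leader"], "isr:", state["isr"])
```

In this example both partitions end up led from dc-a with an ISR of two brokers, which matches the degraded but operational state described in the last step.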

The Critical Role of min.insync.replicas

This automatic failover relies on a critical configuration: min.insync.replicas. For producers using acks=all, this setting specifies the minimum number of in-sync replicas that must hold a write for it to be considered successful. If fewer replicas are in sync, the write is rejected rather than acknowledged.

In a three-replica stretch cluster, this should be set to 2. Here’s why: if you set it to 2 and dc-c goes down, a producer request can still succeed because the leader (in dc-a) and the follower (in dc-b) still satisfy the minimum of two. The write will be acknowledged. If you left it at the default of 1, the ISR could shrink to the leader alone, the leader could acknowledge a write that exists only in its own log, and if it failed immediately after, the data would be lost. Setting min.insync.replicas to 2 is your primary safeguard against data loss during a failure.
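The rule is small enough to write down directly. The sketch below models only the acks=all case; the function name and the returned tuple are assumptions for illustration, and the rejection it represents corresponds to the NotEnoughReplicas error a producer would see.

```python
def write_outcome(isr_size, min_insync_replicas):
    """Model an acks=all write: returns (accepted, copies_at_ack_time)."""
    if isr_size < min_insync_replicas:
        return (False, 0)   # broker rejects the write (NotEnoughReplicas)
    return (True, isr_size)  # acknowledged once every ISR member has it


print(write_outcome(isr_size=3, min_insync_replicas=2))  # (True, 3) healthy cluster
print(write_outcome(isr_size=2, min_insync_replicas=2))  # (True, 2) one DC lost
print(write_outcome(isr_size=1, min_insync_replicas=2))  # (False, 0) refuse, don't risk it
print(write_outcome(isr_size=1, min_insync_replicas=1))  # (True, 1) acknowledged with one copy
```

The last case is the dangerous one: the write is confirmed while only a single copy exists, so losing that broker loses acknowledged data.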

Use Cases and Considerations

Stretch clusters are not a one-size-fits-all solution. They are best suited for the most critical applications where the business impact of data loss or downtime is immense.

Ideal Use Cases

- Financial Services: Core banking systems, real-time payment processing, and fraud detection platforms where every transaction must be captured.

- E-commerce: Critical order processing and inventory management systems that must remain online and consistent during peak shopping seasons.

- Telecommunications: Systems for real-time billing, call data records, and network monitoring that require constant uptime.

Key Considerations Before You Choose a Stretch Cluster

- High Cost: The requirement for three synchronized data centers and a high-performance, low-latency network makes stretch clusters one of the most expensive HA solutions to implement and maintain.

- Network Fragility: The entire cluster's performance is tethered to the health of the inter-datacenter network. Latency spikes or network partitions can cripple the cluster's write throughput or even cause cascading failures.

- Operational Burden: Managing a distributed system across multiple data centers is inherently complex. It requires a high degree of automation, sophisticated monitoring, and a skilled operations team that understands the failure modes of distributed systems.

- Capacity Planning: You must provision enough capacity in the remaining two data centers to handle 100% of the cluster's load if one data center fails. With load spread evenly, each data center must be sized for half of the total load while normally carrying only a third of it, so roughly 33% of your provisioned capacity sits idle at all times.
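The capacity figure follows from simple arithmetic, sketched below under the assumption that load is spread evenly across data centers. The function name and the 900 MB/s workload are hypothetical.

```python
def per_dc_capacity(total_load, num_dcs=3, tolerated_dc_failures=1):
    """Capacity each DC needs so the survivors can carry the full load."""
    survivors = num_dcs - tolerated_dc_failures
    return total_load / survivors


total_load = 900.0                      # hypothetical peak load, e.g. MB/s
per_dc = per_dc_capacity(total_load)    # 450.0 per data center
provisioned = per_dc * 3                # 1350.0 across the cluster
normal_utilization = total_load / provisioned
idle_fraction = 1 - normal_utilization

print(per_dc)                           # 450.0
print(round(normal_utilization, 3))     # 0.667
print(round(idle_fraction, 3))          # 0.333
```

Put another way, the cluster is provisioned at 150% of its steady-state load so that any two data centers can absorb the third one's share.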

Monitoring and Performance Tuning in a Stretched Environment

Operating a stretch cluster requires a more nuanced approach to monitoring. Beyond standard Kafka metrics, you must focus on indicators of cross-datacenter health:

- UnderReplicatedPartitions: This is your most critical alert. A non-zero value indicates that some partitions do not have the desired number of replicas and are at risk of data loss.

- IsrShrinksPerSec/IsrExpandsPerSec: Spikes in these metrics often signal network instability or broker issues, as followers drop out of and rejoin the ISR sets.

- Inter-Broker Network Latency: You must monitor the round-trip latency between your data centers directly. A rising trend is a major warning sign.

- Controller Health: Monitor the ActiveControllerCount. This should always be exactly 1. If it's 0 or flapping, your consensus layer is unstable.
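As a sketch of how these checks might be combined, the function below evaluates one cluster-wide snapshot of the metrics listed above. It assumes the values have already been collected and aggregated by your monitoring system; the snapshot layout, the `inter_dc_rtt_ms` key, and the thresholds are hypothetical (the 50 ms default simply reuses the round-trip figure mentioned earlier).

```python
def evaluate(snapshot, max_rtt_ms=50.0, max_isr_changes_per_sec=0.0):
    """Return a list of alert messages for one cluster-wide metrics snapshot."""
    alerts = []
    if snapshot["UnderReplicatedPartitions"] > 0:
        alerts.append("under-replicated partitions present")
    isr_churn = snapshot["IsrShrinksPerSec"] + snapshot["IsrExpandsPerSec"]
    if isr_churn > max_isr_changes_per_sec:
        alerts.append("ISR churn: followers are leaving or rejoining the ISR")
    if snapshot["inter_dc_rtt_ms"] > max_rtt_ms:
        alerts.append("inter-datacenter round-trip time above threshold")
    if snapshot["ActiveControllerCount"] != 1:
        alerts.append("active controller count is not exactly 1")
    return alerts


healthy = {
    "UnderReplicatedPartitions": 0,
    "IsrShrinksPerSec": 0.0,
    "IsrExpandsPerSec": 0.0,
    "inter_dc_rtt_ms": 12.0,
    "ActiveControllerCount": 1,
}
degraded = dict(healthy, UnderReplicatedPartitions=40, IsrShrinksPerSec=3.5,
                inter_dc_rtt_ms=85.0, ActiveControllerCount=0)

print(evaluate(healthy))   # []
for alert in evaluate(degraded):
    print(alert)
```

In practice these rules would live in your alerting system rather than in application code; the point is that the four signals are evaluated together, because ISR churn and rising latency usually precede under-replication.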

When it comes to tuning, you must adjust timeouts to be more tolerant of network latency. Configurations like replica.lag.time.max.ms (how long a follower can be out of sync before being removed from the ISR) and client-side timeouts may need to be increased from their default values to avoid spurious failures in a high-latency environment.

Conclusion

Kafka stretch clusters represent the gold standard for achieving zero data loss and automatic disaster recovery in Apache Kafka. They provide a robust, self-healing architecture that can withstand a complete data center failure without manual intervention.

However, this resilience comes at a significant price in terms of cost, network dependency, and operational complexity. Before embarking on a stretch cluster deployment, perform a rigorous cost-benefit analysis. For mission-critical systems where the cost of downtime or data loss is astronomical, a stretch cluster is a powerful and justifiable solution. For other workloads, simpler and less expensive HA strategies might be more appropriate. Ultimately, the right choice depends on a clear-eyed assessment of your business needs, technical capabilities, and budget.

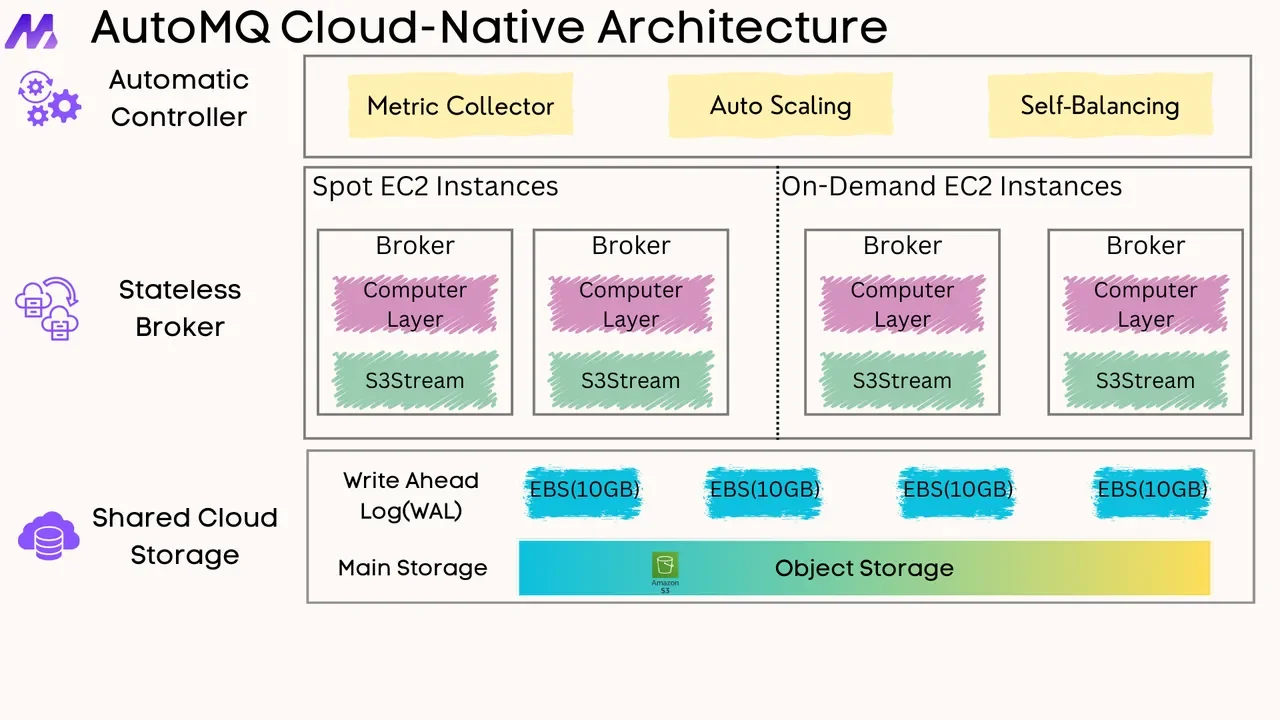

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on GitHub. Big companies worldwide are using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging