LinkedIn developed Kafka back in 2011, and it has grown into one of the most accessible messaging platforms in open source today. You'll find it especially when you have containers, microservices, and Big Data applications. Running Kafka on Kubernetes brings its own set of challenges. The disk-intensive nature of this application needs careful resource management and complex integration.

Kubernetes works great with stateless workloads. The stateful nature of Kafka creates operational complexities that are hard to ignore. Memory needs can be substantial. JVM heap typically stays under 4-5 GB, and the system depends heavily on page cache usage. These challenges become more apparent during upgrades when you need to keep the system available and performing well.

This piece will help you think about upgrading Kafka in Kubernetes environments. We'll get into how modern solutions like AutoMQ tackle these issues. AutoMQ's cloud-native architecture cuts costs by a factor of ten and improves elasticity a hundred times compared to regular Kafka deployments. These benefits make a strong case for organizations that run Kafka on Kubernetes.

Challenges of Kafka Upgrades in Kubernetes

Running Kafka upgrades in Kubernetes environments brings major technical challenges that need careful thought. The complexity comes from how Kafka works and the way it interacts with Kubernetes' orchestration.

Statefulness and Leader Rebalancing

Kafka's stateful nature creates unique challenges in Kubernetes. The system heavily relies on filesystem cache to run fast, typically using up to 30GB of RAM for caching in a 32GB system. When Kubernetes moves StatefulSet replicas to different nodes, it wipes out Kafka's filesystem cache. This forces more disk access and affects performance.

Leader rebalancing is another big challenge. The process helps brokers share workload evenly so no single broker gets overloaded. Yet rebalancing can lead to uneven partition distribution across brokers. This creates performance bottlenecks where some brokers end up overloaded while others sit idle.

Operational Complexity

Managing Kafka on Kubernetes comes with complex resource and operational needs. System admins must handle tasks like scaling, upgrades, and recovery while keeping the system stable. Operators can help, but they add another layer of complexity. They often have strong opinions about handling Kafka resources, which makes custom configurations harder.

Storage Management Complexities

Storage management is a major hurdle in Kafka-Kubernetes setups. Kafka needs persistent storage to keep data safe and fault-tolerant, which clashes with Kubernetes' dynamic nature. You must configure Persistent Volume Claims (PVCs) carefully to prevent data loss when pods restart or fail. Storage solutions also need to deliver steady low latency and high throughput for best performance.

Risk of Downtime

Kafka upgrades in Kubernetes environments carry a real risk of downtime. Clients can face disruptions when Kubernetes stops containers because it doesn't understand Kafka's graceful shutdown process. The platform sends a SIGTERM signal, waits 30 seconds, then issues a SIGKILL. This can disrupt the careful process of moving partitions and choosing leaders.

These risks can be reduced by paying attention to key configurations:

Topic replication factor should be at least 2 for two-AZ clusters and 3 for three-AZ clusters

Minimum in-sync replicas should be set to maximum value of RF-1

Client configurations should use multiple broker connection strings

Monitoring and logging add extra complexity. Kafka clusters generate lots of logs and metrics that need constant watching. Kubernetes' built-in tools might not show enough about Kafka's internal processes. This means you'll need specialized monitoring tools to track performance and health effectively.

Key Considerations for Kafka Upgrades

Kafka upgrades in Kubernetes environments need careful planning and execution. A well-laid-out approach will give a smooth transition with minimal disruption to your messaging infrastructure.

Implement Partitioned Rolling Updates

The journey of Kafka upgrades begins with the implementation of partitioned rolling updates. Leveraging the power of Kubernetes StatefulSet, we can upgrade brokers in controlled batches. For instance, consider upgrading 25% of the cluster at a time. A critical step in this process is to monitor new pods rigorously after each batch. Ensuring they join the cluster successfully, replicate partitions, and handle traffic before proceeding is an absolute must.

Graceful Shutdown & Capacity Planning

Next, we move towards the graceful shutdown and capacity planning phase. Always remember to terminate brokers gracefully. This allows sufficient time for partition reassignment to other brokers and helps avoid the risk of data loss or client errors due to abrupt shutdowns. In the realm of capacity planning, reserving at least 25% extra capacity to absorb traffic during upgrades is a wise strategy. Remember, upgrading a quarter of brokers temporarily reduces available capacity by 25%, so plan your resource allocation accordingly.

Test & Validate Upgrade Paths

The importance of testing and validating upgrade paths cannot be overstated. Pre-upgrade testing should be done to validate the upgrade path (version A → B) in a non-production environment. Some versions may require intermediate hops (e.g., A → B → C). It's also essential to consult with your Kafka distribution provider, such as Confluent or AutoMQ, to confirm compatibility and known issues.

Prioritize Cluster Upgrade Order

When it comes to upgrading, prioritizing the cluster upgrade order can help minimize potential business impact if issues arise. Starting with non-critical clusters to validate the upgrade process is often a good strategy. Mission-critical clusters should be left for the last.

Post-Upgrade Validation

Post-upgrade validation is a step that should never be skipped. Immediate checks should be carried out to ensure that connection counts return to normal, there are no error logs or API failures, and partitions are reassigned and traffic is balanced. An extended observation period of at least 3 days before updating the cluster’s metadata version is recommended. This allows time to detect latent issues while retaining rollback capability.

Rollback Preparedness

Being prepared for a rollback is another key consideration. A rollback playbook should be defined before starting the upgrade. This should include procedures to revert broker versions without data loss and to restore metadata to a pre-upgrade state. Avoid finalizing metadata version upgrades until stability is confirmed.

Client Coordination

Finally, client coordination is an integral part of any Kafka upgrade. All applications and services dependent on the Kafka cluster should be identified. Scheduling should be done wisely to avoid peak traffic periods or business-critical windows such as sales events. Coordination with client teams is essential to minimize disruption.

AutoMQ's Shared Storage Architecture: A Kubernetes-Native Solution

AutoMQ revolutionizes Apache Kafka® deployment on Kubernetes through its trailblazing shared storage architecture. The system moves away from traditional shared-nothing design and adopts a storage-compute separation strategy that matches cloud-native principles perfectly.

Stateless Broker Design

Two key storage components form the foundation of AutoMQ's architecture: EBS as Write-Ahead Log (WAL) and S3 as the primary storage. The design needs just 5-10GB of EBS storage space, which makes it an economical solution. The system exploits EBS's multi-attach capabilities and NVME reservations. This allows failover and recovery completion within milliseconds.

The architecture follows a simple rule: "high-frequency usage paths need the lowest latency and optimal performance". Direct I/O and engineering solutions help data persist with minimal latency. Consumers can read from memory cache and complete read/write processes with single-digit millisecond latency.

Zero-Downtime Upgrade Capability

AutoMQ's stateless nature makes daily operations simple. The system skips complex data migrations during upgrades and provides:

Complete state transfer upon broker shutdown without client impact

Enough time for teams to assess broker status

Quick, safe rolling updates

The auto-balancer component schedules partitions and network traffic between brokers automatically. This eliminates the need for manual partition reassignment. The Controller detects broker failures and attaches its EBS WAL to another broker in a multi-attach way. Operations continue smoothly during planned upgrades or unexpected failures.

Instant Rollback Features

The shared storage architecture enables quick recovery mechanisms. AutoMQ achieves zero RPO (Recovery Point Objective) with RTO (Recovery Time Objective) in seconds, and 99.999999999% durability. This happens through:

Automatic state offloading when a broker shuts down

Quick EBS volume mounting to other nodes during failures

Uninterrupted read and write services

The flexible architecture helps optimize costs through Spot instances, which cost nowhere near regular virtual machines - up to 90% less. The system scales like a microservice application or Kubernetes Deployment, with true auto-scaling capabilities.

S3Stream replaces Kafka's storage layer while staying fully compatible with Apache Kafka and adding cloud-native advantages. Running AutoMQ becomes as simple as managing a microservice application - something that traditional Kafka deployments struggled with.

Monitoring and Validation Strategy

Monitoring plays a vital role in running successful Kafka deployments on Kubernetes. Teams can maintain peak performance and quickly spot problems with the right monitoring setup. A resilient monitoring strategy covers multiple areas, from collecting metrics to verifying health.

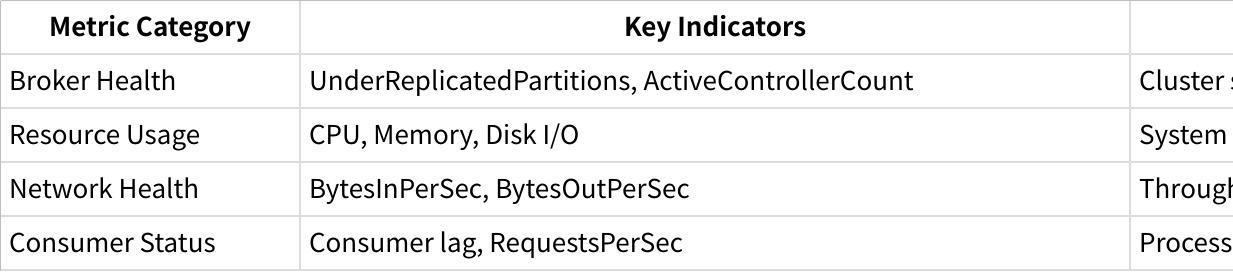

Key Metrics to Track

Teams should watch several significant metrics to monitor broker health. The UnderReplicatedPartitions metric needs quick investigation if it stays above zero for long periods. This could point to broker availability problems. The ActiveControllerCount across all brokers should equal one to show proper controller election and management.

A complete monitoring approach has these key metrics:

Disk throughput remains the biggest performance bottleneck in Kafka operations. Network throughput becomes critical when messages travel across data centers or when topics have many consumers.

Health Check Implementation

Setting up effective health checks in Kubernetes requires careful probe configuration. The kubelet uses liveness probes to know when to restart a container. This catches cases where applications run but can't make progress.

Kafka pods work best with this probe configuration:

livenessProbe:

failureThreshold: 6

initialDelaySeconds: 60

periodSeconds: 60

tcpSocket:

port: 9092

timeoutSeconds: 5

Readiness probes help determine when containers can receive traffic. The setup usually allows more time for initialization:

readinessProbe:

failureThreshold: 6

initialDelaySeconds: 120

periodSeconds: 10

tcpSocket:

port: 9092

timeoutSeconds: 5

These timeouts need careful planning. Kafka's state restoration might take longer than default probe timeouts. Setting the right initialDelaySeconds prevents containers from restarting too early during startup.

Post-Upgrade Performance Analysis

Teams should analyze performance systematically after upgrades. Start by recording baseline metrics before the upgrade, including broker CPU usage, network I/O, disk I/O, and request latency.

System administrators should verify:

System logs for error patterns

Performance metrics against pre-upgrade baselines

Data integrity across the cluster

Service dependencies and operational workflows

The validation plan should track ZooKeeper metrics because they indicate cluster health. "Outstanding requests" shows processing bottlenecks, while "Average latency" helps spot performance issues.

Performance testing after upgrades helps find potential problems. Client version changes can affect test results substantially. To cite an instance, see how upgrading from Kafka 2.7 to 3.7 increased latency from 5ms to 60ms due to client-side changes, not server issues.

Teams should track memory usage and garbage collection metrics along with CPU and thread-level metrics. This complete approach helps spot performance bottlenecks early and guides optimization work.

The monitoring setup itself needs careful planning. Tools like JMX, Grafana, and Prometheus give teams clear visibility into cluster operations. These platforms let teams:

Track critical performance indicators

Set up automated alerts for metric thresholds

Visualize long-term performance trends

Connect system events with performance changes

Conclusion

Kafka upgrades in Kubernetes environments create major operational hurdles that just need careful planning. Teams working with traditional Kafka setups face complex issues with statefulness, leader rebalancing, and possible downtime during upgrades. Organizations must prepare thoroughly before upgrades, use rolling update strategies, and set up reliable monitoring systems.

AutoMQ's shared storage design solves these problems with its trailblazing storage-compute split approach. This cloud-native solution makes upgrades easier by turning brokers into stateless components while keeping data safe through EBS and S3 storage. Teams can now complete upgrades faster, roll back instantly, and manage operations more easily.

A solid monitoring and validation plan is vital to keep performance at its best. Teams should track key metrics, set up proper health checks, and analyze post-upgrade results to ensure system stability. More companies should switch their Kafka setup to cloud-native options like AutoMQ. This move leads to simpler operations and better performance.

This new architecture fits perfectly with Kubernetes principles and offers ten times lower costs with hundred times better elasticity. The messaging system landscape keeps changing, and AutoMQ's approach shows that storage-compute separation will reshape how teams run Kafka workloads in Kubernetes environments.

.png)