Overview

Apache Kafka has become the backbone of modern real-time data streaming architectures. Its ability to handle high-throughput, low-latency data feeds makes it indispensable for a wide range of applications, from real-time analytics to event-driven microservices. When it comes to deploying Kafka, two primary models emerge: the "Classic" approach on Virtual Machines (VMs) or bare metal servers, and the more recent "Kubernetes Kafka" approach, leveraging container orchestration.

This blog aims to provide a comprehensive comparison of these two deployment strategies, helping you understand their core concepts, operational differences, and the trade-offs involved.

Understanding Apache Kafka: Core Concepts

Before diving into deployment models, let's quickly revisit Kafka's core components, which are fundamental to both approaches:

- Brokers: These are the servers that form a Kafka cluster. Each broker stores topic partitions.
- Topics: A topic is a category or feed name to which records are published. Topics in Kafka are multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.
- Partitions: Topics are segmented into partitions. Each partition is an ordered, immutable sequence of records that is continually appended to, forming a structured commit log. Partitions allow for parallelism, enabling multiple consumers to read from a topic simultaneously.
- Producers: Applications that publish (write) streams of records to one or more Kafka topics.
- Consumers: Applications that subscribe to (read and process) streams of records from one or more Kafka topics.
- Metadata Management: Kafka requires a system to manage metadata about brokers, topics, partitions, and configurations. Historically, Apache ZooKeeper was the standard for this. However, newer Kafka versions offer KRaft (Kafka Raft metadata mode), which allows Kafka to manage its own metadata internally, simplifying the architecture.
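
To make the topic, partition, and offset vocabulary concrete, here is a minimal toy model in Python. It is only a sketch, not how Kafka is implemented: `ToyTopic` is a hypothetical class, and `zlib.crc32` is a stand-in for the murmur2 key hash that Kafka's Java client uses by default.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """Toy model of a topic: a fixed number of append-only partition logs."""
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        # One in-memory list per partition stands in for an on-disk commit log.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def produce(self, key: str, value: str) -> tuple:
        # Assumption: crc32 replaces the real client's murmur2 key hash.
        partition = zlib.crc32(key.encode("utf-8")) % self.num_partitions
        self.partitions[partition].append((key, value))
        offset = len(self.partitions[partition]) - 1
        return partition, offset

    def consume(self, partition: int, offset: int) -> list:
        # Reading does not remove records; each consumer tracks its own offset.
        return self.partitions[partition][offset:]
```

Records with the same key always land in the same partition, so their relative order is preserved, while different partitions can be read by different consumers in parallel.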

What is "Classic Kafka"?

"Classic Kafka" refers to deploying Apache Kafka clusters directly onto traditional virtual machines (VMs) or dedicated bare metal servers. This was the original and, for a long time, the only way to run Kafka.

Architecture & How it Works:

In a classic setup, administrators are responsible for provisioning the underlying infrastructure, installing Kafka and its dependencies (like Java), and configuring the operating system and network.

![Kafka Architecture [32]](/blog/kubernetes-kafka-vs-classic-kafka/1.png)

- Deployment: This typically involves manually installing Kafka binaries on each server, ensuring Java is correctly installed and configured, and tuning OS parameters like file descriptor limits (`ulimit`) and memory mapping (`vm.max_map_count`). Networking requires careful planning for IP addressing, port accessibility, and DNS resolution for brokers.
- Storage: Storage is managed at the VM or bare metal level, often using local disks (SSDs are preferred for performance) or Storage Area Networks (SANs).
- Metadata Management:
  - ZooKeeper: For many existing and older setups, a separate ZooKeeper ensemble is crucial. ZooKeeper handles controller election among brokers and stores cluster metadata (broker status, topic configurations, ACLs). Legacy consumer clients also relied on it for consumer group coordination, whereas modern clients use a broker-side group coordinator. A production ZooKeeper setup typically involves an odd number of nodes (e.g., 3 or 5) for fault tolerance.
  - KRaft Mode: More recent Kafka versions can run in KRaft mode, eliminating the need for a separate ZooKeeper cluster. In KRaft, a subset of Kafka nodes take on the controller role, managing metadata using an internal Raft consensus protocol. This simplifies the deployment architecture and reduces operational overhead.
- Scaling:
  - Horizontal Scaling: Involves provisioning new VMs or servers, installing Kafka, configuring them as part of the cluster, and then reassigning partitions to these new brokers. This partition reassignment is often done using the `kafka-reassign-partitions.sh` command-line tool, which requires generating a reassignment plan and executing it.
  - Vertical Scaling: Involves increasing the resources (CPU, RAM, disk) of existing VMs or upgrading bare metal hardware.
- High Availability (HA):
  - With ZooKeeper: Achieved through a combination of a fault-tolerant ZooKeeper ensemble (for metadata and controller election) and Kafka's own partition replication mechanism. Data for each partition is replicated across multiple brokers. If a leader broker fails, a new leader is elected from the in-sync replicas (ISRs).
  - With KRaft: HA for metadata is managed by the internal Raft quorum among the controller nodes. Data plane HA remains reliant on Kafka's partition replication.
- Upgrades: Kafka upgrades in a classic environment are typically performed as rolling upgrades to minimize downtime. This involves upgrading one broker at a time, which includes stopping the broker, updating the Kafka software, restarting it, and verifying its health before proceeding to the next. Configuration settings like `inter.broker.protocol.version` and `log.message.format.version` (for ZK-based) or `metadata.version` (for KRaft) need to be updated in stages.
- Monitoring: Monitoring classic Kafka deployments usually involves exposing JMX (Java Management Extensions) metrics from Kafka brokers and ZooKeeper nodes. These metrics are then often scraped by monitoring systems like Prometheus and visualized using dashboards in Grafana. Key metrics include broker health, topic/partition status, consumer lag, and resource utilization.
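
The horizontal scaling step above hinges on a reassignment plan, which `kafka-reassign-partitions.sh` reads as a JSON file. The sketch below builds such a plan in Python with a simple round-robin spread. The topic name, broker IDs, and the `build_reassignment_plan` helper are hypothetical, and a real plan should also account for rack placement and current data distribution.

```python
import json


def build_reassignment_plan(topic, num_partitions, broker_ids, replication_factor):
    """Spread each partition's replicas round-robin over broker_ids."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor exceeds broker count")
    partitions = []
    for p in range(num_partitions):
        # The first replica in each list is the preferred leader.
        replicas = [
            broker_ids[(p + r) % len(broker_ids)]
            for r in range(replication_factor)
        ]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


# Hypothetical example: broker 4 was just added to a three-broker cluster.
plan = build_reassignment_plan(
    "orders", num_partitions=4, broker_ids=[1, 2, 3, 4], replication_factor=3
)
print(json.dumps(plan, indent=2))
```

The printed JSON would typically be saved to a file and passed to the tool with `--reassignment-json-file` together with `--execute`, then checked with `--verify`.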

What is "Kubernetes Kafka"?

"Kubernetes Kafka" refers to deploying and managing Apache Kafka clusters on a Kubernetes platform. Kafka brokers and other components (like ZooKeeper if used, or KRaft controllers) run as containerized applications within Kubernetes Pods.

Architecture & How it Works:

Kubernetes brings its orchestration capabilities to manage Kafka's lifecycle, offering potential benefits in automation and standardization.

![Kubernetes Kafka [31]](/blog/kubernetes-kafka-vs-classic-kafka/2.png)

- Deployment: Kafka on Kubernetes is deployed using declarative YAML manifests.
  - StatefulSets: Kafka brokers are stateful applications, so they are typically deployed using Kubernetes StatefulSets. StatefulSets provide stable, unique network identifiers (e.g., `kafka-0`, `kafka-1`), persistent storage, and ordered, graceful deployment and scaling for each broker Pod.
  - Persistent Storage: Data durability is achieved using PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs). Each Kafka broker Pod managed by a StatefulSet gets its own PV to store its log data.
  - Networking:
    - Internal Communication: A Headless Service is often used to provide stable DNS names for each broker Pod, facilitating direct inter-broker communication and client connections to specific brokers within the cluster.
    - External Access: To expose Kafka to clients outside the Kubernetes cluster, Services of type LoadBalancer or NodePort can be used, often one per broker or via an Ingress controller with specific routing rules.
  - Configuration Management: Kafka configurations are typically managed using Kubernetes ConfigMaps for non-sensitive data and Secrets for sensitive data like passwords or TLS certificates.
- Kafka Operators: A significant aspect of running Kafka on Kubernetes is the use of Kafka Operators. Operators are software extensions to Kubernetes that use custom resources to manage applications and their components. For Kafka, operators encode domain-specific knowledge to automate deployment, scaling, management, and operational tasks. Several open-source operators are available that simplify running Kafka on Kubernetes. These operators define Custom Resource Definitions (CRDs) for Kafka clusters, topics, users, etc., allowing users to manage Kafka declaratively.
- Scaling:
  - Operators can simplify scaling the number of Kafka brokers by modifying the `replicas` count in the Kafka custom resource.
  - Partition reassignment after scaling up or before scaling down might require manual triggering of a rebalance process (e.g., by applying a `KafkaRebalance` custom resource with some operators) or is handled by more advanced features in other operators. The degree of automation varies.
- High Availability (HA):
  - Kubernetes itself provides basic HA by ensuring Pods are rescheduled if a node fails.
  - StatefulSets maintain stable identities and storage for brokers across restarts.
  - Operators enhance HA with Kafka-specific logic, managing broker ID persistence, ensuring correct volume reattachment, and orchestrating graceful startup and shutdown sequences. Kafka's native replication mechanisms are still fundamental for data HA.
- Upgrades: Operators often streamline the upgrade process for Kafka versions or configuration changes, providing automated or semi-automated rolling updates that respect Kafka's operational requirements.
- Self-Healing: Kubernetes can automatically restart failed Kafka Pods. Operators add a layer of intelligence, ensuring that when a broker Pod is restarted, it correctly re-joins the cluster, reattaches to its persistent storage, and maintains its unique broker ID. Some operator solutions also offer features for automated recovery from certain failure scenarios.
- Monitoring: Operators often expose Kafka metrics in a Prometheus-compatible format, and some provide pre-configured Grafana dashboards for easier monitoring of the Kafka cluster running within Kubernetes.
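
The stable identities that a StatefulSet and a Headless Service provide follow a predictable DNS pattern, `<pod>.<service>.<namespace>.svc.<cluster-domain>`. As a rough illustration, the Python sketch below derives the per-broker names and a bootstrap string. The StatefulSet name `kafka`, the Service name `kafka-headless`, the namespace `streaming`, and port 9092 are all assumptions chosen for the example.

```python
def broker_dns_names(statefulset, headless_service, namespace, replicas,
                     cluster_domain="cluster.local"):
    """Return the stable per-Pod DNS names for a StatefulSet's Pods."""
    return [
        f"{statefulset}-{i}.{headless_service}.{namespace}.svc.{cluster_domain}"
        for i in range(replicas)
    ]


def bootstrap_servers(names, port=9092):
    """Join broker host names into a client bootstrap string."""
    return ",".join(f"{name}:{port}" for name in names)


# Hypothetical names for a three-broker cluster.
names = broker_dns_names("kafka", "kafka-headless", "streaming", replicas=3)
print(bootstrap_servers(names))
```

Because Pod ordinals are stable, these names survive Pod restarts and rescheduling, which is what lets brokers keep advertising the same address to clients inside the cluster.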

Side-by-Side Comparison: Kubernetes Kafka vs. Classic Kafka

| Feature | Classic Kafka (VMs/Bare Metal) | Kubernetes Kafka |
|---|---|---|
| Deployment & Provisioning | Manual or scripted; significant OS & Kafka configuration. | Declarative (YAML); Operators automate provisioning & configuration. |
| Scalability & Elasticity | Manual broker addition/removal; partition reassignment often manual (`kafka-reassign-partitions.sh`). | Broker scaling often simplified by operators (adjusting replicas); partition rebalancing automation varies by operator. K8s provides infrastructure elasticity. |
| Management & Operations | Higher manual overhead (OS patching, config sync, broker lifecycle). | Operators reduce manual effort for Day 2 operations (upgrades, scaling, some recovery). Kubernetes handles underlying node management. |
| High Availability | Relies on Kafka's replication & ZooKeeper/KRaft for metadata/controller HA. Manual intervention sometimes needed for recovery. | Kubernetes Pod HA + Operator-driven Kafka-specific recovery (maintaining broker ID, PVs). Kafka replication is still key. |
| Resource Utilization | Can achieve high efficiency on bare metal if tuned. VMs add hypervisor overhead. | K8s offers bin-packing but adds containerization/orchestration overhead. Page cache sharing can impact Kafka. Dedicated nodes can mitigate this. |
| Performance | Potentially highest on bare metal with direct hardware access. Network/disk I/O is critical. | Can be performant with proper K8s networking (e.g., `hostNetwork`) & storage. Page cache behavior needs careful consideration. Multiple network layers can add latency. |
| Cost (TCO) | Hardware/VM costs, software licenses (if applicable), significant operational staff time. | Potential hardware savings via better utilization; K8s platform costs; operator software costs (if commercial); skilled K8s/Kafka ops team needed. |
| Complexity & Learning Curve | Deep Kafka knowledge required. OS & network expertise crucial. | Requires Kafka knowledge + significant Kubernetes expertise. Operators abstract some Kafka complexity but add K8s complexity. |
| Ecosystem Integration | Integrates with traditional monitoring/logging. | Leverages Kubernetes ecosystem (monitoring, logging, service mesh, CI/CD). |
| Metadata Management | ZooKeeper (older setups) or KRaft (newer setups, simpler). | ZooKeeper (often run as a StatefulSet) or KRaft (managed by operator). |

Best Practices

For Classic Kafka:

- Hardware & OS: Provision adequate CPU, ample RAM (especially for page cache), and fast, preferably SSD-based disks. Tune OS settings like `ulimit` for open file descriptors and `vm.max_map_count` for memory mapping.
- Configuration: Meticulously configure `server.properties` for each broker, especially listeners, advertised listeners, log directories, and replication factors.
- ZooKeeper/KRaft: For ZooKeeper, maintain a healthy, isolated 3- or 5-node ensemble. For KRaft, ensure a stable controller quorum and understand its metadata replication.
- Monitoring: Implement comprehensive monitoring of JMX metrics for brokers and ZooKeeper/KRaft controllers.
- Security: Implement network segmentation, encryption (SSL/TLS), authentication (SASL), and authorization (ACLs).
- Operations: Have well-defined procedures for scaling, upgrades, and disaster recovery. Regularly perform partition rebalancing if hotspots occur.
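
The recommendation of 3 or 5 nodes follows from majority-quorum arithmetic, which applies to both a ZooKeeper ensemble and a KRaft controller quorum. The short Python sketch below works through the numbers; the function names are illustrative only.

```python
def quorum_size(nodes: int) -> int:
    """Smallest majority of a cluster with the given number of voting nodes."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many nodes can fail while a majority is still reachable."""
    return nodes - quorum_size(nodes)


for n in (3, 4, 5):
    print(f"{n} nodes: quorum={quorum_size(n)}, tolerates={tolerated_failures(n)} failure(s)")
```

A 4-node ensemble tolerates only one failure, the same as a 3-node one, which is why an even node count adds cost without adding fault tolerance.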

For Kubernetes Kafka:

- Operator Choice: Evaluate available operators based on community support, feature set, automation capabilities, and your team's expertise.
- Storage: Use appropriate StorageClasses for PersistentVolumes, considering performance (e.g., SSD-backed PVs) and durability.
- Networking: Correctly configure `advertised.listeners` for brokers so clients (internal and external) can connect. Plan external access using LoadBalancers, NodePorts, or Ingress controllers carefully.
- Resource Management: Set appropriate CPU and memory requests and limits for Kafka Pods. Consider dedicated node pools for Kafka if performance is paramount, using taints and tolerations or node affinity.
- Leverage Operator Features: Utilize the operator's capabilities for automated deployment, scaling, rolling updates, and configuration management through CRDs.
- Monitoring: Integrate with Kubernetes-native monitoring tools (like Prometheus) often facilitated by the operator. Monitor Kafka-specific metrics as well as Kubernetes-level metrics.
- StatefulSet Understanding: While operators abstract much, understanding how StatefulSets work is beneficial for troubleshooting.
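
The networking item is where many Kubernetes deployments go wrong, so a small illustration may help. The Python sketch below renders the listener-related broker properties for one broker with separate internal and external listeners. The listener names `INTERNAL` and `EXTERNAL`, the ports, the host names, and the choice of `PLAINTEXT` and `SSL` are assumptions for the example. Operators usually generate these settings for you.

```python
def render_listener_config(internal_host, external_host,
                           internal_port=9092, external_port=9094):
    """Render listener-related broker properties as text lines."""
    return "\n".join([
        # Bind both listeners on all interfaces inside the Pod.
        f"listeners=INTERNAL://0.0.0.0:{internal_port},EXTERNAL://0.0.0.0:{external_port}",
        # Advertise addresses each kind of client can actually reach.
        f"advertised.listeners=INTERNAL://{internal_host}:{internal_port},"
        f"EXTERNAL://{external_host}:{external_port}",
        # Assumed security choices: plaintext in-cluster, TLS for outside clients.
        "listener.security.protocol.map=INTERNAL:PLAINTEXT,EXTERNAL:SSL",
        "inter.broker.listener.name=INTERNAL",
    ])


# Hypothetical addresses for broker 0.
config = render_listener_config(
    internal_host="kafka-0.kafka-headless.streaming.svc.cluster.local",
    external_host="kafka-0.example.com",
)
print(config)
```

The key point is that each broker advertises its own address per listener: clients receive these addresses in metadata responses and connect to them directly, so a single shared load balancer address for all brokers generally does not work.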

When to Choose Which:

Classic Kafka might be suitable if:

- Your organization has strong existing expertise in managing VMs or bare metal servers.
- You require absolute, fine-grained control over hardware and OS for maximum raw performance tuning.
- The Kafka deployment is relatively static and doesn't require frequent, dynamic scaling.
- You are not looking to standardize on Kubernetes for other applications.

Kubernetes Kafka might be a better fit if:

- Your organization is standardizing on Kubernetes as the platform for all applications.
- You value declarative configurations, infrastructure-as-code, and automation for operational tasks.
- You need to support dynamic scaling of your Kafka clusters more easily.
- You want to leverage the broader cloud-native ecosystem for monitoring, logging, and service discovery.

Conclusion:

Choosing between Classic Kafka and Kubernetes Kafka involves weighing the trade-offs between direct control and raw performance (often associated with well-tuned classic deployments) versus operational automation, scalability, and ecosystem integration (offered by Kubernetes).

Classic Kafka provides maximum control but demands significant manual operational effort and deep Kafka-specific expertise. Kubernetes Kafka, especially when managed via an Operator, promises to simplify many operational burdens and offers better elasticity. However, it introduces Kubernetes' own complexity and requires a different set of skills.

The trend is towards containerization and orchestration for many stateful applications, including Kafka. As KRaft matures further, simplifying Kafka's own architecture, and as Kubernetes Operators become even more sophisticated, running Kafka on Kubernetes is becoming an increasingly viable and attractive option for many organizations. The best choice ultimately depends on your team's skills, existing infrastructure, operational model, and specific business requirements.

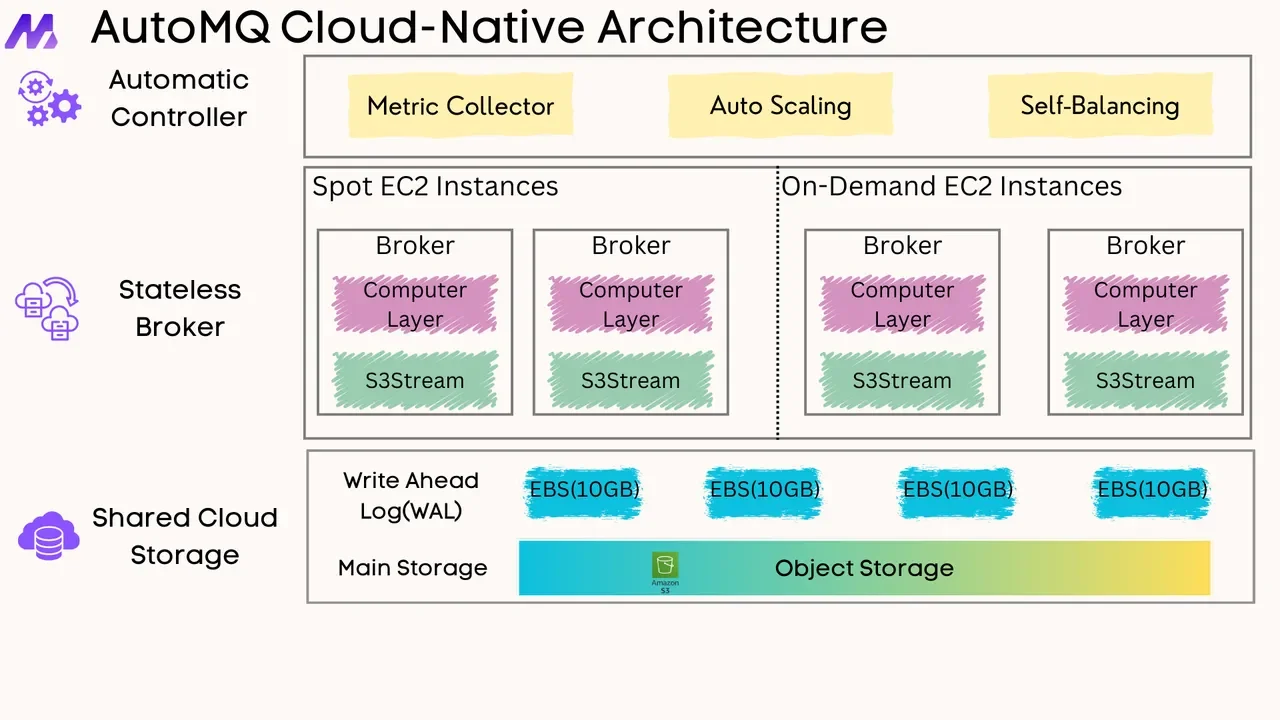

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging