Overview

Distributed storage systems manage large volumes of data by spreading it across multiple servers, which provides resilience and high availability. MinIO and Ceph are two popular open-source options, each with its own strengths and design philosophy. This blog post compares them to help you understand their differences and decide which is the better fit for your needs.

Understanding MinIO

MinIO is a high-performance, distributed object storage system. It is designed to be S3-compatible, making it a popular choice for applications built for cloud object storage environments. Simplicity and performance are at the core of MinIO's design.

Architecture & Core Concepts

MinIO can run in distributed mode, where it pools multiple drives (even across different servers) into a single object storage resource. Data protection is primarily achieved through erasure coding (specifically Reed-Solomon codes), which stripes object data and parity blocks across multiple drives. This allows MinIO to tolerate drive or node failures with less storage overhead than simple replication. Metadata in MinIO is stored alongside the objects themselves, typically as a small metadata file on the same drives (`xl.json` in older releases; current releases use `xl.meta`), which is written synchronously. MinIO organizes storage into server pools, and in larger deployments, these can be federated.
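To make the overhead comparison concrete, here is a minimal arithmetic sketch. The 12 data + 4 parity layout and the 3-copy replication figure are illustrative assumptions, not MinIO defaults, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureLayout:
    data_shards: int    # shards carrying object data
    parity_shards: int  # shards carrying Reed-Solomon parity

    @property
    def total_shards(self) -> int:
        return self.data_shards + self.parity_shards

    @property
    def raw_per_usable(self) -> float:
        """Raw bytes written per byte of user data."""
        return self.total_shards / self.data_shards

    @property
    def tolerated_losses(self) -> int:
        """Shards that can be lost while the object stays readable."""
        return self.parity_shards


def replication_raw_per_usable(copies: int) -> float:
    """Full replication writes one raw byte per copy of each user byte."""
    return float(copies)


# Illustrative layout only: 12 data shards plus 4 parity shards.
layout = ErasureLayout(data_shards=12, parity_shards=4)
print(f"erasure coding: {layout.raw_per_usable:.2f}x raw, "
      f"survives {layout.tolerated_losses} lost shards")
print(f"3-way replication: {replication_raw_per_usable(3):.2f}x raw, "
      f"survives 2 lost copies")
```

Under these assumptions the erasure-coded layout stores roughly a third more raw data than user data while surviving four lost shards, whereas three-way replication stores three times the user data and survives two lost copies.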

MinIO claims strict read-after-write and list-after-write consistency for all I/O operations in both standalone and distributed modes, provided it runs on POSIX-compliant filesystems like XFS, ZFS, or BTRFS that honor O_DIRECT and fdatasync semantics (ext4 is discouraged due to potential consistency trade-offs).
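One way to probe this claim against your own deployment is a small write-then-read-then-list check. The sketch below assumes a client that exposes the boto3-style `put_object`, `get_object`, and `list_objects_v2` calls. `InMemoryS3` is a hypothetical stand-in so the example runs without a server, and the bucket and key names are placeholders.

```python
import io


class InMemoryS3:
    """Hypothetical in-memory stand-in exposing three boto3-style methods."""

    def __init__(self):
        self._objects = {}

    def put_object(self, Bucket, Key, Body):
        self._objects[(Bucket, Key)] = bytes(Body)

    def get_object(self, Bucket, Key):
        return {"Body": io.BytesIO(self._objects[(Bucket, Key)])}

    def list_objects_v2(self, Bucket, Prefix=""):
        keys = sorted(
            k for (b, k) in self._objects if b == Bucket and k.startswith(Prefix)
        )
        return {"Contents": [{"Key": k} for k in keys]}


def check_read_after_write(client, bucket, key, payload):
    """Write an object, then immediately read it back and list it."""
    client.put_object(Bucket=bucket, Key=key, Body=payload)
    body = client.get_object(Bucket=bucket, Key=key)["Body"].read()
    listing = client.list_objects_v2(Bucket=bucket, Prefix=key)
    # A real S3 response omits "Contents" when nothing matches the prefix.
    listed_keys = [entry["Key"] for entry in listing.get("Contents", [])]
    return body == payload and key in listed_keys


result = check_read_after_write(InMemoryS3(), "demo-bucket", "reports/a.txt", b"hello")
print("read-after-write and list-after-write held:", result)
```

Passing a real S3 client in place of `InMemoryS3()` runs the same check against a live endpoint. A single pass does not prove consistency, but a failure is a useful signal.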

![MinIO Architecture [10]](/blog/minio-vs-ceph-distributed-storage-solutions-comparison/1.png)

Key Features

- S3 API Compatibility: A primary selling point, allowing many S3-native applications to use MinIO as a backend.
- High Performance: Optimized for high throughput and low latency, particularly for large objects.
- Simplicity: Relatively easy to set up and manage compared to more complex systems.
- Cloud-Native: Well-suited for containerized environments and orchestration with Kubernetes, often deployed using the MinIO Operator.
- Data Protection: Erasure coding and bit-rot detection using HighwayHash.
- Security: Supports server-side encryption with external Key Management Service (KMS) via KES, client-side encryption, access policies (IAM-like), and TLS for data in transit.
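The bit-rot detection mentioned above comes down to storing a checksum with each shard and recomputing it on read. The following is a minimal sketch of that idea, not MinIO's implementation: BLAKE2b from the Python standard library stands in for HighwayHash, and the function names and record layout are hypothetical.

```python
import hashlib


def checksum(shard: bytes) -> str:
    # BLAKE2b is a stand-in here; HighwayHash is not in the standard library.
    return hashlib.blake2b(shard, digest_size=32).hexdigest()


def write_shard(shard: bytes) -> dict:
    """Store the shard together with the checksum computed at write time."""
    return {"data": shard, "checksum": checksum(shard)}


def verify_shard(stored: dict) -> bool:
    """Recompute the checksum on read and compare it with the stored one."""
    return checksum(stored["data"]) == stored["checksum"]


stored = write_shard(b"object data shard")
corrupted = dict(stored, data=b"object dat\x00 shard")  # simulate silent corruption

print("intact shard verifies:", verify_shard(stored))
print("corrupted shard verifies:", verify_shard(corrupted))
```

When a shard fails verification, an erasure-coded system can rebuild it from the remaining data and parity shards instead of returning corrupt bytes.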

Understanding Ceph

Ceph is a mature, highly scalable, and unified distributed storage system. Unlike MinIO's singular focus on object storage, Ceph provides object, block, and file storage capabilities from a single cluster.

Architecture & Core Concepts

The foundation of Ceph is the Reliable Autonomic Distributed Object Store (RADOS). RADOS manages the distribution and replication of data objects across the cluster. A key component is the CRUSH (Controlled Replication Under Scalable Hashing) algorithm, which deterministically calculates data placement without relying on a central lookup table. This enables Ceph to scale massively and handle data rebalancing and recovery autonomously.
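The idea of computing placement instead of looking it up can be illustrated with rendezvous (highest-random-weight) hashing. This is not the CRUSH algorithm, which also accounts for device weights and a hierarchy of failure domains. It is only a small sketch of deterministic, table-free placement, and the OSD names and function names are hypothetical.

```python
from __future__ import annotations

import hashlib


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick the `replicas` highest-scoring OSDs for this object."""
    ranked = sorted(osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


osds = [f"osd.{i}" for i in range(6)]
placement = place("bucket/photo.jpg", osds)
print("placement:", placement)

# Any client with the same OSD list computes the same answer, in any order.
same_from_other_client = place("bucket/photo.jpg", list(reversed(osds)))

# Removing an OSD that does not hold this object leaves its placement unchanged.
unused = next(osd for osd in osds if osd not in placement)
after_removal = place("bucket/photo.jpg", [osd for osd in osds if osd != unused])
```

Because every client derives the same answer from the same cluster description, no central directory has to be consulted on the data path, which is the property the paragraph above attributes to CRUSH.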

A Ceph cluster consists of several key daemons:

- OSDs (Object Storage Daemons): Store data on local drives, handle replication, recovery, and rebalancing. They report status to Monitors.
- Monitors (MONs): Maintain the master copy of the cluster map, which describes the cluster topology and state. They use Paxos for consensus.
- Managers (MGRs): Provide an endpoint for external monitoring and management systems, and host modules for additional functionality (e.g., Ceph Dashboard).
- RADOS Gateway (RGW): Provides an S3 and Swift-compatible object storage interface on top of RADOS.
- RADOS Block Device (RBD): Provides network block devices, often used for virtual machine storage.
- Ceph File System (CephFS): Provides a POSIX-compliant distributed file system, requiring Metadata Servers (MDS).

![Ceph Architecture [11]](/blog/minio-vs-ceph-distributed-storage-solutions-comparison/2.png)

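Ceph ensures strong consistency for acknowledged write operations at the RADOS level. When data is written to a replicated pool, the primary OSD forwards it to the other OSDs in the placement group's acting set and acknowledges the write to the client only after those replicas have persisted it. The pool's `min_size` setting defines the minimum number of replicas that must be available for the placement group to accept I/O at all.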

Key Features

- Unified Storage: Offers object, block, and file storage from one system.
- Massive Scalability: Designed to scale from terabytes to exabytes.
- High Availability & Durability: Achieved through data replication (default) or erasure coding, self-healing capabilities, and fault tolerance domains defined by CRUSH.
- Data Services: Supports snapshots, thin provisioning (RBD), and various access methods.
- Mature Ecosystem: Extensive documentation, a large community, and tools like the Ceph Dashboard for management.
- Security: Uses cephx for internal authentication. RGW supports various authentication methods (Keystone, LDAP) and server-side encryption. OSD data can be encrypted at rest (LUKS).

Side-by-Side Comparison

| Feature | MinIO | Ceph (RADOS/RGW for S3) |
|---|---|---|
| Primary Storage Type | Object (S3 API) | Object (S3/Swift via RGW), Block (RBD), File (CephFS) |
| Architecture | Simpler, focused on object storage. Distributed erasure-coded sets. | Complex, unified. RADOS core, CRUSH for placement, various daemon types. |
| Data Protection | Erasure Coding, Bit-rot detection. | Replication (default), Erasure Coding. Scrubbing for consistency. |
| Consistency (S3 Ops) | Claims strong read-after-write/list-after-write for its operations. | Strong consistency at RADOS level. RGW S3 behavior influenced by S3 API eventual consistency for certain operations (e.g., listings, cross-region). |
| Scalability | Horizontally scalable server pools. | Massively scalable via RADOS and CRUSH. |
| Performance (Object) | Generally higher raw throughput & lower latency in synthetic tests. | Consistent performance for complex workloads, good for mixed use. |
| Deployment Complexity | Relatively simple, lightweight. | More complex, requires careful planning and understanding of components. |
| Management | MinIO Console, mc CLI. | Ceph Dashboard, ceph CLI, cephadm/Rook for deployment/management. |
| Hardware | Commodity hardware, XFS/ZFS/BTRFS recommended, benefits from NVMe. Needs exclusive drive access. | Commodity hardware. Specific recommendations for MONs, OSDs (CPU, RAM, Network). BlueStore for OSD backend. |
| S3 API Compatibility | Core focus, high compatibility. Some user-reported deviations in behavior for specific edge cases or POSIX-like interpretations. | Broad S3 compatibility via RGW, uses s3-tests for verification. |
| Licensing | AGPLv3 + Commercial License. | Primarily LGPL for core components, Apache 2.0 for some tools. |

Use Cases & Best Practices

MinIO is often a strong choice for:

- Cloud-Native Applications: Its S3 compatibility and Kubernetes integration make it ideal for microservices and applications designed for object storage.
- AI/ML Workloads: High throughput is beneficial for data lakes supporting AI/ML pipelines.
- High-Performance Object Storage Needs: When raw speed for object access is paramount.
- Edge Computing: Its lightweight nature can be advantageous for edge deployments.
- Tiered storage for data pipelines: Systems requiring an S3-compatible layer for offloading data can leverage MinIO's performance.

Best Practices for MinIO: Use recommended filesystems like XFS. Ensure adequate network bandwidth. For production, carefully consider hardware (NVMe for performance-critical workloads) and redundancy (appropriate erasure coding parity). Employ tools like KES for secure external key management.

Ceph excels in scenarios requiring:

- Unified Storage: When you need object, block, and file storage from a single, centrally managed cluster.
- Large-Scale Cloud Infrastructure: Powering private or public clouds (e.g., OpenStack).
- Virtual Machine Storage: RBD is a popular backend for KVM and other hypervisors.
- Big Data Analytics: CephFS or RGW can store large datasets for analytics platforms.
- Backup and Archive: Its scalability and data protection options make it suitable for long-term storage.

Best Practices for Ceph: Plan your CRUSH hierarchy carefully to match your fault domains. Separate public (client-facing) and cluster (OSD internal) networks. Monitor cluster health ( ceph health ) and utilization closely. Use cephadm or Rook for modern deployments. Ensure sufficient RAM and CPU for MON and OSD nodes, especially with NVMe.
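Health monitoring is easy to script. The sketch below parses a JSON health report and extracts the overall status and the names of any active checks. It assumes the report has top-level `status` and `checks` fields, which is the shape recent Ceph releases are expected to emit for `ceph health --format json`; verify this against your release. The sample string is made up for illustration and `summarize_health` is a hypothetical helper.

```python
from __future__ import annotations

import json


def summarize_health(raw_json: str) -> tuple[str, list[str]]:
    """Return the overall status and the sorted names of active health checks."""
    report = json.loads(raw_json)
    status = report.get("status", "UNKNOWN")
    checks = sorted(report.get("checks", {}))
    return status, checks


# Made-up sample; in practice this would be the captured output of
# `ceph health --format json` (assumed shape, check your release).
sample = json.dumps(
    {
        "status": "HEALTH_WARN",
        "checks": {"OSD_DOWN": {"severity": "HEALTH_WARN"}},
    }
)

status, checks = summarize_health(sample)
print(status, checks)
```

A wrapper like this can feed an alerting system that pages only when the status leaves `HEALTH_OK`.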

Integration with Data Ecosystems

Both MinIO and Ceph RGW, due to their S3 compatibility, can serve as storage backends for various data pipeline and processing systems that support S3 for tiered storage or as a data lake.

MinIO has published benchmarks demonstrating its capability as a high-performance tiered storage backend for stream processing platforms, highlighting its ability to decouple storage and compute effectively.

Ceph RGW can also be configured for such use cases by providing the S3 endpoint, bucket, and credentials to the relevant system. While specific public benchmarks for Ceph RGW in this exact role are less common than for MinIO, its general S3 compatibility allows for this integration.
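From the client's side, pointing an S3 SDK at MinIO or at Ceph RGW differs only in the endpoint and credentials. Here is a minimal sketch using boto3's `endpoint_url` parameter. The hostnames, ports, and credentials are placeholders, and the helper names are hypothetical. Path-style addressing is used on the assumption that the endpoint has no wildcard DNS for bucket subdomains.

```python
def s3_client_kwargs(endpoint_url, access_key, secret_key, region="us-east-1"):
    """Build keyword arguments for boto3.client() for an S3-compatible endpoint."""
    return {
        "service_name": "s3",
        "endpoint_url": endpoint_url,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region_name": region,
    }


def make_client(kwargs):
    """Create the client; boto3 is imported lazily so the sketch loads without it."""
    import boto3
    from botocore.config import Config

    # Path-style requests look like http://host:port/bucket/key.
    return boto3.client(config=Config(s3={"addressing_style": "path"}), **kwargs)


# Placeholder endpoints and credentials; substitute your own.
minio_kwargs = s3_client_kwargs(
    "http://minio.example.internal:9000", "ACCESS_KEY", "SECRET_KEY"
)
rgw_kwargs = s3_client_kwargs(
    "http://rgw.example.internal:8080", "ACCESS_KEY", "SECRET_KEY"
)
```

Calling `make_client(minio_kwargs)` or `make_client(rgw_kwargs)` returns a standard boto3 S3 client, so the application code that uses it does not change when the backend does.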

Common Issues & Considerations

MinIO:

- Licensing: The AGPLv3 license for the open-source version has implications for some businesses, especially if modifications are made or if it's used in a SaaS offering. A commercial license is available.
- Data Rebalancing: MinIO does not automatically rebalance old objects across new server pools when expanding; this is typically handled for new object placements or may require manual intervention.
- S3 API Nuances: While highly S3-compatible, some subtle differences in API behavior or interpretation compared to AWS S3 have been noted by users, particularly concerning directory-like operations or specific error handling.
- Consistency Guarantees: MinIO's strong consistency claim relies on the underlying filesystem behaving correctly and its own distributed locking. In scenarios of complete server pool failure in a multi-pool setup, MinIO may halt I/O to the entire deployment to maintain consistency, prioritizing it over availability.

Ceph:

- Complexity: Ceph's power and flexibility come at the cost of higher operational complexity and a steeper learning curve.
- Resource Overhead: It can be more resource-intensive than MinIO, especially for smaller deployments, due to its multiple daemon types and RADOS overhead.
- Performance Tuning: Achieving optimal performance across its different storage interfaces (RGW, RBD, CephFS) often requires careful tuning and understanding of the workload and Ceph internals.
- Upgrades: While non-disruptive upgrades are possible, they need to be planned and executed carefully in large clusters.

Conclusion

Choosing between MinIO and Ceph depends heavily on your specific requirements.

Choose MinIO if:

- You primarily need high-performance, S3-compatible object storage.
- Simplicity of deployment and management is a high priority.
- You are building cloud-native applications, especially on Kubernetes.
- Your workload benefits from very high throughput for large objects.
- The AGPLv3 license (or the cost of a commercial license) aligns with your project or business model.

Choose Ceph if:

- You need a unified storage solution offering object, block, and/or file storage.
- Massive scalability and extreme data durability are paramount.
- You are building large-scale infrastructure (e.g., private cloud, HPC storage).
- You require advanced data services and fine-grained control over data placement and resilience.
- You have the operational expertise or resources to manage a more complex distributed system.

Both MinIO and Ceph are powerful open-source storage solutions. MinIO offers a streamlined, high-performance path for object storage, while Ceph provides a versatile, feature-rich platform for diverse storage needs at scale. Carefully evaluate your workload characteristics, scalability requirements, operational capabilities, and long-term storage strategy before making a decision.

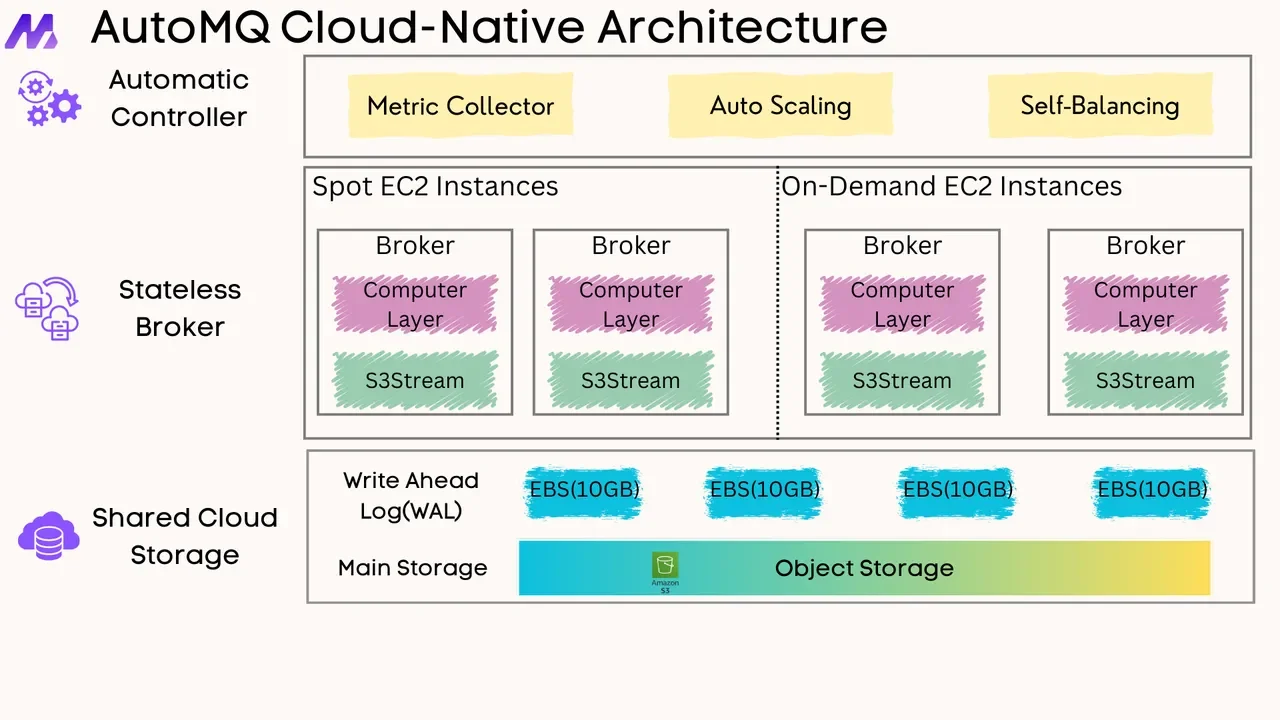

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging