Overview

Apache Kafka has become the backbone of real-time data streaming for countless organizations. As data volumes grow and organizations need geographically distributed systems, disaster recovery, and data sharing across environments, robust replication between Kafka clusters becomes crucial. Two popular solutions for this task are Apache Kafka's own MirrorMaker 2 (MM2) and Confluent Replicator. This blog post compares them in detail to help you understand their concepts, architecture, features, and best practices.

Core Concepts and Architecture

Understanding how these tools are built and operate is key to choosing the right one for your needs.

Apache Kafka MirrorMaker 2 (MM2)

MirrorMaker 2 was introduced as a significant improvement over the original MirrorMaker (MM1) and is designed to replicate data and topic configurations between Kafka clusters. It is built on the Kafka Connect framework, which provides a scalable and fault-tolerant way to stream data into and out of Kafka.

![MirrorMaker 2 Architecture [11]](/blog/mirrormaker-vs-confluent-replicator-kafka-data-replication-comparison/1.png)

MM2 employs a set of Kafka Connect connectors to perform its tasks:

- MirrorSourceConnector: This connector fetches data from topics in the source Kafka cluster and produces it to the target Kafka cluster. It also handles the replication of topic configurations and ACLs.
- MirrorCheckpointConnector: This connector emits "checkpoints" that track consumer group offsets in the source cluster. These checkpoints are crucial for translating and synchronizing consumer group offsets to the target cluster, enabling consumers to resume processing from the correct point after a failover or migration.
- MirrorHeartbeatConnector: This connector emits heartbeats to both source and target clusters. These heartbeats can be used to monitor the health and connectivity of the replication flow and to confirm that MM2 instances are active.

![MirrorMaker 2 Architecture [11]](/blog/mirrormaker-vs-confluent-replicator-kafka-data-replication-comparison/2.png)

MM2 creates several internal topics to manage its operations. Examples include mm2-offset-syncs.<target-cluster-alias>.internal (kept in the source cluster by default), <source-cluster-alias>.checkpoints.internal (in the target cluster), and heartbeats. By default, MM2 renames topics in the target cluster by prefixing them with the source cluster's alias (e.g., sourceClusterAlias.myTopic). This prevents topic name collisions and aids routing, especially in complex multi-cluster topologies. If identical topic names are required across clusters, this behavior can be overridden with the IdentityReplicationPolicy.
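The prefixing rule above is also what lets MM2 notice when a topic would travel back to a cluster it came from. The sketch below is a minimal Python model of that idea, not MM2's Java code. The function names are hypothetical, and it assumes the default "." separator (configurable in MM2 via replication.policy.separator). With identity naming, topic names carry no alias, so a name-based check like is_cycle has nothing to inspect.

```python
# A minimal Python model of MM2's topic naming; the real ReplicationPolicy
# classes are Java, and these function names are hypothetical.
from typing import Optional

SEPARATOR = "."  # assumed default value of replication.policy.separator


def default_remote_topic(source_alias: str, topic: str) -> str:
    """DefaultReplicationPolicy-style name: prefix with the source alias."""
    return f"{source_alias}{SEPARATOR}{topic}"


def identity_remote_topic(source_alias: str, topic: str) -> str:
    """IdentityReplicationPolicy-style name: keep the topic name unchanged."""
    return topic


def topic_source(topic: str) -> Optional[str]:
    """Alias encoded in a prefixed topic name, or None if there is no prefix."""
    head, sep, _ = topic.partition(SEPARATOR)
    return head if sep else None


def upstream_topic(topic: str) -> Optional[str]:
    """Topic name with one alias prefix stripped, or None if there is no prefix."""
    _, sep, tail = topic.partition(SEPARATOR)
    return tail if sep else None


def is_cycle(topic: str, target_alias: str) -> bool:
    """True if the prefixes show the topic already passed through target_alias."""
    source = topic_source(topic)
    if source is None:
        return False
    if source == target_alias:
        return True
    upstream = upstream_topic(topic)
    if upstream is None or upstream == topic:
        return False
    return is_cycle(upstream, target_alias)
```

For example, "us-east.us-west.orders" should not be replicated into us-west, because one of its prefixes already names that cluster. A caveat of this naive model: a local topic such as "orders.v1" would be read as coming from a cluster called "orders".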

Confluent Replicator

Confluent Replicator is a commercial offering from Confluent, designed for robust, enterprise-grade replication between Kafka clusters. Like MM2, it is built on the Kafka Connect framework: it runs as a source connector within a Kafka Connect cluster (or as the standalone Replicator executable), typically deployed near the destination Kafka cluster.

![Confluent Replicator Architecture [12]](/blog/mirrormaker-vs-confluent-replicator-kafka-data-replication-comparison/3.png)

Key architectural aspects of Confluent Replicator include:

- Data and Metadata Replication: Replicator copies messages, topic configurations (including partition counts and replication factors, with some caveats), and consumer group offset translations.
- Schema Registry Integration: A significant feature of Replicator is its integration with Confluent Schema Registry. It can migrate schemas associated with topics and handle schema translation. For Confluent Platform 7.0.0 and later, Confluent recommends Cluster Linking over Replicator's schema translation feature for schema migration in certain use cases. Replicator still supports schema migration, which is especially useful for older platform versions or specific scenarios. When migrating schemas continuously, Replicator can be used with Schema Registry modes such as READONLY on the source and IMPORT on the destination.
- Provenance Headers: To prevent circular replication in active-active or bi-directional setups, Replicator (version 5.0.1+) can add provenance headers to messages (enabled with provenance.header.enable=true). These headers let it identify and drop messages that have already been replicated, avoiding infinite loops.
- Licensing: Replicator is a proprietary, licensed component of Confluent Platform.
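The provenance-header idea can be illustrated with a small model. Each replicated record remembers which clusters it has passed through, and a record is dropped instead of being written back to one of them. This is a simplified sketch under stated assumptions. The header key "x-provenance" and the one-cluster-per-header layout are hypothetical, and Replicator's actual header format is not reproduced here.

```python
# Simplified model of provenance-header loop prevention. The header key and
# its contents are hypothetical; Replicator's real header format differs.
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

PROVENANCE_KEY = "x-provenance"  # hypothetical header key


@dataclass
class Record:
    value: str
    headers: List[Tuple[str, str]] = field(default_factory=list)


def replicate(record: Record, source_cluster: str, dest_cluster: str) -> Optional[Record]:
    """Return the copy to write to dest_cluster, or None to drop the record."""
    visited = [v for k, v in record.headers if k == PROVENANCE_KEY]
    if dest_cluster in visited:
        # The record already passed through the destination: copying it again
        # would start an infinite loop in an active-active setup.
        return None
    headers = list(record.headers)  # copy, so the input record is untouched
    if source_cluster not in visited:
        headers.append((PROVENANCE_KEY, source_cluster))
    return Record(record.value, headers)
```

In an active-active pair, a record produced in dc-a is copied to dc-b with a dc-a entry. The reverse flow from dc-b to dc-a then sees that entry and drops the record.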

Feature Comparison: MirrorMaker 2 vs. Confluent Replicator

Let's compare these tools across several key features:

| Feature | Apache Kafka MirrorMaker 2 (MM2) | Confluent Replicator |
|---|---|---|
| Underlying Framework | Kafka Connect | Kafka Connect |
| Licensing | Open source (Apache License 2.0) | Commercial (part of a Confluent Platform subscription) |
| Topic Configuration Sync | Yes, syncs topic configurations (toggled with sync.topic.configs.enabled) and picks up partition-count increases. The replication factor of newly created remote topics comes from MM2's replication.factor setting rather than from the source, and it cannot exceed the number of brokers in the target cluster. | Yes, copies topic configurations. Can keep partition counts and replication factors matching the source (if destination cluster capacity allows). |
| ACL Sync | Yes, syncs topic ACLs (toggled with sync.topic.acls.enabled). Principals (users or service accounts) are not created in the target, ALLOW WRITE ACLs are not synced, and ALLOW ALL is downgraded to READ so that only MM2 writes to remote topics. | Leverages Kafka security and requires appropriate ACLs/RBAC for its own operations. ACLs themselves are typically managed at the cluster level rather than replicated as metadata the way MM2 does. |
| Consumer Offset Sync | Yes, via the MirrorCheckpointConnector and the offset-syncs internal topic. With sync.group.offsets.enabled=true (Kafka 2.7+), translated offsets are written directly to __consumer_offsets in the target. | Yes, translates consumer offsets (primarily for Java consumers using the standard offset commit mechanism) and writes them to __consumer_offsets in the destination. |
| Topic Renaming/Prefixing | Yes, prefixes topics with the source cluster alias by default (DefaultReplicationPolicy). IdentityReplicationPolicy disables prefixing. | Yes, supports topic renaming via topic.rename.format, which can use variables such as ${topic}, so prefixes and suffixes are possible. |
| Schema Registry Integration | No direct integration. Schemas must be managed independently on the source and target Schema Registries. Some managed Kafka services built on MM2 explicitly state that their MM2 offering does not sync schemas. | Yes, tight integration with Confluent Schema Registry. Supports schema migration and subject translation (e.g., DefaultSubjectTranslator or custom translators) and handles schema ID mapping. |
| Loop Prevention | Primarily through default topic prefixing. IdentityReplicationPolicy in bi-directional setups requires careful design to avoid loops. | Built in via provenance headers (Replicator 5.0.1+). |
| Data Consistency | Generally at-least-once, because offset commits are asynchronous relative to data replication. Some managed services offer configurations aimed at exactly-once semantics (EOS) via specific flags. | At-least-once delivery. |
| Monitoring | Standard Kafka Connect JMX metrics, plus MM2-specific metrics such as replication-latency-ms and record-age-ms. Heartbeats can be used for liveness checks. | Extensive monitoring via Confluent Control Center (C3), including latency, message rates, and lag. Also exposes JMX metrics and a Replicator Monitoring Extension REST API. |
| Configuration Management | Via an mm2.properties file in dedicated mode (connect-mirror-maker.sh), or via the Kafka Connect REST API when the MM2 connectors run on an existing Connect cluster. | Via Kafka Connect worker configuration files or the REST API, with a rich set of Replicator-specific options. |
| Ease of Use & Setup | Can be complex to configure optimally, especially for advanced scenarios like active-active. Requires an understanding of Kafka Connect. | Can be simpler for common use cases on Confluent Platform, thanks to Control Center integration and well-defined configurations. Still requires Kafka Connect knowledge. |
| Multi-DC Topologies | Supports topologies such as hub-and-spoke and DR. Active-active requires careful planning to manage offsets and potential re-consumption. | Designed for multi-DC deployments, including active-passive, active-active, and aggregation. Provenance headers simplify active-active. |
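For MM2's dedicated mode, most of the behavior compared above is driven by a single properties file. A typical launch is bin/connect-mirror-maker.sh mm2.properties. The sketch below renders a minimal one-way configuration from Python so the keys are visible in one place. The cluster aliases, hosts, and values are placeholders. The keys are standard MM2 settings, but tune them for your version and environment.

```python
# Sketch: render a minimal mm2.properties for one-way replication in MM2's
# dedicated mode. Cluster aliases, hosts, and values are placeholders.
def build_mm2_properties(source: str, target: str,
                         source_bootstrap: str, target_bootstrap: str,
                         topics: str = ".*") -> str:
    flow = f"{source}->{target}"
    props = {
        "clusters": f"{source}, {target}",
        f"{source}.bootstrap.servers": source_bootstrap,
        f"{target}.bootstrap.servers": target_bootstrap,
        f"{flow}.enabled": "true",
        f"{flow}.topics": topics,  # comma-separated regexes
        "sync.topic.configs.enabled": "true",
        "emit.checkpoints.enabled": "true",
        "sync.group.offsets.enabled": "true",  # Kafka 2.7+
        "replication.factor": "3",  # must not exceed the target broker count
    }
    return "".join(f"{key} = {value}\n" for key, value in props.items())


if __name__ == "__main__":
    print(build_mm2_properties("primary", "backup",
                               "primary-kafka:9092", "backup-kafka:9092",
                               "orders.*, payments"))
```

A bi-directional setup would add a second flow (backup->primary.enabled = true) with its own topics setting. That is where the loop-prevention considerations in the table start to matter.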

How They Work: Data Flow and Offset Management

MirrorMaker 2

- Data Replication: The MirrorSourceConnector reads messages from the source-cluster topics selected by its topics filter and produces them to topics in the target cluster (prefixed with the source alias by default).
- Configuration Sync: The MirrorSourceConnector also periodically checks the source cluster for new topics, configuration changes (if sync.topic.configs.enabled=true), and ACL changes (if sync.topic.acls.enabled=true), and applies them to the target cluster.
- Offset Tracking & Sync:
  - The MirrorSourceConnector emits OffsetSync records to the internal mm2-offset-syncs.<target-cluster-alias>.internal topic. Each record maps an offset in a source topic partition (upstream) to the offset of the corresponding replicated message in the target (downstream).
  - The MirrorCheckpointConnector consumes these OffsetSync records and uses them to translate source consumer group offsets into their equivalents in the target cluster.
  - If sync.group.offsets.enabled=true (Kafka 2.7+), the MirrorCheckpointConnector also writes the translated offsets directly into the __consumer_offsets topic in the target cluster, for consumer groups that have no active members there. This lets consumers in the target cluster pick up where their counterparts left off in the source cluster.
- Heartbeats: The MirrorHeartbeatConnector periodically emits heartbeats to confirm connectivity and active replication.
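The offset translation step can be made concrete with a small model. Each offset sync pairs an upstream (source) offset with the downstream (target) offset of the same replicated record. A committed source offset is translated using the closest sync at or before it. This is a simplified sketch, not MM2's implementation, whose exact algorithm has changed across Kafka versions. It follows the conservative rule of stepping one past the synced record when there is no exact match. That may re-deliver a few records (at-least-once) but never skips one.

```python
# Simplified model of MM2-style offset translation for one topic partition.
import bisect
from typing import List, Optional, Tuple


def translate_offset(syncs: List[Tuple[int, int]], committed: int) -> Optional[int]:
    """Translate a committed source offset to a safe target offset.

    syncs: (upstream_offset, downstream_offset) pairs sorted by upstream offset.
    """
    upstreams = [upstream for upstream, _ in syncs]
    i = bisect.bisect_right(upstreams, committed) - 1
    if i < 0:
        return None  # no sync at or before this offset: cannot translate safely
    upstream, downstream = syncs[i]
    if upstream == committed:
        return downstream  # exact match: same next record on both sides
    # The consumer has already read the synced record, so resuming just after
    # its copy never skips data, though it may re-read a few records.
    return downstream + 1
```

For instance, with syncs [(0, 0), (100, 40), (250, 90)], a group committed at 100 resumes at 40 in the target, and a group committed at 180 resumes at 41.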

Confluent Replicator

- Data Replication: Replicator's source connector reads messages from the specified topics in the source cluster, preserves message timestamps by default, and produces the messages to the target cluster. If configured, it adds provenance headers.
- Topic Management: Replicator can automatically create topics in the destination cluster if they don't exist. It attempts to match the source topic's partition count and replication factor (if topic.auto.create=true and destination capacity allows). It can also rename topics using topic.rename.format.
- Schema Migration: If integrated with Schema Registry, Replicator reads schemas from the source registry and writes them to the destination registry. It translates subject names when topic.rename.format is used and an appropriate schema.subject.translator.class is configured. Public documentation says less about how ongoing schema evolution is handled during active replication, but it relies on the destination Schema Registry's compatibility rules.
- Offset Translation: Replicator monitors committed consumer offsets in the source cluster, translates them to the corresponding offsets in the target cluster based on message timestamps, and writes them to the __consumer_offsets topic in the destination cluster. This applies primarily to Java Kafka clients that use the standard offset commit mechanism. Those consumers must be configured with Confluent's ConsumerTimestampsInterceptor, which records the timestamps Replicator uses for translation.
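Because Replicator preserves message timestamps, timestamp-based translation can be pictured as a lookup similar to Kafka's offsetsForTimes: find the earliest destination offset whose timestamp is at or after the last timestamp the consumer processed. The sketch below is a simplified model, not Replicator's code. The function name is hypothetical. It also assumes timestamps are non-decreasing within the destination partition, which CreateTime timestamps do not strictly guarantee.

```python
# Simplified model of timestamp-based offset translation for one partition.
import bisect
from typing import List, Optional, Tuple


def translate_by_timestamp(dest: List[Tuple[int, int]],
                           last_consumed_ts: int) -> Optional[int]:
    """Earliest destination offset whose timestamp is >= last_consumed_ts.

    dest: (offset, timestamp_ms) pairs in offset order, with timestamps
    assumed to be non-decreasing (an assumption of this sketch).
    """
    timestamps = [ts for _, ts in dest]
    i = bisect.bisect_left(timestamps, last_consumed_ts)
    if i == len(dest):
        return None  # replication has not caught up to this point yet
    # Using >= (not >) re-delivers records sharing the boundary timestamp,
    # which keeps the translation at-least-once rather than lossy.
    return dest[i][0]
```

Records that share a timestamp make the boundary ambiguous. Resolving it toward the earlier offset trades a few duplicates for never skipping data.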

Common Issues and Considerations

- Data Duplication (At-Least-Once Semantics): Both MM2 and Replicator generally provide at-least-once delivery. In certain failure scenarios (e.g., a Replicator or MM2 task failing after producing messages but before committing its source consumer offsets), messages might be re-replicated, leading to duplicates in the target cluster. Applications consuming from replicated topics should ideally be idempotent or include deduplication logic.
- Configuration Complexity:
  - MM2: Fine-tuning MM2 for performance and reliability (e.g., number of tasks, buffer sizes, and batch sizes for the embedded producer and consumer) can be complex. Correctly configuring offset-syncs.topic.location (source or target) is crucial for DR scenarios.
  - Replicator: Although often simpler to start with on Confluent Platform, advanced configurations such as custom subject translators or complex topic routing rules still require careful setup.
- Resource Management: Both tools run on Kafka Connect and require sufficient resources (CPU, memory, network bandwidth) for the Connect workers. Under-provisioning can lead to high replication lag.
- Replication Lag: Monitoring replication lag is critical. High lag can be caused by network latency between clusters, insufficient resources for Connect workers, misconfigured Connect tasks, or overloaded source or target Kafka clusters.
- Active-Active Challenges:
  - MM2: Requires careful planning to avoid data duplication and keep offset translation consistent. Topic prefixing is the default way to keep data streams distinct, but if IdentityReplicationPolicy is used, applications or external mechanisms might be needed to prevent loops in complex setups.
  - Replicator: Simplified by provenance headers, but consumer offset management and application design still need careful consideration for seamless failover and failback.
- Schema Management (MM2): With MM2, schema evolution must be managed independently across clusters. This can be a significant operational overhead if not automated.
- Licensing Costs (Replicator): Confluent Replicator is a commercial product, and its cost can be a factor for some organizations.
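Deduplication in consumers has to key on something stable. A re-replicated duplicate gets a new offset in the target cluster, so target offsets cannot be used to detect it. One common approach is to have producers attach a unique event ID and let consumers skip IDs they have seen recently. The sketch below assumes such an ID exists. The Deduplicator class and the event "id" field are hypothetical, and the in-memory window is bounded, so duplicates that arrive after an ID has been evicted will pass through.

```python
# Sketch of an idempotent-consumer helper: remembers recently seen event IDs
# in a bounded LRU window. Assumes producers attach a unique ID per event.
from collections import OrderedDict


class Deduplicator:
    def __init__(self, capacity: int = 100_000):
        self.capacity = capacity
        self._seen: "OrderedDict[str, None]" = OrderedDict()

    def is_new(self, event_id: str) -> bool:
        """Return True the first time an ID is seen within the window."""
        if event_id in self._seen:
            self._seen.move_to_end(event_id)  # refresh its recency
            return False
        self._seen[event_id] = None
        if len(self._seen) > self.capacity:
            self._seen.popitem(last=False)  # evict the least recently seen ID
        return True


if __name__ == "__main__":
    dedup = Deduplicator(capacity=1000)
    events = [{"id": "e1"}, {"id": "e2"}, {"id": "e1"}]  # e1 re-replicated
    print([e["id"] for e in events if dedup.is_new(e["id"])])
```

For durable guarantees across restarts, the seen-ID set would need to live in the application's own store, ideally updated in the same transaction as the processing side effects.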

Best Practices

- Deployment Location:
  - MM2: It's generally recommended to run the MM2 Kafka Connect cluster in the target data center or environment. This is often called the "consume from remote, produce to local" pattern, and it can be more resilient to network issues between data centers.
  - Replicator: Similarly, Confluent recommends deploying Replicator in the destination data center, close to the destination Kafka cluster.
- Dedicated Connect Cluster: For critical replication flows, run MM2 or Replicator on a dedicated Kafka Connect cluster rather than sharing it with other Connect jobs. This provides resource isolation and simplifies tuning.
- Monitoring:
  - MM2: Monitor Kafka Connect JMX metrics (e.g., task status and throughput), MM2-specific metrics such as replication-latency-ms and record-age-ms, heartbeats, and Kafka broker metrics on both clusters.
  - Replicator: Use Confluent Control Center for comprehensive monitoring, and also monitor standard Kafka Connect JMX metrics. Key metrics include MBeans such as `kafka.connect.replicator:type=replicated-messages,topic=([-.\w]+),source=([-.\w]+),target=([-.\w]+)` for message lag and throughput.
- Capacity Planning: Ensure that the source and target Kafka clusters, as well as the Kafka Connect cluster, have adequate resources (brokers, disk, network, CPU, memory) to handle the replication load.
- Topic Filtering: Use include and exclude lists to replicate only the necessary topics. For MM2 these are topics and topics.exclude. For Replicator they are topic.whitelist or topic.regex, plus topic.blacklist. Avoid replicating all topics unless essential.
- Configuration Synchronization:
  - MM2: Understand which topic configurations are synced (sync.topic.configs.enabled) and be aware of limitations (e.g., the replication factor cannot exceed the number of brokers in the target cluster).
  - Replicator: Replicator also syncs topic configurations, but verify critical settings after topics are created.
- Failover Testing: Regularly test your disaster recovery and failover procedures to ensure that consumer applications can switch to the replicated cluster and resume processing from the correct offsets.
- Security: Secure communication between Kafka Connect and the Kafka clusters with TLS/SSL and SASL. Configure appropriate ACLs/RBAC for the MM2 or Replicator principals in both the source and target clusters.
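MM2's topic selection can be modeled as two comma-separated regex lists. A topic is replicated if it fully matches an entry in topics and matches nothing in topics.exclude. The sketch below mirrors that behavior in Python so a filter can be checked against real topic names before deployment. The helper names are hypothetical. The default exclude list is assumed to match MM2's documented default (internal, replica, and double-underscore topics), so confirm it for your Kafka version.

```python
# Sketch of MM2-style topic filtering with include/exclude regex lists.
import re

# Assumed to mirror MM2's default topics.exclude value; verify per version.
DEFAULT_EXCLUDE = r".*[\-\.]internal, .*\.replica, __.*"


def compile_list(spec: str) -> "re.Pattern[str]":
    """Compile a comma-separated regex list into one alternation pattern."""
    parts = [p.strip() for p in spec.split(",") if p.strip()]
    if not parts:
        return re.compile(r"(?!)")  # empty list: matches nothing
    return re.compile("|".join(f"(?:{p})" for p in parts))


def should_replicate(topic: str, include: str = ".*",
                     exclude: str = DEFAULT_EXCLUDE) -> bool:
    """Whole-name match, as MM2's regex filters require."""
    return (compile_list(include).fullmatch(topic) is not None
            and compile_list(exclude).fullmatch(topic) is None)
```

Because matching is against the whole name, an include entry of "payments" does not pull in "payments-eu". That makes filters easy to audit but easy to under-specify.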

Conclusion

Both MirrorMaker 2 and Confluent Replicator are powerful tools for Kafka data replication, each with its strengths and ideal use cases.

- MirrorMaker 2 is an excellent open-source choice for organizations looking for a flexible, Kafka-native solution. It's well suited to disaster recovery, data migration, and distributing data across clusters, especially when deep integration with a commercial schema registry isn't a primary concern or schema management is handled externally. Its learning curve can be steeper for complex configurations, and achieving exactly-once semantics often requires careful design or reliance on features from managed Kafka providers.
- Confluent Replicator, as a commercial offering, provides a more batteries-included experience, especially for users within the Confluent ecosystem. Its tight integration with Confluent Schema Registry, robust monitoring via Control Center, and built-in features like provenance headers for active-active setups make it attractive for enterprises that need comprehensive multi-datacenter replication with strong support. The licensing cost is a key consideration.

The choice between MM2 and Confluent Replicator depends on your specific technical requirements (like schema management needs), operational capabilities, existing Kafka ecosystem, and budget. Thoroughly evaluate your use cases against the features and considerations outlined in this blog to make an informed decision.

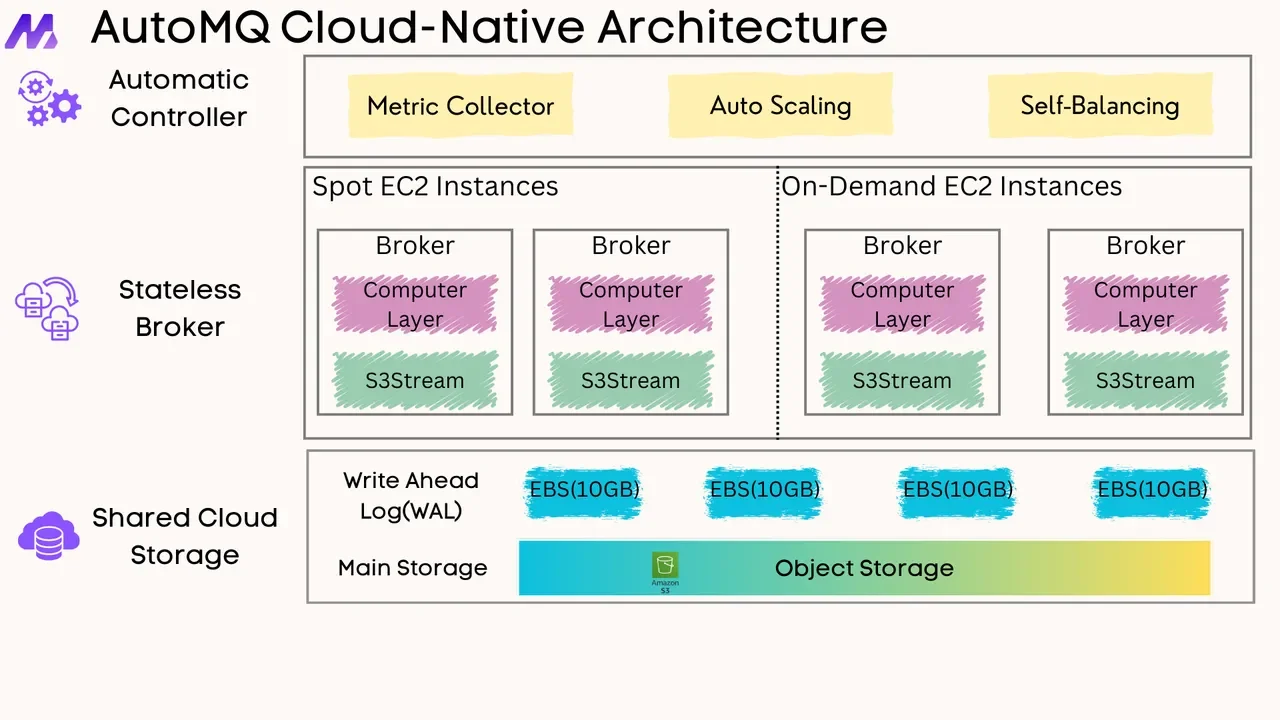

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS: 10x more cost-effective, no cross-AZ traffic cost, autoscaling in seconds, and single-digit-millisecond latency. AutoMQ's source code is now available on GitHub. Big companies worldwide are using AutoMQ; check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in Data Streaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging