Overview

Pub/Sub messaging and message queuing are foundational paradigms in distributed systems, each addressing distinct communication needs. This post explains both concepts and compares them side by side.

Core Concepts

Pub/Sub Messaging

Pub/Sub (Publish-Subscribe) is an asynchronous messaging model where publishers send messages to a logical channel (topic), and subscribers receive copies of messages based on their subscriptions. The system decouples producers and consumers, allowing multiple subscribers to process the same message simultaneously. Key components include:

- Topics: Logical channels for message categorization (e.g., "stock-updates").
- Subscriptions: Represent interests in specific topics, enabling message delivery to subscribers.
- Message Broker: Manages routing, persistence, and delivery (e.g., AutoMQ, Google Pub/Sub).

Pub/Sub excels in broadcasting events to multiple consumers, such as real-time notifications or data streaming pipelines.
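The fanout behavior described above can be sketched with a toy in-memory broker. This is a minimal illustration, not how any real broker is implemented: the `InMemoryBroker` class and its method names are hypothetical, and persistence, networking, and acknowledgements are left out.

```python
from collections import defaultdict


class InMemoryBroker:
    """Hypothetical toy broker: each subscription on a topic gets its own copy."""

    def __init__(self):
        # topic name -> {subscription name -> list of delivered messages}
        self._subscriptions = defaultdict(dict)

    def subscribe(self, topic, subscription):
        # Register interest in a topic; earlier messages are not replayed.
        self._subscriptions[topic].setdefault(subscription, [])

    def publish(self, topic, message):
        # Fan out: the publisher never names a subscriber, the broker
        # appends the message to every subscription's inbox.
        for inbox in self._subscriptions[topic].values():
            inbox.append(message)

    def inbox(self, topic, subscription):
        return list(self._subscriptions[topic][subscription])


broker = InMemoryBroker()
broker.subscribe("stock-updates", "dashboard")
broker.subscribe("stock-updates", "alerting")
broker.publish("stock-updates", {"symbol": "ACME", "price": 101.5})
```

Both the `dashboard` and `alerting` subscriptions end up with the same message, which is the one-to-many property that distinguishes Pub/Sub from a queue.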

Message Queuing

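Message Queuing employs a point-to-point model where producers send messages to a queue, and a single consumer processes each message. Queues typically deliver messages in FIFO (First-In-First-Out) order unless configured for priority handling, and hand each message to only one consumer at a time. Because unacknowledged messages can be redelivered, consumers should still expect occasional duplicates. Key features include: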

- Queues: Buffers storing messages until consumers retrieve them.
- Acknowledgements (ACKs): Ensure messages are processed before removal, enhancing reliability.
- Dead-Letter Queues (DLQs): Handle failed messages for later analysis.

Queues are ideal for task distribution, such as order processing systems where each task must be handled once.
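As a rough illustration of point-to-point delivery, the sketch below uses Python's standard `queue.Queue` as a stand-in for a real message queue and three threads as competing consumers. The order IDs and worker names are made up for the example.

```python
import queue
import threading

# Stand-in for a broker-managed queue holding six order tasks.
tasks = queue.Queue()
for order_id in range(6):
    tasks.put(order_id)

processed = []  # (worker name, order id) pairs
lock = threading.Lock()


def worker(name):
    # Each worker competes for the next message; a message taken by one
    # worker is never seen by the others.
    while True:
        try:
            order_id = tasks.get_nowait()
        except queue.Empty:
            return
        with lock:
            processed.append((name, order_id))
        tasks.task_done()


threads = [threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Which worker handles which order varies between runs, but every order appears in `processed` exactly once.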

Architectural Differences

| Aspect | Pub/Sub | Message Queuing |
|---|---|---|
| Messaging Pattern | One-to-many (broadcast) | One-to-one (point-to-point) |
| Decoupling | High (producers unaware of subscribers) | Moderate (producers know queue endpoints) |
| Scalability | Horizontal scaling for subscribers | Horizontal scaling via competing consumers |
| Reliability | Potentially lower (no ACKs by default) | Higher (ACKs ensure delivery) |
| Delivery Order | Per-subscriber order | Strict FIFO (configurable priorities) |
| Throughput | Higher (parallel processing) | Lower (sequential processing) |
| Use Cases | Real-time analytics, event streaming | Task queues, transactional workflows |

Mechanisms and Trade-offs

Pub/Sub Messaging Workflow

- Publishing: Producers send messages to a topic (e.g., "user-logins").
- Routing: The broker replicates messages to all active subscriptions.
- Delivery: Subscribers pull or receive pushed messages via streaming.
- Processing: Subscribers process messages asynchronously, often with at-least-once delivery guarantees.

![Pub/Sub Messaging Model](/blog/pubsub-messaging-vs-message-queuing/1.png)

Challenges:

- Message Duplication: Subscribers may receive duplicates during retries.
- Fanout Overhead: Broadcasting to thousands of subscribers increases latency.
- Flow Control: Subscribers must manage bursty traffic via throttling (e.g., limiting outstanding messages).
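To make the flow-control point concrete, here is a minimal sketch of a subscriber that caps the number of outstanding (delivered but not yet acknowledged) messages. The `FlowControlledSubscriber` class is hypothetical and only models the bookkeeping; real client libraries expose this as a configuration setting rather than code you write yourself.

```python
from collections import deque


class FlowControlledSubscriber:
    """Hypothetical subscriber that limits unacknowledged messages."""

    def __init__(self, backlog, max_outstanding):
        self._backlog = deque(backlog)      # messages waiting at the broker
        self._max_outstanding = max_outstanding
        self.outstanding = set()            # delivered but not yet acked

    def pull(self):
        # Take messages only while there is room under the limit.
        pulled = []
        while self._backlog and len(self.outstanding) < self._max_outstanding:
            message = self._backlog.popleft()
            self.outstanding.add(message)
            pulled.append(message)
        return pulled

    def ack(self, message):
        # Acknowledging a message frees one slot for the next pull.
        self.outstanding.discard(message)


subscriber = FlowControlledSubscriber(
    backlog=["m1", "m2", "m3", "m4", "m5"], max_outstanding=2
)
first_batch = subscriber.pull()   # fills both slots
subscriber.ack("m1")              # frees one slot
second_batch = subscriber.pull()  # only one more message fits
```

A burst of five messages is absorbed two at a time, so the consumer's memory and processing load stay bounded regardless of how fast publishers send.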

Message Queuing Workflow

- Enqueueing: Producers send messages to a queue (e.g., "order-payments").
- Dequeueing: A consumer retrieves and processes the message, sending an ACK upon success.
- Retries: Unacknowledged messages are re-queued after a visibility timeout.

![Message Queuing Model](/blog/pubsub-messaging-vs-message-queuing/2.png)
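The enqueue, dequeue, ACK, and retry steps of this workflow can be sketched as a small in-memory queue with a visibility timeout. The `VisibilityQueue` class below is a hypothetical simplification: time is passed in explicitly as `now` so the behavior is easy to follow, and the receipt IDs are plain counters.

```python
import itertools


class VisibilityQueue:
    """Hypothetical queue: a received message stays hidden until acked or timed out."""

    def __init__(self, visibility_timeout):
        self._visibility_timeout = visibility_timeout
        self._ready = []       # (receipt id, body) pairs waiting for delivery
        self._in_flight = {}   # receipt id -> (body, deadline)
        self._ids = itertools.count(1)

    def send(self, body):
        self._ready.append((next(self._ids), body))

    def receive(self, now):
        # Messages whose deadline passed without an ACK become visible again.
        expired = [rid for rid, (_, deadline) in self._in_flight.items() if deadline <= now]
        for rid in expired:
            body, _ = self._in_flight.pop(rid)
            self._ready.append((rid, body))
        if not self._ready:
            return None
        rid, body = self._ready.pop(0)
        self._in_flight[rid] = (body, now + self._visibility_timeout)
        return rid, body

    def ack(self, receipt_id):
        # Returns True if the message was in flight and is now deleted.
        return self._in_flight.pop(receipt_id, None) is not None


q = VisibilityQueue(visibility_timeout=30)
q.send("charge order 42")
first = q.receive(now=0)         # delivered, hidden until t=30
hidden = q.receive(now=10)       # nothing visible while the message is in flight
redelivered = q.receive(now=31)  # no ACK arrived in time, so it is delivered again
acked = q.ack(redelivered[0])
empty = q.receive(now=100)       # acked messages never come back
```

The same message is handed out twice here because the first consumer never acknowledged it, which is why queue consumers are usually written to tolerate redelivery.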

Challenges:

- Consumer Bottlenecks: A single slow or single-threaded consumer limits throughput.
- Stuck Messages: Misconfigured visibility timeouts can cause reprocessing loops.
- Priority Handling: FIFO queues may delay high-priority tasks without explicit prioritization.
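One common way to add explicit prioritization is a heap keyed on priority, with a sequence number to preserve FIFO order among equal priorities. The sketch below uses Python's standard `heapq`; the `PriorityTaskQueue` class and the task names are illustrative assumptions, not part of any particular queue product.

```python
import heapq
import itertools


class PriorityTaskQueue:
    """Hypothetical priority queue: lower number means higher priority."""

    def __init__(self):
        self._heap = []
        self._sequence = itertools.count()

    def put(self, task, priority):
        # The sequence number breaks ties so equal-priority tasks stay FIFO.
        heapq.heappush(self._heap, (priority, next(self._sequence), task))

    def get(self):
        _, _, task = heapq.heappop(self._heap)
        return task


pq = PriorityTaskQueue()
pq.put("send newsletter", priority=5)
pq.put("refund payment", priority=1)
pq.put("resize image", priority=5)
order = [pq.get() for _ in range(3)]
```

The refund is dequeued first even though it was enqueued second, while the two priority-5 tasks keep their arrival order.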

Best Practices

Pub/Sub Messaging

- Ack After Processing: Avoid premature acknowledgments to prevent data loss.
- Filtering: Use topic or attribute-based filtering to reduce subscriber load (e.g., Google Pub/Sub's filter expressions).
- Flow Control: Configure maximum outstanding messages to prevent consumer overload.
- Ordered Messaging: Enable message ordering at the subscription level for scenarios like audit logs.
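Attribute-based filtering can be pictured as a predicate the broker evaluates before delivering to each subscription. The sketch below is a simplified model with exact-match filters only; the subscription names and attributes are invented, and it does not reproduce the filter syntax of Google Pub/Sub or any other product.

```python
def matches(filter_attributes, message_attributes):
    # A message matches when every filter attribute is present with the same value.
    return all(message_attributes.get(k) == v for k, v in filter_attributes.items())


def route(message, subscriptions):
    """Return the names of subscriptions whose filter matches the message."""
    return [
        name
        for name, filter_attributes in subscriptions.items()
        if matches(filter_attributes, message["attributes"])
    ]


# Hypothetical subscriptions on a "user-logins" topic.
subscriptions = {
    "audit-log": {},                        # empty filter: receives everything
    "eu-logins": {"region": "eu"},
    "failed-logins": {"status": "failed"},
}
message = {"data": "user 7 login", "attributes": {"region": "eu", "status": "ok"}}
delivered_to = route(message, subscriptions)
```

Because the `failed-logins` subscription never receives successful logins, its consumers do no work for messages they would have discarded anyway.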

Message Queuing

- Idempotency: Design consumers to handle duplicate messages safely.
- DLQs for Dead Messages: Route failed messages to DLQs for debugging.
- Batch Processing: Retrieve messages in batches to reduce API calls (e.g., AWS SQS).
- Auto-Scaling: Use metrics like queue depth to trigger consumer scaling.
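The idempotency and DLQ practices above can be combined in one consumer wrapper. The following is a minimal sketch under several assumptions: every message carries a unique `id` field, processed IDs fit in an in-memory set (a real system would use a durable store), and `IdempotentConsumer`, `charge`, and `max_attempts` are hypothetical names chosen for the example.

```python
class IdempotentConsumer:
    """Hypothetical wrapper: skips duplicates and dead-letters repeated failures."""

    def __init__(self, handler, max_attempts=3):
        self._handler = handler
        self._max_attempts = max_attempts
        self._seen_ids = set()   # IDs of messages already processed
        self._attempts = {}      # message id -> failed attempt count
        self.dead_letters = []   # stand-in for a dead-letter queue

    def handle(self, message):
        """Return 'processed', 'duplicate', 'retry', or 'dead-lettered'."""
        message_id = message["id"]
        if message_id in self._seen_ids:
            return "duplicate"   # safe to ACK without doing the work again
        try:
            self._handler(message)
        except Exception:
            attempts = self._attempts.get(message_id, 0) + 1
            self._attempts[message_id] = attempts
            if attempts >= self._max_attempts:
                self.dead_letters.append(message)
                return "dead-lettered"
            return "retry"
        self._seen_ids.add(message_id)
        return "processed"


charged = []


def charge(message):
    # Illustrative handler: rejects invalid amounts, records successful charges.
    if message["amount"] < 0:
        raise ValueError("negative amount")
    charged.append(message["id"])


consumer = IdempotentConsumer(charge, max_attempts=2)
results = [
    consumer.handle({"id": "a", "amount": 10}),
    consumer.handle({"id": "a", "amount": 10}),  # redelivery of the same message
    consumer.handle({"id": "b", "amount": -1}),
    consumer.handle({"id": "b", "amount": -1}),  # second failure hits the limit
]
```

Message `a` is charged once despite being delivered twice, and message `b` is parked in `dead_letters` after two failed attempts instead of looping forever.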

Hybrid Systems and Modern Trends

Modern platforms like Google Pub/Sub and AutoMQ blend Pub/Sub scalability with queue-like features:

- Google Pub/Sub: Offers per-message leasing (similar to queues) and integrates DLQs.
- AutoMQ/Kafka: Combines Pub/Sub fanout with partition-based queues for ordered processing.
- RabbitMQ Streams: Adds replayability and persistence to traditional queues, narrowing the gap with Pub/Sub.
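The combination of fanout and partition-based ordering can be illustrated with a small model of a partitioned log. This is a conceptual sketch only: it uses `zlib.crc32` as a stand-in hash (Kafka's default partitioner uses a different hash function), and the group names, keys, and the `poll` helper are hypothetical.

```python
import zlib

NUM_PARTITIONS = 3


def partition_for(key):
    # Stand-in hash: the same key always maps to the same partition.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


partitions = [[] for _ in range(NUM_PARTITIONS)]
events = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "purchase"),
    ("user-1", "logout"),
]
for key, value in events:
    partitions[partition_for(key)].append((key, value))

# Each consumer group tracks its own offset per partition, so every group
# sees every record (fanout) while records for one key stay in order.
group_offsets = {
    "analytics": [0] * NUM_PARTITIONS,
    "billing": [0] * NUM_PARTITIONS,
}


def poll(group, partition_index):
    offset = group_offsets[group][partition_index]
    records = partitions[partition_index][offset:]
    group_offsets[group][partition_index] = len(partitions[partition_index])
    return records


analytics_records = [r for p in range(NUM_PARTITIONS) for r in poll("analytics", p)]
billing_records = [r for p in range(NUM_PARTITIONS) for r in poll("billing", p)]
analytics_again = [r for p in range(NUM_PARTITIONS) for r in poll("analytics", p)]
```

Both groups read all four events independently, which is the Pub/Sub side of the design, while within a group each partition behaves like an ordered queue that is consumed once.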

Conclusion

Pub/Sub suits event-driven architectures requiring broad message distribution, while Message Queuing excels in transactional workflows needing reliability and order. The choice hinges on factors like delivery guarantees, scalability needs, and system decoupling. Hybrid systems now merge both paradigms, offering flexibility for complex use cases like real-time analytics coupled with task processing.

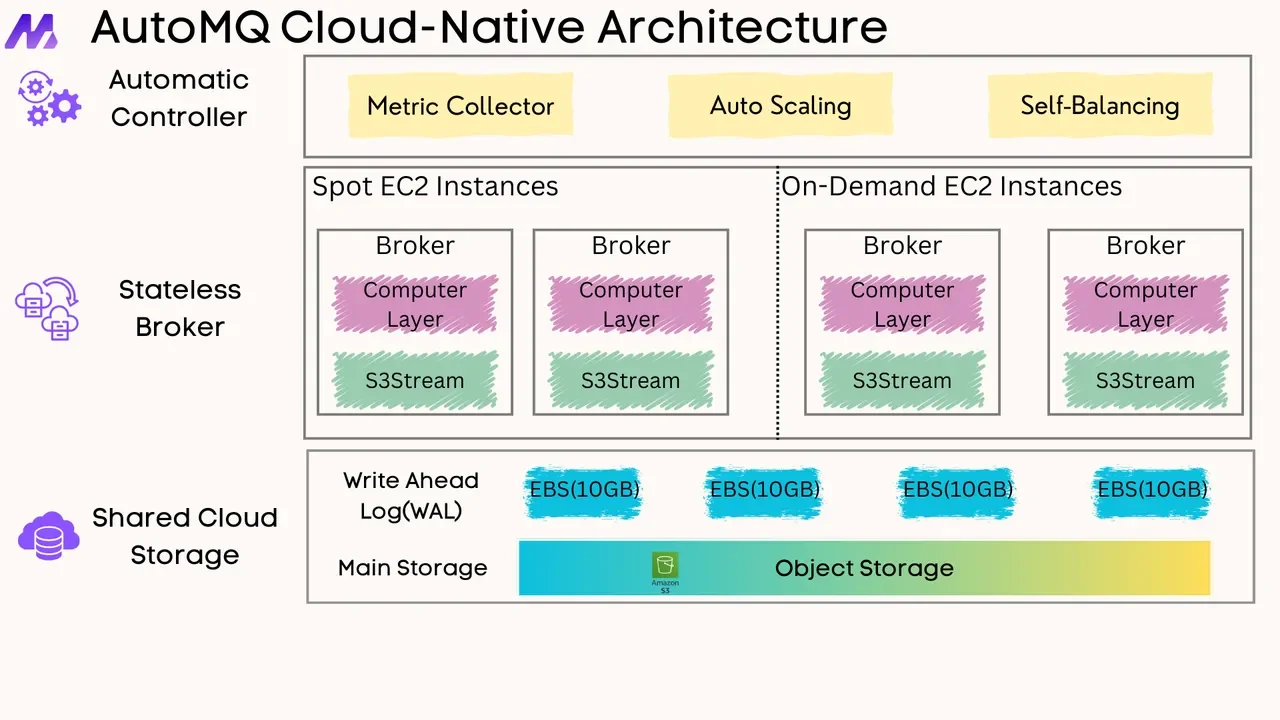

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging