Overview

The evolution of cloud computing has introduced various deployment models, each with distinct advantages and trade-offs. Among these, serverless and containerized deployments have emerged as prominent strategies for modern application development. This post compares the two approaches, covering their core concepts, operational characteristics, and best-fit use cases, to help architects and developers make informed deployment decisions.

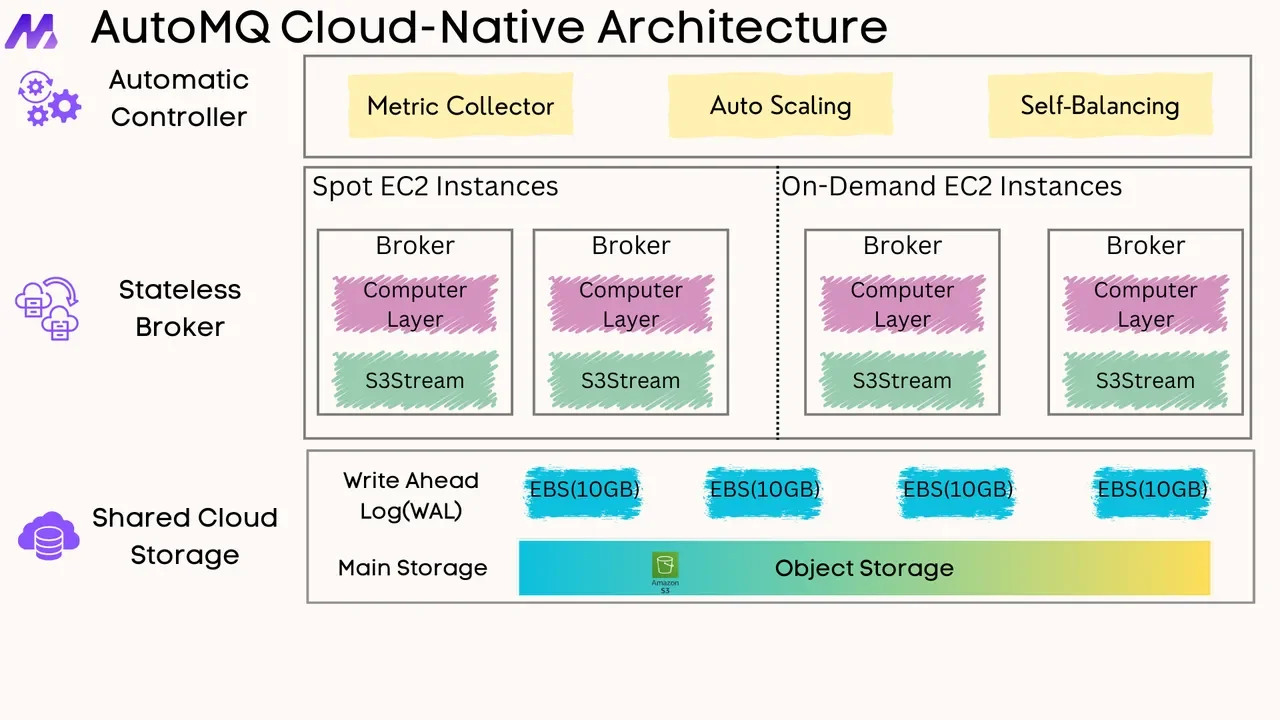

Understanding Serverless Deployment

Serverless deployment is an operational model in which the cloud provider dynamically manages the allocation and provisioning of servers. Servers still exist, but developers are shielded from the underlying infrastructure and can focus on application code. Functions, the typical unit of deployment in serverless architectures, run in response to predefined events such as HTTP requests, data changes, or messages arriving on a queue.

![Serverless Deployment Has Less Concern Over Infrastructure [14]](/blog/serverless-deployment-vs-containerized-deployment/1.png)

Operational Mechanics:

The predominant serverless model is Function-as-a-Service (FaaS). The process generally involves:

- Packaging application logic into discrete functions.
- Configuring event triggers that initiate function execution.
- The cloud platform executes the function when a trigger fires. A "cold start," an initial delay while the execution environment is provisioned, may occur when a function is invoked for the first time or after a period of inactivity.
- The platform automatically scales function instances based on the volume of incoming events.
- Billing is typically based on the compute time the functions actually consume, often with millisecond granularity, plus charges for associated resources.
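The mechanics above can be illustrated with a minimal function. This sketch assumes AWS Lambda's Python convention of a `handler(event, context)` entry point and an API Gateway proxy-style event whose HTTP body is a JSON string; other providers and triggers use different signatures and event shapes.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per event.

    Assumes an API Gateway proxy-style event in which the HTTP body arrives
    as a JSON string under "body"; other triggers deliver different shapes.
    """
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
    if not isinstance(payload, dict):
        return {"statusCode": 400, "body": json.dumps({"error": "expected an object"})}

    name = payload.get("name", "world")
    # The function does one job and returns. Provisioning, scaling out under
    # load, and tearing down idle environments are left to the platform.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; no cloud account required.
    print(handler({"body": json.dumps({"name": "serverless"})}, None))
```

Because the handler is a plain function, it can be exercised locally with hand-built events before any trigger is configured.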

Key Characteristics:

- Event-driven: Execution is predicated on the occurrence of specific events.
- Stateless by default: Functions do not inherently maintain state across invocations; persistent state requires external storage solutions.
- Abstracted infrastructure: Direct management of servers, operating systems, or runtime environments is eliminated for the developer.
- Automatic scaling: The platform manages scaling in response to workload fluctuations without manual intervention.
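To make statelessness concrete, the sketch below simulates two function instances that share an external store. `KeyValueStore`, `InMemoryStore`, and `FunctionInstance` are hypothetical names; a real function would call a managed database, and the platform, not the code, would decide when instances are created or discarded.

```python
from typing import Protocol


class KeyValueStore(Protocol):
    """Hypothetical interface to an external store such as a managed database."""

    def increment(self, key: str) -> int: ...


class InMemoryStore:
    """Local stand-in for tests; a real deployment would call a remote service."""

    def __init__(self) -> None:
        self._counts: dict[str, int] = {}

    def increment(self, key: str) -> int:
        self._counts[key] = self._counts.get(key, 0) + 1
        return self._counts[key]


class FunctionInstance:
    """Simulates one execution environment created by the platform.

    Its memory (here, local_count) plays the role of a module-level variable:
    it may persist across warm invocations on this instance, but it is gone
    after a cold start and is never shared with other instances.
    """

    def __init__(self, store: KeyValueStore) -> None:
        self.store = store
        self.local_count = 0

    def invoke(self, event: dict) -> dict:
        self.local_count += 1  # per-instance only; unsafe as a source of truth
        total = self.store.increment(f"views:{event['page']}")  # durable, shared
        return {"total_views": total, "seen_by_this_instance": self.local_count}


if __name__ == "__main__":
    shared = InMemoryStore()
    a, b = FunctionInstance(shared), FunctionInstance(shared)  # two scaled-out instances
    for instance in (a, b, a):
        print(instance.invoke({"page": "home"}))
```

After the three invocations, the shared count reaches 3 while instance `a` has recorded only 2 invocations and `b` only 1, which is why per-instance memory is suitable only as a cache.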

Understanding Containerized Deployment

Containerization packages application code together with its dependencies (libraries, binaries, and configuration files) into a standardized, isolated unit known as a container image. The image can then be run as a container on any system with a compatible container runtime, such as Docker, and a matching CPU architecture. The primary benefit is consistent application behavior across environments, from development and testing to production.

![Deployment on Containers [13]](/blog/serverless-deployment-vs-containerized-deployment/2.png)

Operational Mechanics:

- Applications are "containerized" by defining a Dockerfile, which outlines the steps to construct the container image.
- The resulting image is stored in a container registry (e.g., Docker Hub or a private organizational registry).
- Application deployment involves retrieving the image and executing it as one or more container instances.
- For managing distributed containerized applications in production, a container orchestration platform, such as Kubernetes, is typically employed. Kubernetes automates critical aspects of container lifecycle management, including deployment, scaling, self-healing, and network configuration.
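The workflow above ends with an orchestrator running the image. As a sketch, the function below builds a minimal Kubernetes `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts as readily as YAML. The service name, image reference, replica count, and port are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment for one containerized service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many pods running and replaces failed ones.
            "replicas": replicas,
            # The selector must match the pod template's labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # pulled from the container registry
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference; substitute a real registry path and tag.
    manifest = deployment_manifest("web", "registry.example.com/web:1.0.0")
    print(json.dumps(manifest, indent=2))  # e.g. pipe into `kubectl apply -f -`
```

The declared `replicas` count is what enables self-healing: when a pod dies, Kubernetes creates a replacement to restore the desired state.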

Key Characteristics:

- OS-level virtualization: Containers share the host operating system's kernel, which gives them lower resource overhead than traditional virtual machines (VMs).
- Portability: Supports a "build once, run anywhere" deployment model.
- Consistency: Keeps the application environment uniform across all deployment stages.
- Resource isolation: On Linux, namespaces give each container its own view of the filesystem, process tree, and network, while cgroups constrain its CPU and memory usage.
- Control: Offers greater control over the operating system, runtime versions, and dependencies than serverless models.

Side-by-Side Comparison

The table below compares serverless and containerized deployments across several key dimensions:

| Feature | Serverless (FaaS) | Containerized (e.g., Kubernetes) |
|---|---|---|
| Infrastructure Management | Minimal to none (provider-managed) | Significant (requires managing and configuring orchestration) |
| Scaling | Automatic, fine-grained, event-based | Configurable, potentially complex, typically cluster-based |
| Cost Model | Pay-per-execution/invocation, often highly granular | Pay for allocated resources (VMs, load balancers), whether or not they are used |
| Cold Starts | Potential issue, adding latency to initial requests | Not an issue for already-running instances; newly scheduled pods still incur startup time |
| State Management | Typically stateless; external storage required | Supports stateful applications via persistent volumes |
| Control over Environment | Limited; largely provider-supplied runtimes (some platforms allow custom runtimes) | High; allows custom OS, libraries, and runtimes |
| Deployment Unit | Functions | Container Images |
| Runtime Limits | Subject to maximum execution time, memory, and payload size | Fewer inherent limits, constrained by underlying hardware |
| Vendor Lock-in | Higher risk due to proprietary APIs/services | Lower risk, particularly with open standards like Kubernetes |
| Monitoring/Logging | Integrated with cloud provider tools; distributed tracing can be complex | Requires setup of dedicated tools (e.g., Prometheus, Grafana); offers greater flexibility |
| Developer Experience | Simpler for small, discrete functions; complexity can increase with numerous interconnected functions. Emphasis on business logic. | Steeper initial learning curve (Docker, Kubernetes); more tooling to manage. |
| Security | Shared responsibility model; provider secures infrastructure, developer secures code and configuration. OWASP Serverless Top 10 outlines risks such as event injection. | Shared responsibility model; developer manages more layers (OS, networking, orchestration configuration). Kubernetes has specific security best practices. |

Selection Criteria: Serverless vs. Containers

Opt for Serverless Deployment When:

- Workloads are predominantly event-driven (e.g., image processing upon upload, reacting to database modifications, implementing simple APIs).
- Rapid, automatic scaling is required for unpredictable or highly variable traffic patterns.
- The objective is to minimize infrastructure management overhead and concentrate on application logic.
- Cost optimization for applications with sporadic or low traffic is a primary concern.
- The architecture involves microservices where individual functions can be developed as small, independent units.
- The application can accommodate potential cold start latency for certain requests.
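The cost criterion can be made concrete with a back-of-the-envelope model. The sketch below compares pay-per-use billing (a per-request fee plus memory-weighted compute time, in GB-seconds) with a fixed fleet billed hourly, and computes the monthly request volume at which the two cost the same. All prices are hypothetical placeholders, not any provider's current rates.

```python
from dataclasses import dataclass


@dataclass
class FaaSPricing:
    per_million_requests: float  # price per 1M invocations
    per_gb_second: float  # price per GB-second of compute


def faas_cost_per_request(avg_duration_ms: float, memory_mb: int, p: FaaSPricing) -> float:
    """Cost of one invocation: request fee plus memory-weighted compute time."""
    gb_seconds = (avg_duration_ms / 1000) * (memory_mb / 1024)
    return p.per_million_requests / 1_000_000 + gb_seconds * p.per_gb_second


def faas_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int, p: FaaSPricing) -> float:
    """Pay-per-use: scales linearly with traffic and is zero when idle."""
    return requests * faas_cost_per_request(avg_duration_ms, memory_mb, p)


def always_on_monthly_cost(instances: int, hourly_price: float, hours: float = 730) -> float:
    """Allocated capacity: billed for every hour whether or not traffic arrives."""
    return instances * hourly_price * hours


def break_even_requests(avg_duration_ms: float, memory_mb: int, p: FaaSPricing, fixed_cost: float) -> float:
    """Monthly request volume above which always-on capacity becomes cheaper."""
    return fixed_cost / faas_cost_per_request(avg_duration_ms, memory_mb, p)


if __name__ == "__main__":
    # HYPOTHETICAL prices for illustration only; check your provider's current rates.
    pricing = FaaSPricing(per_million_requests=0.20, per_gb_second=0.0000167)
    fixed = always_on_monthly_cost(instances=2, hourly_price=0.05)
    for monthly_requests in (100_000, 10_000_000, 100_000_000):
        faas = faas_monthly_cost(monthly_requests, avg_duration_ms=200, memory_mb=512, p=pricing)
        print(f"{monthly_requests:>11,} req/month: FaaS ${faas:,.2f} vs always-on ${fixed:,.2f}")
    print(f"break-even ~ {break_even_requests(200, 512, pricing, fixed):,.0f} requests/month")
```

The pay-per-use line wins at low or bursty volume; the flat always-on cost wins once sustained traffic passes the break-even point. The model deliberately ignores free tiers, API gateway and data-transfer charges, and the engineering cost of operating a cluster.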

Common Serverless Use Cases:

- API backends for web and mobile applications
- Real-time data processing and stream analytics
- Scheduled tasks or cron jobs
- Chatbots and Internet of Things (IoT) backends
- IT automation scripts

Opt for Containerized Deployment When:

- Fine-grained control over the operating system, runtime environment, and dependencies is necessary.
- Applications involve long-running processes or experience consistent traffic levels.
- The deployment includes stateful applications that need persistent storage managed alongside them.
- Avoiding vendor lock-in and ensuring portability across multiple cloud providers or on-premises environments is a strategic goal.
- Existing applications are being migrated ("lift and shift") with minimal architectural changes.
- The development team has, or is prepared to build, expertise in containerization and orchestration technologies.

Common Container Use Cases:

- Complex web applications and microservices architectures
- Databases and other stateful services
- Machine learning model training and inference pipelines
- Continuous Integration/Continuous Deployment (CI/CD) pipelines
- Modernization of legacy applications

Best Practices

Serverless Best Practices:

- Single Responsibility Principle: Design functions to be concise and focused on a single task.
- Cold Start Optimization: Use provisioned concurrency features where available, minimize deployment package sizes, and choose language runtimes with fast startup times.
- Externalized State Management: Use databases or dedicated storage services for persistent state.
- Idempotency: Design functions to be idempotent so that retries are safe and produce no unintended side effects.
- Security: Apply the principle of least privilege to function roles, and validate and sanitize incoming event data.
- Monitoring and Logging: Use the cloud provider's integrated tools for logging and distributed tracing to support debugging and performance monitoring.
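Idempotency is usually achieved by recording a key per logical event and skipping work that has already been done. The sketch below assumes the event carries a stable `idempotency_key` and uses an in-memory stand-in for what would, in practice, be a conditional write in a durable database; `IdempotencyStore` and `handle_payment` are hypothetical names.

```python
import threading


class IdempotencyStore:
    """Stand-in for a durable store with an atomic 'insert if absent'.

    In production this would typically be a conditional write in a database;
    the in-memory dict and lock here only make the sketch runnable locally.
    """

    def __init__(self) -> None:
        self._records: dict[str, dict] = {}
        self._lock = threading.Lock()

    def put_if_absent(self, key: str, record: dict) -> tuple[bool, dict]:
        """Return (True, record) on first insert, else (False, existing record)."""
        with self._lock:
            existing = self._records.get(key)
            if existing is not None:
                return False, existing
            self._records[key] = record
            return True, record


def handle_payment(event: dict, store: IdempotencyStore, ledger: list) -> dict:
    """Hypothetical handler: a retried event must not charge twice."""
    # The event source (or client) supplies a key that is stable across retries.
    first_time, record = store.put_if_absent(event["idempotency_key"], {"amount": event["amount"]})
    if first_time:
        ledger.append(record["amount"])  # side effect runs at most once per key
    return {"status": "charged" if first_time else "duplicate", "amount": record["amount"]}


if __name__ == "__main__":
    store, ledger = IdempotencyStore(), []
    event = {"idempotency_key": "order-42", "amount": 10}
    print(handle_payment(event, store, ledger))  # first delivery
    print(handle_payment(event, store, ledger))  # platform retry of the same event
    print(ledger)
```

This claim-first design has a gap: if the function crashes after claiming the key but before the side effect, a retry will see a duplicate and skip the work. Production designs typically store an in-progress status with an expiry, or commit the claim and the side effect in one transaction.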

Container Best Practices (with Kubernetes):

- Image Optimization: Construct minimal and secure container images by removing non-essential tools and layers.
- Resource Requests and Limits: Define CPU and memory requests and limits for pods to ensure cluster stability and efficient resource allocation.
- Liveness and Readiness Probes: Configure health probes to enable Kubernetes to effectively manage application health and availability.
- Security Contexts: Apply security contexts to define privilege and access control settings at the pod and container levels.
- Network Policies: Implement network policies to enforce granular control over inter-pod traffic flow.
- Monitoring and Alerting: Establish comprehensive monitoring (e.g., using Prometheus) and alerting mechanisms for the cluster and deployed applications.
- Declarative Configuration: Manage deployments using declarative YAML manifests stored in version control systems (GitOps).
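Several of these practices live in the pod spec itself. The sketch below adds resource requests and limits, liveness and readiness probes, and a restrictive security context to a container spec, and builds a default-deny ingress NetworkPolicy, emitting both as JSON manifests that could be committed to version control. The resource values, probe paths, image, and namespace are hypothetical.

```python
import copy
import json


def harden_container(container: dict, port: int = 8080) -> dict:
    """Return a copy of a pod container spec with the practices above applied.

    Resource figures and the /healthz and /ready paths are hypothetical; the
    application must actually serve those endpoints on `port`.
    """
    c = copy.deepcopy(container)
    # Requests drive scheduling; limits cap what the container may consume.
    c["resources"] = {
        "requests": {"cpu": "250m", "memory": "256Mi"},
        "limits": {"cpu": "500m", "memory": "512Mi"},
    }
    # Liveness failures restart the container; readiness failures only remove
    # the pod from Service endpoints until it recovers.
    c["livenessProbe"] = {
        "httpGet": {"path": "/healthz", "port": port},
        "initialDelaySeconds": 10,
        "periodSeconds": 10,
    }
    c["readinessProbe"] = {
        "httpGet": {"path": "/ready", "port": port},
        "periodSeconds": 5,
    }
    # Run unprivileged, with an immutable root filesystem and no extra capabilities.
    c["securityContext"] = {
        "runAsNonRoot": True,
        "allowPrivilegeEscalation": False,
        "readOnlyRootFilesystem": True,
        "capabilities": {"drop": ["ALL"]},
    }
    return c


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy blocking all inbound pod traffic in a namespace until more
    specific policies allow it (enforced only by CNI plugins that support it)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


if __name__ == "__main__":
    base = {"name": "web", "image": "registry.example.com/web:1.0.0"}  # hypothetical image
    print(json.dumps(harden_container(base), indent=2))
    print(json.dumps(default_deny_ingress("shop"), indent=2))  # hypothetical namespace
```

Note that `runAsNonRoot` makes the kubelet refuse to start a container that would run as root, so the image must define a numeric non-root user (or the pod must set `runAsUser`).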

Common Issues and Challenges

Serverless Issues:

- Cold Starts: Initial latency experienced by infrequently invoked functions.
- Execution Constraints: Limitations on execution duration, memory allocation, and payload size can pose challenges for certain workloads.
- Debugging and Testing: Local testing and debugging of distributed serverless functions can be more intricate than for traditional monolithic applications.
- Vendor Lock-in: Dependence on proprietary cloud provider services and APIs may complicate future migrations.
- Complexity at Scale: Managing a large number of small, interconnected functions and their permissions can lead to increased architectural complexity.
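One way to work within execution-time limits is to check the remaining time budget and hand back unfinished work. The sketch below uses `get_remaining_time_in_millis()`, which AWS Lambda's Python context object provides; `process_item`, `SAFETY_MARGIN_MS`, and the `items` event field are hypothetical, and `LocalContext` is a stand-in for running outside the platform.

```python
import time

# Hypothetical buffer; size it to exceed the slowest single item.
SAFETY_MARGIN_MS = 5_000


def process_item(item: int) -> int:
    """Placeholder for real per-item work (hypothetical)."""
    return item * 2


def handler(event: dict, context) -> dict:
    """Work through a batch but stop before the platform's hard timeout."""
    remaining = list(event["items"])
    processed = []
    while remaining:
        # AWS Lambda's Python context exposes get_remaining_time_in_millis().
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        processed.append(process_item(remaining.pop(0)))
    # Unfinished items are handed back so a queue or workflow can schedule
    # another invocation instead of losing them to a timeout.
    return {"processed": processed, "remaining": remaining}


class LocalContext:
    """Local stand-in that mimics the single context method used above."""

    def __init__(self, timeout_ms: int) -> None:
        self._deadline = time.monotonic() + timeout_ms / 1000

    def get_remaining_time_in_millis(self) -> int:
        return int((self._deadline - time.monotonic()) * 1000)


if __name__ == "__main__":
    print(handler({"items": list(range(5))}, LocalContext(timeout_ms=30_000)))
```

Checkpointing like this converts a hard failure (the invocation is killed mid-batch) into a partial result that can be resumed.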

Container Issues:

- Management Complexity: Orchestration platforms like Kubernetes present a steep learning curve and demand considerable operational expertise.
- Resource Overhead: While more lightweight than VMs, containers and their orchestration layer still introduce resource consumption.
- Security Misconfigurations: The multifaceted nature of Kubernetes can result in security vulnerabilities if not configured with adherence to best practices.
- Networking Complexity: Container networking can be intricate to understand, configure, and troubleshoot, particularly in large-scale cluster environments.
- Cost Management: Without diligent resource optimization and governance, containerized deployments can incur substantial costs.
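Cost management in Kubernetes often starts with comparing what pods request against what they actually use, because the scheduler reserves node capacity for requests whether or not it is consumed. The sketch below flags workloads using less than half of their requested CPU; the workload names and figures are hypothetical, and in practice the observed values would come from a monitoring system such as Prometheus.

```python
def parse_cpu(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as '500m', '2', or '0.5' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def find_overprovisioned(
    workloads: dict[str, tuple[str, str]], threshold: float = 0.5
) -> list[tuple[str, float]]:
    """Flag workloads whose observed CPU use is a small fraction of their request.

    `workloads` maps a name to (requested CPU, observed peak CPU). The observed
    figure is assumed to come from a monitoring system; nothing here fetches it.
    """
    flagged = []
    for name, (requested, observed) in sorted(workloads.items()):
        ratio = parse_cpu(observed) / parse_cpu(requested)
        if ratio < threshold:
            flagged.append((name, round(ratio, 2)))
    return flagged


if __name__ == "__main__":
    # Hypothetical numbers for illustration.
    sample = {"api": ("1", "800m"), "worker": ("2", "300m"), "cron": ("500m", "50m")}
    for name, ratio in find_overprovisioned(sample):
        print(f"{name}: using {ratio:.0%} of requested CPU; consider lowering requests")
```

Lowering inflated requests lets the scheduler pack more pods per node, which can reduce the number of nodes the cluster needs.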

Hybrid Approaches: Integrating Serverless and Containers

Serverless and containerized deployments are not mutually exclusive and can be effectively combined in hybrid architectures. This allows organizations to leverage the distinct advantages of each model. Examples include:

- Serverless functions orchestrating containerized tasks: A serverless function can launch and track long-running batch processes that execute in containers.
- Containers on serverless compute platforms: Services such as AWS Fargate, Azure Container Instances, and Google Cloud Run run containers without direct management of the underlying virtual machines. This combines the operational simplicity of serverless with the standardized packaging of containers, which suits applications that need a containerized environment along with simplified operations and event-driven scaling.
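The first hybrid pattern can be sketched as a function that launches a container on AWS Fargate through the ECS `RunTask` API and returns without waiting for the container to finish. The boto3 `run_task` call and the parameters used are real; the cluster, task definition, container name, subnet ID, command, and `date` event field are hypothetical placeholders. The client is injectable so the logic can be tested without AWS credentials.

```python
def build_run_task_args(event: dict) -> dict:
    """Translate an incoming event into ECS RunTask parameters.

    Cluster, task definition, container name, subnet ID, and command are
    hypothetical placeholders for resources that would already exist.
    """
    return {
        "cluster": "batch-cluster",
        "taskDefinition": "report-generator",
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {"subnets": ["subnet-0123456789abcdef0"]}
        },
        "overrides": {
            "containerOverrides": [
                {
                    "name": "report-generator",
                    "command": ["python", "generate.py", "--date", event["date"]],
                }
            ]
        },
    }


def handler(event: dict, context, ecs_client=None) -> dict:
    """Short-lived function that hands long-running work to a container."""
    if ecs_client is None:
        import boto3  # imported lazily so the sketch can be tested without AWS

        ecs_client = boto3.client("ecs")
    response = ecs_client.run_task(**build_run_task_args(event))
    # The container keeps running after this function exits, free of the
    # function's own execution time limit.
    return {
        "started": [task["taskArn"] for task in response.get("tasks", [])],
        "failures": response.get("failures", []),
    }
```

The division of labor mirrors the hybrid model: the function reacts to the event and exits quickly, while the container absorbs work that would exceed serverless runtime limits.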

A hybrid model often provides an optimal balance, utilizing serverless for event-driven components and highly scalable, transient tasks, while employing containers for complex, long-running services or applications with custom environmental requirements.

Conclusion

Both serverless and containerized deployment models offer powerful capabilities for cloud-native application development. The optimal choice is contingent upon specific application requirements, team expertise, operational capacity, and overarching business objectives.

- Serverless architectures excel in event-driven scenarios, offering rapid, automatic scalability and minimal operational overhead.
- Containerized deployments provide enhanced control, portability, and environmental consistency, particularly for complex or stateful applications.

Teams may start with one model and evolve the architecture over time, or adopt a hybrid strategy that combines the strengths of serverless and container technologies.

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS, offering 10x cost-effectiveness, no cross-AZ traffic cost, autoscaling in seconds, and single-digit millisecond latency. AutoMQ is now source-available on GitHub, and large companies worldwide are using it. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in DataStreaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging