Overview

Choosing the right data platform is a critical decision for any organization aiming to leverage its data assets effectively. Among the leading solutions, Snowflake and Databricks stand out, each offering powerful capabilities but with distinct architectures and philosophies. This blog provides a comprehensive technical comparison to help you understand their differences and determine which platform might be a better fit for your specific needs.

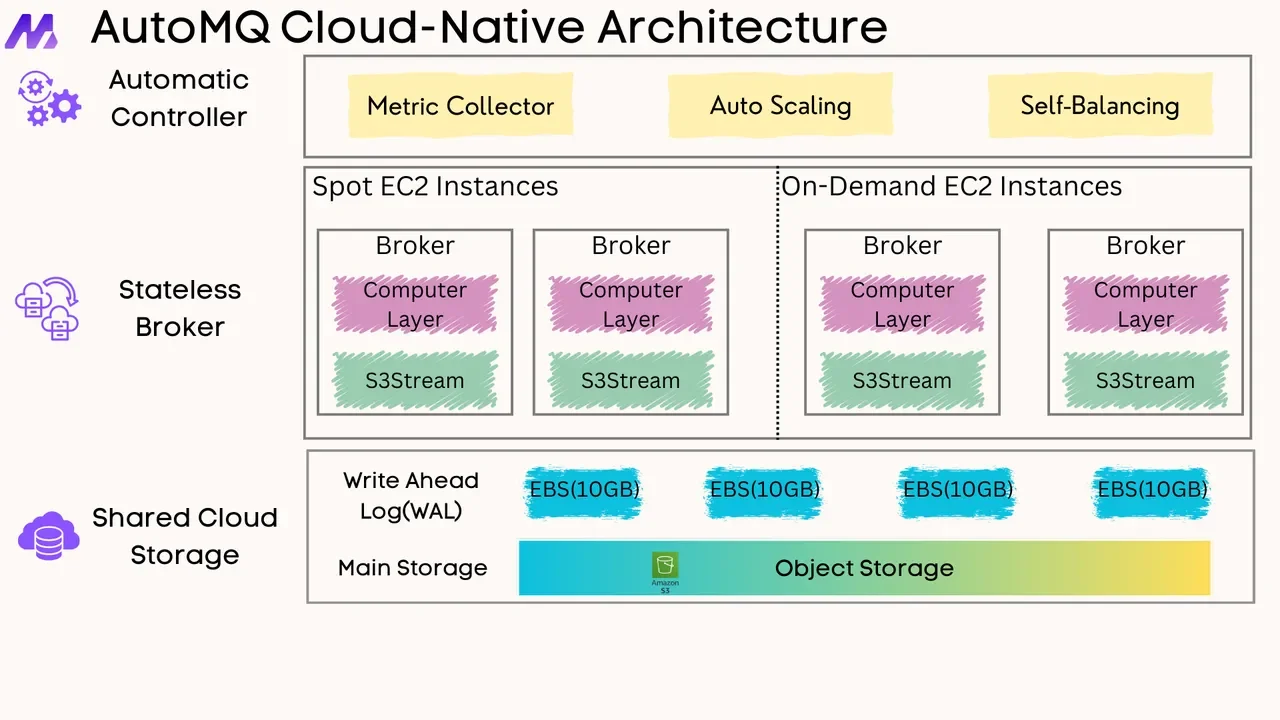

Core Architecture and Philosophy

Understanding the foundational design of Snowflake and Databricks is key to appreciating their respective strengths.

Snowflake: The Cloud Data Warehouse Reimagined

Snowflake was built from the ground up as a cloud-native data warehouse, offered as a fully managed Software-as-a-Service (SaaS) solution. Its core architectural principle is the separation of storage and compute. This allows organizations to scale these resources independently and elastically, paying only for what they use.

Snowflake's architecture consists of three distinct layers that interact seamlessly:

- Database Storage: This layer ingests data and reorganizes it into Snowflake's internal optimized, compressed, columnar format, stored in cloud storage (AWS, Azure, or GCP). All aspects of data storage, including file size, structure, compression, and metadata, are managed by Snowflake.

- Query Processing: Compute resources are provided through "virtual warehouses." These are Massively Parallel Processing (MPP) compute clusters that can be spun up, resized, or shut down on demand without impacting other warehouses or the underlying storage.

- Cloud Services: This layer acts as the "brain" of Snowflake, coordinating all activities across the platform. It manages authentication, infrastructure, metadata, query parsing and optimization, and access control.

Snowflake’s philosophy centers on providing a powerful, yet simple-to-use SQL-centric platform for data warehousing, business intelligence (BI), and analytics, abstracting away much of the underlying infrastructure complexity.

![Key Features of Snowflake Cloud Data Platform [21]](/blog/snowflake-vs-databricks-data-platforms-technical-comparison/1.png)

Databricks: The Unified Data Analytics Platform and the Lakehouse

Databricks, originating from the creators of Apache Spark, champions the "lakehouse" paradigm. The lakehouse aims to combine the best features of data lakes (flexibility, scalability for raw data) and data warehouses (data management, ACID transactions, performance) into a single, unified platform.

The core components of the Databricks platform include:

- Delta Lake: This is an open-source storage layer that brings reliability, performance, and ACID transactions to data lakes. It extends Parquet data files with a file-based transaction log, enabling features like schema enforcement, time travel, and unified batch and streaming operations. Delta Lake is the default storage format in Databricks.

- Apache Spark: Databricks is built on and deeply integrated with Apache Spark, providing a powerful distributed processing engine for large-scale data engineering, data science, and machine learning workloads.

- Unity Catalog: This provides a unified governance solution for data and AI assets within Databricks, offering centralized access control, auditing, lineage, and data discovery across multiple workspaces.
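To make the idea of a file-based transaction log more concrete, here is a minimal sketch in plain Python. It is not Delta Lake's actual log format or API: real Delta tables keep ordered JSON commit files under a `_delta_log` directory with a richer action schema, while the `version`/`actions` layout and the `replay` helper below are simplified assumptions made up for illustration. The sketch only shows how replaying "add" and "remove" actions yields the current set of data files, and how stopping early gives a time-travel view.

```python
import json


def replay(log_lines, as_of_version=None):
    """Replay commits in order and return the set of live data files.

    Stopping at `as_of_version` reconstructs an older snapshot (time travel).
    """
    live = set()
    for line in log_lines:
        commit = json.loads(line)
        if as_of_version is not None and commit["version"] > as_of_version:
            break
        for action in commit["actions"]:
            if "add" in action:
                live.add(action["add"])
            elif "remove" in action:
                live.discard(action["remove"])
    return live


# Hypothetical, simplified log: one JSON document per commit.
log = [
    json.dumps({"version": 0, "actions": [{"add": "part-000.parquet"}]}),
    json.dumps({"version": 1, "actions": [{"add": "part-001.parquet"}]}),
    json.dumps({"version": 2, "actions": [{"remove": "part-000.parquet"},
                                          {"add": "part-002.parquet"}]}),
]

latest = replay(log)                       # files visible to a current reader
snapshot_v1 = replay(log, as_of_version=1)  # files visible as of version 1
```

Because data files are never edited in place in this model, a reader that replays the log up to a given version sees a consistent snapshot even while later commits are being written.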

Databricks' philosophy is to offer a collaborative, open platform that supports the full lifecycle of data analytics, from raw data ingestion and ETL to sophisticated machine learning model development and deployment.

![Key Features of Databricks platform [22]](/blog/snowflake-vs-databricks-data-platforms-technical-comparison/2.png)

Data Processing Capabilities

Both platforms offer robust data processing, but their approaches and strengths differ.

Languages and APIs

- Snowflake: Primarily SQL-driven, making it highly accessible for analysts and BI professionals. For more complex programmatic logic and machine learning, Snowflake introduced Snowpark, which allows developers to write code in Python, Java, and Scala that executes directly within Snowflake's engine, leveraging its compute capabilities.

- Databricks: Offers a polyglot environment through Apache Spark, natively supporting Python, Scala, SQL, and R. This flexibility makes it a strong choice for diverse teams of data engineers, data scientists, and ML engineers who prefer different languages for different tasks. It provides rich DataFrame APIs and libraries for a wide range of transformations and analyses.

Batch vs. Streaming Data

- Snowflake: Excels at batch processing for traditional ETL/ELT and analytical workloads. For streaming data ingestion, Snowpipe provides continuous, micro-batch loading. For stream processing, Snowflake has introduced features like Dynamic Tables (which declaratively define data pipelines) and Tasks for scheduling SQL statements, often working in conjunction with external streaming data solutions that land data into cloud storage.

- Databricks: Designed for both high-throughput batch processing and real-time stream processing. Spark Structured Streaming provides a high-level API for building continuous applications. Delta Live Tables (DLT) further simplifies the development and management of reliable ETL pipelines for both batch and streaming data, with built-in quality controls and monitoring.
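The term "micro-batch" used above can be illustrated with a small, library-free sketch. This is not how Snowpipe or Spark Structured Streaming is implemented; the `micro_batches` function, the event tuples, and the 60-second window are all assumptions chosen for the example. It only shows the general idea of turning a continuous stream of events into a sequence of small batches that can each be loaded or processed as a unit.

```python
from itertools import groupby


def micro_batches(events, window_seconds):
    """Group (timestamp, payload) events into consecutive fixed time windows.

    Yields (window_start, [payloads]) pairs in time order.
    """
    ordered = sorted(events, key=lambda e: e[0])
    for window, group in groupby(ordered, key=lambda e: int(e[0] // window_seconds)):
        yield window * window_seconds, [payload for _, payload in group]


# Hypothetical events: (seconds since start, payload)
events = [(0.5, "a"), (12.0, "b"), (59.9, "c"), (61.0, "d"), (130.2, "e")]
batches = list(micro_batches(events, window_seconds=60))
```

Smaller windows reduce latency at the cost of more, smaller units of work, which is the basic trade-off behind choosing micro-batch loading versus lower-latency stream processing.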

Workload Suitability

- Snowflake: Traditionally shines in BI, ad-hoc analytics, and enterprise data warehousing. It's also well-suited for secure data sharing across organizations. With Snowpark and recent AI/ML features like Snowflake Cortex (providing access to LLMs and ML functions via SQL) and Snowflake ML, it's increasingly catering to data science and machine learning workloads.

- Databricks: A go-to platform for complex data engineering pipelines, large-scale ETL, advanced data science, and end-to-end machine learning model training and deployment (e.g., using MLflow and Mosaic AI for Generative AI applications). While historically stronger in these areas, Databricks is also enhancing its BI capabilities with features like Databricks SQL, aiming to provide high-performance SQL analytics directly on the lakehouse.

Storage Model and Data Formats

The way data is stored and managed is a fundamental differentiator.

Snowflake

Snowflake uses a proprietary, highly optimized columnar storage format internally. When data is loaded, Snowflake converts it into this format. This allows Snowflake to achieve significant performance and compression, but data in the native Snowflake format is not directly accessible to external tools without going through Snowflake. However, Snowflake is increasingly embracing open formats such as Apache Iceberg, allowing queries on external Iceberg tables and managing Iceberg catalogs.

Databricks

Databricks primarily uses Delta Lake, an open-source format built on Apache Parquet files stored in your cloud object storage (e.g., S3, ADLS Gen2, GCS). Because the format is open, your data remains in your cloud storage account, accessible to other tools and engines that understand Parquet and Delta Lake. Databricks also supports other formats such as plain Parquet, ORC, Avro, CSV, and JSON.

Scalability and Performance

Both platforms are designed for cloud scale, but their mechanisms differ.

Snowflake

Snowflake offers seamless and independent scaling of storage and compute. Virtual warehouses can be resized almost instantly or configured to auto-scale to handle fluctuating query loads without downtime or performance degradation for other concurrent users. This is particularly beneficial for BI workloads with many concurrent users and varying query complexities.

Databricks

Databricks provides highly flexible scalability by allowing users to configure and manage Spark clusters tailored to specific workload requirements (e.g., memory-optimized, compute-optimized, or GPU-enabled clusters for ML). While this offers fine-grained control, it can also introduce more management overhead than Snowflake's more automated approach. Databricks is also investing heavily in serverless compute options, including Serverless SQL warehouses and serverless compute for notebooks and jobs, to simplify operations and optimize costs.

Performance can be workload-dependent. Snowflake is highly optimized for SQL analytics and concurrent queries. Databricks, with its Spark engine, can be tuned for a wider range of data processing tasks, including I/O-intensive and compute-intensive operations common in ETL and ML.

Data Governance and Security

Robust governance and security are paramount for enterprise data platforms.

Snowflake Governance & Security

Snowflake provides a comprehensive suite of governance features, increasingly consolidated under Snowflake Horizon. Key capabilities include:

- Discovery: AI-powered search, data lineage, and a metadata catalog.

- Compliance & Security: Features like object tagging, data classification, access history, dynamic data masking, row-level access policies, and robust Role-Based Access Control (RBAC).

- Privacy: Data clean rooms for secure collaboration on sensitive data.

Snowflake ensures end-to-end encryption (in transit and at rest, always on), supports network policies (IP allow and block lists), multi-factor authentication (MFA), and integrates with private connectivity options like AWS PrivateLink and Azure Private Link. It holds numerous compliance certifications, including SOC 2 Type II, ISO 27001, HIPAA, PCI DSS, and FedRAMP.
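Dynamic data masking and row-level access policies are easier to reason about with a small model. In Snowflake these are declared in SQL as policies attached to columns and tables; the Python below is only a conceptual sketch of the behavior, not Snowflake syntax or API. The role names (`PII_ADMIN`, `EU_ANALYST`), the `region_by_role` mapping, and all function names are hypothetical.

```python
def mask_email(value, role):
    """Column masking: only a privileged role sees the raw value."""
    if role == "PII_ADMIN":
        return value
    local, _, domain = value.partition("@")
    return "*" * len(local) + "@" + domain


def visible_rows(rows, role, region_by_role):
    """Row-level policy: a role sees only rows for the region mapped to it."""
    allowed = region_by_role.get(role)
    return [r for r in rows if allowed == "ALL" or r["region"] == allowed]


def query(rows, role, region_by_role):
    """Apply the row policy first, then mask the sensitive column."""
    return [
        {**r, "email": mask_email(r["email"], role)}
        for r in visible_rows(rows, role, region_by_role)
    ]


rows = [
    {"email": "ana@example.com", "region": "EU"},
    {"email": "bo@example.com", "region": "US"},
]
region_by_role = {"PII_ADMIN": "ALL", "EU_ANALYST": "EU"}

analyst_view = query(rows, "EU_ANALYST", region_by_role)
admin_view = query(rows, "PII_ADMIN", region_by_role)
```

The key property is that the same query returns different results depending on the caller's role, while the stored data stays unchanged.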

Databricks Governance & Security

Databricks offers Unity Catalog as its unified governance solution across all data and AI assets. Its features include:

- Centralized Metadata & Discovery: A single place to manage and discover tables, files, models, and dashboards.

- Fine-Grained Access Control: SQL-standard based permissions for tables, views, columns (via dynamic views), and rows.

- Data Lineage: Automated capture and visualization of data lineage down to the column level.

- Auditing: Comprehensive audit logs for tracking access and operations.

- Data Sharing: Securely share data across organizations using Delta Sharing, an open protocol.

Unity Catalog's components are also being open-sourced, promoting interoperability. Databricks provides encryption at rest (managed by the user in their cloud storage) and in transit, network security configurations (like deploying workspaces in customer-managed VPCs), and integration with identity providers. It also meets various compliance standards like SOC 2 Type II, ISO 27001, and HIPAA.
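As a rough illustration of centralized, hierarchical access control, the sketch below models a three-level `catalog.schema.table` namespace in which a privilege granted on a parent object also applies to its children. It is a deliberately simplified assumption-laden model, not the Unity Catalog API: it ignores details such as usage privileges on parent objects and ownership, and the `grants` set, principal names, and `has_privilege` helper are hypothetical.

```python
def has_privilege(grants, principal, privilege, full_name):
    """Check a privilege on catalog.schema.table, honoring grants on any parent."""
    parts = full_name.split(".")
    # For "main.sales.orders" this yields: "main", "main.sales", "main.sales.orders"
    scopes = [".".join(parts[:i]) for i in range(1, len(parts) + 1)]
    return any((principal, privilege, scope) in grants for scope in scopes)


# Hypothetical grants: (principal, privilege, securable)
grants = {
    ("analysts", "SELECT", "main.sales"),          # schema-level grant
    ("engineers", "MODIFY", "main.sales.orders"),  # table-level grant
}

analysts_can_read_orders = has_privilege(grants, "analysts", "SELECT", "main.sales.orders")
analysts_can_read_ledger = has_privilege(grants, "analysts", "SELECT", "main.finance.ledger")
engineers_can_modify_returns = has_privilege(grants, "engineers", "MODIFY", "main.sales.returns")
```

Granting at the schema or catalog level keeps the number of grants small, while table-level grants remain available for exceptions.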

Ecosystem and Integrations

Both platforms have rich ecosystems and integrate with a wide array of third-party tools.

Snowflake

Snowflake offers strong connectivity with leading BI tools (Tableau, Power BI, Looker, etc.), ETL/ELT tools (Fivetran, Matillion, dbt), and data science platforms. The Snowflake Marketplace (formerly the Snowflake Data Marketplace) allows organizations to discover and access third-party datasets. The Snowpark ecosystem is growing, enabling more custom application development.

Databricks

Databricks integrates deeply with the broader big data and AI ecosystem, including ML frameworks (TensorFlow, PyTorch, scikit-learn), MLOps tools (MLflow), workflow orchestrators (Apache Airflow), and BI tools. Its foundation on open standards like Delta Lake and MLflow facilitates interoperability. Databricks also integrates tightly with cloud provider services for storage, machine learning, and IoT.

Pricing and Total Cost of Ownership (TCO)

Both platforms offer usage-based pricing models, but with different structures:

Snowflake

Snowflake pricing is based on credits consumed for compute (virtual warehouses, billed per second with a one-minute minimum each time a warehouse starts or resumes), plus separate charges for data storage and cloud services. Cloud services usage is charged only to the extent that daily consumption exceeds 10% of that day's warehouse compute usage.
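The billing rules above lend themselves to a short worked example. The sketch below assumes the standard warehouse sizing in which an X-Small warehouse consumes 1 credit per hour and each size up doubles that rate; the function names are made up for illustration, and the price of a credit is left out because it depends on edition, region, and contract.

```python
# Credits per hour for standard warehouses (each size doubles the previous one).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def warehouse_credits(size, run_seconds):
    """Credits for one warehouse run: per-second billing, 60-second minimum."""
    billed_seconds = max(run_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def billable_cloud_services(compute_credits, cloud_services_credits):
    """Cloud services credits charged beyond the 10% allowance."""
    return max(0.0, cloud_services_credits - compute_credits / 10)


short_run = warehouse_credits("M", 20)     # billed as 60 seconds
hour_run = warehouse_credits("M", 3600)    # a full hour on a Medium warehouse
extra = billable_cloud_services(100, 12)   # 12 used, 10 covered by the allowance
free = billable_cloud_services(100, 8)     # fully covered by the allowance
```

One practical consequence of the one-minute minimum is that many very short, separately resumed runs can cost more than the same work batched onto an already running warehouse.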

Databricks

Databricks pricing is based on Databricks Units (DBUs), billed per second, with consumption varying by the type and size of compute resources used (VM instances from the cloud provider). Users also pay their cloud provider directly for the underlying virtual machines, storage, and other cloud services.
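Because a Databricks bill has two parts (DBUs paid to Databricks and infrastructure paid to the cloud provider), a rough estimate needs both. The following sketch shows the arithmetic only; every rate in it is a placeholder assumption rather than a published price, and the `databricks_job_cost` helper is hypothetical.

```python
def databricks_job_cost(dbu_per_node_hour, dbu_price, vm_price_per_hour, nodes, hours):
    """Estimate a job's cost as DBU charges plus cloud VM charges.

    All rates are caller-supplied assumptions; storage and network are ignored.
    """
    dbu_cost = dbu_per_node_hour * nodes * hours * dbu_price
    vm_cost = vm_price_per_hour * nodes * hours
    return {"dbu": dbu_cost, "cloud_vm": vm_cost, "total": dbu_cost + vm_cost}


# Placeholder numbers: 4 nodes for 3 hours, 2 DBU per node-hour at 0.25 per DBU,
# and 0.50 per VM-hour paid to the cloud provider.
estimate = databricks_job_cost(
    dbu_per_node_hour=2.0,
    dbu_price=0.25,
    vm_price_per_hour=0.5,
    nodes=4,
    hours=3,
)
```

Comparing this with a Snowflake estimate requires care, since the Snowflake credit already includes the underlying compute infrastructure while the DBU charge here does not.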

Calculating the TCO can be complex for both, depending on the specific workloads, data volumes, query patterns, optimization efforts, and team expertise in managing resources efficiently.

Use Cases and Best Fit

Choosing between Snowflake and Databricks often comes down to your primary workloads and team expertise.

Snowflake is often a strong fit for:

- Organizations prioritizing a highly managed, SQL-centric cloud data warehouse.

- Primary workloads centered around BI, reporting, and complex SQL analytics.

- Use cases requiring simplified data operations and administration.

- Secure and governed data sharing with external partners.

- Teams with strong SQL skills looking to modernize their data warehousing capabilities.

Databricks typically excels for:

- Organizations needing a unified platform for diverse data workloads, including data engineering, data science, and machine learning at scale.

- Complex ETL/ELT pipelines, especially those involving large volumes of structured and unstructured data.

- Real-time data processing and streaming analytics.

- End-to-end machine learning lifecycle management, from experimentation to production.

- Teams with strong programmatic skills (Python, Scala) and a need for flexibility and control over their data processing environment.

It's important to note that both platforms are continuously evolving and expanding their capabilities, often encroaching on each other's traditional strongholds.

Conclusion

Snowflake and Databricks are both powerful and innovative data platforms, but they cater to different primary needs and philosophies. Snowflake offers a highly managed, SQL-first data warehouse experience with excellent ease of use and strong BI capabilities. Databricks provides a unified, open lakehouse platform that excels in data engineering, streaming, data science, and machine learning, offering greater flexibility and control.

The best choice depends on your organization's specific requirements, existing data ecosystem, dominant workloads, team skill sets, and strategic data goals. Many organizations even find value in using both platforms for different purposes within their broader data architecture.
