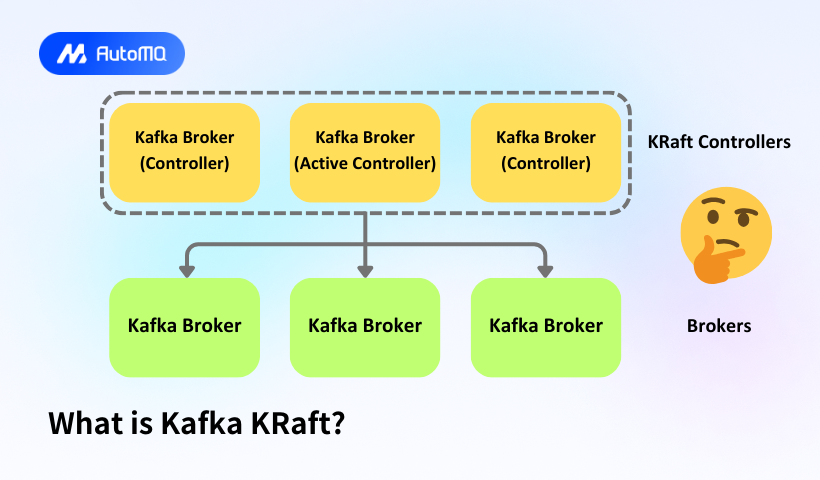

What is Kafka KRaft?

Kafka KRaft (Kafka Raft) is a consensus protocol introduced in Apache Kafka to manage metadata and handle leader elections without relying on Apache ZooKeeper. This protocol simplifies Kafka's architecture by consolidating metadata management within Kafka itself, using a variant of the Raft consensus algorithm.

Benefits of KRaft Over ZooKeeper

Simplified Architecture and Deployment

- Reduced Complexity: Eliminates the need for a separate ZooKeeper ensemble, making Kafka a self-contained system.
- Simplified Deployment: Easier to set up and manage, since only Kafka needs to be configured.

Performance and Scalability

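- Improved Scalability: Supports a much larger number of partitions per cluster, enhancing Kafka's ability to handle large-scale data processing.
- Faster Recovery: Metadata is replicated as an ordered event log instead of being pushed to brokers through ZooKeeper-era RPCs, and standby controllers already hold the current metadata in memory. A newly elected controller therefore does not need to reload state from ZooKeeper, which shortens recovery time.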

Operational Efficiency

- Lower Operational Overhead: Removes the need to deploy, monitor, and upgrade two separate distributed systems (Kafka and ZooKeeper).
- Unified Security Model: Simplifies Kafka's security model, since ZooKeeper's authentication and access controls no longer have to be configured separately.

Fault Tolerance and Reliability

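- Enhanced Fault Tolerance: Improves Kafka's resilience by removing ZooKeeper as an external dependency whose outages or misconfiguration could disrupt the cluster.
- Reliable Metadata Management: Keeps metadata consistent across the cluster, because the Raft protocol commits a change only after a majority of controllers have replicated it.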
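The fault-tolerance argument rests on simple majority arithmetic: a Raft-style quorum of N controllers can commit changes and elect a leader as long as a majority of them is alive. A minimal sketch of that calculation (the function names are illustrative, not part of Kafka):

```python
def majority(voters: int) -> int:
    """Smallest number of controllers that forms a majority of the quorum."""
    if voters < 1:
        raise ValueError("a quorum needs at least one voter")
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """Controllers that can fail while a majority (and thus progress) survives."""
    return voters - majority(voters)


for n in (1, 3, 4, 5):
    print(f"{n} controllers: majority={majority(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

This is why controller quorums are usually sized at an odd number such as 3 or 5: a fourth controller raises the majority from 2 to 3 without tolerating any additional failure.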

How Kafka KRaft Works

Key Components

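- Quorum Controllers: Kafka nodes running the controller role (set through `process.roles`, either as dedicated controllers or combined with the broker role) that form a quorum to manage metadata. One controller acts as the active leader, while the others are followers.
- Metadata Topic: A dedicated internal topic, `__cluster_metadata`, that stores the metadata log and is replicated across the controllers.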
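Conceptually, the metadata topic is an ordered, append-only log, and each node builds its view of the cluster by replaying records in offset order. The sketch below models that idea with hypothetical, simplified record types (`TopicCreated`, `PartitionAssigned`); Kafka's real metadata records have their own schemas and carry many more fields.

```python
from dataclasses import dataclass, field
from typing import Union


# Hypothetical, simplified record types; not Kafka's actual metadata schemas.
@dataclass(frozen=True)
class TopicCreated:
    topic: str


@dataclass(frozen=True)
class PartitionAssigned:
    topic: str
    partition: int
    leader_broker: int


Record = Union[TopicCreated, PartitionAssigned]


@dataclass
class MetadataLog:
    """Append-only log: a record's offset is its position in the list."""
    records: list = field(default_factory=list)

    def append(self, record: Record) -> int:
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record


@dataclass
class MetadataImage:
    """In-memory view of cluster metadata built by replaying the log."""
    topics: set = field(default_factory=set)
    leaders: dict = field(default_factory=dict)  # (topic, partition) -> broker id
    applied_offset: int = -1

    def replay(self, log: MetadataLog) -> None:
        # Apply only records not seen yet, strictly in offset order.
        for offset in range(self.applied_offset + 1, len(log.records)):
            record = log.records[offset]
            if isinstance(record, TopicCreated):
                self.topics.add(record.topic)
            elif isinstance(record, PartitionAssigned):
                self.leaders[(record.topic, record.partition)] = record.leader_broker
            self.applied_offset = offset


log = MetadataLog()
log.append(TopicCreated("orders"))
log.append(PartitionAssigned("orders", 0, leader_broker=1))
log.append(PartitionAssigned("orders", 0, leader_broker=2))  # leadership moved

image = MetadataImage()
image.replay(log)
print(image.leaders)  # {('orders', 0): 2}
```

Because replay is deterministic, two nodes that have applied the same prefix of the log hold identical metadata, and a node that falls behind only needs the records after its last applied offset.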

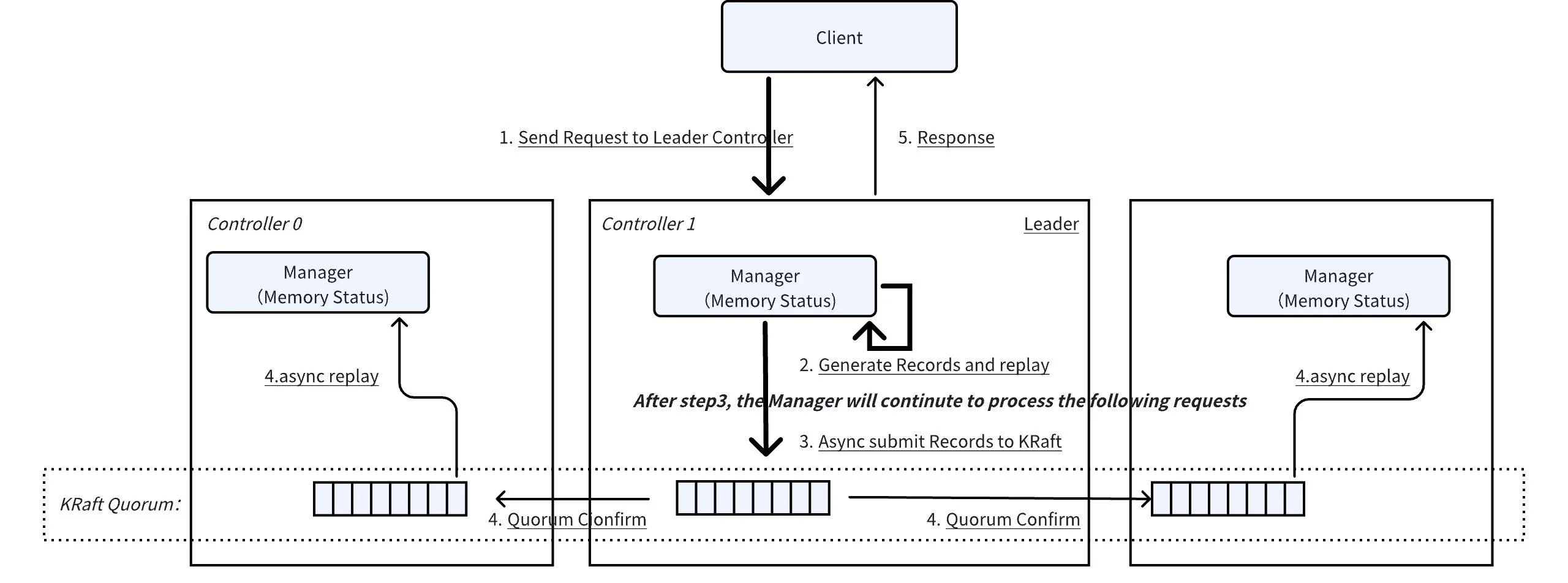

Process Flow

- Metadata Updates: The leader controller appends metadata changes as records to the metadata topic.
- Replication: Follower controllers replicate these records, and a change is committed once a majority of the quorum has it, ensuring consistency across the quorum.
- Event-Driven Updates: Brokers fetch committed metadata records from the controllers and apply them in order. This log-based, event-driven mechanism is more efficient than the ZooKeeper-based design, in which the controller pushed metadata updates to brokers through RPCs.
- Leader Election: The Raft protocol handles leader elections among controllers, ensuring quick failover and minimal downtime.
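The process flow above can be modeled in a few lines: the leader appends records, followers report how far they have replicated, a record is committed once a majority holds it, and brokers only consume committed records. A candidate likewise needs votes from a majority to become leader. This is a teaching sketch of the Raft rules under simplified assumptions (no networking, no epochs, hypothetical class and method names), not Kafka's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ControllerQuorum:
    """Toy model of a Raft-style controller quorum (hypothetical names)."""
    voter_ids: list
    leader_id: int
    log: list = field(default_factory=list)          # leader's metadata log
    end_offsets: dict = field(default_factory=dict)  # voter id -> replicated log length

    def __post_init__(self):
        for voter in self.voter_ids:
            self.end_offsets.setdefault(voter, 0)

    def append(self, record: str) -> None:
        # Step 1: the leader appends the change to its own log.
        self.log.append(record)
        self.end_offsets[self.leader_id] = len(self.log)

    def follower_fetch(self, follower_id: int) -> None:
        # Step 2: a follower copies everything up to the leader's log end.
        self.end_offsets[follower_id] = len(self.log)

    def high_watermark(self) -> int:
        # A record is committed once a majority of voters have replicated it.
        # Sorted in descending order, the majority-th largest end offset is
        # reached by at least a majority of voters.
        offsets = sorted((self.end_offsets[v] for v in self.voter_ids), reverse=True)
        majority = len(self.voter_ids) // 2 + 1
        return offsets[majority - 1]

    def broker_fetch(self, from_offset: int) -> list:
        # Step 3: brokers only see records below the high watermark (committed).
        return self.log[from_offset:self.high_watermark()]


def wins_election(votes_granted: int, voter_count: int) -> bool:
    # Step 4: a candidate becomes leader with votes from a majority of voters.
    return votes_granted >= voter_count // 2 + 1


quorum = ControllerQuorum(voter_ids=[1, 2, 3], leader_id=1)
quorum.append("create topic orders")
print(quorum.broker_fetch(0))  # [] (only the leader has the record)
quorum.follower_fetch(2)
print(quorum.broker_fetch(0))  # ['create topic orders'] (2 of 3 voters have it)
print(wins_election(votes_granted=2, voter_count=3))  # True
```

Kafka's implementation also tracks leader epochs and has a voter grant its vote only to a candidate whose log is at least as up to date as its own; the sketch omits both to keep the majority rule visible.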

How AutoMQ Uses KRaft to Manage Metadata

AutoMQ is a next-generation, 100% Kafka-compatible streaming system built on top of S3. It uses Kafka's KRaft mode to manage metadata, which improves its efficiency and scalability in handling stream data. Here's how AutoMQ uses KRaft for metadata management:

Key Features of AutoMQ's Metadata Management with KRaft

- Internal Consensus Protocol: AutoMQ uses KRaft's Raft-based consensus protocol to manage metadata internally, eliminating the need for external systems like ZooKeeper. This simplifies the architecture and reduces operational complexity.
- Controller Quorum: In AutoMQ, a group of brokers forms a quorum to manage metadata. One of these brokers acts as the leader controller, responsible for updating metadata and replicating it across all brokers.
- Metadata Replication: Each broker in AutoMQ maintains a local copy of the metadata. The leader controller ensures that any changes are propagated to all brokers, keeping the cluster consistent.
- Efficient Partition Reassignment: Because partition data is stored in object storage (S3), AutoMQ can reassign a partition quickly by updating the broker-to-partition mapping in KRaft metadata, without physically moving data between brokers.
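When partition data lives in shared object storage, reassignment reduces to changing ownership in metadata. The sketch below illustrates that idea under stated assumptions: the class, the record tuple, and the object keys are hypothetical and do not reflect AutoMQ's actual data model. A reassignment appends one metadata record and updates the owner, while the list of objects holding the partition's data stays untouched.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterMetadata:
    # Hypothetical model: partition -> owning broker, partition -> object keys.
    owners: dict = field(default_factory=dict)
    objects: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # stands in for the KRaft metadata log

    def reassign(self, partition: str, new_broker: int) -> None:
        # Append a metadata record and apply it to the in-memory view.
        # No partition data is copied: the object keys are left as they are.
        self.log.append(("PartitionReassigned", partition, new_broker))
        self.owners[partition] = new_broker


meta = ClusterMetadata(
    owners={"orders-0": 1},
    objects={"orders-0": ["s3://bucket/obj-001", "s3://bucket/obj-002"]},
)
before = list(meta.objects["orders-0"])
meta.reassign("orders-0", new_broker=2)
print(meta.owners["orders-0"])             # 2
print(meta.objects["orders-0"] == before)  # True (same objects, new owner)
```

The cost of the operation is one small metadata record regardless of how much data the partition holds, which is the contrast with reassignment in a shared-nothing Kafka cluster, where new replicas must copy the partition's data.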

How AutoMQ Extends KRaft for Object Storage

- Tailored Metadata Management: AutoMQ extends KRaft to support object storage environments, balancing cost efficiency with high read and write performance. This involves managing metadata related to object storage, such as mapping partitions to MetaStreams.
- Partition Management: When opening a partition, AutoMQ requests the MetaStream ID from the controller. If the MetaStream doesn't exist, it is created, and the mapping is persisted through the KRaft layer.
- Metadata Updates: During data uploads, AutoMQ updates metadata through KRaft Records, ensuring that all nodes have consistent information about object states and stream offsets.
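The partition-open and upload steps can be sketched as a get-or-create lookup followed by metadata records. Everything here is hypothetical: `StreamMetadataController`, the record tuples, and the field names are illustrative stand-ins for AutoMQ's real controller requests and KRaft records, which this article does not specify. For brevity, the MetaStream and the uploaded data share one stream ID space.

```python
from dataclasses import dataclass, field


@dataclass
class StreamMetadataController:
    """Hypothetical controller-side state, rebuilt from KRaft-style records."""
    records: list = field(default_factory=list)             # stands in for the KRaft log
    meta_streams: dict = field(default_factory=dict)        # partition -> MetaStream ID
    stream_end_offsets: dict = field(default_factory=dict)  # stream ID -> end offset
    objects: dict = field(default_factory=dict)             # object ID -> state
    next_stream_id: int = 0

    def get_or_create_meta_stream(self, partition: str) -> int:
        # Called when a broker opens a partition.
        if partition in self.meta_streams:
            return self.meta_streams[partition]
        stream_id = self.next_stream_id
        self.next_stream_id += 1
        # Persist the new mapping through the metadata log, then apply it.
        self.records.append(("MetaStreamCreated", partition, stream_id))
        self.meta_streams[partition] = stream_id
        self.stream_end_offsets[stream_id] = 0
        return stream_id

    def commit_upload(self, object_id: str, stream_id: int, end_offset: int) -> None:
        # After an object is written to object storage, record its state
        # and advance the stream's end offset.
        if end_offset < self.stream_end_offsets[stream_id]:
            raise ValueError("stream offsets must not move backwards")
        self.records.append(("ObjectCommitted", object_id, stream_id, end_offset))
        self.objects[object_id] = "COMMITTED"
        self.stream_end_offsets[stream_id] = end_offset


controller = StreamMetadataController()
sid = controller.get_or_create_meta_stream("orders-0")
print(controller.get_or_create_meta_stream("orders-0") == sid)  # True (mapping reused)
controller.commit_upload("obj-001", sid, end_offset=500)
print(controller.stream_end_offsets[sid])  # 500
```

Because every change goes through the log before it is applied, any node that replays the same records ends up with the same partition-to-MetaStream mappings, object states, and stream offsets.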

Benefits of Using KRaft in AutoMQ

- Simplified Architecture: By using KRaft, AutoMQ avoids external dependencies for metadata management, reducing complexity and potential failure points.
- Improved Scalability and Efficiency: AutoMQ's KRaft-based metadata management supports faster partition reassignment and more efficient cluster scaling compared to traditional Kafka setups.

Overall, AutoMQ's integration of KRaft enhances its operational efficiency, scalability, and compatibility with Kafka protocols, making it a robust solution for cloud-native stream processing.