Overview

Apache Kafka is central to modern real-time data architectures. When deploying Kafka with enterprise capabilities, teams usually weigh two offerings from Confluent, the company founded by Kafka's original creators: Confluent Platform, a self-managed software package, and Confluent Cloud, a fully managed service. For engineers, understanding the differences between the two is crucial for making informed architectural decisions. This blog offers a deep-dive comparison to help you navigate the choice.

Confluent Platform: The Self-Managed Powerhouse

Confluent Platform is an enterprise-grade, self-managed distribution of Apache Kafka. You download the software and run it on infrastructure you control, whether that's on-premises, in a private cloud, or on your own instances in a public cloud.

It bundles open-source Apache Kafka with a suite of tools and commercial features designed to simplify and extend your Kafka deployment. Key components you'll manage include:

- Apache Kafka: The core publish-subscribe messaging system.
- Schema Registry: Manages and validates data schemas, ensuring data consistency across applications.
- ksqlDB: A streaming SQL engine for real-time data processing using SQL-like queries.
- Kafka Connect: A framework, with a rich set of connectors, for streaming data between Kafka and other systems such as databases and object stores.
- Control Center: A web-based GUI for managing and monitoring your Kafka clusters, topics, connectors, and other components.

![Confluent Platform Components [22]](/blog/confluent-cloud-vs-confluent-platform/1.png)

With Confluent Platform, you are responsible for every aspect of deployment, infrastructure management, operations, security configuration, and upgrades. Licensing typically involves an enterprise subscription for commercial features, though some components are available under a community license, and a developer license is available for single-broker development environments. This model offers maximum control over your deployment.
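Schema Registry, one of the components you run yourself on Platform, exposes a REST API, so registering a schema from a deployment script needs no client library. The sketch below builds (but does not send) a registration request, assuming a self-managed registry at `http://localhost:8081` (the registry's default port) and the default `TopicNameStrategy`, under which a topic's value schema lives in the subject `<topic>-value`. The `orders` topic and the schema fields are hypothetical.

```python
import json
import urllib.request

# Hypothetical registry address; 8081 is Schema Registry's default port.
REGISTRY_URL = "http://localhost:8081"

# Hypothetical Avro schema for the values of an "orders" topic.
order_schema = {
    "type": "record",
    "name": "Order",
    "fields": [
        {"name": "order_id", "type": "string"},
        {"name": "amount", "type": "double"},
    ],
}


def build_register_request(topic: str, schema: dict) -> urllib.request.Request:
    """Build a POST /subjects/{subject}/versions request for a value schema."""
    # TopicNameStrategy: the value schema for topic T is registered under "T-value".
    subject = f"{topic}-value"
    # The API expects the schema as a JSON-encoded *string*; schemaType defaults to AVRO.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        url=f"{REGISTRY_URL}/subjects/{subject}/versions",
        data=body,
        method="POST",
        headers={"Content-Type": "application/vnd.schemaregistry.v1+json"},
    )


req = build_register_request("orders", order_schema)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would submit it; the response body carries the schema id.
```

On Confluent Cloud the same API is served by the managed registry, so the difference is who operates the endpoint, not how you call it.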

Confluent Cloud: The Fully Managed Service

Confluent Cloud provides Apache Kafka and its ecosystem as a fully managed, cloud-native service. You don't provision servers, install software, or manage the underlying infrastructure; you consume Kafka as a service.

It is built from the ground up for the cloud on a specialized Kafka engine, known as Kora, designed for elasticity, performance, and operational efficiency. The core components are provided as managed services:

- Managed Apache Kafka: Highly available, scalable Kafka clusters, with the operational complexity handled by the provider.
- Managed Schema Registry: Schema governance without deploying or maintaining the registry yourself.
- Managed ksqlDB: Fully managed SQL-based stream processing.
- Managed Connectors: A library of pre-built, fully managed connectors that simplify integration with various data sources and sinks.
- Managed Apache Flink: A powerful, fully managed stream processing service for complex, stateful applications.

![Confluent Cloud Components [23]](/blog/confluent-cloud-vs-confluent-platform/2.png)

Confluent Cloud is available on the major cloud providers (AWS, Azure, Google Cloud) and generally follows a consumption-based pricing model, with pay-as-you-go options or annual commitments for volume discounts. This model prioritizes ease of use and reduced operational burden.
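From an application's point of view, connecting to Confluent Cloud mostly comes down to authentication: clients connect over TLS with SASL/PLAIN, using a cluster API key and secret as the username and password. The sketch below assembles a librdkafka-style configuration dictionary (the format the `confluent-kafka` Python client accepts); the environment variable names and the bootstrap endpoint are placeholders, not real values.

```python
import os


def confluent_cloud_client_config(env=os.environ) -> dict:
    """Assemble client settings for a Confluent Cloud cluster.

    The environment variable names are hypothetical; use whatever your
    secret store provides.
    """
    return {
        # Cluster bootstrap endpoint shown in the Cloud Console.
        "bootstrap.servers": env["CCLOUD_BOOTSTRAP"],
        # Confluent Cloud requires TLS plus SASL authentication.
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        # The cluster API key and secret act as the SASL username and password.
        "sasl.username": env["CCLOUD_API_KEY"],
        "sasl.password": env["CCLOUD_API_SECRET"],
    }


# Placeholder values for illustration only.
demo = confluent_cloud_client_config({
    "CCLOUD_BOOTSTRAP": "pkc-xxxxx.us-east-1.aws.confluent.cloud:9092",
    "CCLOUD_API_KEY": "MY_KEY",
    "CCLOUD_API_SECRET": "MY_SECRET",
})
# With confluent-kafka installed, this dict can be passed to confluent_kafka.Producer(...).
print(demo["security.protocol"], demo["sasl.mechanisms"])
```

Keeping credentials out of the code and in the environment means the same application can point at a development or production cluster without changes.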

Head-to-Head: Confluent Platform vs. Confluent Cloud

Let's break down the key differences to help you weigh your options:

Operational Model & Management

- Platform: You own the entire operational lifecycle: provisioning hardware (or cloud VMs), installing and configuring every Platform component, setting up monitoring, applying patches and upgrades, and managing disaster recovery. This offers granular control but requires significant Kafka expertise and operational resources.
- Cloud: The provider handles provisioning, maintenance, and upgrades, and ensures the reliability of the underlying service. This drastically reduces your operational burden, letting your team focus on application development rather than infrastructure management. Capacity can often be scaled elastically with demand.

Scalability & Performance

- Platform: Scaling is a manual process. You add brokers, reassign existing partitions so the new brokers actually carry load, increase topic partition counts where needed, and ensure the underlying hardware can support the traffic. Performance depends heavily on your infrastructure choices and tuning expertise. Self-Balancing Clusters, available under an enterprise license, can automate data redistribution across brokers.
- Cloud: Designed for elastic scalability. Capacity is adjusted, often automatically or through simple controls, in consumption units such as Confluent Kafka Units (CKUs) or elastic CKUs (eCKUs). The cloud-native engine is optimized for cloud environments, and the cluster types (Basic, Standard, Dedicated, Enterprise, Freight) offer different performance characteristics and resource limits.

Feature Set & Ecosystem

- Core Kafka & Tools (Schema Registry, ksqlDB, Connect): Both offerings provide these fundamental components. With Platform, you have direct control over their configuration and deployment. In Cloud, they are managed services, which simplifies their use but may expose fewer low-level configuration knobs.

- Stream Processing (Apache Flink):
  - Cloud: Offers Apache Flink as a fully managed, serverless stream processing service. It abstracts away Flink cluster management, state backend configuration, and checkpointing, making complex, stateful streaming applications easier to develop and deploy.
  - Platform: Confluent Platform for Apache Flink is generally available for running Flink applications. It is managed with Confluent Manager for Apache Flink and typically deployed on Kubernetes. This gives you more control over the Flink environment, along with responsibility for managing the Flink clusters and their lifecycle.

- Management Interface:
  - Platform: Control Center is the primary web UI for managing Kafka clusters, topics, brokers, connectors, ksqlDB queries, and Schema Registry, and for monitoring the overall health of your self-managed deployment.
  - Cloud: The Cloud Console is the web interface for provisioning and managing all your Confluent Cloud resources, including Kafka clusters, connectors, ksqlDB applications, Flink compute pools, billing, and user access.

- Stream Governance:
  - Cloud: Offers a multi-tiered Stream Governance suite that includes a Data Portal for self-service discovery (Stream Catalog), interactive end-to-end Stream Lineage visualization, and Data Quality Rules integrated with Schema Registry.
  - Platform: Includes core governance capabilities in its enterprise offering, such as broker-side Schema Validation, Schema Linking for syncing schemas across clusters, and Data Contracts in Schema Registry that can enforce data quality rules. However, the UI-driven Data Portal and interactive Stream Lineage features of the Cloud offering have no prominently documented counterparts in Platform's Control Center.

- Connectors:
  - Platform: You deploy and manage Kafka Connect worker clusters yourself. Connectors are typically downloaded from Confluent Hub, which hosts community-developed, partner-supported, and commercially licensed connectors. You are responsible for the lifecycle, scaling, and monitoring of these self-managed connectors.
  - Cloud: Provides a library of fully managed connectors; the provider handles their infrastructure, deployment, scaling, and maintenance. Billing is usually based on connector tasks and data throughput. The convenience comes with a narrower choice: the fully managed catalog is more curated than the full set of connectors available for self-management on Confluent Hub.
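On Confluent Platform, connectors run on Kafka Connect workers that you operate, and they are usually managed through the Connect REST API. The sketch below builds (without sending) a `POST /connectors` request for a worker assumed to listen on `localhost:8083`, Connect's default REST port. The connector name, file path, and topic are hypothetical; `FileStreamSourceConnector` is the simple example connector included in the Apache Kafka distribution, chosen only because its configuration is small.

```python
import json
import urllib.request

# Hypothetical worker address; 8083 is Kafka Connect's default REST port.
CONNECT_URL = "http://localhost:8083"


def build_create_connector_request(name: str, config: dict) -> urllib.request.Request:
    """Build a POST /connectors request that creates a connector on a Connect cluster."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{CONNECT_URL}/connectors",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


req = build_create_connector_request(
    "orders-file-source",  # hypothetical connector name
    {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",           # tasks are the unit you scale (and, in Cloud, are billed on)
        "file": "/tmp/orders.txt",  # hypothetical input file
        "topic": "orders",
    },
)
# urllib.request.urlopen(req) would submit it; scaling and monitoring the workers stays your job.
```

In Confluent Cloud, the equivalent step is creating a fully managed connector, with no worker cluster for you to run.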

Cost & Total Cost of Ownership (TCO)

- Platform: Costs include license fees for enterprise features, all capital and operating expenses for your infrastructure (servers, storage, networking, power, cooling), and salaries for the skilled staff needed to operate and maintain the platform. If managed efficiently, it can still offer a lower TCO than building everything on open-source Kafka alone, because the enterprise features and vendor support reduce the engineering effort you would otherwise spend.
- Cloud: Uses consumption-based pricing. You pay for what you use across dimensions such as data ingress/egress, storage, Kafka compute units (CKUs/eCKUs), ksqlDB streaming units (CSUs), Flink compute units (CFUs), connector tasks, and premium support tiers. This model aims to reduce TCO by removing infrastructure and operations-staff costs. A cost estimator tool is provided to help project expenses.

Security & Compliance

- Platform: Security is largely your responsibility. You configure network security (firewalls, VPCs), authentication (e.g., SASL or mutual TLS), authorization (ACLs), and encryption (TLS). Enterprise features add Role-Based Access Control (RBAC) and structured audit logs.
- Cloud: Security is a shared responsibility, with the provider securing the cloud infrastructure and the managed services. It typically offers built-in encryption, RBAC, and integration with cloud provider IAM, and may hold compliance certifications (e.g., SOC 2, ISO 27001, HIPAA eligibility) that can simplify your own compliance efforts.

Upgrades & Maintenance

- Platform: You plan and execute all upgrades and maintenance for Kafka brokers, ZooKeeper or KRaft controllers, Schema Registry, Connect, Control Center, and other components. This requires careful adherence to documented upgrade paths. Brokers can usually be upgraded with a rolling restart, one at a time, to avoid a full outage, but each upgrade still needs planning, testing, and a maintenance window.
- Cloud: The provider handles all underlying infrastructure maintenance and service upgrades. It has defined policies for communicating minor updates, major upgrades, and feature deprecations, aiming to minimize disruption.

Support & SLAs

- Platform: Commercial support is available through an enterprise subscription. However, because the deployment runs on your infrastructure, you remain responsible for its uptime and availability.
- Cloud: The provider offers several support plans and Service Level Agreements (SLAs) for the managed services, with uptime commitments of up to 99.99% for resilient multi-AZ cluster configurations.

Making the Choice: Which Path for Your Data Streams?

The decision hinges on your organization's specific needs, technical capabilities, operational preferences, and strategic objectives.

Scenarios Favoring Confluent Platform:

- Data Sovereignty and Full Control: When you have stringent data residency requirements, or need absolute control over every aspect of the Kafka environment, network, and data handling due to compliance or security policies.
- Leveraging Existing Infrastructure: If you have significant investments in on-premises data centers or a private cloud infrastructure that you wish to utilize.
- Skilled In-House Operations Team: Your organization has a dedicated team with deep expertise in managing Apache Kafka and complex distributed systems.
- Need for Deep Customization: If your use case requires specific low-level Kafka configurations, custom component integrations, or modifications not typically exposed in a managed service.
- Access to Bleeding-Edge Features: Occasionally, new features might be available in the self-managed platform slightly before they are fully incorporated into the managed cloud service.
- Hybrid Cloud Deployments: When you need to deploy Kafka at the edge or in on-premises environments that then connect and replicate data to a central cloud instance. The platform can serve these edge and on-premises needs, potentially linking to the cloud service using features like Cluster Linking.

Scenarios Favoring Confluent Cloud:

- Reduced Operational Burden: If your primary goal is to offload infrastructure management, maintenance, and complex operations to focus engineering resources on application development and business logic.
- Speed to Market and Agility: When you need to provision and deploy Kafka clusters quickly, scale elastically, and iterate rapidly without being bogged down by infrastructure setup.
- Limited Kafka Operational Expertise: If your team is strong in application development but lacks the specialized skills or bandwidth to manage a distributed Kafka deployment 24/7.
- OPEX Financial Model: If you prefer a consumption-based, operating-expenditure model over upfront capital expenditure on hardware plus separately purchased software subscriptions.
- Desire for Fully Managed Ecosystem: When you want to leverage fully managed services like serverless Apache Flink for stream processing, and advanced UI-driven governance tools like a discoverable Stream Catalog and interactive Stream Lineage.
- Cloud-First Strategy: If your organization is strategically aligned with using public cloud services and wants a Kafka solution that integrates seamlessly with that environment.

Potential Hurdles and Considerations

No matter which path you choose, be aware of potential challenges:

For Confluent Platform:

- Operational Complexity: The setup, configuration, scaling, monitoring, and upgrading of a distributed system like Kafka is inherently complex, even with the enhancements provided by the platform.
- Resource Commitment: It demands significant investment in infrastructure (hardware, software licenses for commercial features) and skilled personnel.
- Upgrade Management: Upgrades require careful planning, testing, and execution to avoid service disruptions.
- High Availability/Disaster Recovery: Implementing robust HA/DR strategies is your responsibility.

For Confluent Cloud:

- Cost Optimization: Consumption-based costs can escalate if not carefully monitored and optimized. Understanding the pricing dimensions is key.
- Vendor Dependence: While Kafka's open APIs provide portability, heavy reliance on a specific provider's managed ecosystem and unique features can create a degree of vendor dependence.
- Configuration Constraints: Managed services might not offer the same level of granular control over every configuration parameter as a self-managed setup.
- Data Egress Costs: Transferring large volumes of data out of the cloud provider's network can incur significant costs.

Final Thoughts

Both Confluent Platform and Confluent Cloud are powerful, enterprise-grade solutions designed to help organizations succeed with Apache Kafka. The "best" choice is not universal; it's deeply contextual.

If your organization prioritizes ultimate control, has the requisite in-house expertise, and operates under specific constraints that necessitate self-management, Confluent Platform offers a robust foundation. Conversely, if your focus is on agility, reducing operational overhead, and leveraging a rich managed cloud ecosystem, Confluent Cloud provides a compelling, streamlined path to harnessing the power of data in motion. A thorough evaluation of your technical needs, operational capacity, financial models, and strategic roadmap will guide you to the optimal solution.

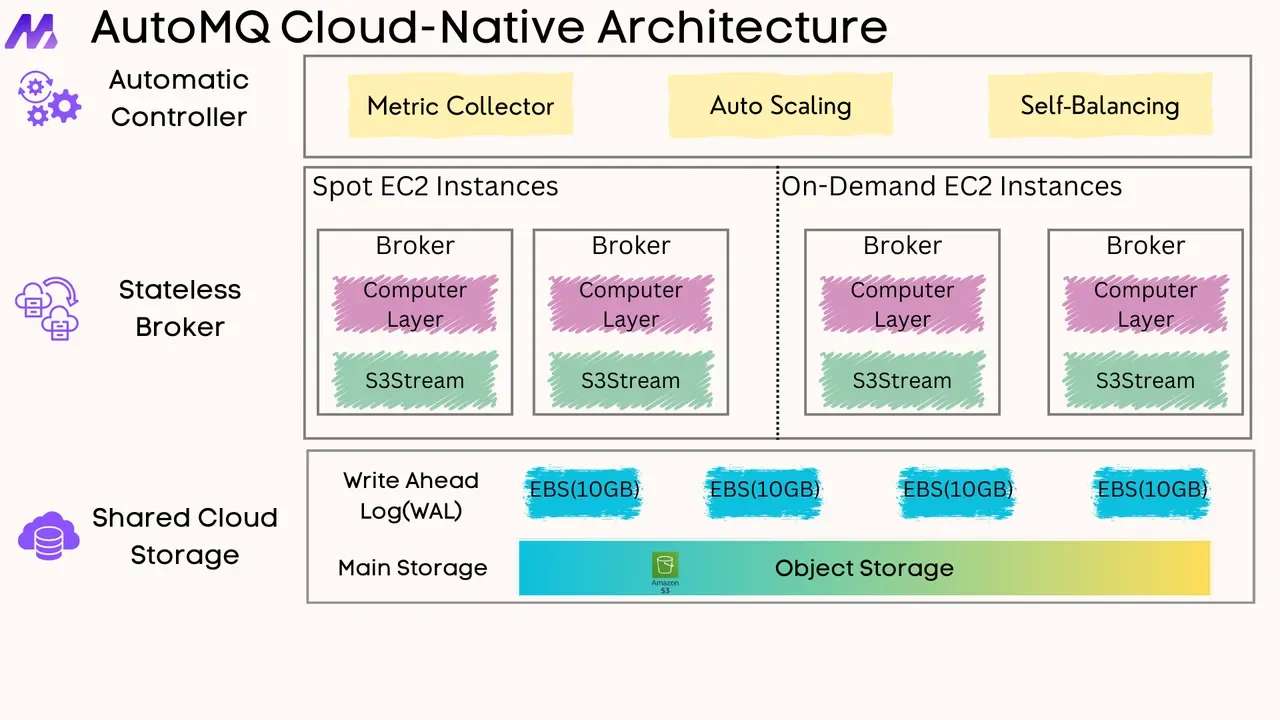

If you find this content helpful, you might also be interested in our product, AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS: 10x more cost-effective, no cross-AZ traffic cost, autoscaling in seconds, and single-digit millisecond latency. AutoMQ's source code is now available on GitHub. Large companies worldwide are using AutoMQ; check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in DataStreaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging