Overview

Apache Kafka has emerged as a cornerstone technology for building real-time data pipelines and streaming applications. Its ability to handle high-throughput, fault-tolerant, and scalable event streams makes it indispensable for use cases ranging from real-time analytics and log aggregation to event-driven architectures and complex event processing. However, deploying and operating Kafka involves critical decisions, chief among them whether to self-host your Kafka cluster or use a managed Kafka service.

This post compares these two deployment models: how each works, who is responsible for what, their pros and cons, and best practices for each. The goal is to give you what you need to choose the model that best fits your organization's technical capabilities, budget, and strategic objectives.

Self-Hosted Apache Kafka

Self-hosting Kafka means you are responsible for every aspect of your Kafka deployment, from provisioning the underlying infrastructure to ongoing operational management.

How It Works & Operational Responsibilities:

- Infrastructure Provisioning: You must select, procure, and configure the hardware (servers with adequate CPU, RAM, and fast storage such as SSDs, often in a RAID configuration) and the networking infrastructure. Operating system choices (typically Linux) and JVM tuning are also under your purview.
- Installation and Configuration: You install Kafka on each server and configure numerous broker parameters for performance, retention, replication, and security. Clusters on Kafka versions before 4.0 may still depend on a ZooKeeper ensemble. Kafka 4.0 removed ZooKeeper support, so new clusters run in KRaft mode, which requires specific configuration for the controller and broker roles.
- Cluster Management: Ongoing tasks include managing topics and partitions, handling broker additions and removals, rebalancing partitions, and monitoring cluster health and performance with tools such as Prometheus and Grafana, which typically means exporting Kafka's JMX metrics.
- Maintenance and Upgrades: You apply patches, updates, and version upgrades to both Kafka and the underlying OS, usually through rolling upgrades that restart one broker at a time to minimize downtime.
- Security: Implementing robust security is critical. This includes encryption in transit (TLS), authentication (SASL mechanisms such as SCRAM, or mutual TLS), and authorization (Access Control Lists, or ACLs). Secure credential storage and regular audits are also vital.
- Disaster Recovery (DR) & Backup: You must design and implement your own DR strategy, which involves backing up topic data, consumer offsets, configurations, and ACLs. Common multi-datacenter DR patterns include stretch clusters and asynchronous replication with tools such as MirrorMaker 2.
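To make the KRaft configuration step under Installation and Configuration more concrete, here is a minimal sketch that renders a `server.properties` for a node running in combined broker+controller mode with a static controller quorum. The property names (`process.roles`, `node.id`, `controller.quorum.voters`, `listeners`, `advertised.listeners`, `controller.listener.names`, `inter.broker.listener.name`, `listener.security.protocol.map`, `log.dirs`) are standard Kafka settings. The host names, ports, and data path are hypothetical placeholders, and the PLAINTEXT listeners are for illustration only; production clusters should use the TLS/SASL setup described under Security. Newer Kafka releases also support a dynamic quorum via `controller.quorum.bootstrap.servers`, so check the documentation for your version.

```python
from typing import Dict


def render_kraft_properties(node_id: int, voters: Dict[int, str], host: str,
                            log_dir: str = "/var/lib/kafka/data") -> str:
    """Render a minimal server.properties for a combined broker+controller node.

    voters maps each controller's node.id to its "host:port" controller endpoint.
    All host names, ports, and paths are illustrative placeholders.
    """
    if node_id not in voters:
        raise ValueError("a combined-mode node must also be a controller voter")
    controller_port = voters[node_id].rsplit(":", 1)[1]
    props = {
        "process.roles": "broker,controller",
        "node.id": str(node_id),
        # Static quorum: "id@host:port" for every controller, comma-separated.
        "controller.quorum.voters": ",".join(
            f"{vid}@{endpoint}" for vid, endpoint in sorted(voters.items())
        ),
        "listeners": f"PLAINTEXT://:9092,CONTROLLER://:{controller_port}",
        "advertised.listeners": f"PLAINTEXT://{host}:9092",
        "controller.listener.names": "CONTROLLER",
        "inter.broker.listener.name": "PLAINTEXT",
        # Illustration only: use SSL / SASL_SSL listeners in production.
        "listener.security.protocol.map": "CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT",
        "log.dirs": log_dir,
    }
    return "".join(f"{key}={value}\n" for key, value in props.items())


# Hypothetical three-node cluster.
voters = {1: "kafka-1:9093", 2: "kafka-2:9093", 3: "kafka-3:9093"}
print(render_kraft_properties(1, voters, host="kafka-1"))
```

Before first start, each node's storage directory must be formatted with a shared cluster ID. For example, generate one with `bin/kafka-storage.sh random-uuid`, then run `bin/kafka-storage.sh format -t <cluster-id> -c config/server.properties` on every node.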
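On the client side, the Security item usually translates into a handful of connection properties. The sketch below builds them for the Java client when brokers expose a SASL_SSL listener with SCRAM-SHA-512. The property names (`security.protocol`, `sasl.mechanism`, `sasl.jaas.config`, `ssl.truststore.location`, `ssl.truststore.password`) are standard Kafka client settings. The user name, passwords, and truststore path are hypothetical, and real secrets should come from a secret store, not source code.

```python
from typing import Dict


def scram_client_properties(username: str, password: str,
                            truststore_path: str, truststore_password: str) -> Dict[str, str]:
    """Java-client properties for TLS encryption plus SCRAM-SHA-512 authentication."""
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "security.protocol": "SASL_SSL",  # TLS on the wire, SASL for identity
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.jaas.config": jaas,
        "ssl.truststore.location": truststore_path,  # CA used to verify broker certs
        "ssl.truststore.password": truststore_password,
    }


def to_properties(props: Dict[str, str]) -> str:
    """Serialize to key=value lines (no escaping; adequate for these simple values)."""
    return "".join(f"{key}={value}\n" for key, value in props.items())


# Hypothetical placeholders; load real secrets from a secret store instead.
print(to_properties(scram_client_properties(
    "orders-service", "change-me", "/etc/kafka/truststore.jks", "change-me")))
```

This only covers the client. The matching SCRAM credential and the ACLs that authorize the principal must also exist on the cluster. With self-hosted Kafka they are typically created using the `kafka-configs.sh` and `kafka-acls.sh` tools.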

Pros of Self-Hosting:

- Maximum Control and Flexibility: You have complete control over hardware selection, Kafka configurations, network topology, and security policies, allowing for deep customization and optimization for specific workloads.
- Potential Long-Term Cost Savings: For stable, predictable, and large-scale workloads, self-hosting can sometimes be more cost-effective in the long run by leveraging existing infrastructure or optimizing hardware procurement, avoiding managed service markups.
- Data Residency and Compliance: Full control over data location makes it easier to meet strict data residency and compliance requirements.
- No Vendor Lock-in: You are not tied to a specific cloud provider's ecosystem or pricing model for your Kafka service.
- Deep Expertise Building: Managing Kafka in-house fosters deep expertise within your team.

Cons of Self-Hosting:

- High Operational Overhead: The day-to-day management, monitoring, patching, and troubleshooting of a Kafka cluster are resource-intensive and complex.
- Requires Deep Expertise: Successfully running Kafka in production demands a skilled team with in-depth knowledge of Kafka internals, distributed systems, networking, and infrastructure management.
- Significant Upfront Investment: Setting up the infrastructure can involve substantial capital expenditure (CAPEX).
- Complexity in Scaling and Maintenance: Scaling the cluster, performing upgrades, and ensuring high availability require careful planning and execution.
- Time-to-Market: The initial setup and configuration can be time-consuming, potentially delaying project timelines.

Best Practices for Self-Hosted Kafka:

- Capacity Planning: Carefully plan storage (considering retention, message size, and replication), memory (for the JVM and the page cache), CPU (for processing and I/O threads), and network bandwidth.
- Hardware and OS: Use fast SSDs, consider RAID 10, ensure sufficient RAM (e.g., 32 GB or more per broker), use multi-core CPUs, and run on a stable Linux distribution. Tune JVM settings, especially garbage collection.
- Security Hardening: Implement end-to-end encryption, strong authentication (SASL/mTLS), fine-grained authorization (ACLs), and regularly audit configurations.
- Monitoring: Implement comprehensive monitoring for broker health, producer/consumer metrics, resource utilization (CPU, disk, network), consumer lag, and JVM performance. Use JMX metrics and tools such as Prometheus and Grafana.
- Disaster Recovery: Plan for multi-datacenter DR using patterns such as stretch clusters or asynchronous replication. Regularly test backup and recovery procedures.
- Performance Tuning: Optimize broker, producer, and consumer configurations (e.g., batch sizes, linger times, fetch sizes, compression) and partitioning strategies.
- Upgrades: Follow rolling upgrade procedures, test thoroughly in staging environments, and monitor closely after each upgrade.
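The capacity-planning item above boils down to simple arithmetic. The following back-of-envelope sketch estimates disk and network load per broker, assuming partitions and leaders are spread evenly across brokers and consumers read from partition leaders. The 30% headroom default and the example workload are hypothetical. The model also ignores index files, log compaction, tiered storage, and OS overhead.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class BrokerEstimate:
    disk_tb: float       # disk to provision per broker, decimal terabytes
    ingress_mb_s: float  # produce + replication traffic arriving at each broker
    egress_mb_s: float   # replication + consumer traffic leaving each broker


def estimate_per_broker(produce_mb_s: float, retention_days: float,
                        replication_factor: int, brokers: int,
                        consumer_groups: int, headroom: float = 0.3) -> BrokerEstimate:
    """Back-of-envelope sizing; assumes load is spread evenly across brokers."""
    if replication_factor > brokers:
        raise ValueError("replication factor cannot exceed the number of brokers")
    # Every produced byte is kept replication_factor times for the retention window.
    total_mb = produce_mb_s * SECONDS_PER_DAY * retention_days * replication_factor
    disk_tb = total_mb / brokers / 1_000_000 * (1 + headroom)
    # Leaders receive the produced bytes; followers receive RF - 1 further copies.
    ingress = produce_mb_s * replication_factor / brokers
    # Leaders send RF - 1 copies to followers plus one copy per consumer group.
    egress = produce_mb_s * (replication_factor - 1 + consumer_groups) / brokers
    return BrokerEstimate(disk_tb, ingress, egress)


# Hypothetical workload: 50 MB/s produced, 7-day retention, RF 3, 6 brokers, 2 groups.
print(estimate_per_broker(50, 7, 3, 6, consumer_groups=2))
```

For this made-up workload, each broker needs roughly 19.7 TB of disk and handles about 25 MB/s in and 33 MB/s out. Estimates like these are a starting point for choosing disk and NIC sizes, not a substitute for load testing.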

Managed Kafka Services

Managed Kafka services offer Kafka as a turnkey solution: a third-party provider handles the infrastructure and much of the operational management.

How They Work & Division of Responsibilities:

Managed services abstract away the complexities of setting up and maintaining Kafka clusters. The provider typically manages:

- Hardware provisioning and maintenance.
- Kafka software installation, patching, and upgrades.
- Cluster availability and reliability (often backed by SLAs).
- Basic security of the underlying infrastructure.
- In some cases, automated scaling and partition rebalancing.

The customer is generally responsible for:

- Application-level security and access control (configuring the ACLs or IAM roles provided by the service).
- Data modeling (topic design, partitioning strategy).
- Producer and consumer logic.
- Monitoring application-specific metrics and consumer lag.
- Cost management and optimization within the service.
- Governance of their own data, though some providers offer tools to assist.
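Consumer lag, which remains the customer's job to watch on a managed service, is per-partition arithmetic: the partition's log-end offset minus the consumer group's committed offset. This matches the LAG column printed by `kafka-consumer-groups.sh --describe`. The sketch below assumes you have already fetched both sets of offsets, for example through your client library's admin API or the provider's metrics. The offsets in the example are hypothetical.

```python
from typing import Dict, Optional


def consumer_lag(end_offsets: Dict[int, int],
                 committed: Dict[int, Optional[int]]) -> Dict[int, Optional[int]]:
    """Per-partition lag = log-end offset minus the group's committed offset.

    Partitions without a committed offset are reported as None, because the
    consumer's starting position depends on its auto.offset.reset policy.
    """
    lag: Dict[int, Optional[int]] = {}
    for partition, end in end_offsets.items():
        offset = committed.get(partition)
        # Clamp at 0 in case the two offsets were read at slightly different times.
        lag[partition] = None if offset is None else max(end - offset, 0)
    return lag


def total_lag(lag: Dict[int, Optional[int]]) -> int:
    """Sum the known per-partition lags, skipping partitions with no commit."""
    return sum(value for value in lag.values() if value is not None)


# Hypothetical offsets for a 3-partition topic.
lag = consumer_lag({0: 1_500, 1: 980, 2: 2_000}, {0: 1_450, 1: 980, 2: None})
print(lag, total_lag(lag))  # {0: 50, 1: 0, 2: None} 50
```

Alerting on the trend of total lag, or on lag expressed as time behind the head, is usually more useful than a fixed offset threshold, because the same offset count means different delays at different throughputs.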

Pros of Managed Kafka Services:

- Reduced Operational Burden: Significantly lowers the effort required for cluster setup, maintenance, and management, freeing engineering teams to focus on application development.
- Faster Time-to-Market: Quick provisioning lets teams start using Kafka much sooner than they could by setting up a self-hosted cluster.
- Scalability and Elasticity: Many services make scaling easy, sometimes automatic, to handle fluctuating workloads.
- Reliability and SLAs: Providers typically publish Service Level Agreements (SLAs) that commit to an uptime target, usually with service credits if the target is missed.
- Expert Support: Access to the provider's support engineers can be invaluable for troubleshooting and optimization.
- Predictable Operational Expenditure (OPEX): Costs are typically based on usage or provisioned capacity, shifting spending from CAPEX to OPEX.
- Built-in Security Features: Services often come with pre-configured security measures and integration with the cloud provider's IAM system.

Cons of Managed Kafka Services:

- Potentially Higher Ongoing Costs: Subscription or usage-based fees can be higher than the raw infrastructure costs of a self-hosted setup, especially at very large, stable scales.
- Less Control and Flexibility: Configuration options may be limited compared to a self-hosted environment. Customizations might not always be possible.
- Vendor Lock-in: Relying on a specific provider can lead to vendor lock-in, making future migrations more challenging.
- Service Limitations: Providers may impose quotas or limitations on resources, features (e.g., no JMX access on some services), or Kafka versions.
- Data Egress Costs: Transferring data out of the cloud provider's network can incur significant costs.
- Complexity in Hybrid Environments: Integrating managed Kafka services with on-premises systems can introduce networking and security complexities.

Best Practices for Managed Kafka Services:

- Understand Pricing Models: Thoroughly evaluate the pricing dimensions of different providers (e.g., throughput, storage, partitions, cluster hours, data transfer).
- Right-size Resources and Select Appropriate Tiers: Choose service tiers and resource allocations based on your actual workload requirements to avoid over-provisioning.
- Leverage Built-in Monitoring and Alerts: Use the monitoring tools provided by the service and set up alerts for key metrics and cost thresholds.
- Optimize Data Transfer: Minimize cross-zone and cross-region data transfer where possible to reduce costs. Where the service supports it, use rack awareness, for example letting consumers fetch from a replica in their own availability zone.
- Implement Data Retention and Compression: Configure appropriate data retention policies and enable message compression to manage storage costs.
- Secure Your Data and Access: Configure authentication, authorization (using service-provided IAM or Kafka ACLs), and encryption as offered by the provider.
- Understand SLAs: Carefully review the provider's SLA to understand uptime guarantees, service credit policies, and exclusions.
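To see why the data-transfer item matters, here is a simplified model of how many bytes cross availability-zone boundaries for each byte produced. It assumes clients and partition leaders are spread uniformly across AZs, every replica of a partition sits in a different AZ, and each consumer group reads every byte once. "Follower fetching" refers to Kafka's rack-aware replica selection. This feature is enabled by setting `broker.rack` and `replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector` on brokers and `client.rack` on consumers, and whether a managed service exposes it varies by provider.

```python
from fractions import Fraction


def cross_az_per_produced_byte(num_azs: int, replication_factor: int,
                               consumer_groups: int,
                               follower_fetching: bool) -> Fraction:
    """Expected cross-AZ bytes per produced byte under a simplified uniform model."""
    if not 1 <= replication_factor <= num_azs:
        raise ValueError("model assumes 1 <= replication_factor <= num_azs")
    # A client in one AZ reaches a leader in another AZ with probability (n - 1) / n.
    remote_leader = Fraction(num_azs - 1, num_azs)
    produce = remote_leader
    # Each follower lives in a different AZ from its leader, so every copy crosses.
    replication = Fraction(replication_factor - 1)
    if follower_fetching:
        # A consumer reads locally when its AZ holds a replica: probability RF / n.
        consume_each = Fraction(num_azs - replication_factor, num_azs)
    else:
        consume_each = remote_leader
    return produce + replication + consumer_groups * consume_each


# 3 AZs, RF 3, one consumer group, with and without follower fetching.
print(cross_az_per_produced_byte(3, 3, 1, follower_fetching=False))  # 10/3
print(cross_az_per_produced_byte(3, 3, 1, follower_fetching=True))   # 8/3
```

To turn this into a cost figure, multiply by your monthly produced volume and your provider's per-GB cross-AZ price. Check the provider's billing terms, since some services do not bill you for the replication term.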

Side-by-Side Comparison

| Aspect | Self-Hosted Kafka | Managed Kafka Service |
|---|---|---|
| Infrastructure Management | Full responsibility (hardware, OS, network) | Managed by the provider |
| Kafka Operations | Full responsibility (setup, configuration, upgrades, DR) | Largely managed by the provider; some customer configuration |
| Initial Setup Time | Days to weeks | Minutes to hours |
| Control & Customization | High | Limited to what the provider offers |
| Expertise Required | Deep Kafka and infrastructure knowledge | Less Kafka operations expertise needed |
| Cost Model | Upfront CAPEX plus ongoing OPEX | Primarily OPEX (subscription or usage-based) |
| Scalability | Manual; requires planning and possibly new hardware | Often automated or on-demand, elastic |
| Performance | Can be highly optimized; depends on your setup | Generally good and provider-optimized, with possible overhead and fewer tuning options |
| Reliability/HA | Implemented by you; complex | Built-in redundancy, backed by provider SLAs |
| Security | Implemented by you (encryption, authN/authZ) | Built-in features, integration with cloud IAM |
| Monitoring | Requires external tools (e.g., Prometheus) | Often built in, with integrations |
| Time-to-Market | Slower | Faster |
| Vendor Lock-in | Low | Possible, depending on provider-specific features |
| Data Governance Tools | Bring your own (e.g., a separate schema registry) | Varies; some offer integrated schema registries and catalogs |

![Comparison of Self Hosted Services and Managed Services [79]](/blog/self-hosted-kafka-vs-managed-kafka/1.png)

Making the Right Choice: Self-Hosted or Managed?

The decision between self-hosting Kafka and using a managed service depends on several factors unique to your organization:

- Team Expertise and Resources: Do you have a dedicated team with deep Kafka operational expertise? If not, a managed service can significantly lower the barrier to entry.
- Budget and Cost Structure: Weigh upfront CAPEX against ongoing OPEX. Self-hosting may look cheaper on raw infrastructure, but the total cost of ownership (TCO), including operations staff, training, and potential downtime, must be factored in. Managed services offer predictable costs but can become expensive at high scale if not optimized.
- Control and Customization Needs: If you require fine-grained control over every aspect of your Kafka configuration and underlying infrastructure, or need customizations that managed providers do not offer, self-hosting is likely the better option.
- Time-to-Market: If deployment speed and keeping developers focused on applications rather than infrastructure are paramount, managed services offer a significant advantage.
- Scalability Requirements: Managed services often provide easier, sometimes automatic, scaling, which benefits workloads with high variability.
- Security and Compliance: Both models can be secure, but responsibility for implementation differs. Managed services often come with compliance certifications out of the box, while self-hosting gives you full control to meet specific, stringent requirements.
- Existing Infrastructure and Cloud Strategy: Your current infrastructure (on-premises data centers vs. cloud-native) and overall cloud strategy will influence the decision. Hybrid scenarios might involve a mix of both or require careful integration planning.
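The TCO point under Budget and Cost Structure is easier to reason about when every cost term is written down. The sketch below compares monthly TCO for the two models. The cost categories follow the factors listed above (hardware, infrastructure, staff time including training, outages, service fees, data transfer). All numbers in the example are made up, not real prices or benchmarks.

```python
from dataclasses import dataclass


@dataclass
class SelfHostedCosts:
    hardware_capex: float         # one-off hardware purchase, amortized below
    amortization_months: int
    monthly_infra_opex: float     # power, rack space or VMs, storage, network
    engineers_fte: float          # people-time spent operating Kafka (incl. training)
    monthly_cost_per_fte: float
    expected_outage_cost: float = 0.0  # your own estimate of monthly downtime cost


@dataclass
class ManagedCosts:
    monthly_service_fee: float    # cluster hours, throughput, storage, partitions
    monthly_data_transfer: float  # egress / cross-AZ charges billed by the provider
    engineers_fte: float          # usually smaller than self-hosted, but not zero
    monthly_cost_per_fte: float
    expected_outage_cost: float = 0.0


def monthly_tco_self_hosted(c: SelfHostedCosts) -> float:
    return (c.hardware_capex / c.amortization_months + c.monthly_infra_opex
            + c.engineers_fte * c.monthly_cost_per_fte + c.expected_outage_cost)


def monthly_tco_managed(c: ManagedCosts) -> float:
    return (c.monthly_service_fee + c.monthly_data_transfer
            + c.engineers_fte * c.monthly_cost_per_fte + c.expected_outage_cost)


# Purely hypothetical inputs for illustration.
self_hosted = SelfHostedCosts(360_000, 36, 4_000, 1.5, 15_000)
managed = ManagedCosts(20_000, 3_000, 0.25, 15_000)
print(monthly_tco_self_hosted(self_hosted), monthly_tco_managed(managed))
```

With these invented inputs the managed option comes out cheaper. Different staffing levels, scale, or negotiated prices can easily reverse the result, which is why it is worth modeling with your own figures.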

Conclusion

Choosing between self-hosted Kafka and a managed Kafka service involves a trade-off between control, cost, and operational convenience. Self-hosting offers ultimate control and potential long-term cost benefits for large, stable deployments but demands significant expertise and operational effort. Managed services provide ease of use, faster deployment, and reduced operational burden, making Kafka accessible to a broader range of organizations, albeit with potential trade-offs in cost and flexibility.

Carefully evaluate your organization's specific needs, resources, and strategic goals. By understanding the nuances of each approach, you can select the Kafka deployment model that will best empower your real-time data streaming initiatives and drive business value.

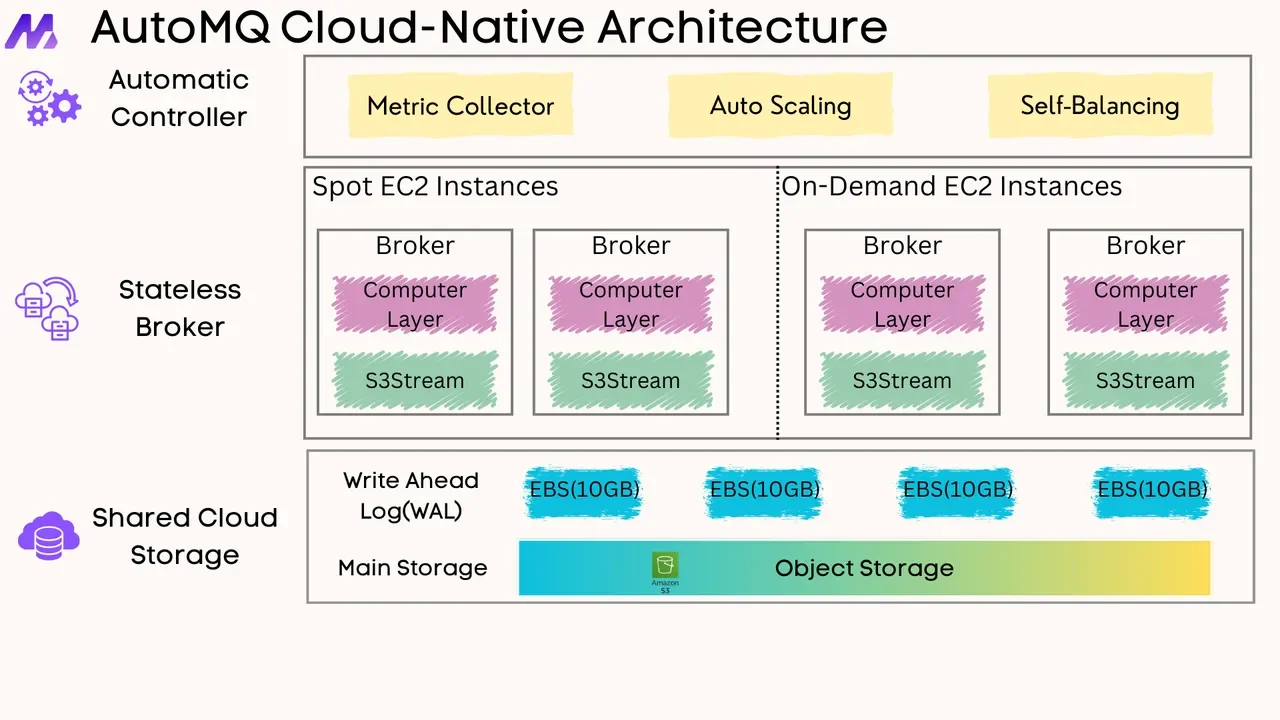

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS. 10x cost-effective. No cross-AZ traffic cost. Autoscaling in seconds. Single-digit ms latency. AutoMQ is now source-available on GitHub. Large companies worldwide are using AutoMQ. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in Data Streaming Platform
- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+
- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity
- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ
- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)
- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays
- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging