Databend is a cloud-native data warehouse written in Rust, designed for cloud architectures and built on object storage. It gives enterprises a big data analytics platform with an integrated lakehouse architecture and separate compute and storage resources.

This article outlines the steps to import data from AutoMQ into Databend using bend-ingest-kafka.

Environment Setup

Prepare Databend Cloud and Test Data

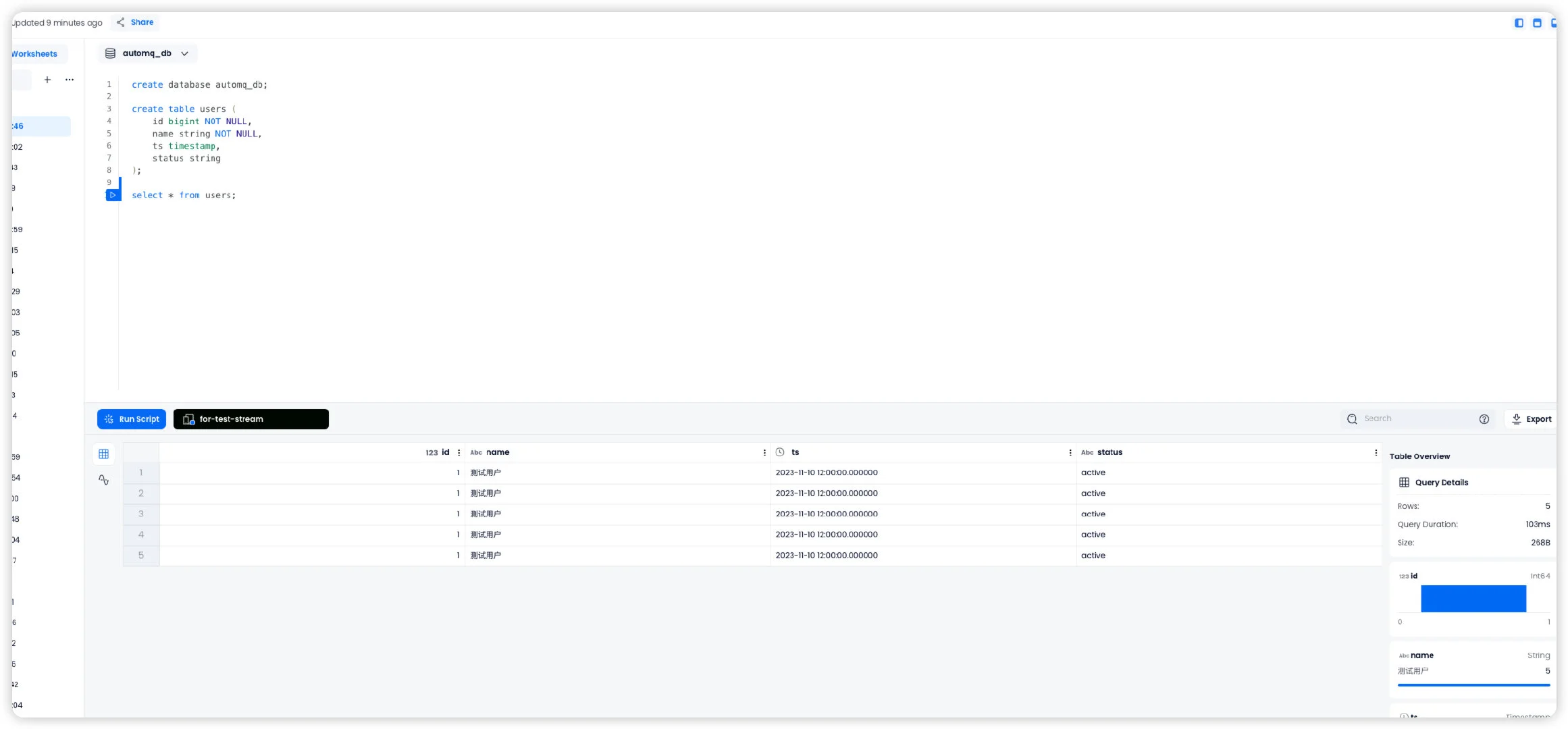

First, log in to Databend Cloud and start a warehouse. Then create a database and a test table in a worksheet:

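```sql
create database automq_db;

-- Qualify the table with the database so it matches --databend-table="automq_db.users" later on
create table automq_db.users (
    id bigint NOT NULL,
    name string NOT NULL,
    ts timestamp,
    status string
);
```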

Prepare AutoMQ and Test Data

Follow the Stand-alone Deployment guide to set up AutoMQ, and make sure that AutoMQ and Databend can reach each other over the network.

Then create a topic named example_topic in AutoMQ and write JSON test data to it, as described below.

Create a Topic

To create a topic with the Apache Kafka® command-line tools, make sure you have access to a Kafka environment and that the Kafka service is running. For example:

```shell
./kafka-topics.sh --create --topic example_topic --bootstrap-server 10.0.96.4:9092 --partitions 1 --replication-factor 1
```

Once the topic is created, run the following command to confirm that it exists:

```shell
./kafka-topics.sh --describe --topic example_topic --bootstrap-server 10.0.96.4:9092
```

Generate Test Data

Create a JSON test record whose fields match the columns of the `users` table:

```json
{
  "id": 1,
  "name": "testuser",
  "ts": "2023-11-10T12:00:00",
  "status": "active"
}
```

Write Test Data

Write the test data to the `example_topic` topic, either with Kafka's command-line tools or programmatically. Here is an example using the command-line producer:

```shell
echo '{"id": 1, "name": "testuser", "ts": "2023-11-10T12:00:00", "status": "active"}' | ./kafka-console-producer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic
```
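Because the ingest job is configured with `--batch-size=5`, writing several records at once can make the import easier to observe. The following POSIX shell sketch generates N JSON records, one per line, whose keys follow the columns of `automq_db.users`. The function name `gen_users` and the generated names (`testuser1`, `testuser2`, ...) are hypothetical and only for illustration.

```shell
#!/bin/sh
# gen_users (hypothetical helper): print N JSON records, one per line,
# with keys matching the columns of automq_db.users (id, name, ts, status).
gen_users() {
  n="$1"
  i=1
  while [ "$i" -le "$n" ]; do
    printf '{"id": %d, "name": "testuser%d", "ts": "2023-11-10T12:00:00", "status": "active"}\n' "$i" "$i"
    i=$((i + 1))
  done
}
```

For example, `gen_users 5 | ./kafka-console-producer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic` writes five records, because the console producer sends each input line as a separate message.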

Use the following command to view the data just written to the topic:

```shell
./kafka-console-consumer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic --from-beginning
```

Create a bend-ingest-kafka Job

bend-ingest-kafka consumes messages from a Kafka topic and writes them to a Databend table in batches. Once bend-ingest-kafka is deployed, start the import job, pointing it at the AutoMQ broker:

```shell
bend-ingest-kafka \
  --kafka-bootstrap-servers="10.0.96.4:9092" \
  --kafka-topic="example_topic" \
  --kafka-consumer-group="Consumer Group" \
  --databend-dsn="https://cloudapp:password@host:443" \
  --databend-table="automq_db.users" \
  --data-format="json" \
  --batch-size=5 \
  --batch-max-interval=30s
```

Parameter Description

databend-dsn

The DSN used to connect to your warehouse. Databend Cloud provides it; see the Databend Cloud documentation on connecting to a warehouse for details.

batch-size

bend-ingest-kafka buffers incoming messages until batch-size of them have accumulated, then writes them to Databend as a single batch.
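To see what batch-size means for a small test, the sketch below splits N messages into full batches and a remainder. It is a minimal illustration under an assumption: that a batch is written each time batch-size messages have accumulated, and that leftover messages wait for more data or, presumably, for `--batch-max-interval` to elapse. The helper name `batch_split` is hypothetical.

```shell
#!/bin/sh
# batch_split (hypothetical helper): report how N messages divide into
# batches of size S, assuming a flush each time S messages accumulate.
batch_split() {
  msgs="$1"
  size="$2"
  full=$((msgs / size))   # batches that fill up and are written right away
  rest=$((msgs % size))   # messages still waiting in the buffer
  echo "full_batches=$full pending=$rest"
}

batch_split 1 5    # the single test record from this guide with --batch-size=5
batch_split 12 5
```

With one test record and `--batch-size=5`, no batch fills up, so under this assumption the row may only appear in Databend once the 30s `--batch-max-interval` has passed.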

Validate Data Import

Open a Databend Cloud worksheet and query the automq_db.users table, for example with `select * from automq_db.users;`, to verify that the data from AutoMQ has been synchronized to the Databend table.