Overview

The year is 2025, and the data landscape is more complex than ever. Organizations are grappling with vast volumes of data from diverse sources, making discoverability, governance, and understanding critical for success. At the heart of managing this complexity lies the catalog service. But what exactly is a catalog service in today's tech world, and how do you choose the best one for your needs? This post will delve into the core concepts, compare solutions, and highlight best practices for catalog services in 2025.

What is a Catalog Service?

In essence, a catalog service acts as a centralized system of record for all your data assets. Think of it as an intelligent library for your data, where assets are not just stored but are also described, organized, and made easily discoverable. In modern data architectures, especially those involving event streaming and distributed systems, "catalog service" typically encompasses several key components:

- Data Catalogs: These are inventories of data assets that provide capabilities to manage and search for data across an organization. They help users find, understand, and assess the quality and trustworthiness of data. Key features often include metadata discovery (often via crawlers), rich search functionality, data lineage visualization, and collaboration tools.

- Schema Registries: Crucial for systems dealing with event streaming (e.g., Apache Kafka), schema registries manage the schemas (such as Avro, Protobuf, or JSON Schema) for data in transit. They ensure that data producers and consumers share an understanding of the data structure and can handle schema evolution gracefully through compatibility checks and versioning.

- Event Catalogs: More specialized than general data catalogs, event catalogs focus on documenting and discovering event-driven architectures. They help teams understand event streams, the services that produce and consume them, and their interrelationships.

- API Catalogs: As APIs become central to modern application development, API catalogs provide a centralized, searchable repository for all APIs (internal, partner, external) along with their metadata and documentation. This aids discovery, reuse, and governance of API assets.

- Feature Stores: Built specifically for machine learning workflows, feature stores are central repositories for storing, organizing, and serving the features used to train ML models and to make real-time predictions. They promote consistency and reusability of features across ML projects.
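To make the "compatibility checks" that schema registries perform more concrete, here is a minimal sketch of a backward-compatibility rule: a new schema can read data written with the old one if every field it adds carries a default and no shared field changes type. The `Field` class and `backward_compatibility_errors` function are hypothetical names for illustration. This is a simplification and not the actual logic of any particular registry or of the Avro specification, which also handles type promotions, aliases, and nested types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    """Hypothetical, flattened view of one schema field."""
    name: str
    type: str
    has_default: bool = False


def backward_compatibility_errors(old, new):
    """Return the reasons why `new` could not read data written with `old`.

    An empty list means the change passes this simplified check.
    """
    old_by_name = {field.name: field for field in old}
    errors = []
    for field in new:
        previous = old_by_name.get(field.name)
        if previous is None:
            # A field unknown to old writers needs a default to fill the gap.
            if not field.has_default:
                errors.append(f"new field '{field.name}' has no default")
        elif previous.type != field.type:
            errors.append(
                f"field '{field.name}' changed type {previous.type} -> {field.type}"
            )
    return errors


v1 = [Field("id", "long"), Field("email", "string")]
v2 = v1 + [Field("country", "string", has_default=True)]
v3 = [Field("id", "string"), Field("email", "string"), Field("plan", "string")]

print(backward_compatibility_errors(v1, v2))  # no errors: safe to register
print(backward_compatibility_errors(v1, v3))  # two errors: reject the change
```

A registry would typically run a check of this kind when a producer tries to register a new schema version and reject the registration if any errors come back.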

The overarching goal of these catalog services is to enhance data governance, improve data discovery, enable self-service analytics, and ensure that data is a well-understood and trusted asset.

Core Concepts and Why They Matter

Understanding the fundamental concepts behind catalog services is key to appreciating their value:

- Metadata Management: This is the cornerstone of any catalog service. Metadata (data about data) includes technical details (schema, data types, location), business context (definitions, ownership, usage guidelines), and operational information (freshness, quality scores, lineage). Effective metadata management ensures that data assets are not just discoverable but also understandable and trustworthy.

- Data Discovery & Search: The ability for users to easily find relevant data assets is a primary function. Modern catalogs offer powerful search capabilities, often with filtering, faceting, and sometimes natural language processing (NLP) or AI-assisted search to make discovery intuitive.

- Data Lineage: Understanding the origin, movement, and transformation of data is critical for root cause analysis, impact assessment, and regulatory compliance. Data lineage visualization, often down to the column level, is a highly sought-after feature.

- Data Governance: Catalog services are integral to implementing data governance frameworks. They help enforce data quality standards, manage access controls, classify sensitive data (e.g., PII), and ensure compliance with regulations like GDPR or CCPA.

- Collaboration: Modern catalogs often include features that foster collaboration among data users, such as annotations, ratings, comments, Q&A, and the ability to share insights or certify datasets. This helps capture tribal knowledge and build a community around data.

- Automation & AI/ML: Increasingly, catalog services use AI and machine learning to automate tasks like metadata ingestion, data classification (especially for PII), and anomaly detection in data quality, and even to suggest relevant datasets or generate descriptions. This "active metadata" approach makes catalogs more intelligent and less reliant on manual effort.
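As an illustration of how lineage supports impact assessment, the sketch below stores lineage as a simple directed graph and walks it breadth-first to list everything downstream of an asset. The asset names and the `downstream_assets` helper are made up for this example. Real catalogs usually persist lineage in a graph store and track it at column level, which this sketch does not attempt.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets derived from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customer_ltv": [],
    "dashboard.finance": [],
}


def downstream_assets(lineage, start):
    """Return every asset reachable from `start`, in breadth-first order."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Which assets might be affected by a change to staging.orders?
print(downstream_assets(LINEAGE, "staging.orders"))
```

Reversing the edges and running the same walk gives the upstream view, which is the direction used for root cause analysis.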

![Data Catalog Overview](/blog/best-data-catalog-service-2025-guide/1.png)

Key Solutions and Comparative Overview (2025 Landscape)

The catalog service market in 2025 offers a diverse range of solutions, from open-source projects to comprehensive enterprise platforms, often integrated within larger data fabric or data management clouds.

| Feature Area | Open Source (e.g., Apache Atlas, DataHub, Amundsen) | Cloud Provider (e.g., AWS Glue Data Catalog, Microsoft Purview (formerly Azure Purview), Google Cloud Dataplex) | Commercial Enterprise (e.g., Collibra, Alation, Informatica EDC, Atlan, data.world) | Specialized (e.g., Schema Registries, Event Catalogs) |
|---|---|---|---|---|
| Primary Focus | Broad metadata management, governance, discovery. Often highly extensible. | Integrated with the respective cloud ecosystem, serverless options, often strong on technical metadata from cloud services. | Comprehensive data intelligence, rich governance workflows, strong business user focus, advanced AI/ML capabilities, extensive collaboration features. | Managing schemas for streaming data, documenting event-driven architectures. |
| Key Strengths | Flexibility, community support, no vendor lock-in, cost (often free to use, but support and infrastructure costs exist). Strong on lineage (e.g., Atlas). | Deep integration with native cloud services, pay-as-you-go pricing, managed service benefits (reduced operational overhead). Good for automated classification (e.g., AWS Glue, Purview). | End-to-end governance, user-friendly UIs, strong focus on data stewardship and business context, active metadata, automated insights, robust security and compliance features, and emerging GenAI features. | Ensuring data quality and compatibility in streaming, clear documentation for complex event flows. |
| Considerations | Often requires more in-house expertise for setup, customization, and maintenance. UI/UX can be less polished than commercial tools. | Can be tightly coupled to the cloud vendor's ecosystem. Feature depth for non-native sources might vary. | Higher cost, potential for vendor lock-in, complexity can be high for smaller organizations. | Scope is narrower than a full data catalog. |
| Typical Deployment | Self-hosted, Kubernetes. | Cloud-native SaaS. | Cloud (SaaS), hybrid, or on-premises. | Often part of a streaming platform or offered as a standalone service/tool. |
| AI/ML Capabilities | Varies; DataHub supports some AI/ML features for recommendations. Atlas focuses on core metadata. | Automated data classification (PII), some recommendation capabilities. AWS Glue has ML for deduplication. | Advanced: AI-driven curation, automated tagging, semantic search, PII detection, anomaly detection in metadata, query recommendations, GenAI for descriptions. | Generally less focused on broad AI/ML for metadata, more on schema validation rules. |
| Integrations | Good for common data sources, evolving connector ecosystem. Kafka integration is key for some. | Excellent for native cloud services, growing list of connectors for external sources. | Extensive lists of pre-built connectors for diverse data sources, BI tools, ETL tools, cloud platforms, and applications. Open APIs for custom integrations. | Primarily with streaming platforms (Kafka), message brokers, and related development tools. |

When evaluating specific solutions, consider factors like the diversity of your data sources, the technical skills of your team, your governance requirements, the need for business user self-service, and your budget. Which catalog service is "best" depends heavily on your context.
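One way to make that evaluation explicit is a weighted scoring matrix. The sketch below is only an illustration: the criteria mirror the factors listed above, but the weights, the 1-5 scores, and the `option_a` / `option_b` candidates are invented placeholders, not ratings of any real product.

```python
# Hypothetical weights per criterion; they should sum to 1.0.
WEIGHTS = {
    "source_coverage": 0.30,
    "governance": 0.25,
    "self_service": 0.20,
    "team_fit": 0.15,
    "cost": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (1 = poor, 5 = excellent) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


def rank_options(options, weights=WEIGHTS):
    """Return option names ordered from highest to lowest weighted score."""
    return sorted(
        options,
        key=lambda name: weighted_score(options[name], weights),
        reverse=True,
    )


options = {
    "option_a": {"source_coverage": 4, "governance": 3, "self_service": 2,
                 "team_fit": 5, "cost": 5},
    "option_b": {"source_coverage": 5, "governance": 5, "self_service": 4,
                 "team_fit": 2, "cost": 2},
}

for name in rank_options(options):
    print(name, round(weighted_score(options[name]), 2))
```

The value of the exercise lies less in the final number than in forcing stakeholders to agree on the weights before looking at vendors.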

Best Practices for Success

Implementing a catalog service is not just a technology project; it's a strategic initiative that requires careful planning and execution.

- Define Clear Goals and Scope: Understand what problems you are trying to solve. Is it improving data discovery for analysts? Enabling better governance and compliance? Facilitating self-service for business users? Start with a focused scope and expand from there.

- Involve All Stakeholders: Data catalogs affect many roles: data engineers, analysts, scientists, stewards, and business users. Involve them early in the selection and implementation process to secure buy-in and make sure the catalog meets diverse needs.

- Prioritize Metadata Quality & Enrichment: A catalog is only as good as its metadata. Automate metadata ingestion where possible, but also invest in processes for manual curation and enrichment with business context, descriptions, and tags. Active metadata capabilities can greatly assist here.

- Establish Strong Governance: Define roles, responsibilities, and processes for managing the catalog, ensuring data quality, and enforcing policies. This includes who can add or edit metadata, certify assets, and manage access.

- Focus on User Adoption: A user-friendly interface, comprehensive training, and clear communication of benefits are crucial for adoption. Active onboarding and a knowledge-sharing culture around the catalog can significantly boost its usage.

- Integrate with Existing Workflows: The catalog should not be an isolated tool. Integrate it with your existing data tools (BI platforms, data science workbenches, ETL pipelines) to make it a natural part of users' daily workflows.

- Iterate and Evolve: Start small, gather feedback, and continuously improve the catalog. Regularly review usage metrics, identify pain points, and adapt the catalog to changing business needs.

- Automate Where Possible: Use automation for metadata collection, data quality monitoring, and even AI-driven suggestions to reduce manual effort and keep the catalog up to date.
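The point about metadata quality can be tracked with a simple completeness metric. The following is a rough sketch, assuming each catalog entry is a dictionary and that `description`, `owner`, and `tags` are the fields your organization requires. Both the field list and the function names are assumptions for illustration, not a feature of any specific catalog.

```python
# Assumed set of fields every catalog entry should have filled in.
REQUIRED_FIELDS = ("description", "owner", "tags")


def completeness(asset):
    """Fraction of required fields that are present and non-empty."""
    filled = sum(1 for field in REQUIRED_FIELDS if asset.get(field))
    return filled / len(REQUIRED_FIELDS)


def needs_curation(assets, threshold=1.0):
    """Names of assets whose completeness falls below `threshold`."""
    return [asset["name"] for asset in assets if completeness(asset) < threshold]


assets = [
    {"name": "marts.revenue", "description": "Monthly recognized revenue",
     "owner": "finance-data", "tags": ["certified"]},
    {"name": "staging.orders", "description": "", "owner": "data-eng", "tags": []},
]

print(needs_curation(assets))
```

A list like this gives data stewards a concrete work queue, and the average completeness across the catalog is an easy metric to review over time.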

Common Issues and How to Mitigate Them

Despite the benefits, organizations often face challenges when implementing and managing catalog services:

- Metadata Staleness: Outdated metadata erodes trust.

  - Mitigation: Implement automated metadata harvesting, establish refresh policies, use real-time synchronization where feasible, and involve data stewards in validating and updating metadata. Active metadata management is key.

- Integration Complexity: Connecting to a diverse and evolving data stack can be challenging.

  - Mitigation: Choose a catalog with a rich set of pre-built connectors and robust APIs. Plan integrations carefully and prioritize them by value.

- Low User Adoption: If the catalog is difficult to use or doesn't provide clear value, users won't engage.

  - Mitigation: Focus on UX, provide thorough training, clearly articulate benefits, and embed the catalog into existing workflows. Start with use cases that deliver quick wins.

- Poor Data Quality in the Catalog: If the underlying data (and its metadata) is of poor quality, the catalog will reflect this.

  - Mitigation: Integrate data quality monitoring and remediation processes with the catalog. Allow users to report issues and track their resolution.

- Security and Compliance Concerns: Catalogs store sensitive metadata and must comply with data privacy regulations.

  - Mitigation: Implement robust access controls (RBAC/ABAC) and data masking for sensitive metadata, and ensure the catalog supports audit logging and compliance reporting.

- Measuring ROI: Justifying the investment can be difficult.

  - Mitigation: Define clear KPIs upfront, such as time saved in data discovery, improved data quality metrics, reduced compliance risks, and faster project delivery.

- Organizational Resistance: Change can be hard. Teams might be used to their old ways of finding and managing data.

  - Mitigation: Strong leadership vision, clear communication of benefits, and involving users in the process can help overcome resistance. Address data silos and foster a collaborative, data-driven culture.
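A refresh policy for the metadata staleness issue above can start as a simple age check. The sketch below assumes you can export, for each asset, the timestamp of its last metadata refresh. The asset names, the one-day policy, and the `stale_assets` helper are all illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def stale_assets(last_refreshed, max_age, now):
    """Return names of assets whose metadata is older than `max_age`, sorted by name."""
    return sorted(
        name
        for name, refreshed_at in last_refreshed.items()
        if now - refreshed_at > max_age
    )


# A fixed "now" keeps the example reproducible; in practice use datetime.now(timezone.utc).
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
last_refreshed = {
    "marts.revenue": now - timedelta(hours=6),
    "staging.orders": now - timedelta(days=3),
    "raw.clickstream": now - timedelta(days=10),
}

# Flag anything not refreshed within the last day.
print(stale_assets(last_refreshed, timedelta(days=1), now))
```

The flagged names could then feed an alert or a re-crawl job, depending on what your catalog exposes.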

Emerging Trends for 2025 and Beyond

The catalog service space continues to evolve rapidly. Key trends to watch in 2025 include:

- Active Metadata Management: Catalogs are becoming more dynamic, using AI/ML to continuously analyze metadata, infer relationships, detect anomalies, and recommend actions. This "active" approach contrasts with older, more static metadata repositories.

- Generative AI Integration: GenAI is being embedded into catalogs to automate metadata generation (e.g., business descriptions for assets), enable natural language queries for data discovery, and even assist in creating data transformation logic.

- Data Mesh Enablement: As organizations adopt data mesh architectures (decentralized data ownership and domain-oriented data products), catalogs play a crucial role in enabling discovery, understanding, and governance of these distributed data products. The debate between federated and unified catalog approaches continues in this context.

- Deeper AI/ML Integration for Data Preparation: Catalogs are becoming essential for preparing data for AI agents and ML models, ensuring data is well organized, validated, and easily discoverable by these intelligent systems.

- Increased Focus on Cloud Cost Optimization: With more data moving to the cloud, catalogs can help identify redundant or underutilized data assets, contributing to cloud cost management efforts.

- Convergence with Data Observability: The lines between data catalogs, data quality tools, and data observability platforms are blurring, leading to more integrated solutions that provide a holistic view of data health and reliability.

Conclusion: Making the Right Choice

Choosing the "best" catalog service in 2025 depends heavily on your organization's specific context, maturity, and strategic goals. There's no one-size-fits-all answer. However, by understanding the core concepts, evaluating the diverse range of solutions based on your needs, adhering to best practices for implementation and management, and staying aware of emerging trends, you can select and leverage a catalog service that transforms your data from a complex challenge into a powerful asset. The journey requires a blend of technology, process, and people, all working together to unlock the true value of your data.

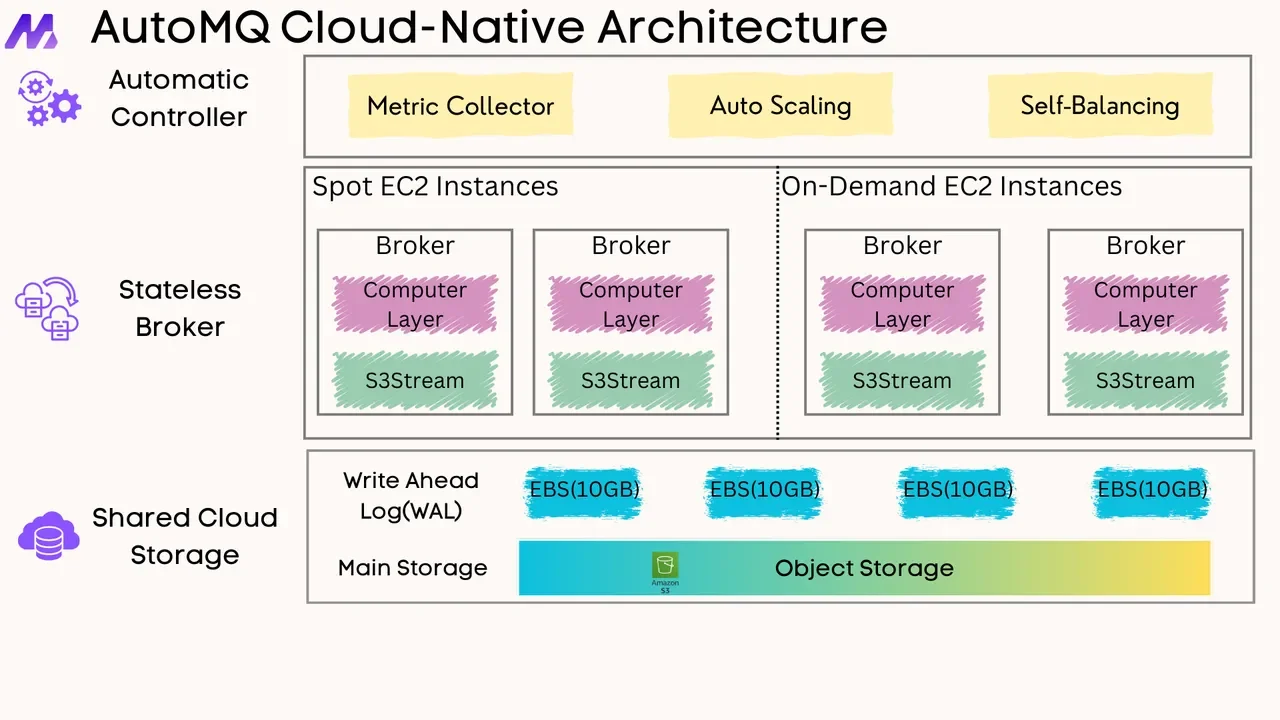

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

-

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

-

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

-

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

-

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

-

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

-

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

-

JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging