Overview

Choosing the right messaging or streaming platform is a critical architectural decision. Two prominent players in this space are Apache Kafka and Amazon Simple Queue Service (SQS). While both facilitate asynchronous communication between application components, they are designed with fundamentally different philosophies and excel in different use cases. This blog post will provide a comprehensive comparison to help you understand their core concepts, architectures, features, and when to choose one over the other.

Understanding Apache Kafka

Apache Kafka is an open-source, distributed event streaming platform. Think of it as a highly scalable, fault-tolerant, and durable distributed commit log. It's designed to handle high-volume, real-time data feeds and is often the backbone for event-driven architectures and streaming analytics.

Core Kafka Concepts

- Events/Messages: The fundamental unit of data in Kafka, representing a fact or an occurrence. Each event has a key, a value, a timestamp, and optional metadata headers.

- Brokers: Kafka runs as a cluster of one or more servers called brokers. Brokers store data, handle replication, and serve client requests.

- Topics: Streams of events are organized into categories called topics. Topics are like named channels to which producers publish events and to which consumers subscribe.

- Partitions: Topics are divided into one or more partitions. Each partition is an ordered, immutable sequence of events, and new events are appended to its end. Partitions allow a topic to be spread horizontally across multiple brokers and enable parallel consumption.

- Offsets: Each event within a partition is assigned a sequential ID number called an offset, which is unique within that partition. Consumers use offsets to track their position in the event stream.

- Producers: Client applications that write (publish) events to Kafka topics. Producers can choose which partition to send an event to. By default, events with the same key are hashed to the same partition, so related events stay together and in order.

- Consumers: Client applications that read (subscribe to) events from Kafka topics.

- Consumer Groups: Consumers can be organized into consumer groups. Within a group, each partition is assigned to exactly one consumer at a time, which balances load and enables parallel processing. Different consumer groups consume the same topic independently, and each group tracks its own offsets.

- ZooKeeper/KRaft: Historically, Kafka relied on Apache ZooKeeper for metadata management, cluster coordination, and leader election. Newer versions replace ZooKeeper with a self-managed metadata quorum based on Kafka Raft (KRaft), which simplifies the architecture and reduces operational overhead. KRaft has been production-ready since Kafka 3.3, and Kafka 4.0 removed ZooKeeper mode entirely.
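The following minimal in-memory sketch in Python makes partitions, keys, and offsets concrete. It is not a Kafka client. `Topic` and its methods are hypothetical, and CRC32 stands in for the murmur2 hash that Kafka's default partitioner applies to key bytes. The sketch illustrates two properties: events with the same key land in the same partition, and each partition assigns its own sequential offsets.

```python
from __future__ import annotations

import zlib


class Topic:
    """Toy in-memory topic: a fixed number of append-only partitions (not a Kafka client)."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        # Each partition is an ordered list of (key, value) events.
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Assumption: CRC32 stands in for Kafka's murmur2-based default partitioner.
        # What matters is that equal keys always map to the same partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple[int, int]:
        """Append an event and return (partition, offset), like a producer acknowledgment."""
        partition = self.partition_for(key)
        self.partitions[partition].append((key, value))
        offset = len(self.partitions[partition]) - 1  # offsets are sequential per partition
        return partition, offset


orders = Topic("orders", num_partitions=3)
for i, key in enumerate(["user-1", "user-2", "user-1", "user-3", "user-1"]):
    partition, offset = orders.produce(key, f"event-{i}")
    print(f"{key} -> partition {partition}, offset {offset}")
```

Every `user-1` event reports the same partition with increasing offsets. Offsets in different partitions are independent, so two events can share an offset number if they live in different partitions.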

Kafka's Architecture and How It Works

Kafka's architecture is centered around the concept of a distributed, partitioned, and replicated commit log. When a producer publishes an event to a topic, it's appended to a partition. Events are stored durably on disk for a configurable retention period, allowing them to be replayed. This is a key differentiator from traditional message queues.
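Retention and replay can be sketched the same way. The hypothetical `PartitionLog` below models one partition. Each consumer group commits its own position, and `seek` rewinds a group. This sketch makes two simplifying assumptions:

- Retention drops individual records, whereas real Kafka deletes whole log segments.
- A group without a committed offset starts at the beginning of the log, roughly like a consumer configured with `auto.offset.reset=earliest`.

```python
from __future__ import annotations


class PartitionLog:
    """Toy single-partition log with time-based retention and per-group offsets."""

    def __init__(self, retention_ms: int) -> None:
        self.retention_ms = retention_ms
        self.records: list[tuple[int, int, str]] = []  # (offset, timestamp_ms, value)
        self.next_offset = 0
        self.committed: dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, timestamp_ms: int, value: str) -> int:
        offset = self.next_offset
        self.records.append((offset, timestamp_ms, value))
        self.next_offset += 1
        return offset

    def enforce_retention(self, now_ms: int) -> None:
        # Simplification: drop individual expired records (Kafka deletes whole segments).
        # Offsets of surviving records never change.
        self.records = [r for r in self.records if now_ms - r[1] < self.retention_ms]

    def log_start_offset(self) -> int:
        return self.records[0][0] if self.records else self.next_offset

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        """Read from the group's committed position, then commit the new position."""
        # A group with no commit (or one pointing at deleted data) starts at the log start.
        start = max(self.committed.get(group, 0), self.log_start_offset())
        batch = [r for r in self.records if r[0] >= start][:max_records]
        if batch:
            self.committed[group] = batch[-1][0] + 1
        return [value for _, _, value in batch]

    def seek(self, group: str, offset: int) -> None:
        """Move a group's position; rewinding is what makes replay possible."""
        self.committed[group] = offset
```

Reading does not remove anything, so a second group (or the same group after `seek`) sees the same events again. Only retention decides when data disappears.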

Kafka relies heavily on the operating system's file system for storing and caching messages. It leverages sequential disk I/O for high performance: appends and sequential reads are constant-time, O(1), operations. Replication across brokers ensures fault tolerance. If the broker leading a partition fails, another broker holding an in-sync replica of that partition can take over as leader.
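The failover step can be modeled as choosing a new leader from the in-sync replica set (ISR). This simplified sketch assumes clean leader election, which is Kafka's default (`unclean.leader.election.enable=false`). Under clean election, the controller picks the first replica in assignment order that is both alive and in sync. `PartitionState` is a hypothetical structure, not Kafka's metadata format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PartitionState:
    """Simplified replication metadata for one partition (not Kafka's data model)."""

    replicas: list[int]  # assigned broker IDs, preferred leader first
    isr: list[int]  # in-sync replicas
    leader: int | None = None
    live_brokers: set[int] = field(default_factory=set)

    def on_broker_failure(self, broker_id: int) -> None:
        self.live_brokers.discard(broker_id)
        self.isr = [b for b in self.isr if b != broker_id]
        if self.leader == broker_id:
            self.leader = self.elect_leader()  # only leader loss triggers an election

    def elect_leader(self) -> int | None:
        # Clean election: first replica in assignment order that is alive and in sync.
        for broker in self.replicas:
            if broker in self.live_brokers and broker in self.isr:
                return broker
        return None  # no eligible replica: the partition goes offline


p = PartitionState(replicas=[1, 2, 3], isr=[1, 2, 3], leader=1, live_brokers={1, 2, 3})
p.on_broker_failure(1)
print(p.leader, p.isr)
```

Restricting the choice to the ISR is what prevents a lagging replica from becoming leader and silently losing acknowledged writes.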

Kafka supports stream processing through libraries like Kafka Streams and ksqlDB, allowing for real-time transformation, aggregation, and analysis of data as it flows through Kafka topics. Kafka Connect provides a framework for reliably streaming data between Kafka and other systems like databases, search indexes, and file systems.
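As an illustration of the aggregations that Kafka Streams and ksqlDB perform, here is a plain-Python tumbling-window count over fixed, non-overlapping windows. It is only a conceptual sketch, not either library's API. It ignores late or out-of-order events, fault-tolerant state stores, and repartitioning, all of which those frameworks handle. The function name and the page-view example are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def tumbling_window_counts(
    events: list[tuple[int, str]], window_ms: int
) -> dict[int, Counter]:
    """Count events per key in fixed, non-overlapping time windows.

    events: (timestamp_ms, key) pairs, e.g. page views keyed by page.
    Returns {window_start_ms: Counter({key: count})}.
    """
    windows: dict[int, Counter] = defaultdict(Counter)
    for ts, key in events:
        window_start = ts - (ts % window_ms)  # align the timestamp to its window boundary
        windows[window_start][key] += 1
    return dict(windows)


views = [(0, "home"), (500, "cart"), (999, "home"), (1000, "home"), (1500, "cart")]
print(tumbling_window_counts(views, window_ms=1000))
```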

![Apache Kafka Architecture [49]](/blog/kafka-vs-sqs-messaging-streaming-platforms-comparison/1.png)

Understanding Amazon SQS

Amazon Simple Queue Service (SQS) is a fully managed message queuing service offered by Amazon Web Services (AWS). It enables you to decouple and scale microservices, distributed systems, and serverless applications. Unlike Kafka's stream-centric model, SQS is primarily a traditional message queue.

Core SQS Concepts

- Queues: The fundamental resource in SQS. Producers send messages to queues, and consumers retrieve messages from them.

- Messages: The data sent between components. An SQS message can be up to 256 KB of text in any format (e.g., JSON, XML). For larger messages, a common pattern is to store the payload in Amazon S3 and send a reference to it in the SQS message.

- Standard Queues: Offer maximum throughput, best-effort ordering (messages might be delivered out of order), and at-least-once delivery (a message might occasionally be delivered more than once).

- FIFO (First-In-First-Out) Queues: Designed to guarantee that messages are processed exactly once, in the exact order in which they are sent. FIFO queues also support message group IDs, which let distinct ordered groups be processed in parallel. They also support content-based deduplication, which applies within a five-minute deduplication interval.

- Visibility Timeout: When a consumer receives a message, the message becomes "invisible" in the queue for a configurable period called the visibility timeout (30 seconds by default, up to 12 hours). This prevents other consumers from processing the same message. If the consumer fails to process and delete the message within this timeout, the message becomes visible again and another consumer can process it.

- Dead-Letter Queues (DLQs): Queues that other (source) queues can target for messages that can't be processed successfully. A redrive policy moves a message to the DLQ once it has been received more than a configured number of times (`maxReceiveCount`) without being deleted. This is useful for isolating problematic messages for later analysis and troubleshooting.

- Polling (Short and Long): Consumers retrieve messages from SQS by polling the queue.

  - Short polling returns a response immediately, even if the queue is empty. Because it queries only a subset of SQS servers, it can also return an empty response when messages are available.

  - Long polling waits up to a specified duration (at most 20 seconds) for a message to arrive before returning a response. This reduces empty receives and lowers costs.
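The FIFO guarantees above can be modeled in a few lines. This is an in-memory sketch, not the AWS API, and `FifoQueueSketch` is hypothetical. A returned `False` means the send was treated as a duplicate; real SQS still acknowledges such a send but does not deliver the message again. Visibility timeouts are omitted, so a group stays blocked until its in-flight message is deleted. The sketch does follow two documented rules: content-based deduplication hashes the message body with SHA-256, and deduplication applies within a five-minute interval.

```python
from __future__ import annotations

import hashlib
from collections import deque

DEDUP_INTERVAL_S = 300  # SQS FIFO deduplication interval: five minutes


class FifoQueueSketch:
    """In-memory model of SQS FIFO ordering and deduplication (not the AWS API)."""

    def __init__(self) -> None:
        self.groups: dict[str, deque[str]] = {}  # message group ID -> ordered bodies
        self.seen: dict[str, float] = {}  # deduplication ID -> time first accepted
        self.in_flight_groups: set[str] = set()

    def send(self, body: str, group_id: str, now: float, dedup_id: str | None = None) -> bool:
        # Content-based deduplication: SHA-256 of the body when no explicit ID is given.
        dedup_id = dedup_id or hashlib.sha256(body.encode("utf-8")).hexdigest()
        first_seen = self.seen.get(dedup_id)
        if first_seen is not None and now - first_seen < DEDUP_INTERVAL_S:
            return False  # duplicate inside the interval: not enqueued again
        self.seen[dedup_id] = now
        self.groups.setdefault(group_id, deque()).append(body)
        return True

    def receive(self) -> tuple[str, str] | None:
        # Return the head of the first group with nothing in flight: order holds
        # within a group while other groups keep making progress.
        for group_id, bodies in self.groups.items():
            if bodies and group_id not in self.in_flight_groups:
                self.in_flight_groups.add(group_id)
                return group_id, bodies[0]
        return None

    def delete(self, group_id: str) -> None:
        # Deleting the in-flight head unblocks the rest of that group.
        self.groups[group_id].popleft()
        self.in_flight_groups.discard(group_id)
```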


SQS Architecture and How It Works

SQS is a fully managed service, meaning AWS handles the underlying infrastructure, scaling, and maintenance. Messages sent to an SQS queue are stored durably across multiple Availability Zones (AZs) within an AWS region.

The message lifecycle in SQS typically involves a producer sending a message to a queue. A consumer then polls the queue, receives a message, processes it, and finally deletes the message from the queue to prevent reprocessing. The visibility timeout mechanism is crucial here for managing concurrent processing and retries.
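The receive, process, and delete cycle can be sketched as a small simulation that also covers the visibility timeout and dead-letter redrive. `StandardQueueSketch` is hypothetical and uses an explicit `now` clock in seconds, which keeps the behavior deterministic. `max_receive_count` plays the role of the `maxReceiveCount` setting in a queue's redrive policy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(eq=False)  # identity comparison, so list.remove() deletes this exact message
class Message:
    body: str
    receive_count: int = 0
    visible_at: float = 0.0  # queue time (seconds) when the message is visible again


class StandardQueueSketch:
    """In-memory model of the SQS receive/process/delete cycle (not the AWS API)."""

    def __init__(self, visibility_timeout: float = 30.0, max_receive_count: int = 3) -> None:
        self.visibility_timeout = visibility_timeout
        self.max_receive_count = max_receive_count  # stands in for a redrive policy
        self.messages: list[Message] = []
        self.dead_letter: list[Message] = []

    def send(self, body: str) -> None:
        self.messages.append(Message(body))

    def receive(self, now: float) -> Message | None:
        for msg in list(self.messages):  # iterate over a copy; redrive mutates the list
            if msg.visible_at > now:
                continue  # still in flight for another consumer
            if msg.receive_count >= self.max_receive_count:
                # Redrive: too many receives without a delete, move it to the DLQ.
                self.messages.remove(msg)
                self.dead_letter.append(msg)
                continue
            msg.receive_count += 1
            msg.visible_at = now + self.visibility_timeout  # hide it from other consumers
            return msg
        return None

    def delete(self, msg: Message) -> None:
        # Consumers must delete explicitly; otherwise the message reappears after the timeout.
        self.messages.remove(msg)
```

A consumer that crashes mid-task simply never calls `delete`. The message becomes visible again after the timeout, and after enough failed attempts it lands in the dead-letter queue instead of being retried forever.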

SQS is designed for decoupling application components. For example, a web server can send a task to an SQS queue, and a separate pool of worker processes can consume and process these tasks asynchronously, allowing the web server to remain responsive.
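The web-server-plus-workers pattern is sketched below. Python's standard-library `queue.Queue` and threads serve as a local stand-in for SQS, which keeps the example self-contained. With SQS, the handler and workers would be separate processes calling `SendMessage`, `ReceiveMessage`, and `DeleteMessage`, and messages would survive process crashes, which an in-memory queue does not. `handle_upload` and the thumbnail task are hypothetical.

```python
from __future__ import annotations

import queue
import threading


def run_demo(num_uploads: int = 5, num_workers: int = 3) -> tuple[list[str], list[str]]:
    tasks: queue.Queue = queue.Queue()  # local stand-in for an SQS queue
    results: list[str] = []
    lock = threading.Lock()

    def handle_upload(filename: str) -> str:
        # Hypothetical web handler: enqueue the slow work and respond immediately.
        tasks.put(filename)
        return "202 Accepted"

    def worker() -> None:
        while True:
            filename = tasks.get()
            if filename is None:  # sentinel: no more work for this worker
                break
            with lock:
                results.append(f"thumbnail for {filename}")  # the "slow" processing step

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    responses = [handle_upload(f"photo-{i}.jpg") for i in range(num_uploads)]
    for _ in workers:
        tasks.put(None)  # one sentinel per worker, queued after all real tasks
    for w in workers:
        w.join()
    return responses, results


responses, results = run_demo()
print(responses)
print(sorted(results))
```

The handler's response time does not depend on how long thumbnails take. Adding workers increases processing throughput without touching the web tier.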

![AWS SQS Architecture [50]](/blog/kafka-vs-sqs-messaging-streaming-platforms-comparison/2.png)

Kafka vs. SQS: Side-by-Side Comparison

| Feature | Apache Kafka | Amazon SQS |
|---|---|---|
| Primary Model | Distributed event streaming platform (log-based) | Managed message queue (traditional queue) |
| Management | Self-managed (requires setup, configuration, and maintenance of brokers and ZooKeeper/KRaft) or available as a managed service | Fully managed by AWS |
| Data Persistence | Long-term, configurable retention (e.g., days, weeks, or indefinitely). Events are replayable. | Short-term: 4 days by default, configurable up to 14 days. Messages are typically deleted after processing. |
| Message Ordering | Guaranteed within a partition. Global ordering requires a single partition. | Standard: best-effort. FIFO: guaranteed within a message group ID. |
| Delivery Guarantees | At-least-once (typical with acks=all and retries). Exactly-once semantics possible via idempotent producers and transactions. | Standard: at-least-once. FIFO: exactly-once processing (with deduplication). |
| Throughput | Very high: millions of messages per second, limited by hardware and configuration. | High and scales automatically. Standard queues have nearly unlimited throughput. FIFO queues have limits (e.g., 300 API calls/sec per action, or 3,000 messages/sec with batching; higher with high-throughput mode). |
| Latency | Typically very low (milliseconds). | Low, but generally higher than Kafka due to polling and network overhead. |
| Scalability | Horizontal, via brokers and partitions. Requires manual scaling or automation. | Automatic scaling managed by AWS. |
| Consumer Model | Pull model with consumer groups and offset management. Consumers track their own position. | Pull model with visibility timeout. SQS manages message visibility. |
| Message Replay | Yes: consumers can re-read messages from any offset within the retention period. | No native replay of already processed messages. DLQ redrive allows reprocessing of failed messages. Limited replay is possible via SNS FIFO topics (message archiving and replay). |
| Stream Processing | Yes, via Kafka Streams, ksqlDB, and other stream processing frameworks. | No built-in stream processing; designed for message queuing. |
| Message Size | Default limit of about 1 MB, configurable (larger messages are possible but affect performance). | Up to 256 KB. Larger payloads require storing the data in S3 and sending a pointer. |
| Message Prioritization | No built-in support. Can be implemented via multiple topics or custom partitioning. | No built-in support. Typically implemented with separate queues per priority level; FIFO queues only preserve send order. |
| Complexity | Higher complexity for setup, management, and operations if self-hosted. | Lower complexity; easier to set up and use due to its managed nature. |
| Ecosystem & Tooling | Rich ecosystem (Kafka Connect, Schema Registry, numerous client libraries, monitoring tools). | Integrated with the AWS ecosystem (Lambda, S3, CloudWatch, IAM) and AWS SDKs. |
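The S3 pointer approach for large SQS messages is often called the claim-check pattern. In the minimal sketch below, the producer checks the encoded body size against the 256 KB (262,144-byte) limit and offloads the payload when it would not fit. The sketch rests on these assumptions:

- A plain `dict` stands in for an S3 bucket.
- The JSON envelope with `inline` and `s3_key` fields is hypothetical, not an AWS format.

AWS also publishes the Amazon SQS Extended Client Library for Java, which implements this pattern against real S3.

```python
from __future__ import annotations

import json
import uuid

SQS_MAX_BYTES = 256 * 1024  # SQS message size limit stated above


def prepare_message(payload: str, blob_store: dict[str, str]) -> str:
    """Return an SQS-ready body, offloading the payload when it would exceed the limit."""
    body = json.dumps({"inline": payload})
    if len(body.encode("utf-8")) <= SQS_MAX_BYTES:  # measure the encoded body, not the raw payload
        return body
    key = f"payloads/{uuid.uuid4()}"  # hypothetical object key scheme
    blob_store[key] = payload  # with real S3 this would be an object upload
    return json.dumps({"s3_key": key})


def resolve_message(body: str, blob_store: dict[str, str]) -> str:
    """Consumer side: return the original payload, fetching it from the store if needed."""
    envelope = json.loads(body)
    if "inline" in envelope:
        return envelope["inline"]
    return blob_store[envelope["s3_key"]]
```

Measuring the final encoded body matters because JSON escaping can make the body noticeably larger than the raw payload.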

Operational and Ecosystem Differences

Management Overhead

- Kafka: If self-managed, Kafka involves significant operational overhead. This includes provisioning hardware, installing and configuring Kafka and ZooKeeper/KRaft, monitoring cluster health, performing upgrades, managing security, and handling disaster recovery. Managed Kafka services can alleviate this burden.

- SQS: Being fully managed by AWS, SQS has minimal operational overhead. AWS handles infrastructure, patching, scaling, and availability, allowing developers to focus on application logic.

Complexity

- Kafka: Generally considered more complex to set up, develop against, and maintain, especially for teams new to distributed streaming platforms. Client configuration for producers and consumers can be intricate.

- SQS: Simpler to get started with. The API and client SDKs are straightforward, and the managed nature abstracts away much of the underlying complexity.

Cost Structure

- Kafka: Costs for self-managed Kafka include server hardware, storage, network bandwidth, and operational staff. For managed Kafka services, pricing typically involves instance hours, storage, data transfer, and potentially feature tiers. Storage costs can become significant if not optimized, particularly with long retention periods, because every retained byte is stored once per replica.

- SQS: Follows a pay-as-you-go model, based primarily on the number of requests (sending, receiving, and deleting messages) and on data transfer out. Each 64 KB chunk of a payload is billed as one request. A free tier covers the first 1 million requests per month. Costs are predictable for simple use cases but grow with volume. Long polling and batching (up to 10 messages per request) are recommended to reduce the number of billed requests.
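Back-of-the-envelope arithmetic makes the two cost models easier to compare. The helpers below are rough sketches that rest on several assumptions:

- The Kafka estimate multiplies ingress rate, retention time, and replication factor. It ignores compression and index overhead and uses 1 GB = 1,024 MB.
- The SQS estimate counts one billed request per send, receive, and delete call. It assumes every batch fits in a single 64 KB billing chunk and that no receive comes back empty.
- Neither helper applies a price, since pricing varies by region and over time.

```python
import math


def kafka_storage_gb(ingress_mb_per_s: float, retention_days: float, replication_factor: int) -> float:
    """Disk needed to hold the retained log across all replicas (no compression or overhead)."""
    retention_s = retention_days * 24 * 3600
    return ingress_mb_per_s * retention_s * replication_factor / 1024


def sqs_requests(messages: int, batch_size: int = 1) -> int:
    """Billed requests to send, receive, and delete `messages` small messages.

    Assumes each batch's payload fits in one 64 KB billing chunk and that no
    receive call returns empty.
    """
    if not 1 <= batch_size <= 10:
        raise ValueError("SQS batch calls accept 1 to 10 messages")
    calls_per_action = math.ceil(messages / batch_size)
    return 3 * calls_per_action  # send + receive + delete, one call each per batch


print(kafka_storage_gb(10, 7, 3))
print(sqs_requests(1_000_000), sqs_requests(1_000_000, batch_size=10))
```

Consider two examples. Retaining 10 MB/s for 7 days with a replication factor of 3 needs about 17,700 GB of disk. Processing 1,000,000 small SQS messages takes 3,000,000 requests unbatched, but only 300,000 with batches of 10.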

Ecosystem and Integrations

- Kafka: Boasts a vast open-source ecosystem with numerous connectors (via Kafka Connect) for various data sources and sinks, client libraries in many languages, and a wide array of third-party tools for monitoring, management, and stream processing.

- SQS: Deeply integrated within the AWS ecosystem, working seamlessly with services like AWS Lambda (for serverless processing), S3, DynamoDB, SNS, and CloudWatch for monitoring.
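When SQS triggers AWS Lambda, the handler receives a batch of records. A partial batch response avoids reprocessing the whole batch when a single message fails. With `ReportBatchItemFailures` enabled on the event source mapping, the handler returns the `messageId` of each failed record under `batchItemFailures`. The sketch below assumes that setting is enabled, and `process` is a hypothetical placeholder for business logic.

```python
import json


def process(order: dict) -> None:
    """Hypothetical business logic; raises on invalid input."""
    if "order_id" not in order:
        raise ValueError("missing order_id")


def lambda_handler(event: dict, context: object) -> dict:
    """Handler for an SQS event source mapping with ReportBatchItemFailures enabled.

    Only the messages listed in batchItemFailures return to the queue for retry
    (and, after enough receives, to its DLQ); the rest are treated as processed.
    """
    failures = []
    for record in event["Records"]:
        try:
            process(json.loads(record["body"]))
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```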

When to Choose Kafka vs. SQS: Application Scenarios

Decoupling Microservices

- SQS: Excellent for simple decoupling of microservices, especially within an AWS environment. It provides reliable asynchronous communication without tight coupling.

- Kafka: Also used for decoupling microservices, particularly in event-driven architectures where services react to a stream of events. Suitable if microservices need to consume the same event history or perform stream processing.

Event Sourcing

- Kafka: Its append-only log structure, data immutability, long-term retention, and message replay capabilities make it a strong fit for event sourcing architectures.

- SQS: Not designed for event sourcing, as messages are transient and not typically replayed after successful processing.
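In event sourcing, current state is derived by replaying the event history rather than stored directly. The minimal sketch below uses hypothetical `deposited` and `withdrawn` events. In a Kafka-based system, the events would be read from a topic starting at offset 0. They would typically be keyed by account ID, so each account's events stay ordered within one partition.

```python
from __future__ import annotations

from dataclasses import dataclass

SIGN = {"deposited": 1, "withdrawn": -1}  # hypothetical event types


@dataclass(frozen=True)  # events are immutable facts
class Event:
    kind: str
    account: str
    amount: int  # cents, to avoid floating-point drift


def rebuild_balances(events: list[Event]) -> dict[str, int]:
    """Derive current state purely by folding over the ordered event history."""
    balances: dict[str, int] = {}
    for e in events:
        if e.kind not in SIGN:
            raise ValueError(f"unknown event type: {e.kind}")
        balances[e.account] = balances.get(e.account, 0) + SIGN[e.kind] * e.amount
    return balances
```

Replaying only a prefix of the history reconstructs the state as of that point. With a transient queue, this is impossible once messages have been deleted.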

Task Queuing / Background Job Processing

- SQS: A natural fit for distributing tasks to worker processes, managing retries (via visibility timeout and DLQs), and scaling workers. Commonly used for background job processing.

- Kafka: Can be used for task queuing, but might be overkill if simpler queue semantics are sufficient. Message prioritization is also harder to achieve.
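Neither SQS nor Kafka prioritizes messages natively, so a common workaround is one queue (or topic) per priority level, polled in priority order. Below is a minimal strict-priority sketch in which in-memory `deque`s stand in for the queues. The function name is hypothetical.

```python
from __future__ import annotations

from collections import deque


def next_task(queues_by_priority: list[deque[str]]) -> str | None:
    """Strict priority: drain higher-priority queues before looking at lower ones.

    queues_by_priority[0] is the highest priority. With SQS, each deque would be a
    separate queue polled in this order; with Kafka, a separate topic.
    """
    for q in queues_by_priority:
        if q:
            return q.popleft()
    return None


high, low = deque(["h1"]), deque(["l1", "l2"])
print([next_task([high, low]) for _ in range(4)])
```

Strict priority can starve low-priority work under sustained load. A common alternative is weighted polling, for example checking the low-priority queue on every Nth poll.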

Real-time Analytics and Stream Processing

- Kafka: The clear winner here. Designed for high-throughput, low-latency event streaming and has built-in support for stream processing (Kafka Streams, ksqlDB), making it ideal for real-time analytics, fraud detection, IoT data ingestion, and complex event processing pipelines.

- SQS: Not suitable for stream processing. It acts as a message buffer, and any analytical processing needs to be done by consumers after retrieving messages.

Log Aggregation

- Kafka: Widely used for aggregating logs from distributed systems due to its high throughput and ability to act as a central, durable buffer before logs are processed and sent to storage or analysis systems.

- SQS: Can be used for queuing log messages, but Kafka's features are generally better aligned for large-scale log aggregation pipelines.

Simple Asynchronous Messaging / Buffering

- SQS: Ideal for simpler asynchronous messaging needs where you need a reliable buffer between application components, especially if you are already using AWS services.

- Kafka: Can serve this purpose but might introduce unnecessary complexity if advanced streaming features aren't required.

Conclusion

Both Apache Kafka and Amazon SQS are powerful platforms for building distributed applications, but they cater to different needs.

Choose Apache Kafka if:

- You need a high-throughput, low-latency event streaming platform.

- Real-time stream processing and analytics are primary requirements.

- Long-term message retention and replayability (event sourcing) are crucial.

- You require fine-grained control over the infrastructure (if self-managing) or prefer a platform with a rich open-source ecosystem.

- Handling millions of events per second is a common scenario.

Choose Amazon SQS if:

- You need a simple, fully managed message queue for decoupling application components.

- Ease of use, rapid development, and minimal operational overhead are priorities.

- Your application is primarily within the AWS ecosystem.

- Strict message ordering and exactly-once processing are required (using SQS FIFO).

- Buffering tasks for asynchronous processing by worker services is the main goal.

In some complex architectures, it's even possible to use both Kafka and SQS together, leveraging Kafka for its streaming capabilities and SQS for specific queuing tasks within the broader data pipeline. Ultimately, the best choice depends on a thorough understanding of your application's specific requirements, scalability needs, operational capacity, and cost considerations.

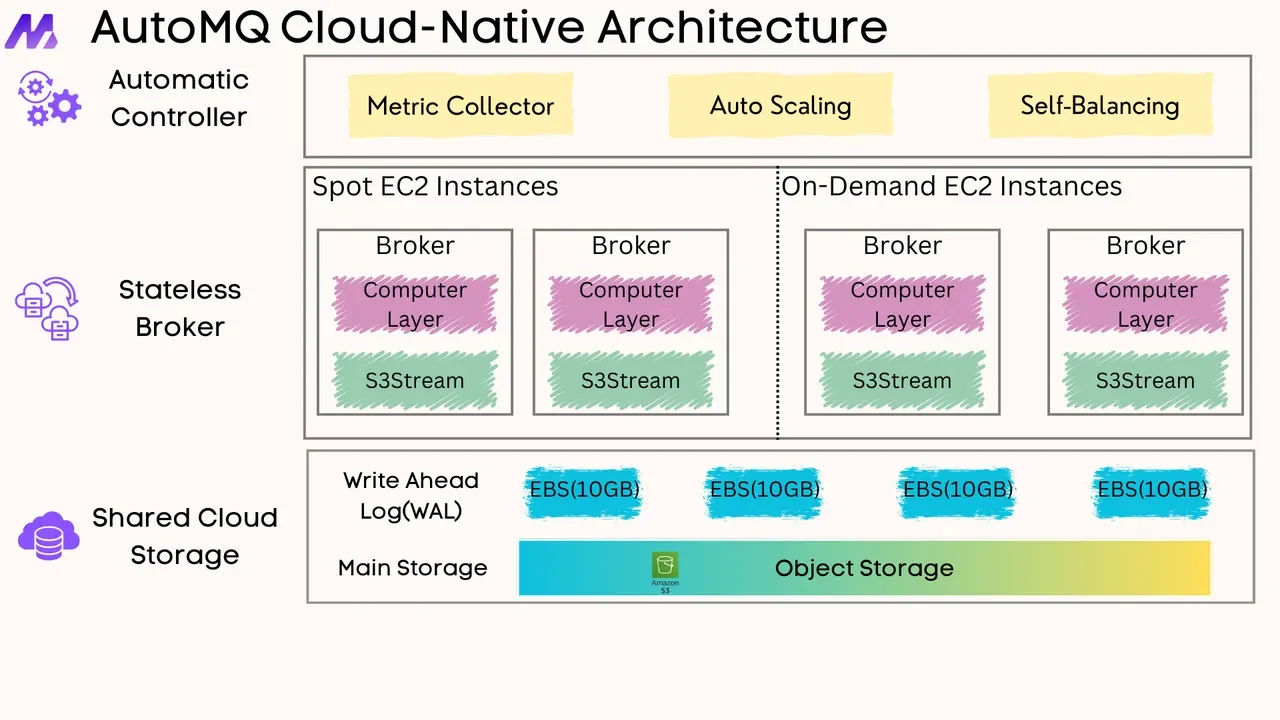

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka that decouples durability to S3 and EBS. It is 10x more cost-effective, incurs no cross-AZ traffic costs, autoscales in seconds, and delivers single-digit millisecond latency. AutoMQ is now source-available on GitHub, and large companies worldwide use it. Check the following case studies to learn more:

- Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

- Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

- How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

- XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

- Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data (30 GB/s)

- AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

- JD.com x AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging