Introduction

Apache Kafka has become the backbone of modern data architectures, powering everything from real-time analytics to event-driven microservices. As its adoption has grown, so has the ecosystem of managed Kafka services, which promise to handle the operational overhead of running this powerful but complex platform. While these services simplify deployment and management, they introduce a new challenge: understanding and controlling costs.

Choosing a managed service provider is only half the battle. The other half is selecting the right pricing model for your workload. The decision typically boils down to two primary approaches: paying for pre-allocated resources (Provisioned Capacity) or paying only for what you consume (Pay-As-You-Go). Each model has significant implications for your budget, operational flexibility, and performance guarantees.

This blog post will serve as your guide to decoding these managed Kafka pricing models. We will explore how they work, compare their respective strengths and weaknesses, and provide a framework to help you choose the most cost-effective approach for your specific needs.

The Anatomy of Managed Kafka Costs

Before we dissect the pricing models, it's crucial to understand the fundamental components that contribute to the total cost of a managed Kafka cluster. Regardless of the model you choose, your bill will be a function of these key factors:

Compute Resources : This refers to the underlying virtual machines (brokers) that run the Kafka software. The cost is influenced by the number and size (vCPU and RAM) of these instances. In some models, this is bundled into a more abstract unit, but compute power is always a primary cost driver [2].

Storage : Kafka is not just a messaging system; it's a storage system. It durably stores your data for a configured period, known as the retention period. You pay for the volume of data stored (in GB-months) and, in some cases, for the performance characteristics of the disk [4].

Data Throughput (Ingress & Egress) : This is the volume of data flowing into (ingress) and out of (egress) your cluster. Many pricing models have a per-gigabyte charge for the data you produce to and consume from Kafka.

Partitions : Topics in Kafka are split into partitions, which are the basic unit of parallelism. While partitions are a logical construct, having a very high number of them can increase memory overhead on the brokers and may incur a direct per-partition, per-hour cost in some pricing models [5].

Data Transfer : When data moves between different availability zones (AZs) for high availability and replication, cloud providers typically charge a network data transfer fee. Since a standard Kafka deployment replicates data three times across different zones, you can expect to transfer double your ingress data volume across AZs [2].

Understanding these levers is the first step toward effective cost management. Now, let's see how they are packaged within the two main pricing models.

The Provisioned Capacity Model: Predictability and Power

The Provisioned Capacity model is the traditional approach to cloud infrastructure. It’s analogous to leasing a car for a fixed period. You decide upfront what resources you need, commit to them, and pay a fixed rate, regardless of how much you use them.

How It Works

When setting up your cluster, you make explicit choices about its size and power. This includes:

Broker Instance Type and Count : You select the specific virtual machine size (e.g., number of vCPUs and amount of RAM) and the number of brokers for your cluster.

Storage Volume : You provision a specific amount of storage (e.g., 1000 GB) for each broker.

You are then billed a fixed hourly or monthly rate for this provisioned infrastructure. If your traffic grows beyond the capacity of your cluster, you must manually intervene to scale up by adding more brokers or choosing larger instance types [4].

Advantages:

Predictable Costs : Your bill is consistent and easy to forecast, making budgeting straightforward.

Performance Guarantee : Because resources are dedicated to your cluster, you have a more stable performance profile, free from the "noisy neighbor" problem.

Cost-Effective at Scale : For workloads that are consistently high and stable, the per-unit cost of provisioned resources can be lower than pay-as-you-go models.

Disadvantages:

Risk of Over-provisioning : The biggest challenge is accurately predicting your capacity needs. If you overestimate, you pay for idle resources, wasting money.

Risk of Under-provisioning : If you underestimate capacity, your application may suffer from performance degradation or outages when traffic spikes.

Requires Capacity Planning : This model puts the onus on you to perform detailed capacity planning and forecasting, which requires expertise and can be time-consuming [4].

Inflexibility : It's not well-suited for applications with "spiky" or unpredictable traffic patterns, as you can't scale down automatically to save costs during quiet periods.

Best suited for:

Mature applications with stable, predictable, high-volume workloads where performance is critical and cost forecasting is a priority.

The Pay-As-You-Go Model: Flexibility and Efficiency

The Pay-As-You-Go model, often associated with "serverless" offerings, represents a fundamental shift in cloud consumption. Continuing the car analogy, this is like using a ride-sharing service—you pay only for the trips you take. You don't have to worry about the car's maintenance, just your usage.

How It Works

With this model, you don't provision individual brokers or storage volumes. Instead, the provider manages a large, multi-tenant pool of resources, and you are billed based on your actual consumption across several metrics [1]. A typical pricing structure might include:

An hourly fee for the cluster itself.

A charge per gigabyte of data written (ingress).

A charge per gigabyte of data read (egress).

A charge per gigabyte of data stored per month.

An hourly fee for each partition [1].

The platform automatically scales the underlying resources to meet your application's real-time throughput demands, ensuring you have the capacity you need without manual intervention.

Advantages:

Extreme Flexibility : The cluster seamlessly scales up or down to handle traffic spikes, making it ideal for variable workloads.

No Capacity Planning : The operational burden of forecasting, planning, and scaling is offloaded to the provider.

Cost Efficiency for Variable Loads : You don't pay for idle capacity. This can lead to significant savings for applications with inconsistent traffic, as well as for development and testing environments.

Faster Time-to-Market : Eliminating the need to size a cluster allows teams to get started and deploy applications more quickly.

Disadvantages:

Unpredictable Costs : While you only pay for what you use, it can be difficult to predict what that usage will be. A sudden, unexpected traffic surge can lead to a surprisingly high bill.

Potentially Higher Unit Costs : The convenience and flexibility come at a premium. At very high and sustained levels of usage, the per-unit cost may be higher than what you could achieve with an optimized provisioned cluster.

Performance Variability : In some multi-tenant architectures, you may experience more performance variability compared to having dedicated, provisioned resources.

Best suited for:

New applications where the workload is unknown, applications with spiky or intermittent traffic patterns, and development/staging environments.

Head-to-Head Comparison

| Feature | Provisioned Capacity | Pay-As-You-Go |

|---|---|---|

| Cost Structure | Fixed hourly/monthly rate for pre-allocated resources (brokers, storage). | Variable, based on actual consumption (GB in/out, GB stored, partition-hours). |

| Predictability | High. Costs are stable and easy to forecast. | Low to Medium. Costs fluctuate directly with usage. |

| Flexibility | Low. Scaling is a manual process and doesn't happen automatically. | High. Automatically scales to meet real-time demand. |

| Management | Requires significant upfront capacity planning and ongoing monitoring. | Minimal management overhead; no capacity planning required. |

| Ideal Workload | Stable, predictable, and high-volume traffic. | Variable, spiky, or unpredictable traffic. New applications. |

| Primary Risk | Wasted spend from over-provisioning or performance issues from under-provisioning. | Uncontrolled cost escalation from unexpected usage spikes. |

How to Choose the Right Model for You

There is no single "best" model; the right choice depends entirely on your context. Use the following questions as a framework for your decision:

What does your workload pattern look like? This is the most critical question. Chart your application's throughput over a typical day, week, and month. Is it a relatively flat line, or does it look like a series of sharp peaks and deep valleys? A flat line points toward provisioned capacity, while peaks and valleys strongly suggest pay-as-you-go.

How mature is your application? For a brand-new application, predicting usage is nearly impossible. A pay-as-you-go model provides the perfect low-risk entry point. For a mature, stable application with years of historical data, you can confidently provision capacity and optimize for cost [4].

What is your team's operational capacity? Does your team have the expertise and time to dedicate to performance monitoring and capacity planning? If not, the hands-off nature of a pay-as-you-go model can free up your engineers to focus on building features rather than managing infrastructure [4].

Can you adopt a hybrid strategy? You don't have to choose just one model. A common and highly effective strategy is to use a hybrid approach:

Production : Use a provisioned cluster for your stable, mission-critical production workload.

Development/Staging : Use pay-as-you-go clusters for development, testing, and staging environments, which typically have sporadic usage.

Universal Cost Optimization Best Practices

Regardless of the model you select, you can further control your costs by adopting good Kafka hygiene:

Set Sensible Retention Policies : Don't store data for longer than you need to. Audit your topic retention settings and reduce them where possible [6].

Use Compression : Enabling message compression (like LZ4 or ZSTD) at the producer level can dramatically reduce throughput and storage costs with a minimal CPU trade-off [5].

Right-size Partitions : Avoid over-partitioning topics. While parallelism is good, an excessive number of partitions can create memory overhead and, in some models, directly increase costs [6].

Monitor Actively : Use monitoring tools to keep an eye on your key cost drivers. Set up alerts for unexpected spikes in throughput or storage growth so you can react quickly and avoid bill shock.

Conclusion

The choice between provisioned capacity and pay-as-you-go pricing for managed Kafka is a strategic decision that balances cost predictability against operational flexibility. The provisioned model offers a fixed, forecastable expense for stable workloads but carries the risk of wasted resources. The pay-as-you-go model provides unparalleled elasticity for dynamic workloads but demands careful monitoring to prevent uncontrolled spending.

By thoroughly analyzing your application's workload patterns, maturity, and your team's operational capabilities, you can make an informed choice that aligns with both your technical requirements and your financial goals. As managed services continue to evolve, understanding these fundamental pricing structures will remain a critical skill for any engineer building on the modern data stack.

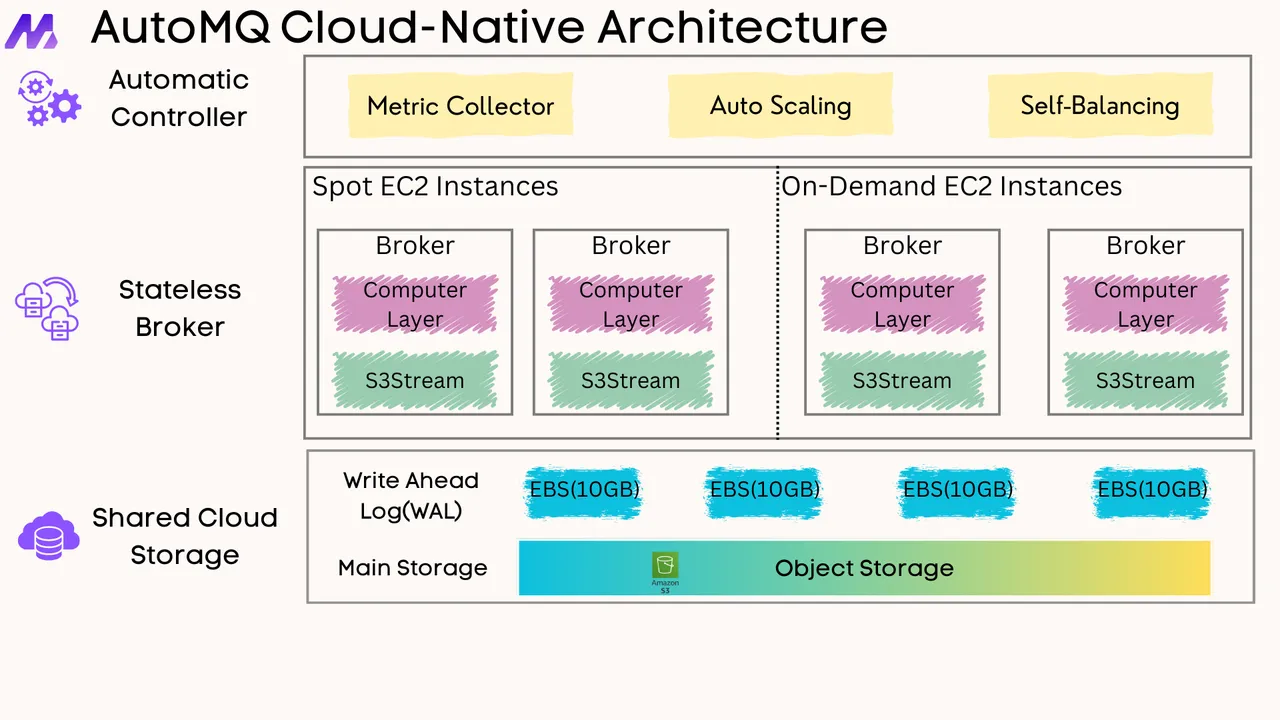

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on GitHub. Big companies worldwide are using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)