The Critical Need for Real-Time Financial Data

In today's financial markets, milliseconds matter. With over $6.6 trillion changing hands daily in global foreign exchange markets alone, traders depend on fast, reliable market data. High-frequency firms execute orders in microseconds, where even a slight delay can mean missed opportunities or costly slippage.

Financial applications now operate at unprecedented scale—trading platforms push live prices to millions of users across thousands of assets, while portfolio managers track risk exposures in real time. Market data giants like Bloomberg and Reuters provide live updates to countless subscribers, and algorithmic traders require feeds with near-instant delivery. The surge in mobile trading has further heightened the demands, requiring systems to maintain reliability across inconsistent networks and unpredictable connectivity.

Beyond Kafka: Building Real-Time Financial Feeds for the Modern Web

Apache Kafka® has become a cornerstone for enterprise data distribution, functioning as a high-throughput pipeline for transactional and analytical data. At scale, however, running Kafka incurs significant costs: large enterprises often spend millions of dollars annually on Kafka infrastructure. Reducing these operational expenses has become a key priority for many organizations.

Beyond cost, financial data distribution presents unique challenges. Traffic patterns are highly volatile, requiring clusters to scale dynamically to meet fluctuating demand. Traditional Kafka’s partition-centric architecture complicates elastic scaling, as expanding capacity necessitates cumbersome partition reassignments and data migration. Additionally, Kafka alone does not natively support WebSocket or HTTP-based delivery, compelling enterprises to deploy supplementary infrastructure layers. Mobile client support further increases the complexity of data dissemination.

AutoMQ addresses these challenges through its diskless architecture, eliminating the cost and scalability limitations inherent in traditional Kafka. By decoupling storage and compute, AutoMQ facilitates seamless horizontal scaling without partition relocation overhead. When integrated with Lightstreamer, it creates a powerful, elastic real-time data distribution platform—optimized for financial use cases—while simultaneously reducing infrastructure costs and operational complexity.

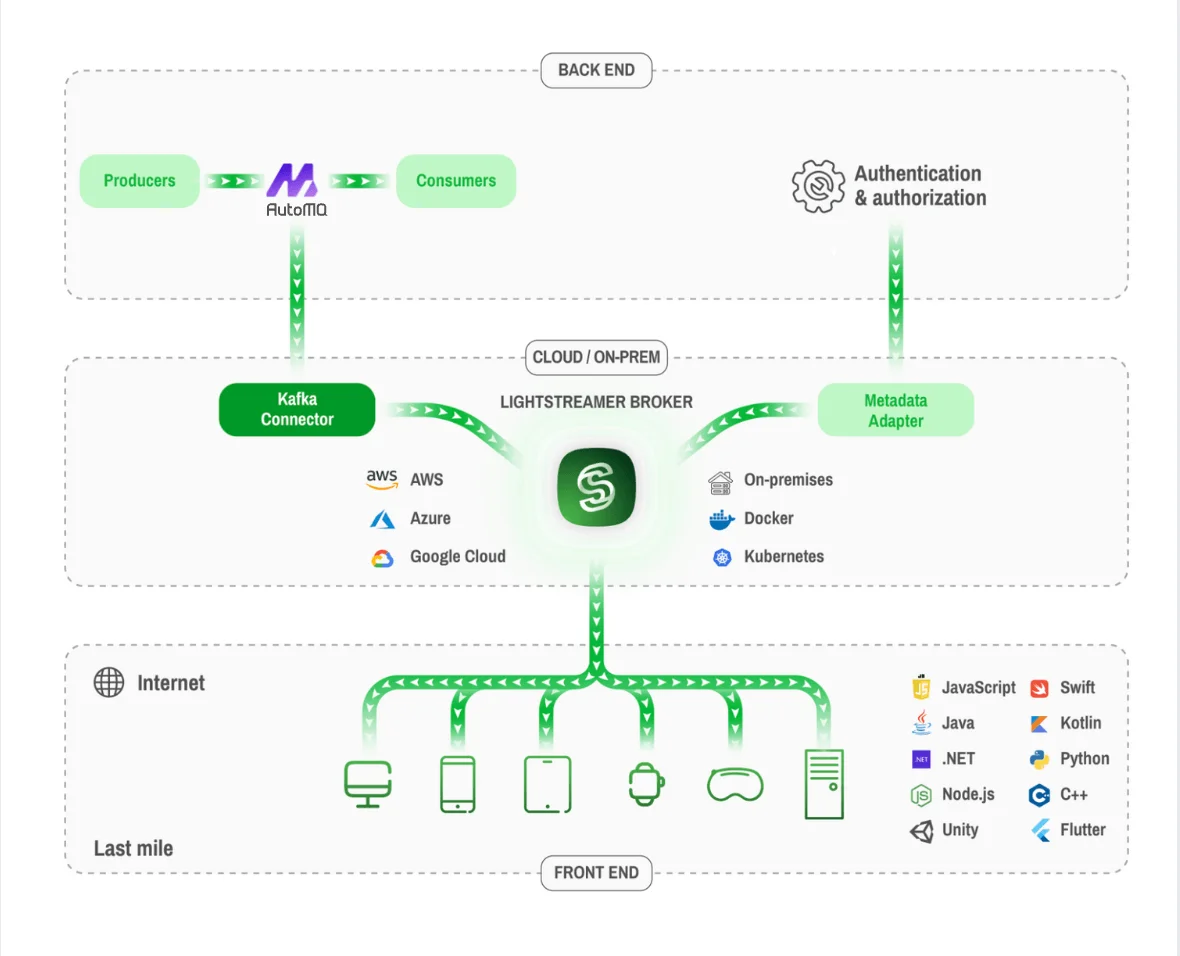

The Solution: AutoMQ + Lightstreamer Architecture

Our solution addresses these challenges by combining two complementary technologies:

AutoMQ stores data directly in S3-compatible object storage, removing the necessity for expensive local disk arrays. Its elastic scaling capabilities enable compute and storage to be scaled independently based on workload demands, leading to substantial cost reductions compared to traditional disk-based Kafka deployments. Crucially, AutoMQ maintains 100% API compatibility, ensuring existing Kafka applications function without modification.

Lightstreamer serves as a real-time streaming server that bridges the gap between Kafka and end-user applications. It efficiently handles millions of concurrent connections through its massive fanout capabilities and adaptive streaming technology, which automatically adjusts data flow based on network conditions. Supporting WebSocket, HTTP, and native mobile protocols, Lightstreamer can traverse firewalls and proxies while delivering data with low latency and high reliability to web browsers, mobile applications, and smart devices globally.
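The idea behind adjusting data flow to network conditions can be illustrated with conflation: when a client cannot keep up, only the latest price per symbol is kept for it, so stale ticks are dropped instead of queued. The sketch below is a minimal illustration of that idea only. `ConflatingBuffer` and its methods are hypothetical names, not part of Lightstreamer's API, and the symbols are made up.

```python
from collections import OrderedDict


class ConflatingBuffer:
    """Holds at most one pending update per symbol for a single client.

    Hypothetical illustration only; this is not Lightstreamer's API.
    """

    def __init__(self):
        self._pending = OrderedDict()  # symbol -> latest price not yet sent

    def offer(self, symbol, price):
        # A newer price for the same symbol overwrites the stale one,
        # so a slow client never accumulates a backlog.
        self._pending[symbol] = price

    def drain(self, max_updates):
        # Hand out up to max_updates entries, oldest-queued symbol first.
        batch = []
        while self._pending and len(batch) < max_updates:
            batch.append(self._pending.popitem(last=False))
        return batch


buf = ConflatingBuffer()
for symbol, price in [("ACME", 10.0), ("INIT", 5.0), ("ACME", 10.2), ("ACME", 10.1)]:
    buf.offer(symbol, price)

# Four ticks arrived, but only the latest price per symbol is delivered.
print(buf.drain(max_updates=10))
```

A fast client would call `drain` often and see nearly every tick, while a client on a weak mobile connection would call it less often and receive fewer, fresher updates.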

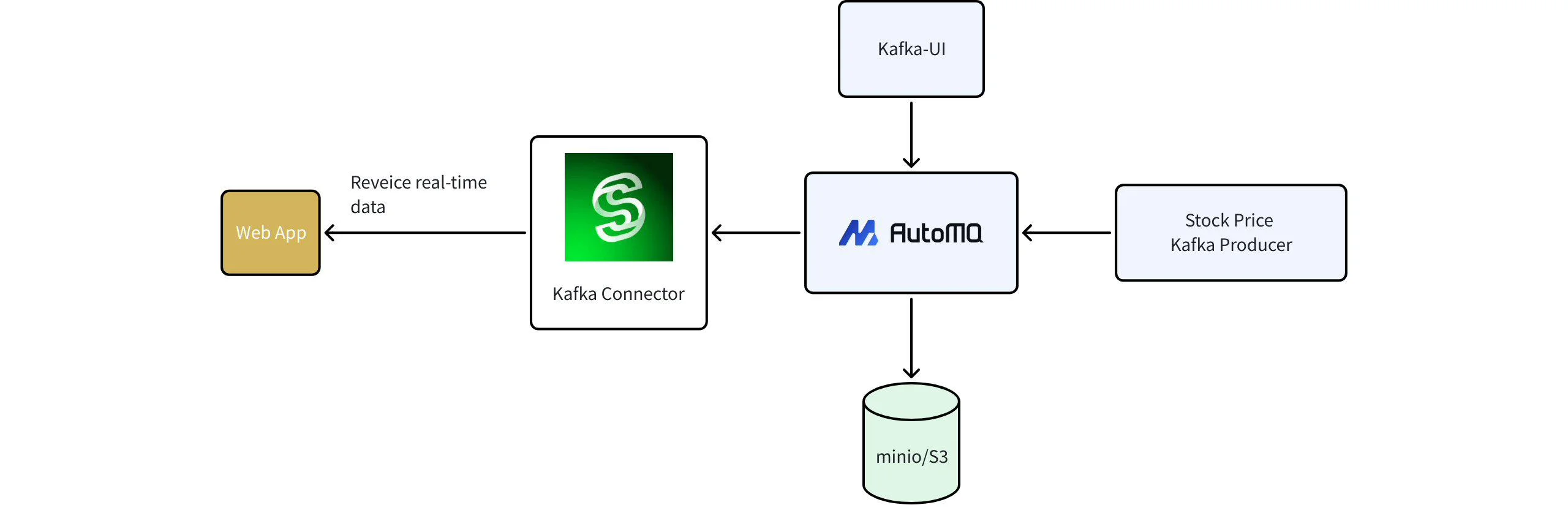

The overall architecture, illustrated in the diagram above, is a complete real-time stock trading data pipeline. Upstream, a producer continuously generates live stock trading price data and streams it into the AutoMQ cluster. Because AutoMQ is 100% compatible with Apache Kafka, data ingestion requires no protocol modifications or custom integrations. AutoMQ's diskless architecture, built on object storage, scales dynamically with fluctuating data volumes and offers virtually unlimited data retention capacity.
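To make the upstream side concrete, here is a minimal sketch of a producer that generates random-walk price ticks and hands them to a pluggable `send` callable. It is not the demo's actual producer: the topic name `stocks`, the symbols, the JSON field names, and the `send(topic, key, value)` hook are all assumptions chosen for illustration.

```python
import json
import random
import time


def make_tick(symbol, last_price, rng):
    """Return a new tick whose price moves at most 1% from last_price."""
    change = rng.uniform(-0.01, 0.01)
    price = round(last_price * (1 + change), 2)
    return {"symbol": symbol, "price": price, "ts_ms": int(time.time() * 1000)}


def produce(prices, send, rounds, rng=None):
    """Emit `rounds` ticks per symbol through the `send` callable.

    `send(topic, key, value)` is a hypothetical hook standing in for a
    Kafka client's send method; "stocks" is an assumed topic name.
    """
    rng = rng or random.Random()
    for _ in range(rounds):
        for symbol in list(prices):
            tick = make_tick(symbol, prices[symbol], rng)
            prices[symbol] = tick["price"]
            # Keying by symbol keeps each stock's ticks in one partition,
            # which preserves their order for consumers.
            send("stocks", symbol.encode(), json.dumps(tick).encode())


# Collect records in memory instead of sending them to a cluster.
sent = []
produce({"ACME": 100.0, "INIT": 50.0},
        lambda topic, key, value: sent.append((topic, key, value)),
        rounds=3, rng=random.Random(42))
print(len(sent), "records, first:", sent[0][2].decode())
```

Since AutoMQ speaks the Kafka protocol, the `send` hook could be backed by any Kafka client pointed at the AutoMQ bootstrap address, for example kafka-python's `KafkaProducer.send(topic, value=..., key=...)`.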

Downstream, the architecture utilizes the Lightstreamer Kafka Connector as an intelligent bridge, efficiently distributing real-time market data to diverse client applications via Lightstreamer's adaptive streaming platform. The demonstration highlights a responsive web application as the primary downstream consumer, although the architecture readily supports various client types, including mobile applications, IoT devices, and edge computing systems. This end-to-end solution addresses the full spectrum of challenges in financial data streaming, from high-throughput data production through reliable intermediate processing to intelligent last-mile delivery, thereby creating a robust and scalable foundation for modern trading platforms and financial applications.
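Conceptually, the bridging step consumes each record once and fans it out to every client subscribed to the matching item. The sketch below shows that routing pattern in isolation. `FanoutHub` is a hypothetical class, and the mapping from record key to item name is an assumption. The real Lightstreamer Kafka Connector is configured rather than hand-coded, so treat this as a mental model and not as its implementation.

```python
import json
from collections import defaultdict


class FanoutHub:
    """Routes each Kafka-style record to every subscriber of its item.

    Hypothetical illustration of the fanout pattern only.
    """

    def __init__(self):
        self._subscribers = defaultdict(list)  # item name -> callbacks

    def subscribe(self, item, callback):
        self._subscribers[item].append(callback)

    def on_record(self, key, value):
        # Assumption: the record key is the item name (the stock symbol)
        # and the value is a JSON object holding the item's fields.
        item = key.decode()
        fields = json.loads(value)
        callbacks = self._subscribers.get(item, [])
        for callback in callbacks:
            callback(item, fields)
        return len(callbacks)  # number of clients the record reached


hub = FanoutHub()
received = []
hub.subscribe("ACME", lambda item, f: received.append(("web", item, f["price"])))
hub.subscribe("ACME", lambda item, f: received.append(("mobile", item, f["price"])))

delivered = hub.on_record(b"ACME", b'{"symbol": "ACME", "price": 100.5}')
print(delivered, received)
```

The point of the pattern is that the Kafka side sees a single consumer regardless of how many end users are connected; the fanout cost is carried by the streaming layer.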

Launch Real-Time Stock Price Streaming

We have implemented this architecture as a real-time stock price demo, and the code is available in the Lightstreamer Kafka connector repository. You can get started quickly with Docker Compose by running a script, which automatically starts the producer, the connector, and the Lightstreamer-related components.

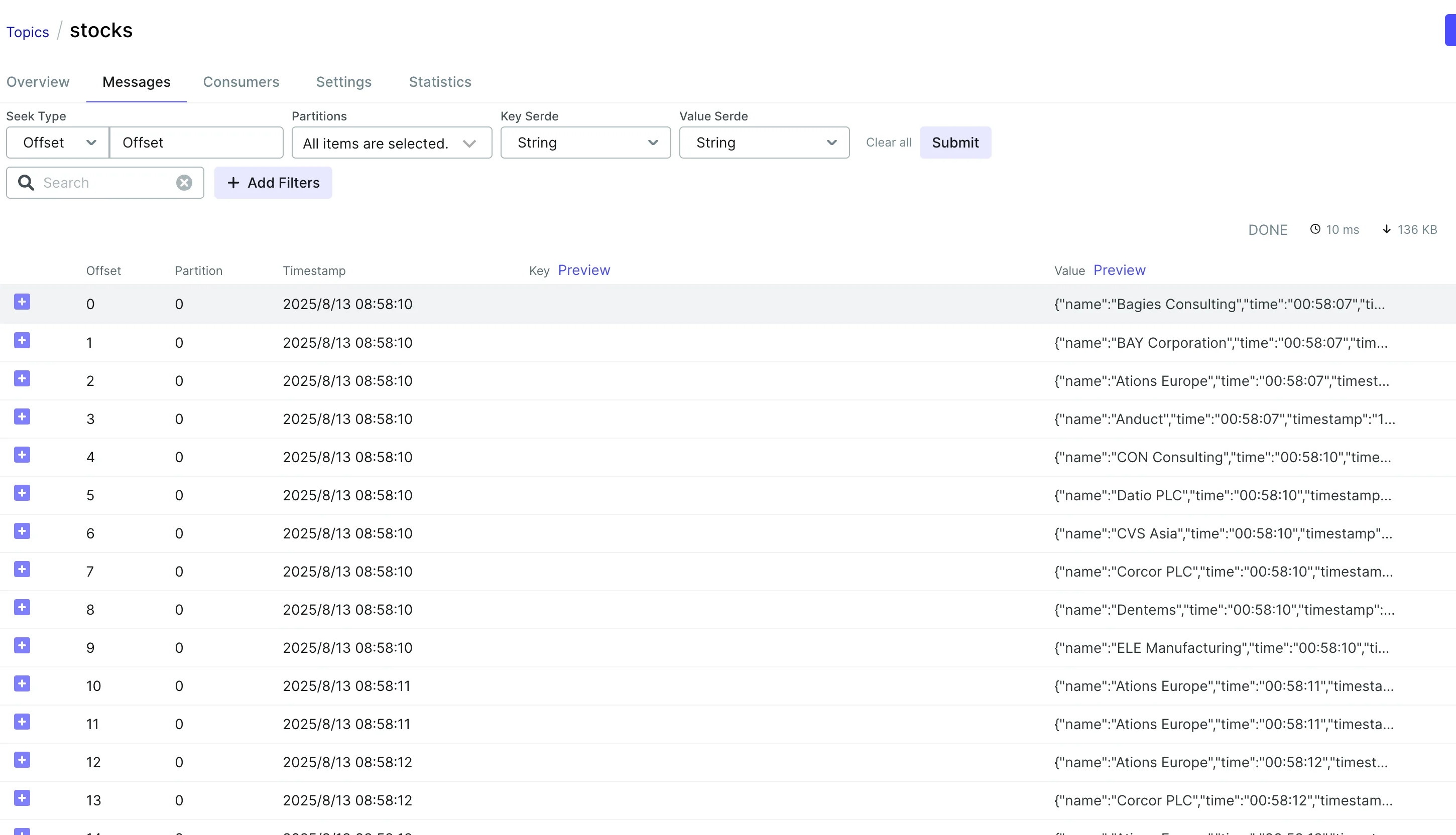

Once deployed, the system offers several interfaces for monitoring and demonstration. The Stock Price Demo is accessible at http://localhost:8080/QuickStart for viewing real-time stock price updates. Cluster monitoring and topic management can be accessed via Kafka UI at http://localhost:12000, while S3 storage management is available through the MinIO Console at http://localhost:9001 using the credentials minioadmin/minioadmin.
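If you want to confirm that the three interfaces are up before opening them in a browser, a small check like the following can help. It is a sketch that uses only the Python standard library and the URLs listed above; the function names and the two-second timeout are arbitrary choices, not part of the demo.

```python
import urllib.error
import urllib.request

# URLs as listed above for the local Docker Compose deployment.
ENDPOINTS = {
    "Stock Price Demo": "http://localhost:8080/QuickStart",
    "Kafka UI": "http://localhost:12000",
    "MinIO Console": "http://localhost:9001",
}


def is_reachable(url, timeout=2.0):
    """Return True if an HTTP server answers at url, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True   # the server responded, just not with a 2xx status
    except OSError:
        return False  # connection refused, DNS failure, or timeout


def check_all(endpoints=ENDPOINTS, probe=is_reachable):
    """Probe every endpoint and return a name -> bool mapping."""
    return {name: probe(url) for name, url in endpoints.items()}


if __name__ == "__main__":
    for name, ok in check_all().items():
        print(f"{name}: {'up' if ok else 'not reachable'}")
```

Containers can take a little while to start, so a "not reachable" result right after launching the script may simply mean the service is still initializing.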

Once the demo is running, you can watch records arrive continuously on the AutoMQ topic while the corresponding stock price updates appear in real time on the web page.

Conclusion

The integration of AutoMQ and Lightstreamer offers a powerful solution for real-time financial data streaming, addressing the key challenges of modern trading platforms and financial applications. By combining AutoMQ's cloud-native Kafka distribution with Lightstreamer's intelligent streaming capabilities, organizations can develop scalable, cost-effective systems that deliver real-time market data to millions of concurrent users.

This architectural pattern extends beyond financial services to any application that requires high-throughput data ingestion with massive fanout, which makes it a good fit for IoT platforms, gaming systems, and real-time analytics applications. The code and examples are open source in the GitHub repository, and you are welcome to explore them and try them out.