Introduction

In today's competitive landscape, the ability to act on data as it is created is no longer a luxury—it's a core business driver. We have moved beyond traditional batch ETL. The modern imperative is to capture, process, and analyze data in-flight, enabling real-time dashboards, instant fraud detection, and dynamic customer experiences. This is the domain of streaming analytics.

For engineering leaders and architects tasked with building these real-time capabilities, a fundamental strategic choice arises: do you buy an integrated commercial platform, or do you build a custom solution from best-of-breed open-source components?

This is a classic "buy vs. build" dilemma in the data world. On one side, you have Striim, a unified platform designed to accelerate development. On the other, you have the open-source stack, typically composed of Debezium, Apache Kafka, and a processing engine like Apache Flink or Kafka Streams. This blog post provides a technical, balanced comparison to help you navigate this architectural choice.

The "Buy" Approach: Understanding Striim

Striim is a unified, real-time data integration and streaming intelligence platform. Its core value proposition is consolidating the entire streaming analytics pipeline—from data ingestion to processing and delivery—into a single, cohesive product [1]. Instead of stitching together separate tools, Striim provides an integrated environment designed to reduce complexity and accelerate time-to-market.

Core Architecture and Functionality

Striim's architecture is built to handle the end-to-end lifecycle of streaming data. Its key functions are unified within the platform:

Continuous Ingestion: Striim uses low-impact, log-based Change Data Capture (CDC) to pull real-time data from enterprise sources like Oracle, SQL Server, and PostgreSQL. It also connects to logs, sensors, and message queues.

In-Memory Stream Processing: This is Striim's centerpiece. Data streams are processed in memory using a continuous query engine. Users can build sophisticated data pipelines using a declarative, SQL-like language called TQL (Tungsten Query Language). This allows for filtering, transformation, aggregation, and enrichment with reference data, all in-flight.

Real-Time Delivery: After processing, the resulting data streams can be delivered to a wide range of targets, including data warehouses, data lakes, other databases, or messaging systems.

Unified Development and Operations: The entire process is managed through a graphical UI that allows for building, deploying, and monitoring data pipelines from a single pane of glass. This includes features for schema evolution, data validation, and real-time alerts.

Essentially, Striim provides a ready-made factory for producing real-time analytical insights, managed and supported by a single vendor.
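To make the idea of a continuous query concrete, here is a minimal Python sketch of the filter, enrich, and aggregate steps described above. It is not TQL and does not use any Striim API; the function name, field names, and reference data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator


def continuous_query(
    events: Iterable[dict],
    region_by_customer: Dict[str, str],
    min_amount: float,
) -> Iterator[dict]:
    """Filter, enrich, and aggregate events one at a time, keeping state in memory."""
    totals: Dict[str, float] = defaultdict(float)  # running total per region
    for event in events:
        # Filter: drop small orders, similar to a WHERE clause.
        if event["amount"] < min_amount:
            continue
        # Enrich: look up in-memory reference data, similar to a join.
        region = region_by_customer.get(event["customer_id"], "UNKNOWN")
        # Aggregate: update the running total and emit the new result immediately.
        totals[region] += event["amount"]
        yield {"region": region, "running_total": totals[region]}


# Hypothetical reference data and input stream.
reference = {"c1": "EMEA", "c2": "APAC"}
orders = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c2", "amount": 5.0},   # filtered out
    {"customer_id": "c1", "amount": 80.0},
    {"customer_id": "c3", "amount": 50.0},  # no reference row
]
results = list(continuous_query(orders, reference, min_amount=10.0))
```

Each input event produces an updated result as soon as it arrives, rather than waiting for a batch to complete. A platform like Striim packages this pattern, along with distribution and recovery, behind its query language.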

![Striim in Data Pipeline [1]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/69099daff96a19190a59f2e6_67480fef30f9df5f84f31d36%252F685e5a88aea6958f51d8a070_4bVo.png)

The "Build" Approach: The Composable Open-Source Stack

The "build" approach involves creating a custom streaming analytics platform by integrating several powerful, independent open-source projects. This provides ultimate flexibility but requires significant engineering effort. The typical components of this stack are:

Debezium (The Capture Engine): As a specialized CDC platform, Debezium's role is to reliably capture row-level changes from source databases and publish them as a structured stream of events [2].

Apache Kafka (The Streaming Backbone): Kafka serves as the distributed, persistent message bus [3]. It ingests the raw change events from Debezium and acts as the central nervous system of the architecture, decoupling data producers from data consumers and providing a buffer for the data streams.

Apache Flink or Kafka Streams (The Processing Engine): This is where the analytics logic resides.

Apache Flink: A powerful, distributed stream processing framework designed for complex, stateful computations over unbounded data streams. Flink is known for its robust event-time processing, windowing capabilities, and consistent state management, making it ideal for sophisticated use cases like fraud detection, anomaly detection, and complex event processing [4].

Kafka Streams: A lighter-weight Java library for building streaming applications directly on the Kafka platform. It is simpler to deploy and manage than a full Flink cluster and is an excellent choice for applications that involve transformations, stateful enrichments, and aggregations directly within the Kafka ecosystem [5].
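The change events that Debezium publishes carry an operation code (`op`) along with the row state `before` and `after` the change. The sketch below assumes only that payload shape and shows how a downstream consumer might apply such events to an in-memory replica. The function name `apply_change`, the `id` key field, and the sample rows are hypothetical, and real events also carry `source` metadata and, depending on converter settings, a schema wrapper.

```python
from typing import Dict


def apply_change(table: Dict[int, dict], payload: dict, key_field: str = "id") -> None:
    """Apply one Debezium-style change payload to an in-memory replica of a table."""
    op = payload["op"]
    if op in ("c", "r", "u"):   # create, snapshot read, update
        row = payload["after"]
        table[row[key_field]] = row
    elif op == "d":             # delete: only "before" is populated
        row = payload["before"]
        table.pop(row[key_field], None)
    else:
        raise ValueError(f"unhandled op: {op!r}")


replica: Dict[int, dict] = {}
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]
for event in events:
    apply_change(replica, event)
```

After replaying the four events, the replica holds only row 1 with its updated email. In the build approach, this kind of event handling is code your team writes and maintains.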

Building with this stack means your team is responsible for integrating these components, writing the processing logic (typically in Java or Scala, although Flink also offers SQL and Python APIs), and managing the lifecycle of the entire distributed system.
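As an illustration of the processing logic a team would write, here is a simplified sketch of a tumbling event-time window count, the kind of aggregation Flink and Kafka Streams provide as built-in operators. It is plain Python rather than either engine's API, and it leaves out watermarks, late-data handling, and state eviction, which a real engine handles for you. The function name and sample events are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]], window_ms: int
) -> Dict[Tuple[int, str], int]:
    """Count events per (window_start, key), using each event's own timestamp."""
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts_ms, key in events:
        # Align the event timestamp to the start of its fixed-size window.
        window_start = ts_ms - (ts_ms % window_ms)
        counts[(window_start, key)] += 1
    return dict(counts)


# Events arrive out of order; event time still places them in the right window.
clicks = [(1_000, "u1"), (61_000, "u1"), (2_500, "u1"), (59_999, "u2")]
window_counts = tumbling_window_counts(clicks, window_ms=60_000)
```

The event with timestamp 2,500 arrives after the one with timestamp 61,000 but is still counted in the first one-minute window, because windows are assigned by event time rather than arrival order.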

![The Architecture of a Change Data Capture Pipeline Based on Debezium [6]](https://static-file-demo.automq.com/6809c9c3aaa66b13a5498262/68f5ebfcc64c84e9a44ae3cc_67480fef30f9df5f84f31d36%252F685e5a8968dea7887bfba30b_rJAM.png)

Head-to-Head Comparison: The Architect's Trade-Offs

Choosing between Striim and the open-source stack is a decision based on fundamental trade-offs between speed, flexibility, cost, and complexity.

Time to Market and Development Speed

Striim: This is where the "buy" approach shines. With an integrated platform and a familiar SQL-like language, data teams can build and deploy production-ready pipelines considerably faster than they could assemble an equivalent stack. The graphical UI for pipeline construction and pre-built components for common tasks shorten development cycles. A project that might take months to build with open source could be delivered in weeks with Striim.

Build Stack: This path is inherently slower. Development involves not just writing the core business logic in a language like Java or Scala, but also significant effort in integrating, configuring, and deploying the separate components. Engineers must handle data serialization, schema management, failure recovery, and monitoring across the entire stack, which is a substantial undertaking.
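Failure recovery is a good example of the plumbing involved. The sketch below simulates one common pattern: commit an offset only after a record has been processed, and make the sink idempotent so that a replayed record does not create a duplicate. It is a toy model in plain Python, not the Kafka consumer API, and the class names `CheckpointedConsumer` and `FlakySink` are hypothetical.

```python
from typing import Callable, Dict, List


class CheckpointedConsumer:
    """Toy consumer that advances its committed offset only after a record is handled."""

    def __init__(self, log: List[str]) -> None:
        self.log = log
        self.committed = 0  # offset of the next record to process

    def run(self, handler: Callable[[int, str], None]) -> None:
        while self.committed < len(self.log):
            offset = self.committed
            handler(offset, self.log[offset])  # may raise before the commit
            self.committed = offset + 1        # commit only after success


class FlakySink:
    """Idempotent sink keyed by offset; crashes once at a chosen offset."""

    def __init__(self, crash_at: int) -> None:
        self.rows: Dict[int, str] = {}
        self.attempts: List[int] = []
        self._crash_at = crash_at
        self._crashed = False

    def write(self, offset: int, record: str) -> None:
        self.attempts.append(offset)
        self.rows[offset] = record.upper()  # same offset overwrites, so no duplicates
        if offset == self._crash_at and not self._crashed:
            self._crashed = True
            raise RuntimeError("simulated crash after write, before commit")


consumer = CheckpointedConsumer(["a", "b", "c"])
sink = FlakySink(crash_at=1)
try:
    consumer.run(sink.write)
except RuntimeError:
    pass                      # the process "dies" here
consumer.run(sink.write)      # a restart resumes from the last committed offset
```

Offset 1 is processed twice (at-least-once delivery), but because the sink is keyed by offset the final output contains each record exactly once. An integrated platform typically handles this for you; in the build stack it is a design decision you own.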

Summary: For organizations where speed and time-to-market are the primary drivers, Striim offers a decisive advantage.

Flexibility and Customization

Striim: The platform is highly flexible for use cases that can be expressed through its continuous query language and built-in components. However, like any platform, it has boundaries. If your application requires highly specialized algorithms, custom machine learning model integration, or logic that falls outside the SQL paradigm, you may face limitations.

Build Stack: This approach offers the most flexibility. Your team has complete control over the code and can implement whatever custom logic the workload calls for. You can integrate any third-party library, build a bespoke data processing model, and tune every component for your specific workload. This is the single biggest reason organizations choose to build their own platform.

Summary: For ultimate control, customization, and the ability to implement any proprietary logic, the open-source build stack is unbeatable.

Total Cost of Ownership (TCO)

This is the most nuanced comparison and a common point of confusion.

Striim ("Buy"): This approach involves upfront software licensing or subscription costs. These costs can be significant. However, the TCO may be lower when factoring in the reduced need for a large team of highly specialized (and expensive) distributed systems engineers. Faster development cycles and a single point of vendor support also contribute to a potentially more predictable and lower overall cost.

Build Stack ("Free"): The software itself is free to download and use. However, the TCO can be deceptively high. The primary cost is human capital: hiring, training, and retaining a team of expert engineers proficient in Kafka, Flink/Streams, and distributed operations. The "cost" also includes longer development timelines, ongoing maintenance, and the internal engineering effort required to keep the platform stable, secure, and up-to-date.

Summary: TCO depends on what your organization already has in place. Striim offers more predictable costs, and its TCO can be lower for organizations that prioritize development speed over engineering overhead. The build stack is likely to be cheaper only if the organization already has a dedicated platform team and is prepared for the long-term investment in operational excellence.
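One way to reason about this trade-off is a simple cost model. The sketch below is a rough illustration, not a pricing tool: every figure in it is a placeholder rather than real Striim pricing or salary data, and it ignores factors such as the cost of a longer time to market. The `CostModel` class and `break_even_license` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    annual_license: float         # subscription or license fees per year
    engineers: float              # full-time engineers dedicated to the platform
    cost_per_engineer: float      # fully loaded annual cost per engineer
    annual_infrastructure: float  # compute, storage, and network per year

    def yearly(self) -> float:
        return (self.annual_license
                + self.engineers * self.cost_per_engineer
                + self.annual_infrastructure)

    def tco(self, years: int) -> float:
        return self.yearly() * years


def break_even_license(buy: CostModel, build: CostModel) -> float:
    """Annual license fee at which buying and building cost the same per year."""
    buy_without_license = buy.yearly() - buy.annual_license
    return build.yearly() - buy_without_license


# Placeholder figures for illustration only; substitute your own estimates.
buy = CostModel(annual_license=300_000, engineers=1,
                cost_per_engineer=200_000, annual_infrastructure=100_000)
build = CostModel(annual_license=0, engineers=3,
                  cost_per_engineer=200_000, annual_infrastructure=100_000)

buy_tco = buy.tco(years=3)
build_tco = build.tco(years=3)
license_break_even = break_even_license(buy, build)
```

With these placeholder inputs, the outcome hinges on how many additional engineers the build path requires. Changing the headcount or license assumptions can flip the result, which is why the estimate should be run with your own numbers.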

Operational Complexity and Maintenance

Striim: Operations are significantly simplified. You have a single platform to deploy, monitor, patch, and upgrade. If an issue arises, you have a single vendor to contact for support. This reduces the operational burden on your team.

Build Stack: This is where the "build" path carries the most risk. You are responsible for managing a complex, multi-component distributed system. Upgrading one component (e.g., Flink) can lead to compatibility issues with others (e.g., Kafka or connector libraries). You must build your own robust monitoring, alerting, and disaster recovery procedures. This requires a dedicated platform or Site Reliability Engineering (SRE) team to manage effectively.
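As one small example of the monitoring you would need to build, consumer lag is a common health signal for Kafka-based pipelines: the gap between the latest offset in each partition and the offset the consumer group has committed. The sketch below computes it from two offset snapshots. The numbers and the alert threshold are hypothetical, and fetching the offsets from a real cluster is left out.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Lag per partition = log end offset minus the group's committed offset."""
    # Assumption: a partition with no committed offset is treated as starting at 0.
    return {p: end - committed.get(p, 0) for p, end in end_offsets.items()}


def partitions_over_threshold(lag: Dict[int, int], threshold: int) -> List[int]:
    """Return the partitions whose lag exceeds the alert threshold."""
    return sorted(p for p, n in lag.items() if n > threshold)


# Hypothetical snapshot of three partitions.
end_offsets = {0: 1_500, 1: 980, 2: 2_200}
committed = {0: 1_490, 1: 980, 2: 1_100}

lag = consumer_lag(end_offsets, committed)
alerts = partitions_over_threshold(lag, threshold=500)
```

Here partition 2 is 1,100 records behind and would trigger an alert. In practice you would also need to collect these offsets continuously, store the history, and route alerts, all of which is part of the operational work described above.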

Summary: For operational simplicity and reduced maintenance overhead, Striim is the clear winner.

How to Choose: A Decision Framework for Your Team

Choose the "Buy" Approach (Striim) when:

Speed is paramount. The integrated platform allows you to build and deploy real-time pipelines in a fraction of the time it would take to build a custom stack, making it ideal when time-to-market is the primary business driver.

Your use case is analytical. The platform excels at powering real-time dashboards and in-flight transformations that can be expressed with SQL, making it perfect for operational intelligence and modern data warehousing.

You want to empower your existing team. It allows data analysts and engineers to leverage their existing SQL skills, avoiding the high cost and lengthy process of hiring specialized distributed systems engineers.

You need predictable support and costs. A commercial license provides a formal SLA and a single point of contact for troubleshooting, which reduces operational risk and simplifies financial planning.

Choose the "Build" Approach (Debezium + Flink/Streams) when:

You require deeply custom logic. This is the best path when your processing needs, such as implementing a proprietary machine learning algorithm or complex simulation, go beyond the capabilities of a SQL-based platform.

The platform is a core strategic asset. Building gives you complete control and avoids vendor lock-in, which is critical when your real-time data capabilities are a key competitive differentiator for your business.

You have a dedicated team of expert engineers. This approach is only viable if you have in-house talent with deep expertise in the internals of Kafka, Flink/Streams, and JVM performance tuning.

Your organization is committed to long-term ownership. Building a platform means treating it as an internal product, with dedicated resources for maintenance, upgrades, on-call rotations, and operational excellence.

In short, choose to buy for speed and operational simplicity; choose to build for ultimate flexibility and strategic control.

Conclusion

The "Striim vs. Open Source" decision is not about technology in isolation; it is about strategy. It is a choice between investing in a product versus investing in a team.

Striim offers a powerful, integrated product that provides a fast lane to streaming analytics. It trades some measure of customizability for a massive gain in speed, simplicity, and operational stability.

The open-source stack of Debezium, Kafka, and Flink/Streams offers a set of powerful, unbundled components. It provides a toolkit for building a completely bespoke platform with far greater flexibility, but it demands a significant, long-term investment in expert engineering and operational maturity.

Ultimately, the best path is the one that aligns with your organization’s DNA. A realistic evaluation of your team’s skills, your project's timeline, and your company's strategic appetite for building versus buying will lead you to the right decision.

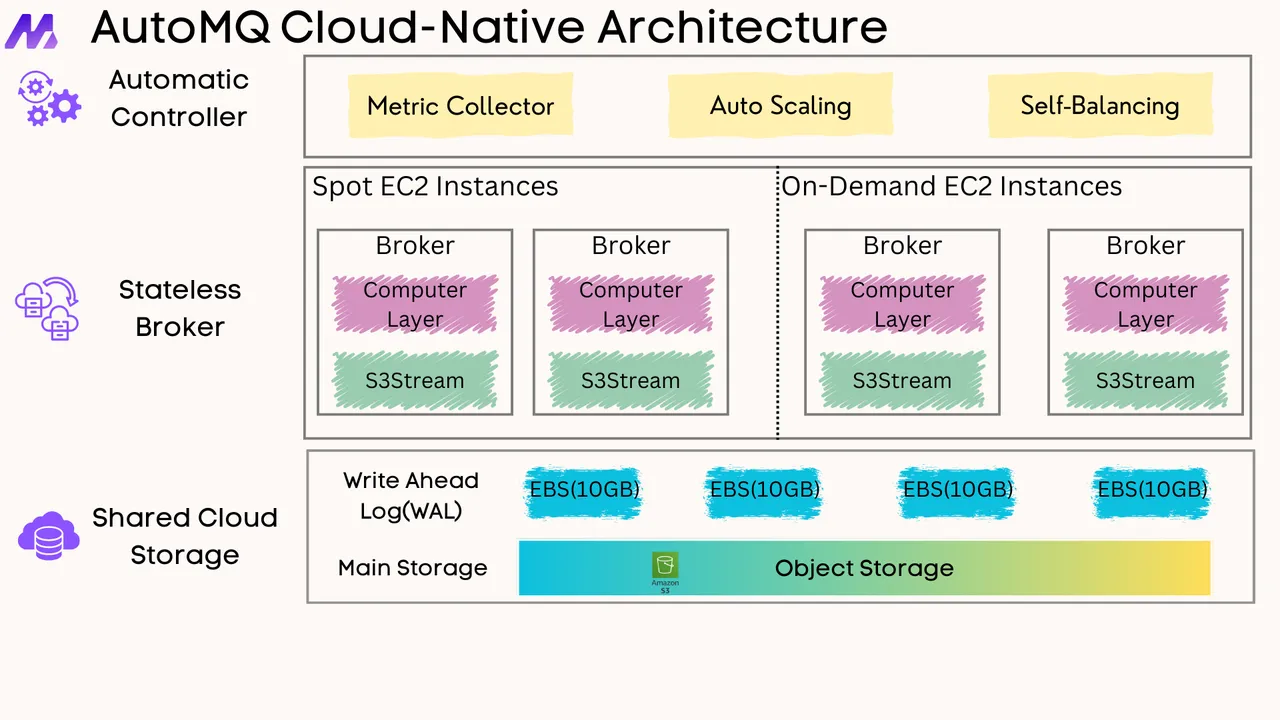

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging
